Ivor W. Tsang

Dataset Color Quantization: A Training-Oriented Framework for Dataset-Level Compression

Feb 24, 2026

Abstract: Large-scale image datasets are fundamental to deep learning, but their high storage demands pose challenges for deployment in resource-constrained environments. While existing approaches reduce dataset size by discarding samples, they often ignore the significant redundancy within each image -- particularly in the color space. To address this, we propose Dataset Color Quantization (DCQ), a unified framework that compresses visual datasets by reducing color-space redundancy while preserving information crucial for model training. DCQ achieves this by enforcing consistent palette representations across similar images, selectively retaining semantically important colors guided by model perception, and maintaining structural details necessary for effective feature learning. Extensive experiments across CIFAR-10, CIFAR-100, Tiny-ImageNet, and ImageNet-1K show that DCQ significantly improves training performance under aggressive compression, offering a scalable and robust solution for dataset-level storage reduction. Code is available at https://github.com/he-y/Dataset-Color-Quantization.
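To make the idea of a consistent palette across similar images concrete, here is a minimal sketch, not DCQ itself: plain k-means fits one palette on the pooled pixels of a group of images, and every image in the group is then stored as indices into that palette. The function names (`fit_shared_palette`, `quantize`) and the deterministic initialisation are assumptions for illustration; the model-guided color selection and structure preservation described in the abstract are not shown.

```python
from typing import List, Tuple

Color = Tuple[int, int, int]
Image = List[Color]  # an image flattened to a list of RGB pixels


def sq_dist(a, b) -> float:
    """Squared Euclidean distance between two colors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(color, palette) -> int:
    """Index of the palette entry closest to `color`."""
    return min(range(len(palette)), key=lambda i: sq_dist(color, palette[i]))


def fit_shared_palette(images: List[Image], k: int, iters: int = 10) -> List[Color]:
    """Plain k-means over the pooled pixels of a group of images (hypothetical helper)."""
    pixels = [p for img in images for p in img]
    distinct = sorted(set(pixels))
    k = min(k, len(distinct))
    # Deterministic initialisation: evenly spaced picks from the sorted distinct colors.
    step = len(distinct) / k
    centers = [tuple(float(c) for c in distinct[int(i * step)]) for i in range(k)]
    for _ in range(iters):
        buckets = [[] for _ in range(k)]
        for p in pixels:
            buckets[nearest(p, centers)].append(p)
        # Move each center to the mean of its bucket; keep it if the bucket is empty.
        centers = [
            tuple(sum(ch) / len(b) for ch in zip(*b)) if b else centers[i]
            for i, b in enumerate(buckets)
        ]
    return [tuple(round(c) for c in center) for center in centers]


def quantize(image: Image, palette: List[Color]) -> List[int]:
    """Replace every pixel by the index of its nearest palette color."""
    return [nearest(p, palette) for p in image]


# Two tiny "similar" images that share one 2-color palette.
img_a = [(250, 0, 0), (255, 5, 0), (0, 0, 250)]
img_b = [(245, 0, 5), (0, 5, 255), (5, 0, 245)]
palette = fit_shared_palette([img_a, img_b], k=2)
codes_a = quantize(img_a, palette)
codes_b = quantize(img_b, palette)
```

Storing one palette per group plus per-pixel indices is what yields the storage saving in this sketch; how groups are formed and which colors are kept are exactly where the paper's method would differ.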

Olaf-World: Orienting Latent Actions for Video World Modeling

Feb 10, 2026

Abstract: Scaling action-controllable world models is limited by the scarcity of action labels. While latent action learning promises to extract control interfaces from unlabeled video, learned latents often fail to transfer across contexts: they entangle scene-specific cues and lack a shared coordinate system. This occurs because standard objectives operate only within each clip, providing no mechanism to align action semantics across contexts. Our key insight is that although actions are unobserved, their semantic effects are observable and can serve as a shared reference. We introduce Seq$Δ$-REPA, a sequence-level control-effect alignment objective that anchors integrated latent action to temporal feature differences from a frozen, self-supervised video encoder. Building on this, we present Olaf-World, a pipeline that pretrains action-conditioned video world models from large-scale passive video. Extensive experiments demonstrate that our method learns a more structured latent action space, leading to stronger zero-shot action transfer and more data-efficient adaptation to new control interfaces than state-of-the-art baselines.

Collaborative Group-Aware Hashing for Fast Recommender Systems

Dec 23, 2025

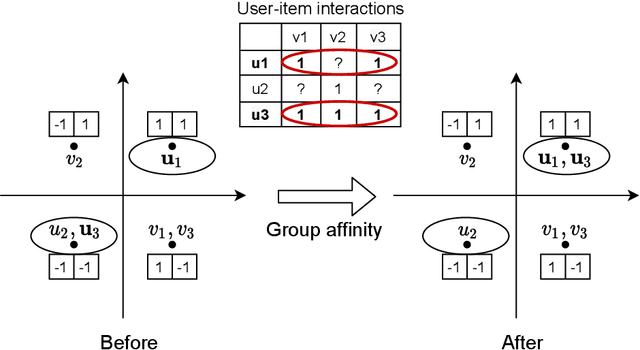

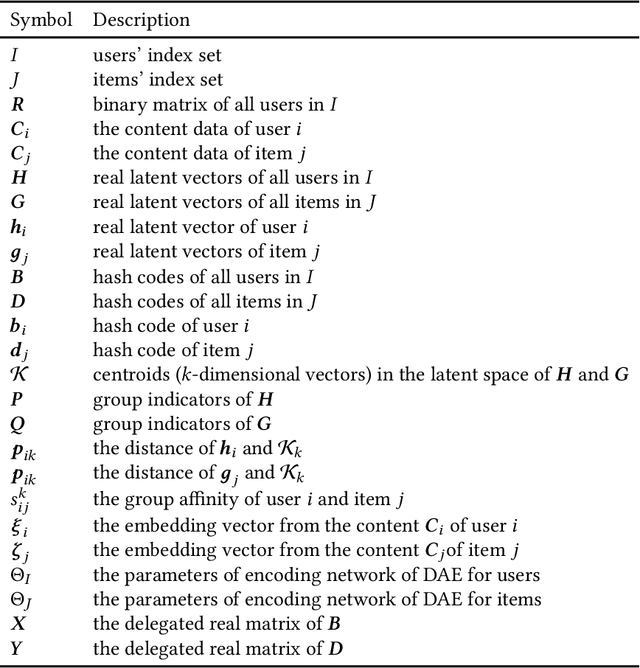

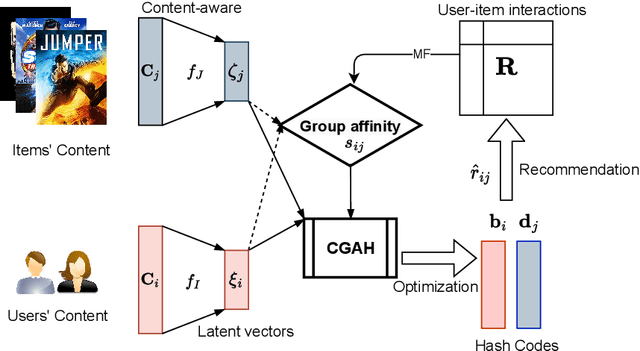

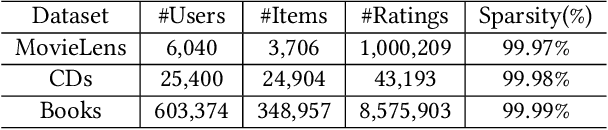

Abstract: Fast online recommendation is critical for applications with large-scale databases; meanwhile, it is challenging to provide accurate recommendations in sparse scenarios. Hashing techniques speed up online recommendation by computing Hamming distances with bit operations. However, existing hashing-based recommenders suffer from low accuracy, especially in sparse settings, because each bit has limited representation capability and the inherent relations among users and items are neglected. To this end, this paper proposes a Collaborative Group-Aware Hashing (CGAH) method for both collaborative filtering (namely CGAH-CF) and content-aware recommendations (namely CGAH) that integrates inherent group information to alleviate the sparsity issue. First, we extract inherent group affinities of users and items by classifying their latent vectors into different groups. Then, the preference is formulated as the inner product of the group affinity and the similarity of hash codes. By learning hash codes with the inherent group information, CGAH obtains more effective hash codes than other discrete methods on sparse interaction data. Extensive experiments on three public datasets show the superior performance of our proposed CGAH and CGAH-CF over the state-of-the-art discrete collaborative filtering methods and discrete content-aware recommendations under different sparse settings.
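The bit-operation scoring mentioned in this abstract can be sketched as follows. The Hamming part is standard: XOR marks the differing bits and a popcount counts them. How the group affinity is combined with the hash similarity is only stated loosely in the abstract, so the `preference` function below is an assumption for illustration (a product of a group-membership dot product and the normalised code similarity), not the paper's exact formulation.

```python
from typing import Sequence


def hamming_distance(code_a: int, code_b: int) -> int:
    """Number of differing bits between two non-negative integer-packed hash codes."""
    return bin(code_a ^ code_b).count("1")


def hash_similarity(code_a: int, code_b: int, n_bits: int) -> float:
    """Similarity in [-1, 1]; equals the inner product of the +1/-1 codes divided by n_bits."""
    return 1.0 - 2.0 * hamming_distance(code_a, code_b) / n_bits


def group_affinity(user_groups: Sequence[float], item_groups: Sequence[float]) -> float:
    """Dot product of (soft) group-membership vectors. Hypothetical definition."""
    return sum(u * v for u, v in zip(user_groups, item_groups))


def preference(
    user_groups: Sequence[float],
    item_groups: Sequence[float],
    user_code: int,
    item_code: int,
    n_bits: int,
) -> float:
    # ASSUMPTION: the group affinity simply scales the hash-code similarity.
    return group_affinity(user_groups, item_groups) * hash_similarity(
        user_code, item_code, n_bits
    )


score = preference([0.5, 0.5], [1.0, 0.0], 0b1111, 0b1111, n_bits=4)
```

Packing codes into integers keeps the online step to one XOR and one bit count per user-item pair, which is the source of the speed-up the abstract refers to.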

Uncover and Unlearn Nuisances: Agnostic Fully Test-Time Adaptation

Nov 16, 2025

Abstract: Fully Test-Time Adaptation (FTTA) addresses domain shifts without access to the source data or the training protocols of the pre-trained models. Traditional strategies that align source and target feature distributions are infeasible in FTTA due to the absence of training data and unpredictable target domains. In this work, we exploit a dual perspective on FTTA, and propose Agnostic FTTA (AFTTA) as a novel formulation that uses off-the-shelf domain transformations at test time to enable direct generalization to unforeseeable target data. To address this, we develop an uncover-and-unlearn approach. First, we uncover potential unwanted shifts between source and target domains by simulating them through predefined mappings and consider them as nuisances. Then, during test-time prediction, the model is forced to unlearn these nuisances by regularizing the consequent shifts in latent representations and label predictions. Specifically, a mutual information-based criterion is devised and applied to guide nuisance unlearning in the feature space and encourage confident and consistent prediction in label space. Our proposed approach explicitly addresses agnostic domain shifts, enabling superior model generalization under FTTA constraints. Extensive experiments on various tasks, involving corruption and style shifts, demonstrate that our method consistently outperforms existing approaches.

* 26 pages, 4 figures

FOCUS: Frequency-Optimized Conditioning of DiffUSion Models for mitigating catastrophic forgetting during Test-Time Adaptation

Aug 20, 2025

Abstract: Test-time adaptation enables models to adapt to evolving domains. However, balancing the tradeoff between preserving knowledge and adapting to domain shifts remains challenging for model adaptation methods, since adapting to domain shifts can induce forgetting of task-relevant knowledge. To address this problem, we propose FOCUS, a novel frequency-based conditioning approach within a diffusion-driven input-adaptation framework. Utilising learned, spatially adaptive frequency priors, our approach conditions the reverse steps during diffusion-driven denoising to preserve task-relevant semantic information for dense prediction. FOCUS leverages a trained, lightweight, Y-shaped Frequency Prediction Network (Y-FPN) that disentangles high and low frequency information from noisy images. This minimizes the computational costs involved in implementing our approach in a diffusion-driven framework. We train Y-FPN with FrequencyMix, a novel data augmentation method that perturbs the images across diverse frequency bands, which improves the robustness of our approach to diverse corruptions. We demonstrate the effectiveness of FOCUS for semantic segmentation and monocular depth estimation across 15 corruption types and three datasets, achieving state-of-the-art averaged performance. In addition to improving standalone performance, FOCUS complements existing model adaptation methods since we can derive pseudo labels from FOCUS-denoised images for additional supervision. Even under limited, intermittent supervision with the pseudo labels derived from the FOCUS denoised images, we show that FOCUS mitigates catastrophic forgetting for recent model adaptation methods.

Decentralized Optimization on Compact Submanifolds by Quantized Riemannian Gradient Tracking

Jun 09, 2025

Abstract: This paper considers the problem of decentralized optimization on compact submanifolds, where a finite sum of smooth (possibly non-convex) local functions is minimized by $n$ agents forming an undirected and connected graph. However, the efficiency of distributed optimization is often hindered by communication bottlenecks. To mitigate this, we propose the Quantized Riemannian Gradient Tracking (Q-RGT) algorithm, where agents update their local variables using quantized gradients. The introduction of quantization noise allows our algorithm to bypass the constraints of the accurate Riemannian projection operator (such as retraction), further improving iterative efficiency. To the best of our knowledge, this is the first algorithm to achieve an $\mathcal{O}(1/K)$ convergence rate in the presence of quantization, matching the convergence rate of methods without quantization. Additionally, we explicitly derive lower bounds on decentralized consensus associated with a function of quantization levels. Numerical experiments demonstrate that Q-RGT performs comparably to non-quantized methods while reducing communication bottlenecks and computational overhead.
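The abstract does not specify which quantizer Q-RGT uses, so the following is only a generic sketch of what communicating a quantized gradient can look like: an unbiased stochastic uniform quantizer that rounds each entry to a neighbouring multiple of a step size. The function name, the `step` parameter, and the choice of stochastic rounding are all assumptions for illustration; the Riemannian gradient-tracking update itself is not shown.

```python
import math
import random
from typing import List, Sequence


def stochastic_quantize(vec: Sequence[float], step: float, rng: random.Random) -> List[float]:
    """Round each entry to a neighbouring multiple of `step`.

    The upper neighbour is chosen with probability equal to the fractional
    position of the entry between the two grid points, so the quantized value
    equals the input in expectation (hypothetical quantizer, not the paper's).
    """
    out = []
    for x in vec:
        low = math.floor(x / step)   # index of the grid point at or below x
        frac = x / step - low        # position in [0, 1) between grid points
        out.append((low + (1 if rng.random() < frac else 0)) * step)
    return out


rng = random.Random(0)
grad = [0.1, -0.37, 0.5]
q_grad = stochastic_quantize(grad, step=0.25, rng=rng)
```

Each agent would then send only the integer grid indices rather than full-precision floats; a coarser `step` means fewer bits per entry at the cost of more quantization noise.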

Branches, Assemble! Multi-Branch Cooperation Network for Large-Scale Click-Through Rate Prediction at Taobao

Nov 20, 2024

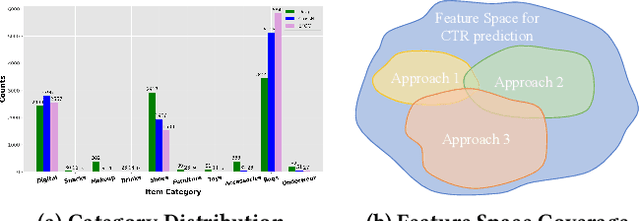

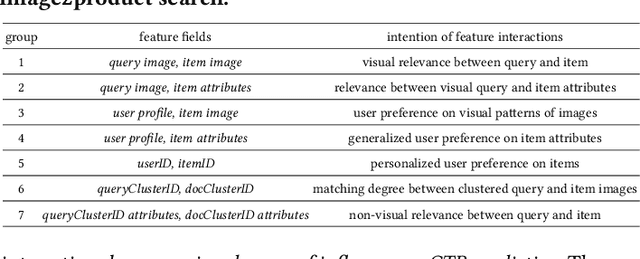

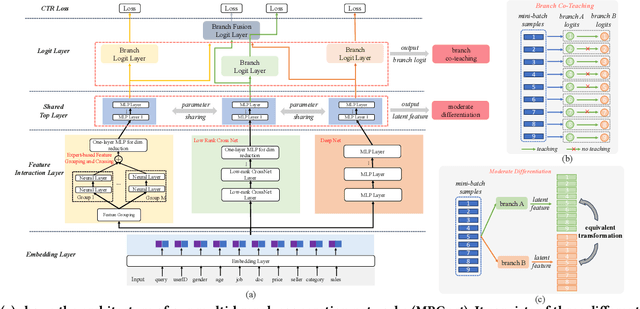

Abstract: Existing click-through rate (CTR) prediction works have studied the role of feature interaction through a variety of techniques. Each interaction technique exhibits its own strength, and solely using one type could constrain the model's capability to capture the complex feature relationships, especially for industrial large-scale data with enormous numbers of users and items. Recent research shows that effective CTR models often combine an MLP network with a dedicated feature interaction network in a parallel two-branch structure. However, the interplay and cooperative dynamics between different streams or branches remain under-researched. In this work, we introduce a novel Multi-Branch Cooperation Network (MBCnet) which enables multiple branch networks to collaborate with each other for better complex feature interaction modeling. Specifically, MBCnet consists of three branches: the Expert-based Feature Grouping and Crossing (EFGC) branch, which promotes the model's memorization ability of specific feature fields, and the low-rank Cross Net branch and Deep branch, which enhance both explicit and implicit feature crossing for improved generalization. Among branches, a novel cooperation scheme is proposed based on two principles: branch co-teaching and moderate differentiation. Branch co-teaching encourages well-learned branches to support poorly-learned ones on specific training samples. Moderate differentiation encourages branches to maintain a reasonable level of difference in their feature representations. The cooperation strategy improves learning through mutual knowledge sharing via co-teaching and boosts the discovery of diverse feature interactions across branches. Extensive experiments on large-scale industrial datasets and an online A/B test demonstrate MBCnet's superior performance, delivering a 0.09 point increase in CTR, 1.49% growth in deals, and 1.62% rise in GMV. Core code will be released soon.

Imitation from Diverse Behaviors: Wasserstein Quality Diversity Imitation Learning with Single-Step Archive Exploration

Nov 11, 2024

Abstract: Learning diverse and high-performance behaviors from a limited set of demonstrations is a grand challenge. Traditional imitation learning methods usually fail in this task because most of them are designed to learn one specific behavior even with multiple demonstrations. Therefore, novel techniques for quality diversity imitation learning are needed to solve the above challenge. This work introduces Wasserstein Quality Diversity Imitation Learning (WQDIL), which 1) improves the stability of imitation learning in the quality diversity setting with latent adversarial training based on a Wasserstein Auto-Encoder (WAE), and 2) mitigates a behavior-overfitting issue using a measure-conditioned reward function with a single-step archive exploration bonus. Empirically, our method significantly outperforms state-of-the-art IL methods, achieving near-expert or beyond-expert QD performance on the challenging continuous control tasks derived from MuJoCo environments.

Alpha and Prejudice: Improving $α$-sized Worst-case Fairness via Intrinsic Reweighting

Nov 05, 2024

Abstract: Worst-case fairness with off-the-shelf demographics achieves group parity by maximizing the model utility of the worst-off group. Nevertheless, demographic information is often unavailable in practical scenarios, which impedes the use of such a direct max-min formulation. Recent advances have reframed this learning problem by introducing the lower bound of minimal partition ratio, denoted as $\alpha$, as side information, referred to as "$\alpha$-sized worst-case fairness" in this paper. We first justify the practical significance of this setting by presenting noteworthy evidence from the data privacy perspective, which has been overlooked by existing research. Without imposing specific requirements on loss functions, we propose reweighting the training samples based on their intrinsic importance to fairness. Given the global nature of the worst-case formulation, we further develop a stochastic learning scheme to simplify the training process without compromising model performance. Additionally, we address the issue of outliers and provide a robust variant to handle potential outliers during model training. Our theoretical analysis and experimental observations reveal the connections between the proposed approaches and existing "fairness-through-reweighting" studies, with extensive experimental results on fairness benchmarks demonstrating the superiority of our methods.
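One way to read the role of $\alpha$ when group labels are unknown: any group that covers at least an $\alpha$ fraction of $n$ samples has a mean loss no larger than the mean of the $\lceil \alpha n \rceil$ largest per-sample losses, so that top-fraction average is a label-free upper bound on the worst group's risk. The sketch below computes this bound. It is a generic illustration of the setting, not the intrinsic reweighting scheme the paper proposes, and the function name is hypothetical.

```python
import math
from typing import Sequence


def worst_alpha_risk(losses: Sequence[float], alpha: float) -> float:
    """Mean of the ceil(alpha * n) largest losses.

    Upper-bounds the mean loss of every subset that contains at least an
    `alpha` fraction of the samples (hypothetical helper for illustration).
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must lie in (0, 1]")
    k = math.ceil(alpha * len(losses))
    worst = sorted(losses, reverse=True)[:k]
    return sum(worst) / k


per_sample_losses = [0.2, 1.0, 0.4, 0.8]
bound_half = worst_alpha_risk(per_sample_losses, alpha=0.5)  # averages the two largest losses
bound_all = worst_alpha_risk(per_sample_losses, alpha=1.0)   # plain average loss
```

Smaller values of `alpha` concentrate the objective on fewer, harder samples, which is also why outliers become a concern in this setting, as the abstract notes.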

Towards Harmless Rawlsian Fairness Regardless of Demographic Prior

Nov 04, 2024

Abstract: Due to privacy and security concerns, recent advancements in group fairness advocate for model training regardless of demographic information. However, most methods still require prior knowledge of demographics. In this study, we explore the potential for achieving fairness without compromising model utility when no prior demographics are provided for the training set, namely harmless Rawlsian fairness. We ascertain that such a fairness requirement with no prior demographic information essentially encourages training losses to exhibit a Dirac delta distribution. To this end, we propose a simple but effective method named VFair to minimize the variance of training losses inside the optimal set of empirical losses. This problem is then optimized by a tailored dynamic update approach that operates in both loss and gradient dimensions, directing the model towards relatively fairer solutions while preserving its utility intact. Our experimental findings indicate that regression tasks, which are relatively unexplored in the literature, can achieve significant fairness improvement through VFair regardless of any prior, whereas classification tasks usually do not because of their quantized utility measurements. The implementation of our method is publicly available at https://github.com/wxqpxw/VFair.
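The objective described here, minimizing the variance of per-sample losses among solutions whose average loss is already optimal, can be illustrated with a toy selection rule. This sketch only compares fixed candidate loss vectors; it does not implement VFair's dynamic update in the loss and gradient dimensions, and the names `loss_stats`, `pick_harmless_fair`, and the tolerance `tol` are assumptions for illustration.

```python
from statistics import fmean, pvariance
from typing import Dict, Sequence, Tuple


def loss_stats(losses: Sequence[float]) -> Tuple[float, float]:
    """Mean (utility proxy) and population variance (fairness proxy) of per-sample losses."""
    return fmean(losses), pvariance(losses)


def pick_harmless_fair(candidates: Dict[str, Sequence[float]], tol: float = 1e-3) -> str:
    """Among candidates whose mean loss is within `tol` of the best, pick the lowest variance."""
    stats = {name: loss_stats(losses) for name, losses in candidates.items()}
    best_mean = min(mean for mean, _ in stats.values())
    near_optimal = [name for name, (mean, _) in stats.items() if mean <= best_mean + tol]
    return min(near_optimal, key=lambda name: stats[name][1])


candidates = {
    "uneven": [0.1, 0.1, 0.9, 0.9],    # same mean as "even", but some samples fare badly
    "even": [0.5, 0.5, 0.5, 0.5],      # all samples share the same loss
    "worse": [0.7, 0.7, 0.7, 0.7],     # zero variance, but utility is sacrificed
}
chosen = pick_harmless_fair(candidates)
```

The "worse" candidate is excluded despite its zero variance because its mean loss is not near-optimal, which mirrors the "harmless" requirement that fairness must not cost utility.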
