Feng He

Unleashing Foundation Vision Models: Adaptive Transfer for Diverse Data-Limited Scientific Domains

Dec 27, 2025

Abstract:In the big data era, the computer vision field benefits from large-scale datasets such as LAION-2B, LAION-400M, ImageNet-21K, and Kinetics, on which popular models like the ViT and ConvNeXt series have been pre-trained, acquiring substantial knowledge. However, numerous downstream tasks in specialized and data-limited scientific domains continue to pose significant challenges. In this paper, we propose a novel Cluster Attention Adapter (CLAdapter), which refines and adapts the rich representations learned from large-scale data to various data-limited downstream tasks. Specifically, CLAdapter introduces attention mechanisms and cluster centers to personalize the enhancement of transformed features through distribution correlation and transformation matrices. This enables models fine-tuned with CLAdapter to learn distinct representations tailored to different feature sets, facilitating effective adaptation from rich pre-trained features to various downstream scenarios. In addition, CLAdapter's unified interface design allows for seamless integration with multiple model architectures, including CNNs and Transformers, in both 2D and 3D contexts. Through extensive experiments on 10 datasets spanning domains such as generic, multimedia, biological, medical, industrial, agricultural, environmental, geographical, materials science, out-of-distribution (OOD), and 3D analysis, CLAdapter achieves state-of-the-art performance across diverse data-limited scientific domains, demonstrating its effectiveness in unleashing the potential of foundation vision models via adaptive transfer. Code is available at https://github.com/qklee-lz/CLAdapter.

From Pixels to Views: Learning Angular-Aware and Physics-Consistent Representations for Light Field Microscopy

Oct 26, 2025

Abstract:Light field microscopy (LFM) has become an emerging tool in neuroscience for large-scale neural imaging in vivo, notable for its single-exposure volumetric imaging, broad field of view, and high temporal resolution. However, learning-based 3D reconstruction in XLFM remains underdeveloped due to two core challenges: the absence of standardized datasets and the lack of methods that can efficiently model its angular-spatial structure while remaining physically grounded. We address these challenges by introducing three key contributions. First, we construct the XLFM-Zebrafish benchmark, a large-scale dataset and evaluation suite for XLFM reconstruction. Second, we propose Masked View Modeling for Light Fields (MVN-LF), a self-supervised task that learns angular priors by predicting occluded views, improving data efficiency. Third, we formulate the Optical Rendering Consistency Loss (ORC Loss), a differentiable rendering constraint that enforces alignment between predicted volumes and their PSF-based forward projections. On the XLFM-Zebrafish benchmark, our method improves PSNR by 7.7% over state-of-the-art baselines.

ProtoReasoning: Prototypes as the Foundation for Generalizable Reasoning in LLMs

Jun 18, 2025

Abstract:Recent advances in Large Reasoning Models (LRMs) trained with Long Chain-of-Thought (Long CoT) reasoning have demonstrated remarkable cross-domain generalization capabilities. However, the underlying mechanisms supporting such transfer remain poorly understood. We hypothesize that cross-domain generalization arises from shared abstract reasoning prototypes -- fundamental reasoning patterns that capture the essence of problems across domains. These prototypes minimize the nuances of the representation, revealing that seemingly diverse tasks are grounded in shared reasoning structures. Based on this hypothesis, we propose ProtoReasoning, a framework that enhances the reasoning ability of LLMs by leveraging scalable and verifiable prototypical representations (Prolog for logical reasoning, PDDL for planning). ProtoReasoning features: (1) an automated prototype construction pipeline that transforms problems into corresponding prototype representations; (2) a comprehensive verification system providing reliable feedback through Prolog/PDDL interpreters; (3) the scalability to synthesize problems arbitrarily within prototype space while ensuring correctness. Extensive experiments show that ProtoReasoning achieves 4.7% improvement over baseline models on logical reasoning (Enigmata-Eval), 6.3% improvement on planning tasks, 4.0% improvement on general reasoning (MMLU) and 1.0% on mathematics (AIME24). Significantly, our ablation studies confirm that learning in prototype space also demonstrates enhanced generalization to structurally similar problems compared to training solely on natural language representations, validating our hypothesis that reasoning prototypes serve as the foundation for generalizable reasoning in large language models.

Implicit Priors Editing in Stable Diffusion via Targeted Token Adjustment

Dec 04, 2024

Abstract:Implicit assumptions and priors are often necessary in text-to-image generation tasks, especially when textual prompts lack sufficient context. However, these assumptions can sometimes reflect outdated concepts, inaccuracies, or societal bias embedded in the training data. We present Embedding-only Editing (Embedit), a method designed to efficiently adjust implicit assumptions and priors in the model without affecting its interpretation of unrelated objects or overall performance. Given a "source" prompt (e.g., "rose") that elicits an implicit assumption (e.g., rose is red) and a "destination" prompt that specifies the desired attribute (e.g., "blue rose"), Embedit fine-tunes only the word token embedding (WTE) of the target object ("rose") to optimize the last hidden state of the text encoder in Stable Diffusion, a SOTA text-to-image model. This targeted adjustment prevents unintended effects on other objects in the model's knowledge base, as the WTEs for unrelated objects and the model weights remain unchanged. Consequently, when a prompt does not contain the edited object, all representations and model outputs are identical to those of the original, unedited model. Our method is highly efficient, modifying only 768 parameters for Stable Diffusion 1.4 and 2048 for XL in a single edit, matching the WTE dimension of each respective model. This minimal scope, combined with rapid execution, makes Embedit highly practical for real-world applications. Additionally, changes are easily reversible by restoring the original WTE layers. Our experimental results demonstrate that Embedit consistently outperforms previous methods across various models, tasks, and editing scenarios (both single and sequential multiple edits), achieving an improvement of at least 6.01 percentage points (from 87.17% to 93.18%).

Generalizing Graph Transformers Across Diverse Graphs and Tasks via Pre-Training on Industrial-Scale Data

Jul 04, 2024

Abstract:Graph pre-training has concentrated either on graph-level tasks on small graphs (e.g., molecular graphs) or on learning node representations on a fixed graph. Extending graph pre-trained models to web-scale graphs with billions of nodes in industrial scenarios, while avoiding negative transfer across graphs or tasks, remains a challenge. We aim to develop a general graph pre-trained model with inductive ability that can make predictions for unseen new nodes and even new graphs. In this work, we introduce a scalable transformer-based graph pre-training framework called PGT (Pre-trained Graph Transformer). Specifically, we design a flexible and scalable graph transformer as the backbone network. Meanwhile, based on the masked autoencoder architecture, we design two pre-training tasks: one for reconstructing node features and the other for reconstructing local structures. Unlike the original autoencoder architecture, where the pre-trained decoder is discarded, we propose a novel strategy that utilizes the decoder for feature augmentation. We have deployed our framework on Tencent's online game data. Extensive experiments have demonstrated that our framework can perform pre-training on real-world web-scale graphs with over 540 million nodes and 12 billion edges and generalizes effectively to unseen new graphs with different downstream tasks. We further conduct experiments on the publicly available ogbn-papers100M dataset, which consists of 111 million nodes and 1.6 billion edges. Our framework achieves state-of-the-art performance on both industrial datasets and public datasets, while also enjoying scalability and efficiency.

Improving Video Retrieval by Adaptive Margin

Mar 09, 2023

Abstract:Video retrieval is becoming increasingly important owing to the rapid emergence of videos on the Internet. The dominant paradigm for video retrieval learns video-text representations by pushing the similarity of positive pairs and that of negative pairs apart by a fixed margin. However, negative pairs used for training are sampled randomly, which means that the semantics of negative pairs may be related or even equivalent, while most methods still enforce dissimilar representations to decrease their similarity. This phenomenon leads to inaccurate supervision and poor performance in learning video-text representations. While most video retrieval methods overlook this phenomenon, we propose an adaptive margin that changes with the distance between positive and negative pairs to solve the aforementioned issue. First, we design the calculation framework of the adaptive margin, including the method of distance measurement and the function between the distance and the margin. Then, we explore a novel implementation called "Cross-Modal Generalized Self-Distillation" (CMGSD), which can be built on top of most video retrieval models with few modifications. Notably, CMGSD adds little computational overhead at train time and no computational overhead at test time. Experimental results on three widely used datasets demonstrate that the proposed method can yield significantly better performance than the corresponding backbone model, and it outperforms state-of-the-art methods by a large margin.
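
The adaptive-margin idea described above can be illustrated with a minimal sketch. This is not the CMGSD implementation: the cosine-based distance, the linear mapping from similarity to margin, and the names `adaptive_margin`, `hinge_loss`, and `max_margin` are all assumptions made for illustration. The only point it shows is that a negative that is semantically close to the positive receives a smaller margin than an unrelated one.

```python
def cosine(u, v):
    """Cosine similarity of two non-zero vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = sum(a * a for a in u) ** 0.5
    norm_v = sum(b * b for b in v) ** 0.5
    return dot / (norm_u * norm_v)


def adaptive_margin(pos_item, neg_item, max_margin=0.2):
    """Hypothetical mapping: the margin shrinks linearly as the negative
    item becomes more similar to the positive item."""
    sim = max(0.0, cosine(pos_item, neg_item))
    return max_margin * (1.0 - sim)


def hinge_loss(query, pos_item, neg_item, max_margin=0.2):
    """Triplet-style hinge loss whose margin depends on the positive/negative
    similarity instead of being a fixed constant."""
    margin = adaptive_margin(pos_item, neg_item, max_margin)
    return max(0.0, margin + cosine(query, neg_item) - cosine(query, pos_item))
```

With a fixed margin, a randomly sampled negative that happens to describe the same content as the positive would still be pushed away by the full margin. In this sketch such a negative gets a margin near zero, so it contributes little or nothing to the loss.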

Friend Recall in Online Games via Pre-training Edge Transformers

Feb 20, 2023

Abstract:Friend recall is an important way to improve Daily Active Users (DAU) in Tencent games. Traditional friend recall methods rely on rules such as friend intimacy, or train a classifier to predict lost players' return probability, but ignore feature information of (active) players and historical friend recall events. In this work, we treat friend recall as a link prediction problem and explore several link prediction methods that can use features of both active and lost players, as well as historical events. Furthermore, we propose a novel Edge Transformer model and pre-train the model via masked auto-encoders. Our method achieves state-of-the-art results in the offline experiments and online A/B tests of three Tencent games.

CLOP: Video-and-Language Pre-Training with Knowledge Regularizations

Nov 07, 2022

Abstract:Video-and-language pre-training has shown promising results for learning generalizable representations. Most existing approaches model video and text in an implicit manner, without considering explicit structural representations of the multi-modal content. We refer to this form of representation as structural knowledge, which expresses rich semantics at multiple granularities. There are related works that propose object-aware approaches to inject similar knowledge as inputs. However, the existing methods usually fail to effectively utilize such knowledge as regularizations to shape a superior cross-modal representation space. To this end, we propose a Cross-modaL knOwledge-enhanced Pre-training (CLOP) method with Knowledge Regularizations. It has two key designs: 1) a simple yet effective Structural Knowledge Prediction (SKP) task to pull together the latent representations of similar videos; and 2) a novel Knowledge-guided sampling approach for Contrastive Learning (KCL) to push apart cross-modal hard negative samples. We evaluate our method on four text-video retrieval tasks and one multi-choice QA task. The experiments show clear improvements, outperforming prior works by a substantial margin. In addition, we provide ablations and insights into how our methods affect the latent representation space, demonstrating the value of incorporating knowledge regularizations into video-and-language pre-training.

A CLIP-Enhanced Method for Video-Language Understanding

Oct 14, 2021

Abstract:This technical report summarizes our method for the Video-And-Language Understanding Evaluation (VALUE) challenge (https://value-benchmark.github.io/challenge_2021.html). We propose a CLIP-Enhanced method to incorporate the image-text pretrained knowledge into downstream video-text tasks. Combined with several other improved designs, our method outperforms the state-of-the-art by 2.4% (57.58 to 60.00) Meta-Ave score on the VALUE benchmark.

Effective and Efficient Network Embedding Initialization via Graph Partitioning

Aug 28, 2019

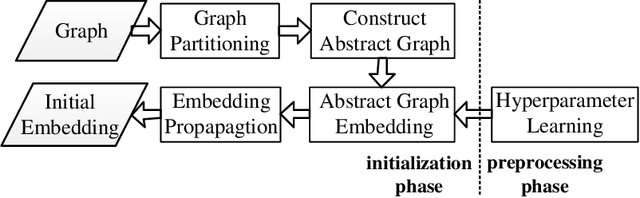

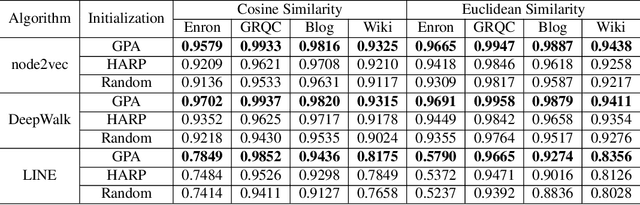

Abstract:Network embedding has been intensively studied in the literature and widely used in various applications, such as link prediction and node classification. While previous work focuses on the design of new algorithms or is tailored to various problem settings, the discussion of initialization strategies in the learning process is often missed. In this work, we address this important issue of initialization for network embedding, which can dramatically improve the performance of the algorithms in both effectiveness and efficiency. Specifically, we first exploit the graph partition technique that divides the graph into several disjoint subsets, and then construct an abstract graph based on the partitions. We obtain the initialization of the embedding for each node in the graph by computing the network embedding on the abstract graph, which is much smaller than the input graph, and then propagating the embedding among the nodes in the input graph. With extensive experiments on various datasets, we demonstrate that our initialization technique significantly improves the performance of the state-of-the-art algorithms on the evaluations of link prediction and node classification by up to 7.76% and 8.74% respectively. In addition, we show that the initialization technique reduces the running time of the state-of-the-art algorithms by at least 20%.
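
The partition, abstract graph, and propagation steps described above can be sketched roughly as follows. This is an illustrative toy under stated assumptions, not the paper's algorithm: the partition assignment `part_of` and the abstract-graph embedding `part_embedding` are taken as given inputs (in practice they would come from a graph partitioner and an embedding method), and the neighbor-averaging rule in `propagate` is one assumed way to spread embeddings over the input graph. All function names are hypothetical.

```python
from collections import defaultdict


def build_abstract_graph(edges, part_of):
    """Collapse each partition into one super-node. The weight of an abstract
    edge is the number of input edges crossing between the two partitions."""
    weights = defaultdict(int)
    for u, v in edges:
        pu, pv = part_of[u], part_of[v]
        if pu != pv:
            weights[(min(pu, pv), max(pu, pv))] += 1
    return dict(weights)


def init_from_partitions(part_of, part_embedding):
    """Every node starts from a copy of its partition's embedding."""
    return {node: list(part_embedding[p]) for node, p in part_of.items()}


def propagate(edges, emb):
    """One round of assumed neighbor averaging: each node's vector becomes
    the mean of its own vector and those of its neighbors."""
    neighbors = defaultdict(set)
    for u, v in edges:
        neighbors[u].add(v)
        neighbors[v].add(u)
    out = {}
    for node, vec in emb.items():
        group = [vec] + [emb[n] for n in neighbors[node]]
        out[node] = [sum(col) / len(group) for col in zip(*group)]
    return out


# Toy input: six nodes split into two partitions of three nodes each.
edges = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 5), (2, 5)]
part_of = {0: 0, 1: 0, 2: 0, 3: 1, 4: 1, 5: 1}
abstract = build_abstract_graph(edges, part_of)

# Stand-in for an embedding computed on the (much smaller) abstract graph.
part_embedding = {0: [1.0, 0.0], 1: [0.0, 1.0]}
init = propagate(edges, init_from_partitions(part_of, part_embedding))
```

Nodes deep inside a partition keep their partition's vector, while boundary nodes such as node 2 end up between the two partition vectors. The resulting `init` would then replace random initialization in whichever embedding algorithm is run on the full graph.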
