Yuhao Chen

Training-Free Text-to-Image Compositional Food Generation via Prompt Grafting

Jan 25, 2026

Abstract: Real-world meal images often contain multiple food items, making reliable compositional food image generation important for applications such as image-based dietary assessment, where multi-food data augmentation is needed, and recipe visualization. However, modern text-to-image diffusion models struggle to generate accurate multi-food images due to object entanglement, where adjacent foods (e.g., rice and soup) fuse together because many foods do not have clear boundaries. To address this challenge, we introduce Prompt Grafting (PG), a training-free framework that combines explicit spatial cues in text with implicit layout guidance during sampling. PG runs a two-stage process where a layout prompt first establishes distinct regions and the target prompt is grafted once layout formation stabilizes. The framework enables food entanglement control: users can specify which food items should remain separated or be intentionally mixed by editing the arrangement of layouts. Across two food datasets, our method significantly improves the presence of target objects and provides qualitative evidence of controllable separation.
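
The two-stage process described in this abstract can be pictured as a per-step prompt schedule. The sketch below is only an illustration under stated assumptions: the function names and the fixed `switch_step` are hypothetical, and the abstract says grafting happens once layout formation stabilizes, which a fixed step index merely stands in for.

```python
from typing import List


def prompt_for_step(step: int, switch_step: int,
                    layout_prompt: str, target_prompt: str) -> str:
    """Pick the prompt that conditions denoising step `step` (0-indexed).

    Assumption: the switch happens at a fixed step. The abstract only says
    the target prompt is grafted once the layout has stabilized.
    """
    return layout_prompt if step < switch_step else target_prompt


def grafting_schedule(num_steps: int, switch_step: int,
                      layout_prompt: str, target_prompt: str) -> List[str]:
    """List the conditioning prompt for every sampling step."""
    return [
        prompt_for_step(s, switch_step, layout_prompt, target_prompt)
        for s in range(num_steps)
    ]


# Hypothetical prompts, chosen only for illustration.
schedule = grafting_schedule(
    num_steps=5,
    switch_step=2,
    layout_prompt="two separate bowls on a table",
    target_prompt="a bowl of rice next to a bowl of soup",
)
```

In a real sampler, each entry of `schedule` would be encoded and passed as the text condition for the corresponding denoising step.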

Bridging the Discrete-Continuous Gap: Unified Multimodal Generation via Coupled Manifold Discrete Absorbing Diffusion

Jan 07, 2026

Abstract: The bifurcation of generative modeling into autoregressive approaches for discrete data (text) and diffusion approaches for continuous data (images) hinders the development of truly unified multimodal systems. While Masked Language Models (MLMs) offer efficient bidirectional context, they traditionally lack the generative fidelity of autoregressive models and the semantic continuity of diffusion models. Furthermore, extending masked generation to multimodal settings introduces severe alignment challenges and training instability. In this work, we propose CoM-DAD (Coupled Manifold Discrete Absorbing Diffusion), a novel probabilistic framework that reformulates multimodal generation as a hierarchical dual-process. CoM-DAD decouples high-level semantic planning from low-level token synthesis. First, we model the semantic manifold via a continuous latent diffusion process; second, we treat token generation as a discrete absorbing diffusion process, regulated by a Variable-Rate Noise Schedule, conditioned on these evolving semantic priors. Crucially, we introduce a Stochastic Mixed-Modal Transport strategy that aligns disparate modalities without requiring heavy contrastive dual-encoders. Our method demonstrates superior stability over standard masked modeling, establishing a new paradigm for scalable, unified text-image generation.

Stable Language Guidance for Vision-Language-Action Models

Jan 07, 2026

Abstract: Vision-Language-Action (VLA) models have demonstrated impressive capabilities in generalized robotic control; however, they remain notoriously brittle to linguistic perturbations. We identify a critical "modality collapse" phenomenon where strong visual priors overwhelm sparse linguistic signals, causing agents to overfit to specific instruction phrasings while ignoring the underlying semantic intent. To address this, we propose Residual Semantic Steering (RSS), a probabilistic framework that disentangles physical affordance from semantic execution. RSS introduces two theoretical innovations: (1) Monte Carlo Syntactic Integration, which approximates the true semantic posterior via dense, LLM-driven distributional expansion, and (2) Residual Affordance Steering, a dual-stream decoding mechanism that explicitly isolates the causal influence of language by subtracting the visual affordance prior. Theoretical analysis suggests that RSS effectively maximizes the mutual information between action and intent while suppressing visual distractors. Empirical results across diverse manipulation benchmarks demonstrate that RSS achieves state-of-the-art robustness, maintaining performance even under adversarial linguistic perturbations.

Specific Multi-emitter Identification: Theoretical Limits and Low-complexity Design

Dec 22, 2025

Abstract: Specific emitter identification (SEI) distinguishes emitters by utilizing hardware-induced signal imperfections. However, conventional SEI techniques are primarily designed for single-emitter scenarios. This poses a fundamental limitation in distributed wireless networks, where simultaneous transmissions from multiple emitters result in overlapping signals that conventional single-emitter identification methods cannot effectively handle. To overcome this limitation, we present a specific multi-emitter identification (SMEI) framework via multi-label learning, treating identification as a problem of directly decoding emitter states from overlapping signals. Theoretically, we establish performance bounds using Fano's inequality. Methodologically, the multi-label formulation reduces output dimensionality from exponential to linear scale, thereby substantially decreasing computational complexity. Additionally, we propose an improved SMEI (I-SMEI), which incorporates multi-head attention to effectively capture features in correlated signal combinations. Experimental results demonstrate that SMEI achieves high identification accuracy with a linear computational complexity. Furthermore, the proposed I-SMEI scheme significantly improves identification accuracy across various overlapping scenarios compared to the proposed SMEI and other advanced methods.
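
The exponential-to-linear reduction mentioned in this abstract can be made concrete with a small count. This is a minimal sketch, assuming each of `n` emitters is simply either active or silent; the function names and the 0.5 threshold are illustrative and not taken from the paper.

```python
from typing import List, Sequence


def joint_class_count(n_emitters: int) -> int:
    """Outputs needed if every on/off combination is its own class."""
    return 2 ** n_emitters


def multilabel_output_count(n_emitters: int) -> int:
    """Outputs needed with one binary label per emitter."""
    return n_emitters


def decode_active(probs: Sequence[float], threshold: float = 0.5) -> List[int]:
    """Turn per-emitter probabilities into the indices of active emitters.

    Assumption: a fixed threshold decides activity for each emitter.
    """
    return [i for i, p in enumerate(probs) if p >= threshold]


for n in (4, 10, 20):
    print(n, joint_class_count(n), multilabel_output_count(n))
```

With 20 emitters, the joint formulation would need 1,048,576 output classes, while the multi-label formulation needs 20 outputs.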

Avatar4D: Synthesizing Domain-Specific 4D Humans for Real-World Pose Estimation

Dec 18, 2025

Abstract: We present Avatar4D, a real-world transferable pipeline for generating customizable synthetic human motion datasets tailored to domain-specific applications. Unlike prior works, which focus on general, everyday motions and offer limited flexibility, our approach provides fine-grained control over body pose, appearance, camera viewpoint, and environmental context, without requiring any manual annotations. To validate the impact of Avatar4D, we focus on sports, where domain-specific human actions and movement patterns pose unique challenges for motion understanding. In this setting, we introduce Syn2Sport, a large-scale synthetic dataset spanning sports, including baseball and ice hockey. Avatar4D features high-fidelity 4D (3D geometry over time) human motion sequences with varying player appearances rendered in diverse environments. We benchmark several state-of-the-art pose estimation models on Syn2Sport and demonstrate their effectiveness for supervised learning, zero-shot transfer to real-world data, and generalization across sports. Furthermore, we evaluate how closely the generated synthetic data aligns with real-world datasets in feature space. Our results highlight the potential of such systems to generate scalable, controllable, and transferable human datasets for diverse domain-specific tasks without relying on domain-specific real data.

Composite Classifier-Free Guidance for Multi-Modal Conditioning in Wind Dynamics Super-Resolution

Dec 13, 2025

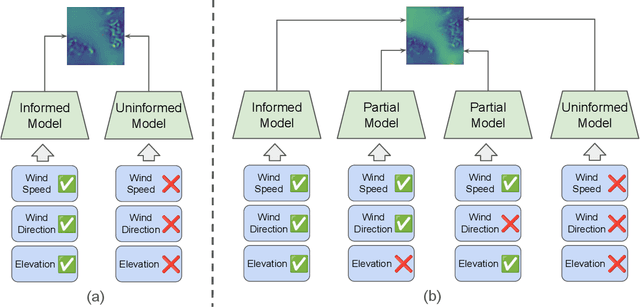

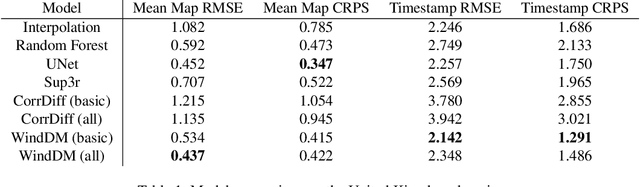

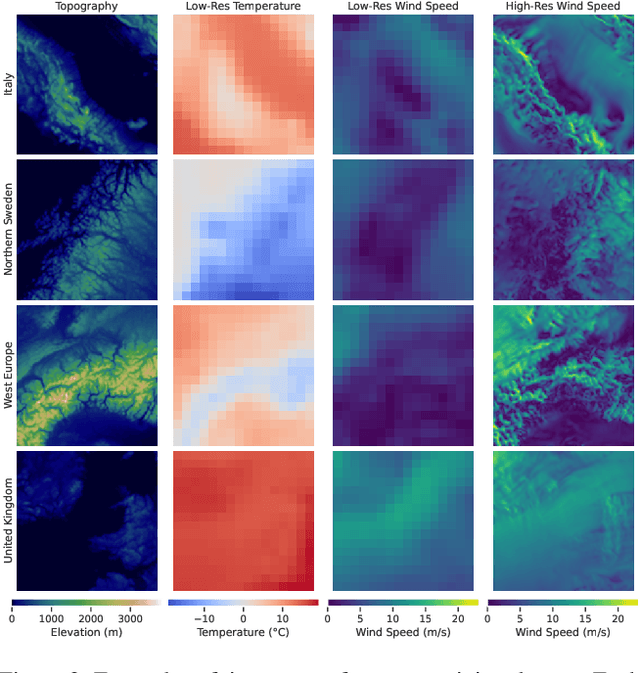

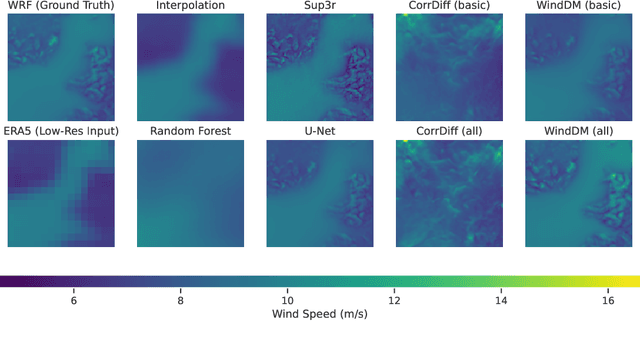

Abstract: Various weather modelling problems (e.g., weather forecasting, optimizing turbine placements, etc.) require ample access to high-resolution, highly accurate wind data. Acquiring such high-resolution wind data, however, remains a challenging and expensive endeavour. Traditional reconstruction approaches are typically either cost-effective or accurate, but not both. Deep learning methods, including diffusion models, have been proposed to resolve this trade-off by leveraging advances in natural image super-resolution. Wind data, however, is distinct from natural images, and wind super-resolvers often use upwards of 10 input channels, significantly more than the usual 3-channel RGB inputs in natural images. To better leverage a large number of conditioning variables in diffusion models, we present a generalization of classifier-free guidance (CFG) to multiple conditioning inputs. Our novel composite classifier-free guidance (CCFG) can be dropped into any pre-trained diffusion model trained with standard CFG dropout. We demonstrate that CCFG outputs are higher-fidelity than those from CFG on wind super-resolution tasks. We present WindDM, a diffusion model trained for industrial-scale wind dynamics reconstruction and leveraging CCFG. WindDM achieves state-of-the-art reconstruction quality among deep learning models and costs up to 1000× less than classical methods.
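
For readers unfamiliar with classifier-free guidance, the sketch below shows the standard single-condition rule and one generic way to extend it to several conditions. The abstract does not give the CCFG formula, so `multi_condition_guidance` is an assumption: it is the additive per-condition form known from composable diffusion, shown for orientation only and not as the paper's method. The weight convention (`w = 1` recovers the conditional prediction) is also a choice made here.

```python
from typing import List, Sequence


def cfg(eps_uncond: Sequence[float], eps_cond: Sequence[float],
        w: float) -> List[float]:
    """Standard CFG: move from the unconditional noise prediction
    toward the conditional one by guidance weight w."""
    return [u + w * (c - u) for u, c in zip(eps_uncond, eps_cond)]


def multi_condition_guidance(eps_uncond: Sequence[float],
                             eps_conds: Sequence[Sequence[float]],
                             weights: Sequence[float]) -> List[float]:
    """Hypothetical multi-condition extension (NOT the paper's CCFG):
    add one weighted guidance term per conditioning input."""
    out = list(eps_uncond)
    for eps_c, w in zip(eps_conds, weights):
        out = [o + w * (c - u) for o, c, u in zip(out, eps_c, eps_uncond)]
    return out


# Toy noise predictions standing in for model outputs.
guided = cfg([0.0, 1.0], [1.0, 3.0], w=2.0)
combined = multi_condition_guidance([0.0], [[1.0], [2.0]], [1.0, 0.5])
```

With a single condition, the multi-condition form reduces to standard CFG, which is the property any such generalization would be expected to keep.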

Food Image Generation on Multi-Noun Categories

Dec 09, 2025

Abstract: Generating realistic food images for categories with multiple nouns is surprisingly challenging. For instance, the prompt "egg noodle" may result in images that incorrectly contain both eggs and noodles as separate entities. Multi-noun food categories are common in real-world datasets and account for a large portion of entries in benchmarks such as UEC-256. These compound names often cause generative models to misinterpret the semantics, producing unintended ingredients or objects. This is due to insufficient multi-noun category related knowledge in the text encoder and misinterpretation of multi-noun relationships, leading to incorrect spatial layouts. To overcome these challenges, we propose FoCULR (Food Category Understanding and Layout Refinement) which incorporates food domain knowledge and introduces core concepts early in the generation process. Experimental results demonstrate that the integration of these techniques improves image generation performance in the food domain.

Look As You Think: Unifying Reasoning and Visual Evidence Attribution for Verifiable Document RAG via Reinforcement Learning

Nov 15, 2025

Abstract: Aiming to identify precise evidence sources from visual documents, visual evidence attribution for visual document retrieval-augmented generation (VD-RAG) ensures reliable and verifiable predictions from vision-language models (VLMs) in multimodal question answering. Most existing methods adopt end-to-end training to facilitate intuitive answer verification. However, they lack fine-grained supervision and progressive traceability throughout the reasoning process. In this paper, we introduce the Chain-of-Evidence (CoE) paradigm for VD-RAG. CoE unifies Chain-of-Thought (CoT) reasoning and visual evidence attribution by grounding reference elements in reasoning steps to specific regions with bounding boxes and page indexes. To enable VLMs to generate such evidence-grounded reasoning, we propose Look As You Think (LAT), a reinforcement learning framework that trains models to produce verifiable reasoning paths with consistent attribution. During training, LAT evaluates the attribution consistency of each evidence region and provides rewards only when the CoE trajectory yields correct answers, encouraging process-level self-verification. Experiments on vanilla Qwen2.5-VL-7B-Instruct with Paper- and Wiki-VISA benchmarks show that LAT consistently improves the vanilla model in both single- and multi-image settings, yielding average gains of 8.23% in soft exact match (EM) and 47.0% in IoU@0.5. Meanwhile, LAT not only outperforms the supervised fine-tuning baseline, which is trained to directly produce answers with attribution, but also exhibits stronger generalization across domains.
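
The IoU@0.5 figure reported in this abstract rests on the intersection-over-union of a predicted and a reference bounding box. The sketch below shows the usual computation, assuming boxes are given as `(x1, y1, x2, y2)` corner coordinates; the exact matching protocol used by the benchmarks is not stated in the abstract, so `hit_at` is only an illustrative reading of the "@0.5" threshold.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), with x1 <= x2 and y1 <= y2


def box_iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def hit_at(pred: Box, gt: Box, threshold: float = 0.5) -> bool:
    """Count a predicted evidence region as correct when its IoU with
    the reference box reaches the threshold (0.5 for IoU@0.5)."""
    return box_iou(pred, gt) >= threshold


# A 4x3 prediction inside a 4x4 reference box: IoU = 12 / 16 = 0.75.
print(hit_at((0, 0, 4, 3), (0, 0, 4, 4)))
```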

SCALEX: Scalable Concept and Latent Exploration for Diffusion Models

Nov 13, 2025

Abstract: Image generation models frequently encode social biases, including stereotypes tied to gender, race, and profession. Existing methods for analyzing these biases in diffusion models either focus narrowly on predefined categories or depend on manual interpretation of latent directions. These constraints limit scalability and hinder the discovery of subtle or unanticipated patterns. We introduce SCALEX, a framework for scalable and automated exploration of diffusion model latent spaces. SCALEX extracts semantically meaningful directions from H-space using only natural language prompts, enabling zero-shot interpretation without retraining or labelling. This allows systematic comparison across arbitrary concepts and large-scale discovery of internal model associations. We show that SCALEX detects gender bias in profession prompts, ranks semantic alignment across identity descriptors, and reveals clustered conceptual structure without supervision. By linking prompts to latent directions directly, SCALEX makes bias analysis in diffusion models more scalable, interpretable, and extensible than prior approaches.

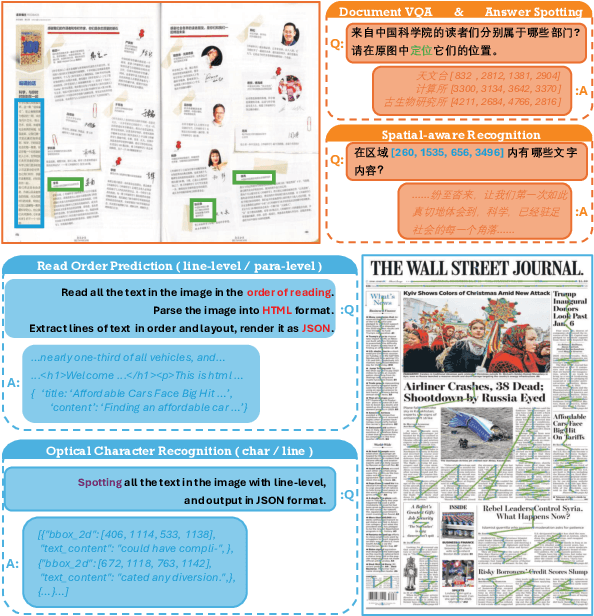

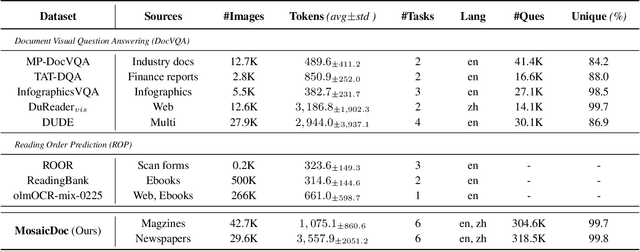

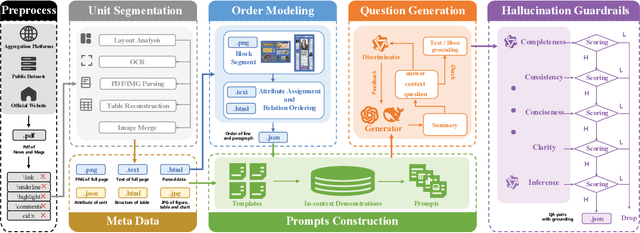

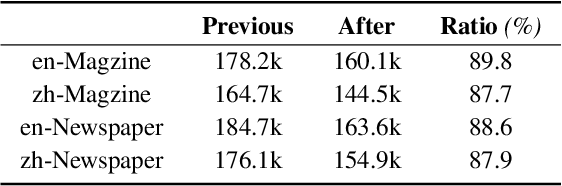

MosaicDoc: A Large-Scale Bilingual Benchmark for Visually Rich Document Understanding

Nov 13, 2025

Abstract: Despite the rapid progress of Vision-Language Models (VLMs), their capabilities are inadequately assessed by existing benchmarks, which are predominantly English-centric, feature simplistic layouts, and support limited tasks. Consequently, they fail to evaluate model performance for Visually Rich Document Understanding (VRDU), a critical challenge involving complex layouts and dense text. To address this, we introduce DocWeaver, a novel multi-agent pipeline that leverages Large Language Models to automatically generate a new benchmark. The result is MosaicDoc, a large-scale, bilingual (Chinese and English) resource designed to push the boundaries of VRDU. Sourced from newspapers and magazines, MosaicDoc features diverse and complex layouts (including multi-column and non-Manhattan), rich stylistic variety from 196 publishers, and comprehensive multi-task annotations (OCR, VQA, reading order, and localization). With 72K images and over 600K QA pairs, MosaicDoc serves as a definitive benchmark for the field. Our extensive evaluation of state-of-the-art models on this benchmark reveals their current limitations in handling real-world document complexity and charts a clear path for future research.
