Yuchen Cui

Casper: Inferring Diverse Intents for Assistive Teleoperation with Vision Language Models

Jun 17, 2025

Abstract: Assistive teleoperation, where control is shared between a human and a robot, enables efficient and intuitive human-robot collaboration in diverse and unstructured environments. A central challenge in real-world assistive teleoperation is for the robot to infer a wide range of human intentions from user control inputs and to assist users with correct actions. Existing methods are either confined to simple, predefined scenarios or restricted to task-specific data distributions at training, limiting their support for real-world assistance. We introduce Casper, an assistive teleoperation system that leverages commonsense knowledge embedded in pre-trained visual language models (VLMs) for real-time intent inference and flexible skill execution. Casper incorporates an open-world perception module for a generalized understanding of novel objects and scenes, a VLM-powered intent inference mechanism that leverages commonsense reasoning to interpret snippets of teleoperated user input, and a skill library that expands the scope of prior assistive teleoperation systems to support diverse, long-horizon mobile manipulation tasks. Extensive empirical evaluation, including human studies and system ablations, demonstrates that Casper improves task performance, reduces human cognitive load, and achieves higher user satisfaction than direct teleoperation and assistive teleoperation baselines.

SAMJAM: Zero-Shot Video Scene Graph Generation for Egocentric Kitchen Videos

Apr 10, 2025

Abstract: Video Scene Graph Generation (VidSGG) is an important topic in understanding dynamic kitchen environments. Current models for VidSGG require extensive training to produce scene graphs. Recently, Vision Language Models (VLMs) and Vision Foundation Models (VFMs) have demonstrated impressive zero-shot capabilities in a variety of tasks. However, VLMs like Gemini struggle with the dynamics for VidSGG, failing to maintain stable object identities across frames. To overcome this limitation, we propose SAMJAM, a zero-shot pipeline that combines SAM2's temporal tracking with Gemini's semantic understanding. SAM2 also improves upon Gemini's object grounding by producing more accurate bounding boxes. In our method, we first prompt Gemini to generate a frame-level scene graph. Then, we employ a matching algorithm to map each object in the scene graph to a SAM2-generated or SAM2-propagated mask, producing a temporally consistent scene graph in dynamic environments. Finally, we repeat this process for each subsequent frame. We empirically demonstrate that SAMJAM outperforms Gemini by 8.33% in mean recall on the EPIC-KITCHENS and EPIC-KITCHENS-100 datasets.
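
The abstract does not specify the matching algorithm. As a rough illustration only, the sketch below assumes a greedy intersection-over-union (IoU) assignment between the bounding boxes in a frame-level scene graph and the boxes of tracked masks. The function names, the box format, and the `min_iou` threshold are hypothetical and are not taken from SAMJAM.

```python
from typing import Dict, Tuple

# Hypothetical box format: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def match_objects_to_tracks(
    object_boxes: Dict[str, Box],
    track_boxes: Dict[int, Box],
    min_iou: float = 0.5,  # assumed threshold, not from the paper
) -> Dict[str, int]:
    """Greedily pair each scene-graph object with at most one track ID."""
    # Score every (object, track) pair, best overlap first.
    pairs = sorted(
        (
            (iou(obj_box, trk_box), name, track_id)
            for name, obj_box in object_boxes.items()
            for track_id, trk_box in track_boxes.items()
        ),
        reverse=True,
    )
    assigned: Dict[str, int] = {}
    used_tracks = set()
    for score, name, track_id in pairs:
        if score < min_iou:
            break  # remaining pairs overlap too little to count as a match
        if name in assigned or track_id in used_tracks:
            continue
        assigned[name] = track_id
        used_tracks.add(track_id)
    return assigned


objects = {"knife": (0, 0, 10, 10), "pan": (20, 20, 40, 40)}
tracks = {1: (21, 19, 41, 39), 2: (1, 1, 11, 11), 3: (100, 100, 110, 110)}
matches = match_objects_to_tracks(objects, tracks)
```

Reusing the matched track ID as the node identity in every frame is what would keep object identities stable over time in a design like this; objects left unmatched would need a newly generated mask.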

How to Train Your Robots? The Impact of Demonstration Modality on Imitation Learning

Mar 10, 2025

Abstract: Imitation learning is a promising approach for learning robot policies with user-provided data. The way demonstrations are provided, i.e., demonstration modality, influences the quality of the data. While existing research shows that kinesthetic teaching (physically guiding the robot) is preferred by users for its intuitiveness and ease of use, the majority of existing manipulation datasets were collected through teleoperation via a VR controller or spacemouse. In this work, we investigate how different demonstration modalities impact downstream learning performance as well as user experience. Specifically, we compare low-cost demonstration modalities including kinesthetic teaching, teleoperation with a VR controller, and teleoperation with a spacemouse controller. We experiment with three table-top manipulation tasks with different motion constraints. We evaluate and compare imitation learning performance using data from different demonstration modalities, and collect subjective feedback on user experience. Our results show that kinesthetic teaching is rated the most intuitive for controlling the robot and provides the cleanest data for the best downstream learning performance. However, it is not preferred for large-scale data collection due to the physical load. Based on this insight, we propose a simple data collection scheme that relies on a small number of kinesthetic demonstrations mixed with data collected through teleoperation to achieve the best overall learning performance while maintaining low data-collection effort.

Shared Autonomy for Proximal Teaching

Feb 27, 2025

Abstract: Motor skill learning often requires experienced professionals who can provide personalized instruction. Unfortunately, the availability of high-quality training can be limited for specialized tasks, such as high performance racing. Several recent works have leveraged AI-assistance to improve instruction of tasks ranging from rehabilitation to surgical robot tele-operation. However, these works often make simplifying assumptions on the student learning process, and fail to model how a teacher's assistance interacts with different individuals' abilities when determining optimal teaching strategies. Inspired by the idea of scaffolding from educational psychology, we leverage shared autonomy, a framework for combining user inputs with robot autonomy, to aid with curriculum design. Our key insight is that the way a student's behavior improves in the presence of assistance from an autonomous agent can highlight which sub-skills might be most "learnable" for the student, or within their Zone of Proximal Development. We use this to design Z-COACH, a method for using shared autonomy to provide personalized instruction targeting interpretable task sub-skills. In a user study (n=50), where we teach high performance racing in a simulated environment of the Thunderhill Raceway Park with the CARLA Autonomous Driving simulator, we show that Z-COACH helps identify which skills each student should first practice, leading to an overall improvement in driving time, behavior, and smoothness. Our work shows that increasingly available semi-autonomous capabilities (e.g. in vehicles, robots) can not only assist human users, but also help *teach* them.

Statistical Guarantees for Lifelong Reinforcement Learning using PAC-Bayesian Theory

Nov 01, 2024

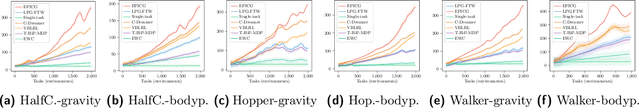

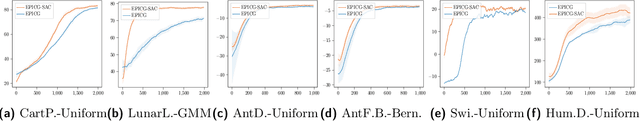

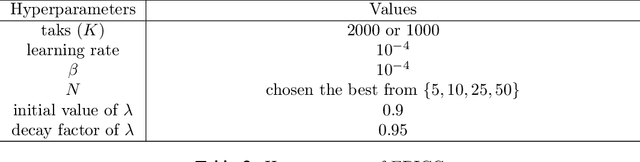

Abstract: Lifelong reinforcement learning (RL) has been developed as a paradigm for extending single-task RL to more realistic, dynamic settings. In lifelong RL, the "life" of an RL agent is modeled as a stream of tasks drawn from a task distribution. We propose EPIC (Empirical PAC-Bayes that Improves Continuously), a novel algorithm designed for lifelong RL using PAC-Bayes theory. EPIC learns a shared policy distribution, referred to as the world policy, which enables rapid adaptation to new tasks while retaining valuable knowledge from previous experiences. Our theoretical analysis establishes a relationship between the algorithm's generalization performance and the number of prior tasks preserved in memory. We also derive the sample complexity of EPIC in terms of RL regret. Extensive experiments on a variety of environments demonstrate that EPIC significantly outperforms existing methods in lifelong RL, offering both theoretical guarantees and practical efficacy through the use of the world policy.

FlowRetrieval: Flow-Guided Data Retrieval for Few-Shot Imitation Learning

Aug 29, 2024

Abstract: Few-shot imitation learning relies on only a small amount of task-specific demonstrations to efficiently adapt a policy for a given downstream task. Retrieval-based methods come with a promise of retrieving relevant past experiences to augment this target data when learning policies. However, existing data retrieval methods fall under two extremes: they either rely on the existence of exact behaviors with visually similar scenes in the prior data, which is impractical to assume; or they retrieve based on semantic similarity of high-level language descriptions of the task, which might not be informative about the shared low-level behaviors or motions across tasks, often a more important factor for retrieving relevant data for policy learning. In this work, we investigate how we can leverage motion similarity in the vast amount of cross-task data to improve few-shot imitation learning of the target task. Our key insight is that motion-similar data carries rich information about the effects of actions and object interactions that can be leveraged during few-shot adaptation. We propose FlowRetrieval, an approach that leverages optical flow representations both for extracting motions similar to the target task from prior data and for guiding learning of a policy that can maximally benefit from such data. Our results show FlowRetrieval significantly outperforms prior methods across simulated and real-world domains, achieving on average 27% higher success rate than the best retrieval-based prior method. In the Pen-in-Cup task with a real Franka Emika robot, FlowRetrieval achieves 3.7x the performance of the baseline imitation learning technique that learns from all prior and target data. Website: https://flow-retrieval.github.io
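
To make the idea of similarity-based retrieval concrete, here is a minimal sketch that assumes each sample has already been encoded as a fixed-length motion embedding (for example, from an optical-flow encoder) and that prior samples are ranked by cosine similarity to the target demonstrations. The function names, the max-over-targets scoring rule, and the top-k cutoff are illustrative assumptions, not the procedure used by FlowRetrieval.

```python
import math
from typing import List, Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def retrieve_top_k(
    target_embeddings: Sequence[Sequence[float]],
    prior_embeddings: Sequence[Sequence[float]],
    k: int,
) -> List[int]:
    """Return indices of the k prior samples most similar to any target sample."""
    if not target_embeddings:
        return []
    # Assumed scoring rule: a prior sample is as relevant as its best match
    # among the target demonstrations.
    scores = [
        max(cosine_similarity(prior, target) for target in target_embeddings)
        for prior in prior_embeddings
    ]
    ranked = sorted(range(len(prior_embeddings)), key=lambda i: scores[i], reverse=True)
    return ranked[:k]


# Toy 2-D embeddings standing in for learned motion features.
target = [[1.0, 0.0]]
prior = [[0.0, 1.0], [1.0, 0.1], [-1.0, 0.0], [2.0, 0.0]]
selected = retrieve_top_k(target, prior, k=2)
```

The retrieved indices would then select which prior trajectories are mixed with the few target demonstrations during policy training.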

Distilling and Retrieving Generalizable Knowledge for Robot Manipulation via Language Corrections

Nov 17, 2023

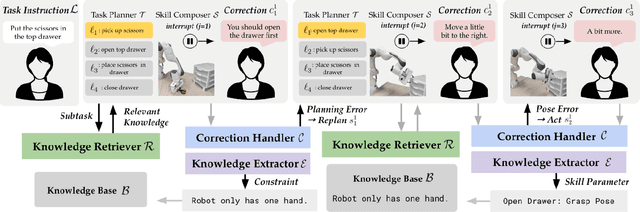

Abstract: Today's robot policies exhibit subpar performance when faced with the challenge of generalizing to novel environments. Human corrective feedback is a crucial form of guidance to enable such generalization. However, adapting to and learning from online human corrections is a non-trivial endeavor: not only do robots need to remember human feedback over time to retrieve the right information in new settings and reduce the intervention rate, but they also need to respond to feedback that can range from arbitrary corrections about high-level human preferences to low-level adjustments to skill parameters. In this work, we present Distillation and Retrieval of Online Corrections (DROC), a large language model (LLM)-based system that can respond to arbitrary forms of language feedback, distill generalizable knowledge from corrections, and retrieve relevant past experiences based on textual and visual similarity for improving performance in novel settings. DROC is able to respond to a sequence of online language corrections that address failures in both high-level task plans and low-level skill primitives. We demonstrate that DROC effectively distills the relevant information from the sequence of online corrections in a knowledge base and retrieves that knowledge in settings with new task or object instances. DROC outperforms other techniques that directly generate robot code via LLMs by using only half of the total number of corrections needed in the first round, and it requires few to no corrections after two iterations. We show further results, videos, prompts and code on https://sites.google.com/stanford.edu/droc.

Open X-Embodiment: Robotic Learning Datasets and RT-X Models

Oct 17, 2023

Abstract: Large, high-capacity models trained on diverse datasets have shown remarkable successes on efficiently tackling downstream applications. In domains from NLP to Computer Vision, this has led to a consolidation of pretrained models, with general pretrained backbones serving as a starting point for many applications. Can such a consolidation happen in robotics? Conventionally, robotic learning methods train a separate model for every application, every robot, and even every environment. Can we instead train a generalist X-robot policy that can be adapted efficiently to new robots, tasks, and environments? In this paper, we provide datasets in standardized data formats and models to make it possible to explore this possibility in the context of robotic manipulation, alongside experimental results that provide an example of effective X-robot policies. We assemble a dataset from 22 different robots collected through a collaboration between 21 institutions, demonstrating 527 skills (160266 tasks). We show that a high-capacity model trained on this data, which we call RT-X, exhibits positive transfer and improves the capabilities of multiple robots by leveraging experience from other platforms. More details can be found on the project website: https://robotics-transformer-x.github.io

Gesture-Informed Robot Assistance via Foundation Models

Sep 07, 2023

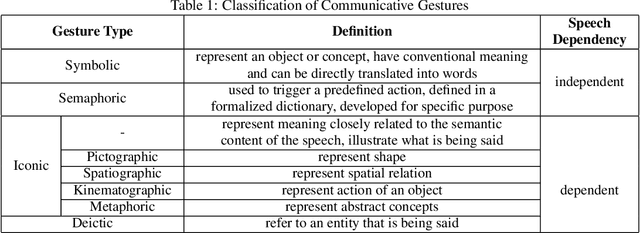

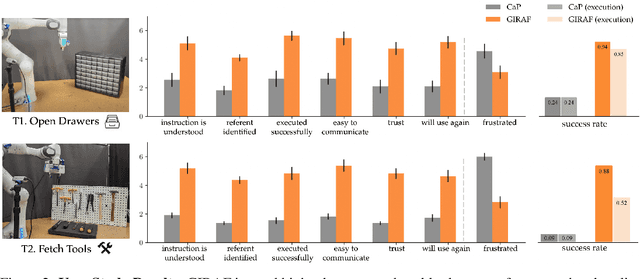

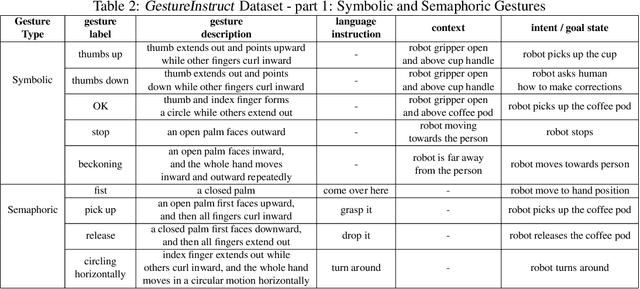

Abstract: Gestures serve as a fundamental and significant mode of non-verbal communication among humans. Deictic gestures (such as pointing towards an object), in particular, offer valuable means of efficiently expressing intent in situations where language is inaccessible, restricted, or highly specialized. As a result, it is essential for robots to comprehend gestures in order to infer human intentions and establish more effective coordination with them. Prior work often relies on a rigid hand-coded library of gestures along with their meanings. However, interpretation of gestures is often context-dependent, requiring more flexibility and common-sense reasoning. In this work, we propose a framework, GIRAF, for more flexibly interpreting gesture and language instructions by leveraging the power of large language models. Our framework is able to accurately infer human intent and contextualize the meaning of their gestures for more effective human-robot collaboration. We instantiate the framework for interpreting deictic gestures in table-top manipulation tasks and demonstrate that it is both effective and preferred by users, achieving 70% higher success rates than the baseline. We further demonstrate GIRAF's ability to reason about diverse types of gestures by curating a GestureInstruct dataset consisting of 36 different task scenarios. GIRAF achieved an 81% success rate on finding the correct plan for tasks in GestureInstruct. Website: https://tinyurl.com/giraf23

HYDRA: Hybrid Robot Actions for Imitation Learning

Jun 29, 2023

Abstract: Imitation Learning (IL) is a sample-efficient paradigm for robot learning using expert demonstrations. However, policies learned through IL suffer from state distribution shift at test time, due to compounding errors in action prediction which lead to previously unseen states. Choosing an action representation for the policy that minimizes this distribution shift is critical in imitation learning. Prior work proposes using temporal action abstractions to reduce compounding errors, but these often sacrifice policy dexterity or require domain-specific knowledge. To address these trade-offs, we introduce HYDRA, a method that leverages a hybrid action space with two levels of action abstractions: sparse high-level waypoints and dense low-level actions. HYDRA dynamically switches between action abstractions at test time to enable both coarse and fine-grained control of a robot. In addition, HYDRA employs action relabeling to increase the consistency of actions in the dataset, further reducing distribution shift. HYDRA outperforms prior imitation learning methods by 30-40% on seven challenging simulation and real world environments, involving long-horizon tasks in the real world like making coffee and toasting bread. Videos are found on our website: https://tinyurl.com/3mc6793z
