Haochen Zhang

Legendre Memory Unit with A Multi-Slice Compensation Model for Short-Term Wind Speed Forecasting Based on Wind Farm Cluster Data

Feb 04, 2026

Abstract:With more wind farms clustered for integration, the short-term wind speed prediction of such wind farm clusters is critical for the normal operation of power systems. This paper focuses on achieving accurate, fast, and robust wind speed prediction by making full use of cluster data with spatial-temporal correlation. First, weighted mean filtering (WMF) is applied to denoise wind speed data at the single-farm level. The Legendre memory unit (LMU) is then innovatively applied for the wind speed prediction, in combination with the Compensating Parameter based on Kendall rank correlation coefficient (CPK) of wind farm cluster data, to construct the multi-slice LMU (MSLMU). Finally, an innovative ensemble model WMF-CPK-MSLMU is proposed herein, with three key blocks: data pre-processing, forecasting, and multi-slice compensation. Advantages include: 1) LMU jointly models linear and nonlinear dependencies among farms to capture spatial-temporal correlations through backpropagation; 2) MSLMU enhances forecasting by using CPK-derived weights instead of random initialization, allowing spatial correlations to fully activate hidden nodes across clustered wind farms; 3) CPK adaptively weights the compensation model in MSLMU and complements missing data spatially, making the whole model highly accurate and robust. Test results on different wind farm clusters indicate the effectiveness and superiority of the proposed ensemble model WMF-CPK-MSLMU in the short-term prediction of wind farm clusters compared to the existing models.
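As a rough illustration of correlation-derived weighting across farms, the sketch below computes the Kendall rank correlation coefficient (tau-a) between a target farm's wind speed series and each neighboring farm's series, then turns the coefficients into weights. The abstract does not define how CPK is computed, so the clip-and-normalize rule in `correlation_weights`, the function names, and the sample data are all assumptions made for illustration, not the paper's method.

```python
from itertools import combinations


def kendall_tau(x, y):
    """Kendall rank correlation (tau-a): (concordant - discordant) / number of pairs."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    score = 0
    for i, j in combinations(range(len(x)), 2):
        prod = (x[i] - x[j]) * (y[i] - y[j])
        if prod > 0:
            score += 1   # concordant pair
        elif prod < 0:
            score -= 1   # discordant pair (tied pairs contribute 0)
    n_pairs = len(x) * (len(x) - 1) // 2
    return score / n_pairs


def correlation_weights(target, neighbors):
    """Hypothetical weighting rule: clip negative tau to 0, then normalize to sum to 1."""
    taus = [max(kendall_tau(target, series), 0.0) for series in neighbors]
    total = sum(taus)
    if total == 0:
        # No positively correlated neighbor: fall back to uniform weights.
        return [1.0 / len(neighbors)] * len(neighbors)
    return [t / total for t in taus]


# Made-up wind speed samples (m/s) for a target farm and two neighbors.
target = [5.1, 5.8, 6.4, 6.0, 7.2]
farm_a = [4.9, 5.5, 6.1, 5.9, 7.0]   # same rank ordering as the target
farm_b = [7.0, 6.2, 5.0, 5.6, 4.1]   # reversed rank ordering
weights = correlation_weights(target, [farm_a, farm_b])
```

Because Kendall's tau depends only on rank order, a neighbor whose series rises and falls with the target receives a high weight even when its absolute wind speeds differ.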

Non-Markov Multi-Round Conversational Image Generation with History-Conditioned MLLMs

Jan 28, 2026

Abstract:Conversational image generation requires a model to follow user instructions across multiple rounds of interaction, grounded in interleaved text and images that accumulate as chat history. While recent multimodal large language models (MLLMs) can generate and edit images, most existing multi-turn benchmarks and training recipes are effectively Markov: the next output depends primarily on the most recent image, enabling shortcut solutions that ignore long-range history. In this work we formalize and target the more challenging non-Markov setting, where a user may refer back to earlier states, undo changes, or reference entities introduced several rounds ago. We present (i) non-Markov multi-round data construction strategies, including rollback-style editing that forces retrieval of earlier visual states and name-based multi-round personalization that binds names to appearances across rounds; (ii) a history-conditioned training and inference framework with token-level caching to prevent multi-round identity drift; and (iii) enabling improvements for high-fidelity image reconstruction and editable personalization, including a reconstruction-based DiT detokenizer and a multi-stage fine-tuning curriculum. We demonstrate that explicitly training for non-Markov interactions yields substantial improvements in multi-round consistency and instruction compliance, while maintaining strong single-round editing and personalization.

Support Basis: Fast Attention Beyond Bounded Entries

Oct 02, 2025

Abstract:The quadratic complexity of softmax attention remains a central bottleneck in scaling large language models (LLMs). [Alman and Song, NeurIPS 2023] proposed a sub-quadratic attention approximation algorithm, but it works only under the restrictive bounded-entry assumption. Since this assumption rarely holds in practice, its applicability to modern LLMs is limited. In this paper, we introduce support-basis decomposition, a new framework for efficient attention approximation beyond bounded entries. We empirically demonstrate that the entries of the query and key matrices exhibit sub-Gaussian behavior. Our approach uses this property to split large and small entries, enabling exact computation on sparse components and polynomial approximation on dense components. We establish rigorous theoretical guarantees, proving a sub-quadratic runtime, and extend the method to a multi-threshold setting that eliminates all distributional assumptions. Furthermore, we provide the first theoretical justification for the empirical success of polynomial attention [Kacham, Mirrokni, and Zhong, ICML 2024], showing that softmax attention can be closely approximated by a combination of multiple polynomial attentions with sketching.
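The split between large and small entries can be pictured with a toy example. The sketch below separates a small matrix into a sparse part holding the large-magnitude entries and a dense part whose entries are bounded by a threshold, then checks that a low-degree Taylor polynomial of `exp` is accurate on the bounded part but poor on a large entry. This is only a scalar-level illustration under assumed values (the threshold, the degree, and the matrix are made up); it does not reproduce the paper's algorithm, which operates on query and key matrices inside attention.

```python
import math


def split_by_threshold(matrix, threshold):
    """Split matrix into (large, small) parts with large + small == matrix entrywise."""
    large = [[v if abs(v) > threshold else 0.0 for v in row] for row in matrix]
    small = [[v if abs(v) <= threshold else 0.0 for v in row] for row in matrix]
    return large, small


def exp_taylor(x, degree):
    """Truncated Taylor polynomial of exp(x) around 0."""
    return sum(x ** k / math.factorial(k) for k in range(degree + 1))


# Made-up matrix: mostly small entries plus two large outliers.
Q = [[0.1, -0.3, 4.0],
     [0.2, 0.05, -0.4],
     [-5.5, 0.3, 0.0]]
large, small = split_by_threshold(Q, threshold=1.0)

# The large part is sparse, so it could be handled exactly at low cost.
nnz_large = sum(v != 0.0 for row in large for v in row)

# On the bounded part, a degree-6 polynomial tracks exp closely.
worst_err = max(abs(math.exp(v) - exp_taylor(v, 6)) for row in small for v in row)
```

The same polynomial applied to the outlier `4.0` misses `exp(4.0)` by a wide margin, which is the intuition for treating large entries separately rather than assuming all entries are bounded.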

Regret-Optimal Q-Learning with Low Cost for Single-Agent and Federated Reinforcement Learning

Jun 05, 2025

Abstract:Motivated by real-world settings where data collection and policy deployment -- whether for a single agent or across multiple agents -- are costly, we study the problem of on-policy single-agent reinforcement learning (RL) and federated RL (FRL) with a focus on minimizing burn-in costs (the sample sizes needed to reach near-optimal regret) and policy switching or communication costs. In parallel finite-horizon episodic Markov Decision Processes (MDPs) with $S$ states and $A$ actions, existing methods either require superlinear burn-in costs in $S$ and $A$ or fail to achieve logarithmic switching or communication costs. We propose two novel model-free RL algorithms -- Q-EarlySettled-LowCost and FedQ-EarlySettled-LowCost -- that are the first in the literature to simultaneously achieve: (i) the best near-optimal regret among all known model-free RL or FRL algorithms, (ii) low burn-in cost that scales linearly with $S$ and $A$, and (iii) logarithmic policy switching cost for single-agent RL or communication cost for FRL. Additionally, we establish gap-dependent theoretical guarantees for both regret and switching/communication costs, improving or matching the best-known gap-dependent bounds.

SORT3D: Spatial Object-centric Reasoning Toolbox for Zero-Shot 3D Grounding Using Large Language Models

Apr 25, 2025

Abstract:Interpreting object-referential language and grounding objects in 3D with spatial relations and attributes is essential for robots operating alongside humans. However, this task is often challenging due to the diversity of scenes, the large number of fine-grained objects, and the complex free-form nature of language references. Furthermore, in the 3D domain, obtaining large amounts of natural language training data is difficult. Thus, it is important for methods to learn from little data and generalize zero-shot to new environments. To address these challenges, we propose SORT3D, an approach that utilizes rich object attributes from 2D data and merges a heuristics-based spatial reasoning toolbox with the ability of large language models (LLMs) to perform sequential reasoning. Importantly, our method does not require text-to-3D data for training and can be applied zero-shot to unseen environments. We show that SORT3D achieves state-of-the-art performance on complex view-dependent grounding tasks on two benchmarks. We also implement the pipeline to run in real time on an autonomous vehicle and demonstrate that our approach can be used for object-goal navigation in previously unseen real-world environments. All source code for the system pipeline is publicly released at https://github.com/nzantout/SORT3D.

I3S: Importance Sampling Subspace Selection for Low-Rank Optimization in LLM Pretraining

Feb 09, 2025

Abstract:Low-rank optimization has emerged as a promising approach to enabling memory-efficient training of large language models (LLMs). Existing low-rank optimization methods typically project gradients onto a low-rank subspace, reducing the memory cost of storing optimizer states. A key challenge in these methods is identifying suitable subspaces to ensure an effective optimization trajectory. Most existing approaches select the dominant subspace to preserve gradient information, as this intuitively provides the best approximation. However, we find that in practice, the dominant subspace stops changing during pretraining, thereby constraining weight updates to similar subspaces. In this paper, we propose importance sampling subspace selection (I3S) for low-rank optimization, which theoretically offers a comparable convergence rate to the dominant subspace approach. Empirically, we demonstrate that I3S significantly outperforms previous methods in LLM pretraining tasks.
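To make the contrast between dominant-subspace selection and sampling-based selection concrete, the sketch below picks which singular directions of a gradient to keep, given only its singular values. The abstract does not specify the sampling distribution used by I3S, so drawing indices without replacement with probability proportional to the singular values is an assumption here, and both function names are hypothetical.

```python
import random


def dominant_indices(singular_values, rank):
    """Indices of the `rank` largest singular values (dominant subspace)."""
    order = sorted(range(len(singular_values)), key=lambda i: -singular_values[i])
    return sorted(order[:rank])


def sampled_indices(singular_values, rank, rng):
    """Draw `rank` distinct indices, each draw proportional to the singular value.

    Assumed sampling rule for illustration; not taken from the paper.
    """
    if rank > len(singular_values):
        raise ValueError("rank exceeds the number of singular values")
    remaining = list(range(len(singular_values)))
    chosen = []
    for _ in range(rank):
        weights = [singular_values[i] for i in remaining]
        pick = rng.choices(remaining, weights=weights, k=1)[0]
        chosen.append(pick)
        remaining.remove(pick)   # sample without replacement
    return sorted(chosen)


# Made-up singular values of a gradient matrix, in descending order.
sigma = [5.0, 3.0, 1.0, 0.5]
top = dominant_indices(sigma, rank=2)
draws = [sampled_indices(sigma, 2, random.Random(seed)) for seed in range(200)]
```

Dominant selection returns the same indices whenever the spectrum ordering is unchanged, whereas the sampled selection still favors large singular values but occasionally includes smaller directions, so successive projections need not stay in one subspace.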

Gap-Dependent Bounds for Federated $Q$-learning

Feb 05, 2025

Abstract:We present the first gap-dependent analysis of regret and communication cost for on-policy federated $Q$-Learning in tabular episodic finite-horizon Markov decision processes (MDPs). Existing FRL methods focus on worst-case scenarios, leading to $\sqrt{T}$-type regret bounds and communication cost bounds with a $\log T$ term scaling with the number of agents $M$, states $S$, and actions $A$, where $T$ is the average total number of steps per agent. In contrast, our novel framework leverages the benign structures of MDPs, such as a strictly positive suboptimality gap, to achieve a $\log T$-type regret bound and a refined communication cost bound that disentangles exploration and exploitation. Our gap-dependent regret bound reveals a distinct multi-agent speedup pattern, and our gap-dependent communication cost bound removes the dependence on $MSA$ from the $\log T$ term. Notably, our gap-dependent communication cost bound also yields a better global switching cost when $M=1$, removing $SA$ from the $\log T$ term.

Topology-Preserving Image Segmentation with Spatial-Aware Persistent Feature Matching

Dec 03, 2024

Abstract:Topological correctness is critical for segmentation of tubular structures. Existing topological segmentation loss functions are primarily based on the persistent homology of the image. They match the persistent features from the segmentation with the persistent features from the ground truth and minimize the difference between them. However, these methods suffer from an ambiguous matching problem since the matching only relies on the information in the topological space. In this work, we propose an effective and efficient Spatial-Aware Topological Loss Function that further leverages the information in the original spatial domain of the image to assist the matching of persistent features. Extensive experiments on images of various types of tubular structures show that the proposed method has superior performance in improving the topological accuracy of the segmentation compared with state-of-the-art methods.

VLA-3D: A Dataset for 3D Semantic Scene Understanding and Navigation

Nov 05, 2024

Abstract:With the recent rise of Large Language Models (LLMs), Vision-Language Models (VLMs), and other general foundation models, there is growing potential for multimodal, multi-task embodied agents that can operate in diverse environments given only natural language as input. One such application area is indoor navigation using natural language instructions. However, despite recent progress, this problem remains challenging due to the spatial reasoning and semantic understanding required, particularly in arbitrary scenes that may contain many objects belonging to fine-grained classes. To address this challenge, we curate the largest real-world dataset for Vision and Language-guided Action in 3D Scenes (VLA-3D), consisting of over 11.5K scanned 3D indoor rooms from existing datasets, 23.5M heuristically generated semantic relations between objects, and 9.7M synthetically generated referential statements. Our dataset consists of processed 3D point clouds, semantic object and room annotations, scene graphs, navigable free space annotations, and referential language statements that specifically focus on view-independent spatial relations for disambiguating objects. The goal of these features is to aid the downstream task of navigation, especially on real-world systems where some level of robustness must be guaranteed in an open world of changing scenes and imperfect language. We benchmark our dataset with current state-of-the-art models to obtain a performance baseline. All code to generate and visualize the dataset is publicly released, see https://github.com/HaochenZ11/VLA-3D. With the release of this dataset, we hope to provide a resource for progress in semantic 3D scene understanding that is robust to changes and one which will aid the development of interactive indoor navigation systems.

Statistical Guarantees for Lifelong Reinforcement Learning using PAC-Bayesian Theory

Nov 01, 2024

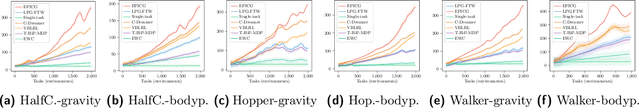

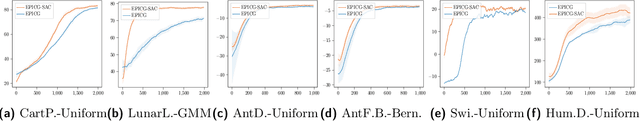

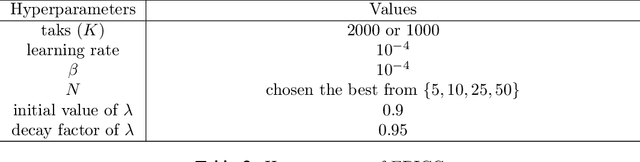

Abstract:Lifelong reinforcement learning (RL) has been developed as a paradigm for extending single-task RL to more realistic, dynamic settings. In lifelong RL, the "life" of an RL agent is modeled as a stream of tasks drawn from a task distribution. We propose EPIC (Empirical PAC-Bayes that Improves Continuously), a novel algorithm designed for lifelong RL using PAC-Bayes theory. EPIC learns a shared policy distribution, referred to as the world policy, which enables rapid adaptation to new tasks while retaining valuable knowledge from previous experiences. Our theoretical analysis establishes a relationship between the algorithm's generalization performance and the number of prior tasks preserved in memory. We also derive the sample complexity of EPIC in terms of RL regret. Extensive experiments on a variety of environments demonstrate that EPIC significantly outperforms existing methods in lifelong RL, offering both theoretical guarantees and practical efficacy through the use of the world policy.
