Yu Jiang

Certifying the Right to Be Forgotten: Primal-Dual Optimization for Sample and Label Unlearning in Vertical Federated Learning

Dec 29, 2025Abstract:Federated unlearning has become an attractive approach to address privacy concerns in collaborative machine learning, for situations when sensitive data is remembered by AI models during the machine learning process. It enables the removal of specific data influences from trained models, aligning with the growing emphasis on the "right to be forgotten." While extensively studied in horizontal federated learning, unlearning in vertical federated learning (VFL) remains challenging due to the distributed feature architecture. VFL unlearning includes sample unlearning that removes specific data points' influence and label unlearning that removes entire classes. Since different parties hold complementary features of the same samples, unlearning tasks require cross-party coordination, creating computational overhead and complexities from feature interdependencies. To address such challenges, we propose FedORA (Federated Optimization for data Removal via primal-dual Algorithm), designed for sample and label unlearning in VFL. FedORA formulates the removal of certain samples or labels as a constrained optimization problem solved using a primal-dual framework. Our approach introduces a new unlearning loss function that promotes classification uncertainty rather than misclassification. An adaptive step size enhances stability, while an asymmetric batch design, considering the prior influence of the remaining data on the model, handles unlearning and retained data differently to efficiently reduce computational costs. We provide theoretical analysis proving that the model difference between FedORA and Train-from-scratch is bounded, establishing guarantees for unlearning effectiveness. Experiments on tabular and image datasets demonstrate that FedORA achieves unlearning effectiveness and utility preservation comparable to Train-from-scratch with reduced computation and communication overhead.

* Published in the IEEE Transactions on Information Forensics and Security

Rare Word Recognition and Translation Without Fine-Tuning via Task Vector in Speech Models

Dec 26, 2025Abstract:Rare words remain a critical bottleneck for speech-to-text systems. While direct fine-tuning improves recognition of target words, it often incurs high cost, catastrophic forgetting, and limited scalability. To address these challenges, we propose a training-free paradigm based on task vectors for rare word recognition and translation. By defining task vectors as parameter differences and introducing word-level task vector arithmetic, our approach enables flexible composition of rare-word capabilities, greatly enhancing scalability and reusability. Extensive experiments across multiple domains show that the proposed method matches or surpasses fine-tuned models on target words, improves general performance by about 5 BLEU, and mitigates catastrophic forgetting.

FineSkiing: A Fine-grained Benchmark for Skiing Action Quality Assessment

Nov 13, 2025Abstract:Action Quality Assessment (AQA) aims to evaluate and score sports actions, which has attracted widespread interest in recent years. Existing AQA methods primarily predict scores based on features extracted from the entire video, resulting in limited interpretability and reliability. Meanwhile, existing AQA datasets also lack fine-grained annotations for action scores, especially for deduction items and sub-score annotations. In this paper, we construct the first AQA dataset containing fine-grained sub-score and deduction annotations for aerial skiing, which will be released as a new benchmark. For the technical challenges, we propose a novel AQA method, named JudgeMind, which significantly enhances performance and reliability by simulating the judgment and scoring mindset of professional referees. Our method segments the input action video into different stages and scores each stage to enhance accuracy. Then, we propose a stage-aware feature enhancement and fusion module to boost the perception of stage-specific key regions and enhance the robustness to visual changes caused by frequent camera viewpoints switching. In addition, we propose a knowledge-based grade-aware decoder to incorporate possible deduction items as prior knowledge to predict more accurate and reliable scores. Experimental results demonstrate that our method achieves state-of-the-art performance.

H3Former: Hypergraph-based Semantic-Aware Aggregation via Hyperbolic Hierarchical Contrastive Loss for Fine-Grained Visual Classification

Nov 13, 2025Abstract:Fine-Grained Visual Classification (FGVC) remains a challenging task due to subtle inter-class differences and large intra-class variations. Existing approaches typically rely on feature-selection mechanisms or region-proposal strategies to localize discriminative regions for semantic analysis. However, these methods often fail to capture discriminative cues comprehensively while introducing substantial category-agnostic redundancy. To address these limitations, we propose H3Former, a novel token-to-region framework that leverages high-order semantic relations to aggregate local fine-grained representations with structured region-level modeling. Specifically, we propose the Semantic-Aware Aggregation Module (SAAM), which exploits multi-scale contextual cues to dynamically construct a weighted hypergraph among tokens. By applying hypergraph convolution, SAAM captures high-order semantic dependencies and progressively aggregates token features into compact region-level representations. Furthermore, we introduce the Hyperbolic Hierarchical Contrastive Loss (HHCL), which enforces hierarchical semantic constraints in a non-Euclidean embedding space. The HHCL enhances inter-class separability and intra-class consistency while preserving the intrinsic hierarchical relationships among fine-grained categories. Comprehensive experiments conducted on four standard FGVC benchmarks validate the superiority of our H3Former framework.

DATR: Diffusion-based 3D Apple Tree Reconstruction Framework with Sparse-View

Aug 27, 2025Abstract:Digital twin applications offered transformative potential by enabling real-time monitoring and robotic simulation through accurate virtual replicas of physical assets. The key to these systems is 3D reconstruction with high geometrical fidelity. However, existing methods struggled under field conditions, especially with sparse and occluded views. This study developed a two-stage framework (DATR) for the reconstruction of apple trees from sparse views. The first stage leverages onboard sensors and foundation models to semi-automatically generate tree masks from complex field images. Tree masks are used to filter out background information in multi-modal data for the single-image-to-3D reconstruction at the second stage. This stage consists of a diffusion model and a large reconstruction model for respective multi view and implicit neural field generation. The training of the diffusion model and LRM was achieved by using realistic synthetic apple trees generated by a Real2Sim data generator. The framework was evaluated on both field and synthetic datasets. The field dataset includes six apple trees with field-measured ground truth, while the synthetic dataset featured structurally diverse trees. Evaluation results showed that our DATR framework outperformed existing 3D reconstruction methods across both datasets and achieved domain-trait estimation comparable to industrial-grade stationary laser scanners while improving the throughput by $\sim$360 times, demonstrating strong potential for scalable agricultural digital twin systems.

Aleks: AI powered Multi Agent System for Autonomous Scientific Discovery via Data-Driven Approaches in Plant Science

Aug 26, 2025Abstract:Modern plant science increasingly relies on large, heterogeneous datasets, but challenges in experimental design, data preprocessing, and reproducibility hinder research throughput. Here we introduce Aleks, an AI-powered multi-agent system that integrates domain knowledge, data analysis, and machine learning within a structured framework to autonomously conduct data-driven scientific discovery. Once provided with a research question and dataset, Aleks iteratively formulated problems, explored alternative modeling strategies, and refined solutions across multiple cycles without human intervention. In a case study on grapevine red blotch disease, Aleks progressively identified biologically meaningful features and converged on interpretable models with robust performance. Ablation studies underscored the importance of domain knowledge and memory for coherent outcomes. This exploratory work highlights the promise of agentic AI as an autonomous collaborator for accelerating scientific discovery in plant sciences.

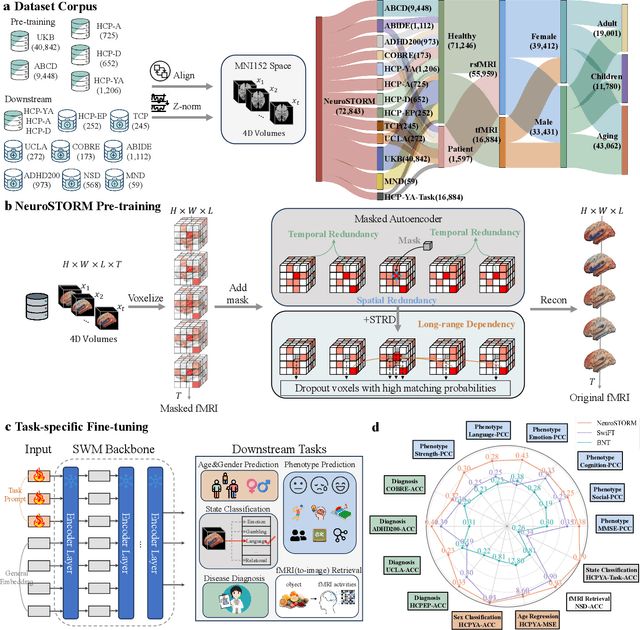

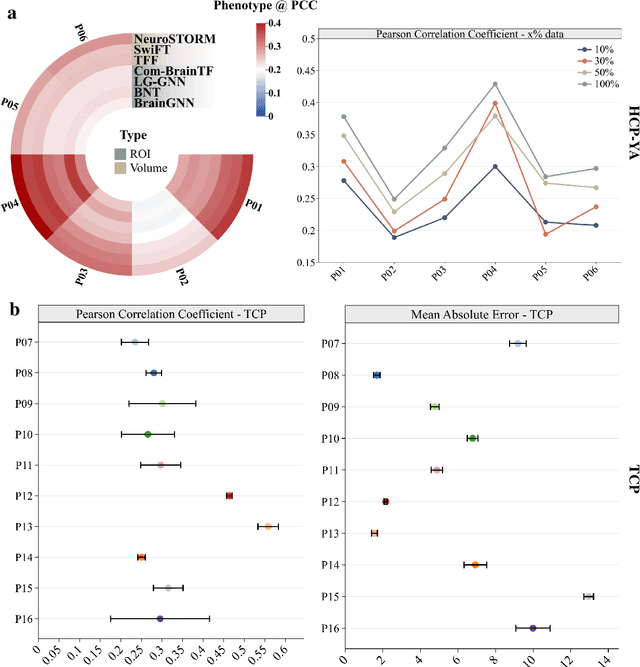

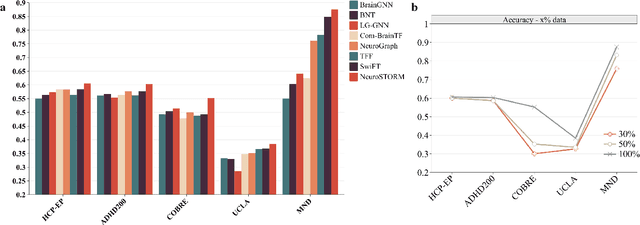

Towards a general-purpose foundation model for fMRI analysis

Jun 11, 2025

Abstract:Functional Magnetic Resonance Imaging (fMRI) is essential for studying brain function and diagnosing neurological disorders, but current analysis methods face reproducibility and transferability issues due to complex pre-processing and task-specific models. We introduce NeuroSTORM (Neuroimaging Foundation Model with Spatial-Temporal Optimized Representation Modeling), a generalizable framework that directly learns from 4D fMRI volumes and enables efficient knowledge transfer across diverse applications. NeuroSTORM is pre-trained on 28.65 million fMRI frames (>9,000 hours) from over 50,000 subjects across multiple centers and ages 5 to 100. Using a Mamba backbone and a shifted scanning strategy, it efficiently processes full 4D volumes. We also propose a spatial-temporal optimized pre-training approach and task-specific prompt tuning to improve transferability. NeuroSTORM outperforms existing methods across five tasks: age/gender prediction, phenotype prediction, disease diagnosis, fMRI-to-image retrieval, and task-based fMRI classification. It demonstrates strong clinical utility on datasets from hospitals in the U.S., South Korea, and Australia, achieving top performance in disease diagnosis and cognitive phenotype prediction. NeuroSTORM provides a standardized, open-source foundation model to improve reproducibility and transferability in fMRI-based clinical research.

KITINet: Kinetics Theory Inspired Network Architectures with PDE Simulation Approaches

May 23, 2025Abstract:Despite the widely recognized success of residual connections in modern neural networks, their design principles remain largely heuristic. This paper introduces KITINet (Kinetics Theory Inspired Network), a novel architecture that reinterprets feature propagation through the lens of non-equilibrium particle dynamics and partial differential equation (PDE) simulation. At its core, we propose a residual module that models feature updates as the stochastic evolution of a particle system, numerically simulated via a discretized solver for the Boltzmann transport equation (BTE). This formulation mimics particle collisions and energy exchange, enabling adaptive feature refinement via physics-informed interactions. Additionally, we reveal that this mechanism induces network parameter condensation during training, where parameters progressively concentrate into a sparse subset of dominant channels. Experiments on scientific computation (PDE operator), image classification (CIFAR-10/100), and text classification (IMDb/SNLI) show consistent improvements over classic network baselines, with negligible increase of FLOPs.

Neural Co-Optimization of Structural Topology, Manufacturable Layers, and Path Orientations for Fiber-Reinforced Composites

Apr 30, 2025Abstract:We propose a neural network-based computational framework for the simultaneous optimization of structural topology, curved layers, and path orientations to achieve strong anisotropic strength in fiber-reinforced thermoplastic composites while ensuring manufacturability. Our framework employs three implicit neural fields to represent geometric shape, layer sequence, and fiber orientation. This enables the direct formulation of both design and manufacturability objectives - such as anisotropic strength, structural volume, machine motion control, layer curvature, and layer thickness - into an integrated and differentiable optimization process. By incorporating these objectives as loss functions, the framework ensures that the resultant composites exhibit optimized mechanical strength while remaining its manufacturability for filament-based multi-axis 3D printing across diverse hardware platforms. Physical experiments demonstrate that the composites generated by our co-optimization method can achieve an improvement of up to 33.1% in failure loads compared to composites with sequentially optimized structures and manufacturing sequences.

Joint 3D Point Cloud Segmentation using Real-Sim Loop: From Panels to Trees and Branches

Mar 07, 2025Abstract:Modern orchards are planted in structured rows with distinct panel divisions to improve management. Accurate and efficient joint segmentation of point cloud from Panel to Tree and Branch (P2TB) is essential for robotic operations. However, most current segmentation methods focus on single instance segmentation and depend on a sequence of deep networks to perform joint tasks. This strategy hinders the use of hierarchical information embedded in the data, leading to both error accumulation and increased costs for annotation and computation, which limits its scalability for real-world applications. In this study, we proposed a novel approach that incorporated a Real2Sim L-TreeGen for training data generation and a joint model (J-P2TB) designed for the P2TB task. The J-P2TB model, trained on the generated simulation dataset, was used for joint segmentation of real-world panel point clouds via zero-shot learning. Compared to representative methods, our model outperformed them in most segmentation metrics while using 40% fewer learnable parameters. This Sim2Real result highlighted the efficacy of L-TreeGen in model training and the performance of J-P2TB for joint segmentation, demonstrating its strong accuracy, efficiency, and generalizability for real-world applications. These improvements would not only greatly benefit the development of robots for automated orchard operations but also advance digital twin technology.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge