Xin Xie

HyperAlign: Hypernetwork for Efficient Test-Time Alignment of Diffusion Models

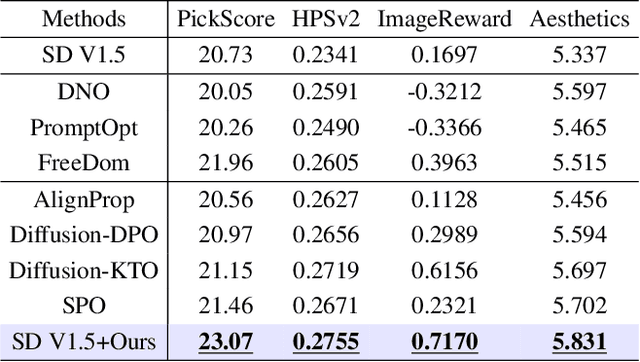

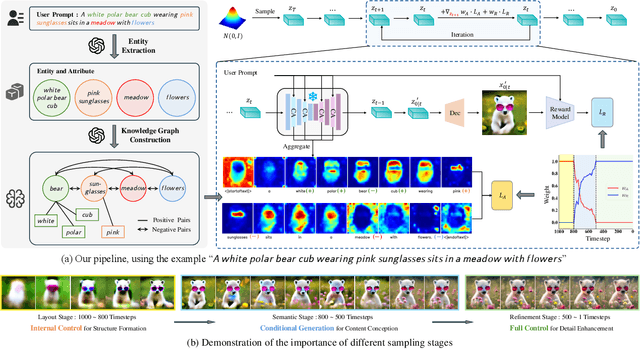

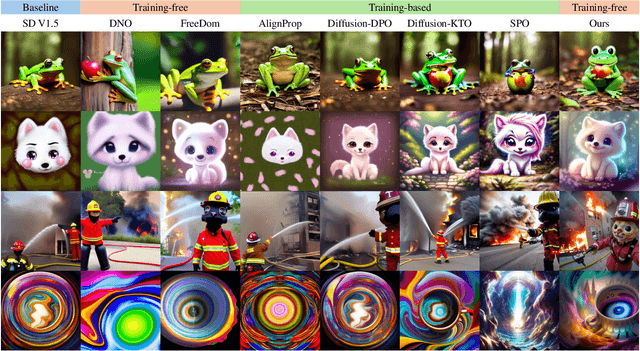

Jan 22, 2026

Abstract:Diffusion models achieve state-of-the-art performance but often fail to generate outputs that align with human preferences and intentions, resulting in images with poor aesthetic quality and semantic inconsistencies. Existing alignment methods present a difficult trade-off: fine-tuning approaches suffer from loss of diversity with reward over-optimization, while test-time scaling methods introduce significant computational overhead and tend to under-optimize. To address these limitations, we propose HyperAlign, a novel framework that trains a hypernetwork for efficient and effective test-time alignment. Instead of modifying latent states, HyperAlign dynamically generates low-rank adaptation weights to modulate the diffusion model's generation operators. This allows the denoising trajectory to be adaptively adjusted based on input latents, timesteps and prompts for reward-conditioned alignment. We introduce multiple variants of HyperAlign that differ in how frequently the hypernetwork is applied, balancing between performance and efficiency. Furthermore, we optimize the hypernetwork using a reward score objective regularized with preference data to reduce reward hacking. We evaluate HyperAlign on multiple extended generative paradigms, including Stable Diffusion and FLUX. It significantly outperforms existing fine-tuning and test-time scaling baselines in enhancing semantic consistency and visual appeal.
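
As a rough illustration of what "dynamically generating low-rank adaptation weights" can look like, the sketch below uses a toy hypernetwork to map a conditioning vector to two low-rank factors `A` and `B`, then applies the adapted layer as `(W + B A) x`. The `ToyHyperNetwork` class, its single linear projection, the dimensions and the conditioning vector are all hypothetical choices made for illustration; the abstract does not specify the architecture, so this is not the paper's implementation.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


class ToyHyperNetwork:
    """Hypothetical stand-in: maps a conditioning vector to LoRA factors A and B."""

    def __init__(self, cond_dim, d_out, d_in, rank, seed=0):
        rng = random.Random(seed)
        self.d_out, self.d_in, self.rank = d_out, d_in, rank
        n_params = rank * d_in + d_out * rank
        # One linear layer without bias, so a zero condition yields a zero update.
        self.proj = [[rng.gauss(0.0, 0.1) for _ in range(cond_dim)]
                     for _ in range(n_params)]

    def __call__(self, cond):
        flat = matvec(self.proj, cond)
        r, d_in, d_out = self.rank, self.d_in, self.d_out
        # First r * d_in entries form A (r x d_in); the rest form B (d_out x r).
        A = [flat[i * d_in:(i + 1) * d_in] for i in range(r)]
        offset = r * d_in
        B = [flat[offset + i * r:offset + (i + 1) * r] for i in range(d_out)]
        return A, B


def adapted_forward(W, A, B, x):
    """Compute (W + B A) x without materializing the d_out x d_in product B A."""
    base = matvec(W, x)
    delta = matvec(B, matvec(A, x))
    return [b + d for b, d in zip(base, delta)]


# Toy frozen layer weight (3 x 4) and input vector.
W = [[1.0, 0.0, 0.0, 0.0],
     [0.0, 1.0, 0.0, 0.0],
     [0.0, 0.0, 1.0, 0.0]]
x = [0.5, -1.0, 2.0, 1.5]

hyper = ToyHyperNetwork(cond_dim=5, d_out=3, d_in=4, rank=2)
# Assumed conditioning: features summarizing latent, timestep and prompt.
cond = [0.2, -0.4, 0.9, 0.1, 0.3]
A, B = hyper(cond)
y = adapted_forward(W, A, B, x)
```

Because the frozen weight `W` is never modified, calling the hypernetwork again with a different conditioning vector yields a different effective layer at no extra storage cost beyond the two small factors.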

Training-Free Motion Customization for Distilled Video Generators with Adaptive Test-Time Distillation

Jun 24, 2025

Abstract:Distilled video generation models offer fast and efficient synthesis but struggle with motion customization when guided by reference videos, especially under training-free settings. Existing training-free methods, originally designed for standard diffusion models, fail to generalize due to the accelerated generative process and large denoising steps in distilled models. To address this, we propose MotionEcho, a novel training-free test-time distillation framework that enables motion customization by leveraging diffusion teacher forcing. Our approach uses high-quality, slow teacher models to guide the inference of fast student models through endpoint prediction and interpolation. To maintain efficiency, we dynamically allocate computation across timesteps according to guidance needs. Extensive experiments across various distilled video generation models and benchmark datasets demonstrate that our method significantly improves motion fidelity and generation quality while preserving high efficiency. Project page: https://euminds.github.io/motionecho/

DeepSeek-V3 Technical Report

Dec 27, 2024

Abstract:We present DeepSeek-V3, a strong Mixture-of-Experts (MoE) language model with 671B total parameters with 37B activated for each token. To achieve efficient inference and cost-effective training, DeepSeek-V3 adopts Multi-head Latent Attention (MLA) and DeepSeekMoE architectures, which were thoroughly validated in DeepSeek-V2. Furthermore, DeepSeek-V3 pioneers an auxiliary-loss-free strategy for load balancing and sets a multi-token prediction training objective for stronger performance. We pre-train DeepSeek-V3 on 14.8 trillion diverse and high-quality tokens, followed by Supervised Fine-Tuning and Reinforcement Learning stages to fully harness its capabilities. Comprehensive evaluations reveal that DeepSeek-V3 outperforms other open-source models and achieves performance comparable to leading closed-source models. Despite its excellent performance, DeepSeek-V3 requires only 2.788M H800 GPU hours for its full training. In addition, its training process is remarkably stable. Throughout the entire training process, we did not experience any irrecoverable loss spikes or perform any rollbacks. The model checkpoints are available at https://github.com/deepseek-ai/DeepSeek-V3.
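
To put the headline numbers in proportion, the short calculation below derives two ratios from the figures quoted in the abstract. The variable names are illustrative, and the second ratio is only an upper bound on pre-training cost, since the GPU-hour figure covers the full training run rather than pre-training alone.

```python
# Figures quoted in the abstract above.
total_params = 671e9        # total parameters
active_params = 37e9        # parameters activated per token
pretrain_tokens = 14.8e12   # pre-training tokens
gpu_hours = 2.788e6         # H800 GPU hours for the full training run

# Share of the model that is active for any single token.
active_fraction = active_params / total_params

# Upper bound only: the GPU-hour figure includes stages after pre-training.
gpu_hours_per_trillion_tokens = gpu_hours / (pretrain_tokens / 1e12)

print(f"activated per token: {active_fraction:.1%}")
print(f"GPU hours per trillion tokens (upper bound): "
      f"{gpu_hours_per_trillion_tokens:,.0f}")
```

Roughly 5.5% of the parameters are active for each token, and the full run works out to at most about 188 thousand H800 GPU hours per trillion pre-training tokens.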

DeepSeek-VL2: Mixture-of-Experts Vision-Language Models for Advanced Multimodal Understanding

Dec 13, 2024

Abstract:We present DeepSeek-VL2, an advanced series of large Mixture-of-Experts (MoE) Vision-Language Models that significantly improves upon its predecessor, DeepSeek-VL, through two major upgrades. For the vision component, we incorporate a dynamic tiling vision encoding strategy designed for processing high-resolution images with different aspect ratios. For the language component, we leverage DeepSeekMoE models with the Multi-head Latent Attention mechanism, which compresses Key-Value cache into latent vectors, to enable efficient inference and high throughput. Trained on an improved vision-language dataset, DeepSeek-VL2 demonstrates superior capabilities across various tasks, including but not limited to visual question answering, optical character recognition, document/table/chart understanding, and visual grounding. Our model series is composed of three variants: DeepSeek-VL2-Tiny, DeepSeek-VL2-Small and DeepSeek-VL2, with 1.0B, 2.8B and 4.5B activated parameters respectively. DeepSeek-VL2 achieves competitive or state-of-the-art performance with similar or fewer activated parameters compared to existing open-source dense and MoE-based models. Codes and pre-trained models are publicly accessible at https://github.com/deepseek-ai/DeepSeek-VL2.

DyMO: Training-Free Diffusion Model Alignment with Dynamic Multi-Objective Scheduling

Dec 03, 2024

Abstract:Text-to-image diffusion model alignment is critical for improving the alignment between the generated images and human preferences. While training-based methods are constrained by high computational costs and dataset requirements, training-free alignment methods remain underexplored and are often limited by inaccurate guidance. We propose a plug-and-play training-free alignment method, DyMO, for aligning the generated images and human preferences during inference. Apart from text-aware human preference scores, we introduce a semantic alignment objective for enhancing the semantic alignment in the early stages of diffusion, relying on the fact that the attention maps are effective reflections of the semantics in noisy images. We propose dynamic scheduling of multiple objectives and intermediate recurrent steps to reflect the requirements at different steps. Experiments with diverse pre-trained diffusion models and metrics demonstrate the effectiveness and robustness of the proposed method.

Learned HDR Image Compression for Perceptually Optimal Storage and Display

Jul 18, 2024

Abstract:High dynamic range (HDR) capture and display have seen significant growth in popularity driven by the advancements in technology and increasing consumer demand for superior image quality. As a result, HDR image compression is crucial to fully realize the benefits of HDR imaging without suffering from large file sizes and inefficient data handling. Conventionally, this is achieved by introducing a residual/gain map as additional metadata to bridge the gap between HDR and low dynamic range (LDR) images, making the former compatible with LDR image codecs but offering suboptimal rate-distortion performance. In this work, we initiate efforts towards end-to-end optimized HDR image compression for perceptually optimal storage and display. Specifically, we learn to compress an HDR image into two bitstreams: one for generating an LDR image to ensure compatibility with legacy LDR displays, and another as side information to aid HDR image reconstruction from the output LDR image. To measure the perceptual quality of output HDR and LDR images, we use two recently proposed image distortion metrics, both validated against human perceptual data of image quality and with reference to the uncompressed HDR image. Through end-to-end optimization for rate-distortion performance, our method dramatically improves HDR and LDR image quality at all bit rates.

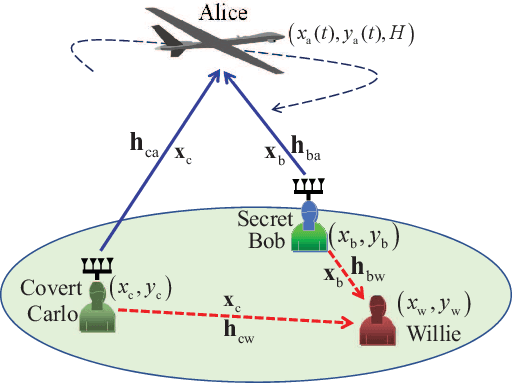

Collaborative Secret and Covert Communications for Multi-User Multi-Antenna Uplink UAV Systems: Design and Optimization

Jul 08, 2024

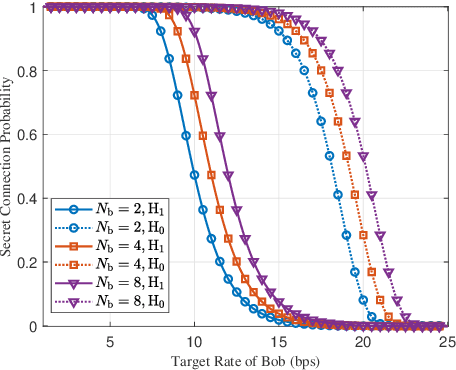

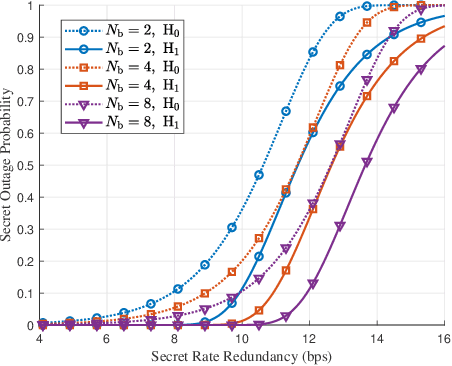

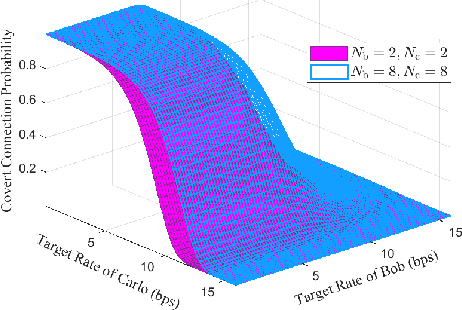

Abstract:Motivated by the diverse security requirements of multiple users in UAV systems, we propose a collaborative secret and covert transmission method for communications from multi-antenna ground users to an unmanned aerial vehicle (UAV). Specifically, based on power-domain non-orthogonal multiple access (NOMA), two ground users with distinct security requirements, named Bob and Carlo, superimpose their signals and transmit the combined signal to the UAV, named Alice. An adversary, Willie, attempts to simultaneously eavesdrop on Bob's confidential message and detect whether Carlo is transmitting. We derive closed-form expressions for the secrecy connection probability (SCP) and the covert connection probability (CCP) to evaluate the link reliability for wiretap and covert transmissions, respectively. Furthermore, we bound the secrecy outage probability (SOP) from Bob to Alice and the detection error probability (DEP) of Willie to evaluate the link security for wiretap and covert transmissions, respectively. To characterize the theoretical benchmark of the above model, we formulate a weighted multi-objective optimization problem that maximizes the average of the secret and covert transmission rates subject to constraints on the SOP, the DEP, the beamformers of Bob and Carlo, and the UAV trajectory parameters. To solve the optimization problem, we propose an iterative algorithm using successive convex approximation and block coordinate descent (SCA-BCD) methods. Our results reveal, analytically and numerically, the influence of the system's design parameters on the wiretap and covert rates. In summary, our study fills the gaps in joint secret and covert transmission for multi-user multi-antenna uplink UAV communications and provides insights for constructing such systems.

DeepSeek-Coder-V2: Breaking the Barrier of Closed-Source Models in Code Intelligence

Jun 17, 2024

Abstract:We present DeepSeek-Coder-V2, an open-source Mixture-of-Experts (MoE) code language model that achieves performance comparable to GPT4-Turbo in code-specific tasks. Specifically, DeepSeek-Coder-V2 is further pre-trained from an intermediate checkpoint of DeepSeek-V2 with an additional 6 trillion tokens. Through this continued pre-training, DeepSeek-Coder-V2 substantially enhances the coding and mathematical reasoning capabilities of DeepSeek-V2, while maintaining comparable performance in general language tasks. Compared to DeepSeek-Coder-33B, DeepSeek-Coder-V2 demonstrates significant advancements in various aspects of code-related tasks, as well as reasoning and general capabilities. Additionally, DeepSeek-Coder-V2 expands its support for programming languages from 86 to 338, while extending the context length from 16K to 128K. In standard benchmark evaluations, DeepSeek-Coder-V2 achieves superior performance compared to closed-source models such as GPT4-Turbo, Claude 3 Opus, and Gemini 1.5 Pro in coding and math benchmarks.

RoNet: Rotation-oriented Continuous Image Translation

Apr 06, 2024

Abstract:The generation of smooth and continuous images between domains has recently drawn much attention in image-to-image (I2I) translation. A linear relationship is the basic assumption in most existing approaches, although it is applied to different aspects, including features, models or labels. However, the linear assumption becomes hard to satisfy as the element dimension increases, and it suffers from the limitation of having to obtain both ends of the line. In this paper, we propose a novel rotation-oriented solution and model the continuous generation with an in-plane rotation over the style representation of an image, achieving a network named RoNet. A rotation module is implanted in the generation network to automatically learn the proper plane while disentangling the content and the style of an image. To encourage realistic texture, we also design a patch-based semantic style loss that learns the different styles of similar objects in different domains. We conduct experiments on forest scenes (where the complex texture makes the generation very challenging), faces, streetscapes and the iphone2dslr task. The results validate the superiority of our method in terms of visual quality and continuity.
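
The idea of an in-plane rotation over a style representation can be sketched with plain vector algebra: pick two orthonormal vectors spanning a plane, rotate the part of the style vector that lies in that plane, and leave the rest unchanged. In the sketch below the plane (`u`, `v`) is fixed by hand and the style vector is made up; in the abstract the plane is learned by a rotation module, which is not reproduced here.

```python
import math


def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def rotate_in_plane(s, u, v, theta):
    """Rotate the part of s lying in span{u, v} by theta (u, v orthonormal)."""
    a, b = dot(s, u), dot(s, v)
    a_rot = a * math.cos(theta) - b * math.sin(theta)
    b_rot = a * math.sin(theta) + b * math.cos(theta)
    # Swap the old in-plane coordinates for the rotated ones; the component
    # of s orthogonal to the plane is left untouched.
    return [si + (a_rot - a) * ui + (b_rot - b) * vi
            for si, ui, vi in zip(s, u, v)]


# Hypothetical hand-picked plane and style vector (4-dimensional toy case).
u = [1.0, 0.0, 0.0, 0.0]
v = [0.0, 1.0, 0.0, 0.0]
style_src = [1.0, 0.0, 0.5, -0.2]

# Sweep the angle from 0 to 90 degrees to get a continuous sequence of styles.
steps = 5
sequence = [rotate_in_plane(style_src, u, v, k * (math.pi / 2) / (steps - 1))
            for k in range(steps)]
```

Unlike linear interpolation, this needs only a starting vector and an angle rather than both endpoints, and every intermediate vector keeps the same norm as the source.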

Reliable long timescale decision-directed channel estimation for OFDM system

Feb 18, 2024

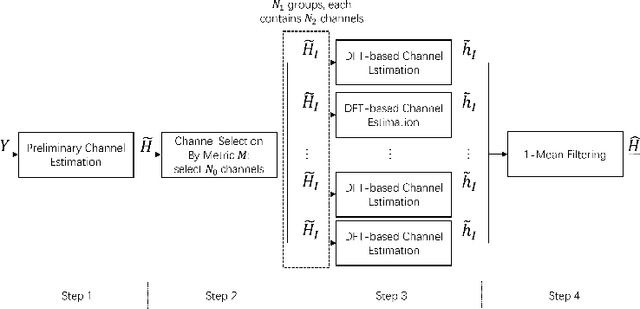

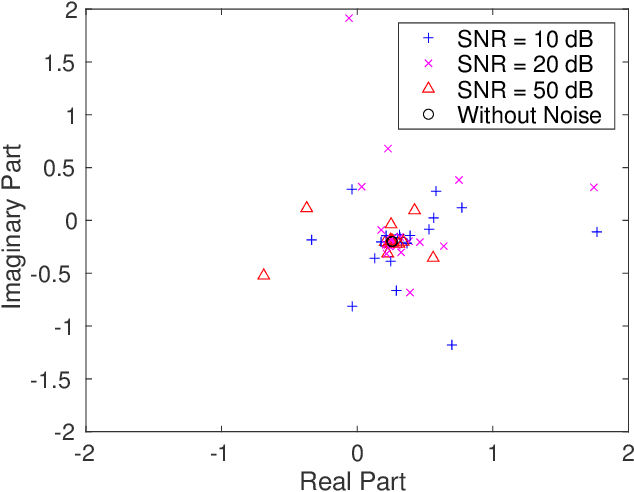

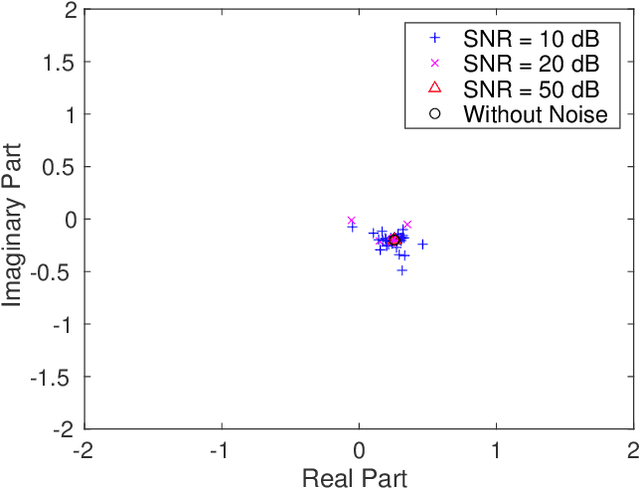

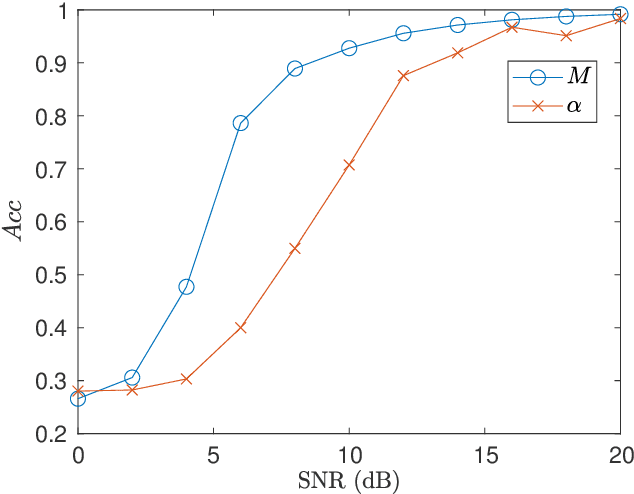

Abstract:Decision-directed channel estimation (DDCE) is a blind channel estimation method that tracks the channel with an iterative algorithm without relying on pilots, which can increase the utilization of wireless resources. However, one major problem of DDCE is the performance degradation caused by error accumulation during the tracking process. In this paper, we propose a reliable DDCE (RDDCE) scheme for an OFDM-based communication system in a time-varying deep fading environment. By combining conventional DDCE with the discrete Fourier transform (DFT) channel estimation method, the proposed RDDCE scheme selects the reliable estimated channels on the subcarriers that are less affected by deep fading, and then estimates the channel from the selected subcarriers using an extended DFT channel estimation in which the indices of the selected subcarriers are not evenly distributed. Simulation results show that RDDCE can alleviate the performance degradation effectively, track the channel with high accuracy on a long time scale, and perform well under time-varying and noisy channel conditions.
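
A single decision-directed update followed by a reliability check can be sketched as below. This is a minimal illustration under stated assumptions: QPSK symbols, a noise-free channel, and a simple gain threshold as the reliability rule, none of which are given in the abstract. The extended DFT estimation over the selected subcarriers is not shown.

```python
import math

# Unit-energy QPSK constellation (assumed modulation).
QPSK = [complex(a, b) / math.sqrt(2) for a in (1, -1) for b in (1, -1)]


def hard_decision(symbol):
    """Return the QPSK constellation point closest to the equalized symbol."""
    return min(QPSK, key=lambda p: abs(symbol - p))


def ddce_step(received, h_prev):
    """One decision-directed update per subcarrier: equalize, decide, re-estimate."""
    h_new = []
    for y, h in zip(received, h_prev):
        x_hat = hard_decision(y / h)   # decision made with the previous estimate
        h_new.append(y / x_hat)        # treat the decision as if it were a pilot
    return h_new


def select_reliable(h_est, threshold):
    """Assumed reliability rule: keep subcarriers whose gain exceeds a threshold."""
    return [k for k, h in enumerate(h_est) if abs(h) >= threshold]


# Noise-free toy example with four subcarriers; subcarrier 2 is in a deep fade.
h_true = [1.0 + 0.2j, 0.8 - 0.5j, 0.05 + 0.02j, -0.9 + 0.4j]
sent = [QPSK[0], QPSK[3], QPSK[1], QPSK[2]]
received = [h * x for h, x in zip(h_true, sent)]

h_prev = [h * (1 + 0.05j) for h in h_true]   # slightly outdated previous estimate
h_est = ddce_step(received, h_prev)
reliable = select_reliable(h_est, threshold=0.3)
```

With noise, a wrong decision on a faded subcarrier would feed an incorrect estimate into the next update, which is the error accumulation the abstract describes; dropping low-gain subcarriers before re-estimation is one way to limit that.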
