Xiaoguang Li

ReThinker: Scientific Reasoning by Rethinking with Guided Reflection and Confidence Control

Feb 04, 2026

Abstract: Expert-level scientific reasoning remains challenging for large language models, particularly on benchmarks such as Humanity's Last Exam (HLE), where rigid tool pipelines, brittle multi-agent coordination, and inefficient test-time scaling often limit performance. We introduce ReThinker, a confidence-aware agentic framework that orchestrates retrieval, tool use, and multi-agent reasoning through a stage-wise Solver-Critic-Selector architecture. Rather than following a fixed pipeline, ReThinker dynamically allocates computation based on model confidence, enabling adaptive tool invocation, guided multi-dimensional reflection, and robust confidence-weighted selection. To support scalable training without human annotation, we further propose a reverse data synthesis pipeline and an adaptive trajectory recycling strategy that transform successful reasoning traces into high-quality supervision. Experiments on HLE, GAIA, and XBench demonstrate that ReThinker consistently outperforms state-of-the-art foundation models with tools and existing deep research systems, achieving state-of-the-art results on expert-level reasoning tasks.
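The abstract does not spell out how confidence-weighted selection is computed. As a minimal sketch of the general idea, and not ReThinker's actual Selector, the snippet below sums a confidence score per distinct answer and returns the answer with the largest total. The function name `select_answer`, the `(answer, confidence)` pair format, and the example scores are all hypothetical.

```python
from collections import defaultdict


def select_answer(candidates):
    """Return the answer with the largest summed confidence.

    `candidates` is a list of (answer, confidence) pairs, with confidence
    assumed to lie in [0, 1]. This is an illustrative rule only; the paper's
    Selector may work differently.
    """
    if not candidates:
        raise ValueError("need at least one candidate")
    totals = defaultdict(float)
    for answer, confidence in candidates:
        totals[answer] += confidence
    # max() keeps the first maximal key, so ties go to the answer seen first.
    return max(totals, key=totals.get)


# One confident solver says "A"; two unsure solvers say "B".
candidates = [("A", 0.9), ("B", 0.3), ("B", 0.4)]
print(select_answer(candidates))  # prints "A"
```

In this example a plain majority vote would pick "B" (two votes to one), while weighting by confidence picks "A" (0.9 against 0.7), which is the kind of difference such a selection rule is meant to capture.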

Pangu DeepDiver: Adaptive Search Intensity Scaling via Open-Web Reinforcement Learning

May 30, 2025

Abstract: Information seeking demands iterative evidence gathering and reflective reasoning, yet large language models (LLMs) still struggle with it in open-web question answering. Existing methods rely on static prompting rules or training with Wikipedia-based corpora and retrieval environments, limiting adaptability to the real-world web environment where ambiguity, conflicting evidence, and noise are prevalent. These constrained training settings hinder LLMs from learning to dynamically decide when and where to search, and how to adjust search depth and frequency based on informational demands. We define this missing capacity as Search Intensity Scaling (SIS)--the emergent skill to intensify search efforts under ambiguous or conflicting conditions, rather than settling on overconfident, under-verified answers. To study SIS, we introduce WebPuzzle, the first dataset designed to foster information-seeking behavior in open-world internet environments. WebPuzzle consists of 24K training instances and 275 test questions spanning both wiki-based and open-web queries. Building on this dataset, we propose DeepDiver, a Reinforcement Learning (RL) framework that promotes SIS by encouraging adaptive search policies through exploration in a real-world open-web environment. Experimental results show that Pangu-7B-Reasoner empowered by DeepDiver achieves performance on real-web tasks comparable to the 671B-parameter DeepSeek-R1. We detail DeepDiver's training curriculum, from cold-start supervised fine-tuning to a carefully designed RL phase, and show that its SIS capability generalizes from closed-form QA to open-ended tasks such as long-form writing. Our contributions advance adaptive information seeking in LLMs and provide a valuable benchmark and dataset for future research.

MTGR: Industrial-Scale Generative Recommendation Framework in Meituan

May 24, 2025

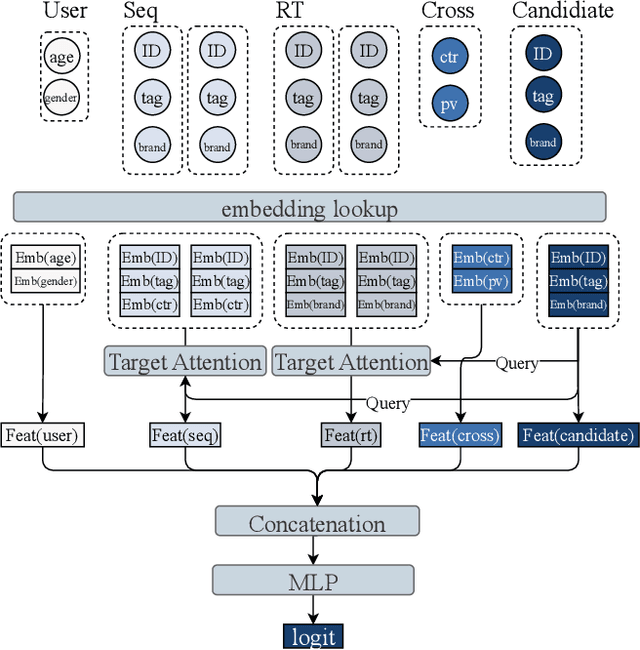

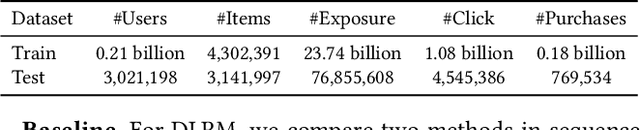

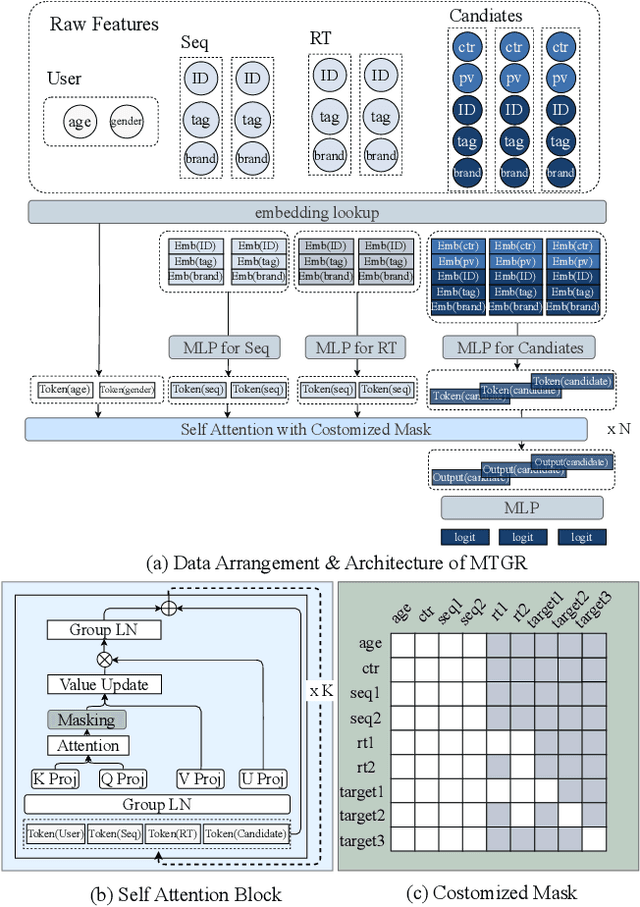

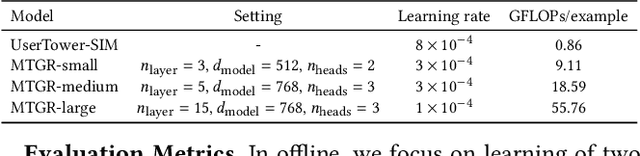

Abstract: Scaling laws have been extensively validated in many domains such as natural language processing and computer vision. In recommendation systems, recent work has adopted generative recommendation to achieve scalability, but these generative approaches require abandoning the carefully constructed cross features of traditional recommendation models. We found that dropping these features significantly degrades model performance, and scaling up cannot compensate for it at all. In this paper, we propose MTGR (Meituan Generative Recommendation) to address this issue. MTGR is built on the HSTU architecture and can retain the original deep learning recommendation model (DLRM) features, including cross features. Additionally, MTGR achieves training and inference acceleration through user-level compression to ensure efficient scaling. We also propose Group-Layer Normalization (GLN) to enhance the performance of encoding within different semantic spaces and a dynamic masking strategy to avoid information leakage. We further optimize the training framework, enabling support for models with 10 to 100 times the computational complexity of the DLRM without significant cost increases. MTGR uses 65x the FLOPs of the DLRM model for single-sample forward inference, resulting in the largest gain in nearly two years both offline and online. This breakthrough was successfully deployed on Meituan, the world's largest food delivery platform, where it now handles the main traffic.

DPSeg: Dual-Prompt Cost Volume Learning for Open-Vocabulary Semantic Segmentation

May 16, 2025

Abstract: Open-vocabulary semantic segmentation aims to segment images into distinct semantic regions for both seen and unseen categories at the pixel level. Current methods utilize text embeddings from pre-trained vision-language models like CLIP but struggle with the inherent domain gap between image and text embeddings, even after extensive alignment during training. Additionally, relying solely on deep text-aligned features limits shallow-level feature guidance, which is crucial for detecting small objects and fine details, ultimately reducing segmentation accuracy. To address these limitations, we propose a dual prompting framework, DPSeg, for this task. Our approach combines dual-prompt cost volume generation, a cost volume-guided decoder, and a semantic-guided prompt refinement strategy that leverages our dual prompting scheme to mitigate alignment issues in visual prompt generation. By incorporating visual embeddings from a visual prompt encoder, our approach reduces the domain gap between text and image embeddings while providing multi-level guidance through shallow features. Extensive experiments demonstrate that our method significantly outperforms existing state-of-the-art approaches on multiple public datasets.

DocPuzzle: A Process-Aware Benchmark for Evaluating Realistic Long-Context Reasoning Capabilities

Feb 25, 2025

Abstract: We present DocPuzzle, a rigorously constructed benchmark for evaluating long-context reasoning capabilities in large language models (LLMs). This benchmark comprises 100 expert-level QA problems requiring multi-step reasoning over long real-world documents. To ensure task quality and complexity, we implement a human-AI collaborative annotation-validation pipeline. DocPuzzle introduces an innovative evaluation framework that mitigates guessing bias through checklist-guided process analysis, establishing new standards for assessing reasoning capacities in LLMs. Our evaluation results show that: 1) advanced slow-thinking reasoning models like o1-preview (69.7%) and DeepSeek-R1 (66.3%) significantly outperform the best general instruct models like Claude 3.5 Sonnet (57.7%); 2) distilled reasoning models like DeepSeek-R1-Distill-Qwen-32B (41.3%) fall far behind the teacher model, suggesting that it is difficult to maintain the generalization of reasoning capabilities when relying solely on distillation.

More Tokens, Lower Precision: Towards the Optimal Token-Precision Trade-off in KV Cache Compression

Dec 17, 2024

Abstract: As large language models (LLMs) process increasingly long context windows, the memory usage of the KV cache has become a critical bottleneck during inference. The mainstream KV compression methods, including KV pruning and KV quantization, primarily focus on either the token or the precision dimension and seldom explore the efficiency of their combination. In this paper, we comprehensively investigate the token-precision trade-off in KV cache compression. Experiments demonstrate that storing more tokens in the KV cache with lower precision, i.e., quantized pruning, can significantly enhance the long-context performance of LLMs. Furthermore, in-depth analysis of the token-precision trade-off across a series of key aspects shows that quantized pruning achieves substantial improvements in retrieval-related tasks and consistently performs well across varying input lengths. Moreover, quantized pruning demonstrates notable stability across different KV pruning methods, quantization strategies, and model scales. These findings provide valuable insights into the token-precision trade-off in KV cache compression. We plan to release our code in the near future.
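The token-precision trade-off comes down to simple memory arithmetic: under a fixed byte budget, halving the bits per element doubles the number of tokens whose keys and values fit in the cache. The sketch below works this out. The model dimensions (32 layers, 32 KV heads, head dimension 128) are illustrative assumptions, not values from the paper, and the calculation ignores quantization metadata such as scales and zero points, which reduces the real gain somewhat.

```python
def kv_cache_bytes(tokens, bits, layers=32, kv_heads=32, head_dim=128):
    """Bytes needed to cache keys and values for `tokens` tokens.

    The default dimensions are hypothetical. Quantization metadata
    (scales, zero points) is ignored.
    """
    elements = 2 * layers * kv_heads * head_dim * tokens  # 2 = keys + values
    return elements * bits // 8


def tokens_within_budget(budget_bytes, bits, **dims):
    """How many tokens fit in `budget_bytes` at `bits` bits per element."""
    per_token = kv_cache_bytes(1, bits, **dims)
    return budget_bytes // per_token


# Budget: a 16-bit cache holding 1024 tokens (512 MiB with these dimensions).
budget = kv_cache_bytes(1024, 16)
for bits in (16, 8, 4):
    print(bits, tokens_within_budget(budget, bits))
# prints:
# 16 1024
# 8 2048
# 4 4096
```

Whether keeping 4096 tokens at 4 bits actually beats keeping 1024 tokens at 16 bits is the empirical question the paper studies; the arithmetic only shows which configurations cost the same memory.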

Quasi-Newton OMP Approach for Super-Resolution Channel Estimation and Extrapolation

Nov 09, 2024

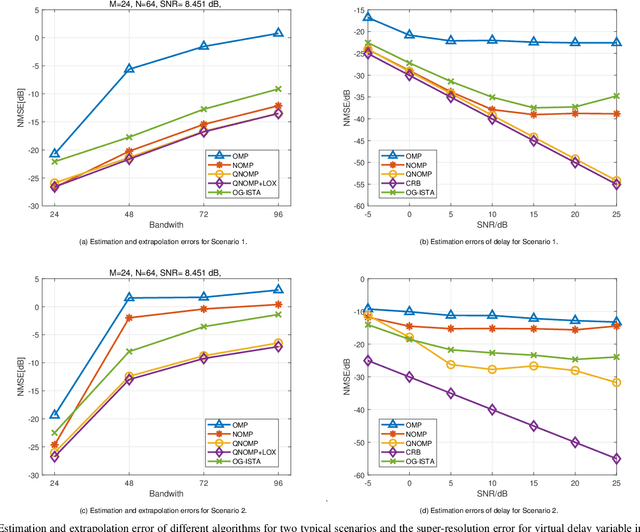

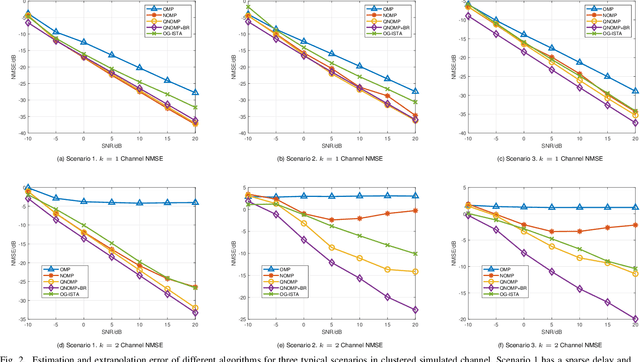

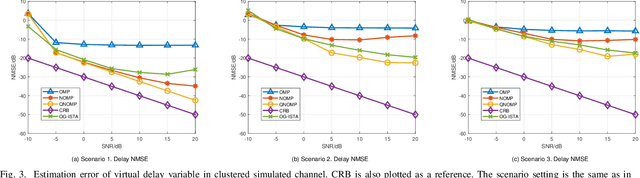

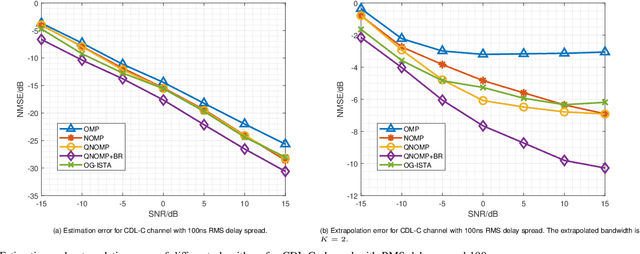

Abstract: Channel estimation and extrapolation are fundamental issues in MIMO communication systems. In this paper, we proposed the quasi-Newton orthogonal matching pursuit (QNOMP) approach to address these issues with high efficiency while maintaining accuracy. The algorithm performs super-resolution recovery in two stages: we first performed a cheap on-grid OMP estimation of channel parameters in the sparsity domain (e.g., delay or angle), then an off-grid optimization to achieve super-resolution. In the off-grid stage, we employed the BFGS quasi-Newton method to jointly estimate the parameters through a multipath model, which improved the speed and accuracy significantly. Furthermore, we derived the optimal extrapolated solution under the linear minimum mean squared error (LMMSE) estimator criterion, revealed its connection with the Slepian basis, and presented a practical algorithm to realize the extrapolation based on the QNOMP results. Special treatment utilizing the block-sparse nature of the considered channels was also proposed. Numerical experiments on simulated models and CDL-C channels demonstrated the high performance and low computational complexity of QNOMP.

DMRA: An Adaptive Line Spectrum Estimation Method through Dynamical Multi-Resolution of Atoms

Sep 01, 2024

Abstract: We proposed a novel dense line spectrum super-resolution algorithm, the DMRA, that leverages a dynamical multi-resolution of atoms technique to address the limitations of traditional compressed sensing methods when handling dense point-source signals. The algorithm utilizes a smooth $\tanh$ relaxation function to replace the $\ell_0$ norm, promoting sparsity and jointly estimating the frequency atoms and complex gains. To reduce computational complexity and improve frequency estimation accuracy, a two-stage strategy was further introduced to dynamically adjust the number of optimized degrees of freedom. The strategy first increases candidate frequencies through local refinement, then applies a sparse selector to eliminate insignificant frequencies, thereby adaptively adjusting the degrees of freedom to improve estimation accuracy. Theoretical analysis was provided to validate the proposed method for multi-parameter estimation. Computational results demonstrated that this algorithm achieves good super-resolution performance in various practical scenarios and outperforms state-of-the-art methods in terms of frequency estimation accuracy and computational efficiency.
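The abstract does not give the exact form of the $\tanh$ relaxation. One common shape for such a surrogate, assumed here purely for illustration, is $\sum_i \tanh(|x_i|/\sigma)$: each term is 0 for a zero entry and approaches 1 for any nonzero entry as $\sigma \to 0$, so the sum approaches the $\ell_0$ count while staying smooth in $x$ for a fixed $\sigma > 0$. The function names and the parameter `sigma` below are hypothetical.

```python
import math


def l0_norm(x):
    """Exact count of nonzero entries (not differentiable)."""
    return sum(1 for v in x if v != 0)


def tanh_sparsity(x, sigma):
    """Smooth surrogate for the l0 norm: sum of tanh(|x_i| / sigma).

    Assumed form for illustration; the paper's relaxation may differ.
    Works for real or complex entries because abs() returns the magnitude.
    """
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    return sum(math.tanh(abs(v) / sigma) for v in x)


gains = [0.0, 3.0, 0.0, -2.0]
print(l0_norm(gains))  # prints 2
for sigma in (10.0, 1.0, 0.01):
    # The surrogate tightens toward the l0 count as sigma shrinks.
    print(sigma, tanh_sparsity(gains, sigma))
```

A larger `sigma` gives a flatter, easier-to-optimize penalty, while a smaller `sigma` approximates the count more closely, which is the usual trade-off with this family of relaxations.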

DePrompt: Desensitization and Evaluation of Personal Identifiable Information in Large Language Model Prompts

Aug 16, 2024

Abstract: Prompts serve as a crucial link in interacting with large language models (LLMs), widely impacting the accuracy and interpretability of model outputs. However, acquiring accurate and high-quality responses necessitates precise prompts, which inevitably pose significant risks of personally identifiable information (PII) leakage. Therefore, this paper proposes DePrompt, a desensitization protection and effectiveness evaluation framework for prompts, enabling users to safely and transparently utilize LLMs. Specifically, by leveraging large model fine-tuning techniques as the underlying privacy protection method, we integrate contextual attributes to define privacy types, achieving high-precision PII entity identification. Additionally, through the analysis of key features in prompt desensitization scenarios, we devise adversarial generative desensitization methods that retain important semantic content while disrupting the link between identifiers and privacy attributes. Furthermore, we present utility evaluation metrics for prompts to better gauge and balance privacy and usability. Our framework is adaptable to prompts and can be extended to text usability-dependent scenarios. Through comparison with benchmarks and other model methods, experimental evaluations demonstrate that our desensitized prompts exhibit superior privacy protection utility and model inference results.

Leveraging Adaptive Implicit Representation Mapping for Ultra High-Resolution Image Segmentation

Jul 31, 2024

Abstract: Implicit representation mapping (IRM) can translate image features to any continuous resolution, showcasing its potent capability for ultra-high-resolution image segmentation refinement. Current IRM-based methods for refining ultra-high-resolution image segmentation often rely on CNN-based encoders to extract image features and apply a Shared Implicit Representation Mapping Function (SIRMF) to convert pixel-wise features into segmented results. Hence, these methods exhibit two crucial limitations. Firstly, the CNN-based encoder may not effectively capture long-distance information, resulting in a lack of global semantic information in the pixel-wise features. Secondly, SIRMF is shared across all samples, which limits its ability to generalize and handle diverse inputs. To address these limitations, we propose a novel approach that leverages the newly proposed Adaptive Implicit Representation Mapping (AIRM) for ultra-high-resolution image segmentation. Specifically, the proposed method comprises two components: (1) the Affinity Empowered Encoder (AEE), a robust feature extractor that leverages the benefits of the transformer architecture and semantic affinity to model long-distance features effectively, and (2) the Adaptive Implicit Representation Mapping Function (AIRMF), which adaptively translates pixel-wise features without neglecting the global semantic information, allowing for flexible and precise feature translation. We evaluated our method on the commonly used ultra-high-resolution segmentation refinement datasets, i.e., BIG and PASCAL VOC 2012. The extensive experiments demonstrate that our method outperforms competitors by a large margin. The code is provided in supplementary material.
