Rui Zhou

Machine Learning with Multitype Protected Attributes: Intersectional Fairness through Regularisation

Sep 09, 2025

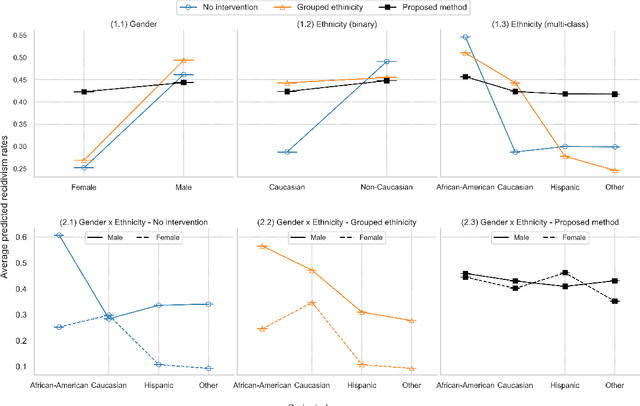

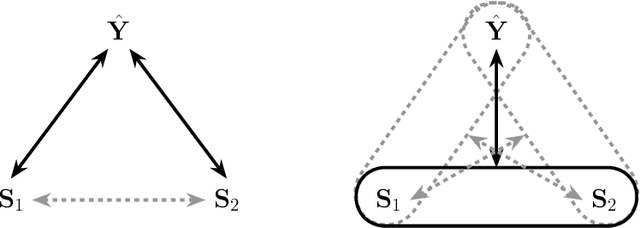

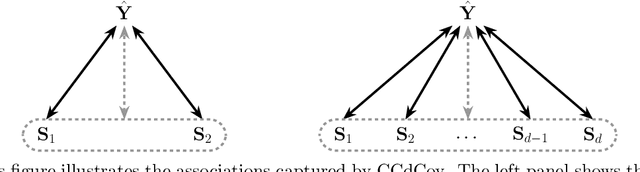

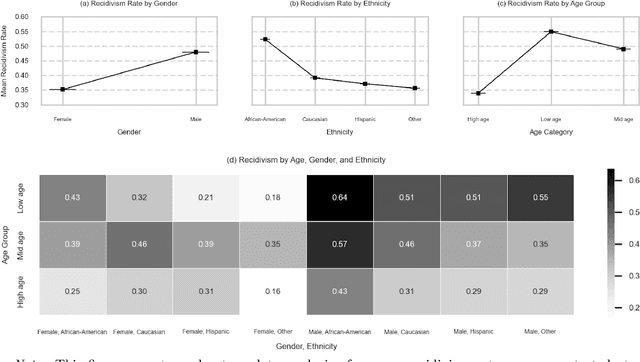

Abstract: Ensuring equitable treatment (fairness) across protected attributes (such as gender or ethnicity) is a critical issue in machine learning. Most existing literature focuses on binary classification, but achieving fairness in regression tasks, such as insurance pricing or hiring score assessments, is equally important. Moreover, anti-discrimination laws also apply to continuous attributes, such as age, for which many existing methods are not applicable. In practice, multiple protected attributes can exist simultaneously; however, methods targeting fairness across several attributes often overlook so-called "fairness gerrymandering", thereby ignoring disparities among intersectional subgroups (e.g., African-American women or Hispanic men). In this paper, we propose a distance covariance regularisation framework that mitigates the association between model predictions and protected attributes, in line with the fairness definition of demographic parity, and that captures both linear and nonlinear dependencies. To enhance applicability in the presence of multiple protected attributes, we extend our framework by incorporating two multivariate dependence measures based on distance covariance: the previously proposed joint distance covariance (JdCov) and our novel concatenated distance covariance (CCdCov), which effectively address fairness gerrymandering in both regression and classification tasks involving protected attributes of various types. We discuss and illustrate how to calibrate regularisation strength, including a method based on Jensen-Shannon divergence, which quantifies dissimilarities in prediction distributions across groups. We apply our framework to the COMPAS recidivism dataset and a large motor insurance claims dataset.
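To make the two quantities named in this abstract concrete, here is a minimal sketch in plain Python. It is not the paper's implementation: it covers only the basic one-dimensional sample distance covariance (not JdCov or CCdCov), uses mean squared error as an assumed base loss, and all function names are hypothetical.

```python
import math


def _centred_distances(values):
    """Pairwise |v_i - v_j| matrix, double-centred by row, column and grand mean."""
    n = len(values)
    dist = [[abs(vi - vj) for vj in values] for vi in values]
    row_means = [sum(row) / n for row in dist]
    grand_mean = sum(row_means) / n
    # The distance matrix is symmetric, so column means equal row means.
    return [
        [dist[i][j] - row_means[i] - row_means[j] + grand_mean for j in range(n)]
        for i in range(n)
    ]


def distance_covariance_sq(x, y):
    """Squared sample distance covariance (V-statistic) of two scalar samples."""
    if len(x) != len(y) or not x:
        raise ValueError("x and y must be non-empty and of equal length")
    n = len(x)
    a = _centred_distances(x)
    b = _centred_distances(y)
    return sum(a[i][j] * b[i][j] for i in range(n) for j in range(n)) / (n * n)


def regularised_loss(predictions, targets, protected, strength):
    """Assumed objective: MSE plus strength * dCov^2(predictions, protected)."""
    mse = sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)
    return mse + strength * distance_covariance_sq(predictions, protected)


def jensen_shannon_divergence(p, q):
    """JS divergence in bits (range [0, 1]) between two discrete distributions."""
    def kl(u, v):
        return sum(ui * math.log2(ui / vi) for ui, vi in zip(u, v) if ui > 0)

    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)
```

For the samples `x = [-1, 0, 1]` and `y = [1, 0, 1]` (that is, `y = x**2`), the Pearson covariance is zero, yet `distance_covariance_sq(x, y)` equals 8/81, which illustrates the nonlinear dependence the abstract refers to. The Jensen-Shannon function expects per-group prediction histograms that have already been normalised to sum to one.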

Large Model Empowered Embodied AI: A Survey on Decision-Making and Embodied Learning

Aug 14, 2025

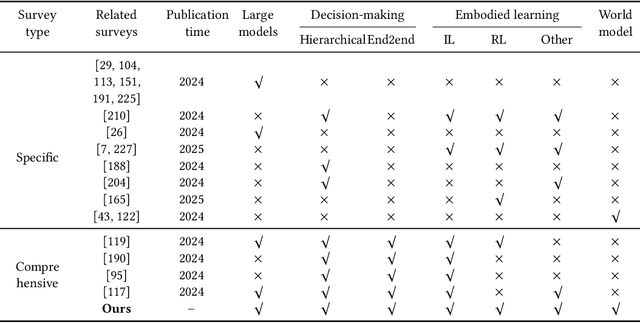

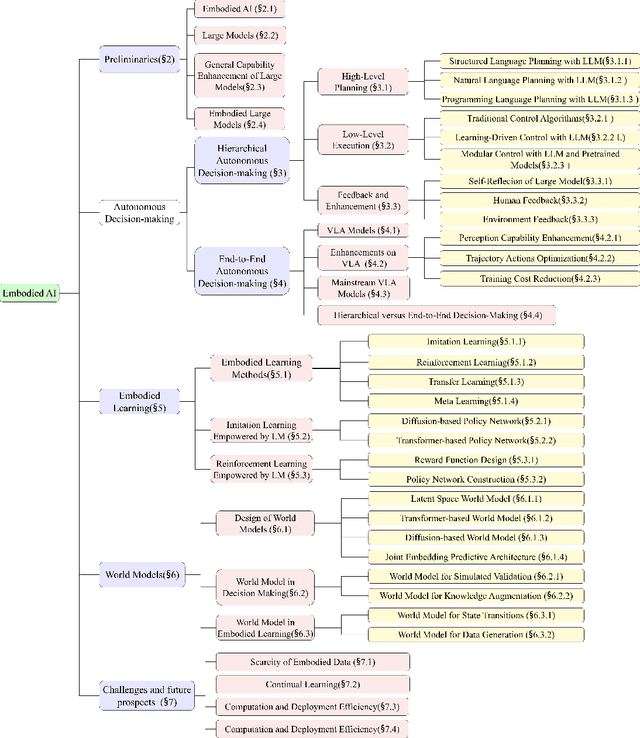

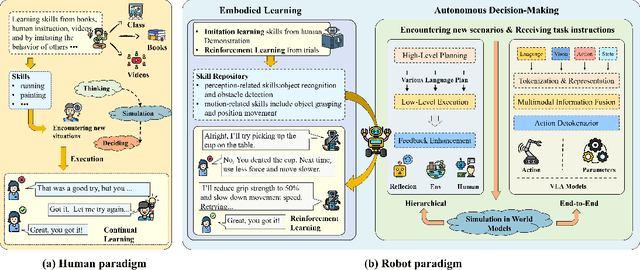

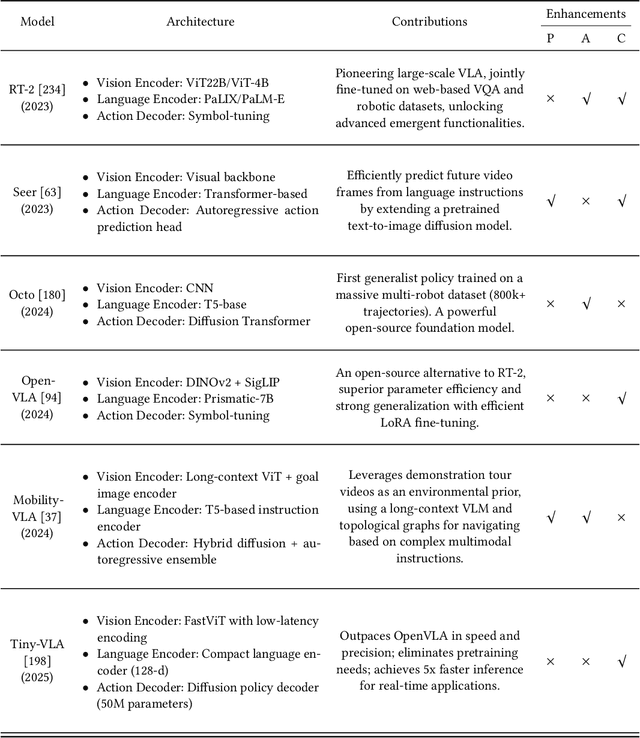

Abstract: Embodied AI aims to develop intelligent systems with physical forms capable of perception, decision-making, action, and learning in real-world environments, providing a promising path toward Artificial General Intelligence (AGI). Despite decades of exploration, it remains challenging for embodied agents to achieve human-level intelligence for general-purpose tasks in open dynamic environments. Recent breakthroughs in large models have revolutionized embodied AI by enhancing perception, interaction, planning, and learning. In this article, we provide a comprehensive survey on large model empowered embodied AI, focusing on autonomous decision-making and embodied learning. We investigate both hierarchical and end-to-end decision-making paradigms, detailing how large models enhance high-level planning, low-level execution, and feedback for hierarchical decision-making, and how large models enhance Vision-Language-Action (VLA) models for end-to-end decision-making. For embodied learning, we introduce mainstream learning methodologies, elaborating in depth on how large models enhance imitation learning and reinforcement learning. For the first time, we integrate world models into the survey of embodied AI, presenting their design methods and critical roles in enhancing decision-making and learning. Though solid advances have been achieved, challenges still exist; these are discussed at the end of this survey as potential directions for future research.

Multi-Task Multi-Agent Reinforcement Learning via Skill Graphs

Jul 09, 2025

Abstract: Multi-task multi-agent reinforcement learning (MT-MARL) has recently gained attention for its potential to enhance MARL's adaptability across multiple tasks. However, it is challenging for existing multi-task learning methods to handle complex problems, as they are unable to handle unrelated tasks and possess limited knowledge transfer capabilities. In this paper, we propose a hierarchical approach that efficiently addresses these challenges. The high-level module utilizes a skill graph, while the low-level module employs a standard MARL algorithm. Our approach offers two contributions. First, we consider the MT-MARL problem in the context of unrelated tasks, expanding the scope of MTRL. Second, the skill graph is used as the upper layer of the standard hierarchical approach, with training independent of the lower layer, effectively handling unrelated tasks and enhancing knowledge transfer capabilities. Extensive experiments are conducted to validate these advantages and demonstrate that the proposed method outperforms the latest hierarchical MAPPO algorithms. Videos and code are available at https://github.com/WindyLab/MT-MARL-SG

GenHSI: Controllable Generation of Human-Scene Interaction Videos

Jun 24, 2025

Abstract: Large-scale pre-trained video diffusion models have exhibited remarkable capabilities in diverse video generation. However, existing solutions face several challenges in using these models to generate long movie-like videos with rich human-object interactions, including unrealistic human-scene interaction, lack of subject identity preservation, and the need for expensive training. We propose GenHSI, a training-free method for controllable generation of long human-scene interaction (HSI) videos. Taking inspiration from movie animation, our key insight is to overcome the limitations of previous work by subdividing the long video generation task into three stages: (1) script writing, (2) pre-visualization, and (3) animation. Given an image of a scene, a user description, and multiple images of a person, we use these three stages to generate long videos that preserve human identity and provide rich human-scene interactions. Script writing converts complex human tasks into simple atomic tasks that are used in the pre-visualization stage to generate 3D keyframes (storyboards). These 3D keyframes are rendered and animated by off-the-shelf video diffusion models for consistent long video generation with rich contacts in a 3D-aware manner. A key advantage of our work is that we alleviate the need for scanned, accurate scenes and create 3D keyframes from single-view images. We are the first to generate a long video sequence with a consistent camera pose that contains arbitrary numbers of character actions without training. Experiments demonstrate that our method can generate long videos that effectively preserve scene content and character identity with plausible human-scene interaction from a single image scene. Visit our project homepage https://kunkun0w0.github.io/project/GenHSI/ for more information.

GenSwarm: Scalable Multi-Robot Code-Policy Generation and Deployment via Language Models

Mar 31, 2025

Abstract: The development of control policies for multi-robot systems traditionally follows a complex and labor-intensive process, often lacking the flexibility to adapt to dynamic tasks. This has motivated research on methods to automatically create control policies. However, these methods require iterative processes of manually crafting and refining objective functions, thereby prolonging the development cycle. This work introduces GenSwarm, an end-to-end system that leverages large language models to automatically generate and deploy control policies for multi-robot tasks based on simple user instructions in natural language. As a multi-language-agent system, GenSwarm achieves zero-shot learning, enabling rapid adaptation to altered or unseen tasks. The white-box nature of the code policies ensures strong reproducibility and interpretability. With its scalable software and hardware architectures, GenSwarm supports efficient policy deployment on both simulated and real-world multi-robot systems, realizing an instruction-to-execution end-to-end functionality that could prove valuable for robotics specialists and non-specialists alike. The code of the proposed GenSwarm system is available online: https://github.com/WindyLab/GenSwarm.

CleanMel: Mel-Spectrogram Enhancement for Improving Both Speech Quality and ASR

Feb 27, 2025

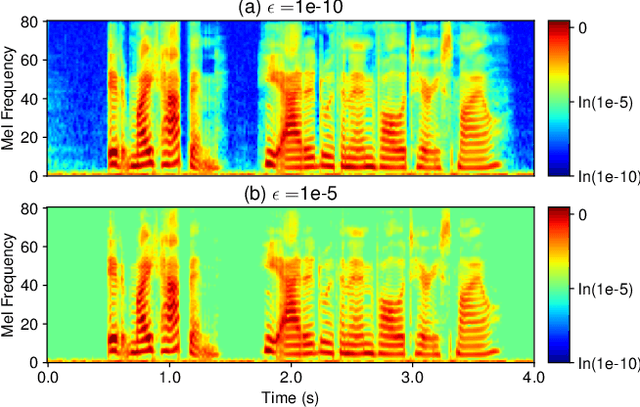

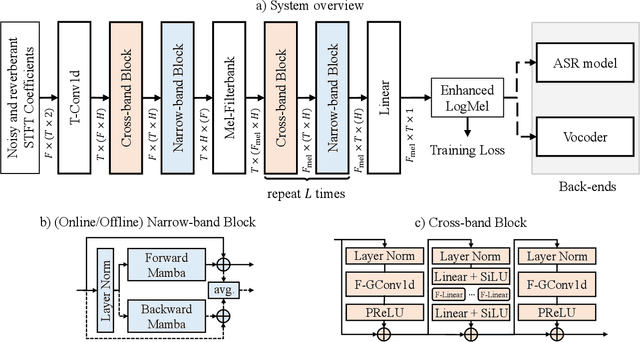

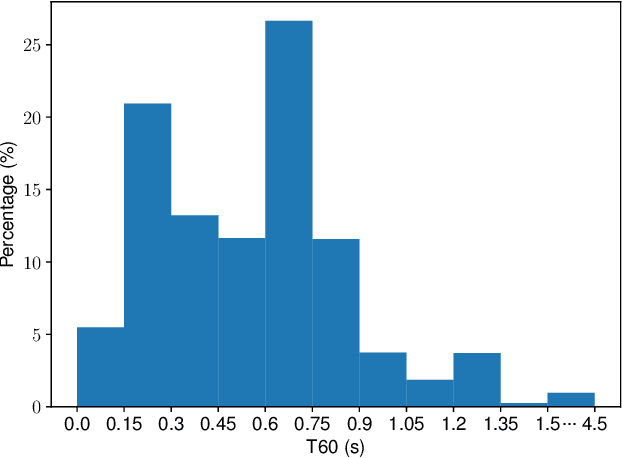

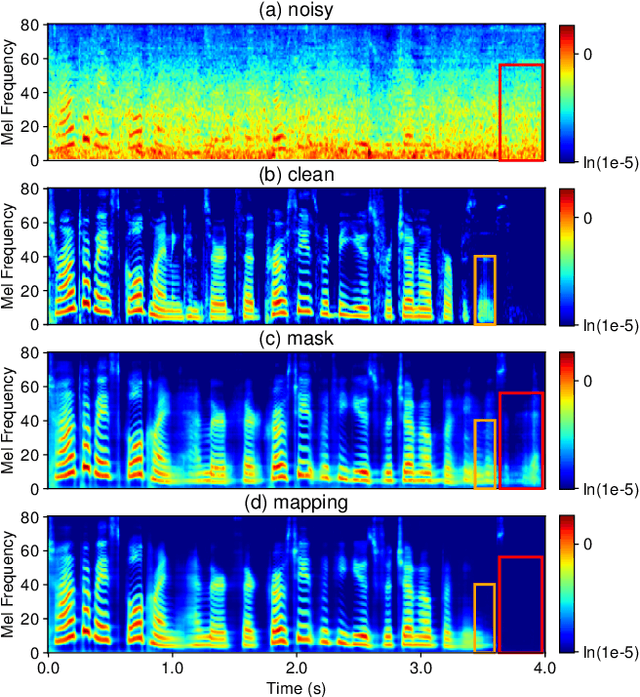

Abstract: In this work, we propose CleanMel, a single-channel Mel-spectrogram denoising and dereverberation network for improving both speech quality and automatic speech recognition (ASR) performance. The proposed network takes as input the noisy and reverberant microphone recording and predicts the corresponding clean Mel-spectrogram. The enhanced Mel-spectrogram can be either transformed to a speech waveform with a neural vocoder or directly used for ASR. The proposed network is composed of interleaved cross-band and narrow-band processing in the Mel-frequency domain, for learning the full-band spectral pattern and the narrow-band properties of signals, respectively. Compared to linear-frequency domain or time-domain speech enhancement, the key advantage of Mel-spectrogram enhancement is that Mel-frequency presents speech in a more compact way and thus is easier to learn, which will benefit both speech quality and ASR. Experimental results on four English datasets and one Chinese dataset demonstrate a significant improvement in both speech quality and ASR performance achieved by the proposed model. Code and audio examples of our model are available online at https://audio.westlake.edu.cn/Research/CleanMel.html.
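As a rough illustration of why the Mel scale is a more compact representation, the sketch below uses the widely used HTK-style conversion, mel = 2595 · log10(1 + f / 700). This formula is an assumption made for illustration; the abstract does not say which Mel variant or filterbank CleanMel uses, and the function names are hypothetical.

```python
import math


def hz_to_mel(freq_hz):
    """HTK-style Hz-to-mel conversion (assumed variant)."""
    return 2595.0 * math.log10(1.0 + freq_hz / 700.0)


def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_band_edges(n_bands, f_min_hz, f_max_hz):
    """Band edges in Hz that are equally spaced on the mel scale."""
    lo, hi = hz_to_mel(f_min_hz), hz_to_mel(f_max_hz)
    step = (hi - lo) / n_bands
    return [mel_to_hz(lo + k * step) for k in range(n_bands + 1)]
```

Bands that are equally wide in mel become progressively wider in Hz, so a small number of Mel bands can cover the high-frequency range that would otherwise take many linear-frequency bins.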

DGSense: A Domain Generalization Framework for Wireless Sensing

Feb 12, 2025

Abstract: Wireless sensing is of great benefit to our daily lives. However, wireless signals are sensitive to their surroundings. Various factors, e.g., environments, locations, and individuals, may induce extra impact on wireless propagation. Such a change can be regarded as a domain, in which the data distribution shifts. The vast majority of sensing schemes are learning-based. They depend on the training domains, resulting in performance degradation in unseen domains. Researchers have proposed various solutions to address this issue, but these solutions leverage either semi-supervised or unsupervised domain adaptation techniques. They still require some data from the target domains and do not perform well in unseen domains. In this paper, we propose a domain generalization framework, DGSense, to eliminate the domain dependence problem in wireless sensing. The framework is a general solution that works across diverse sensing tasks and wireless technologies. Once the sensing model is built, it can generalize to unseen domains without any data from the target domain. To achieve this goal, we first increase the diversity of the training set with a virtual data generator, and then extract domain-independent features via episodic training between the main feature extractor and the domain feature extractors. The feature extractors employ a pre-trained Residual Network (ResNet) with an attention mechanism for spatial features, and a 1D Convolutional Neural Network (1DCNN) for temporal features. To demonstrate the effectiveness and generality of DGSense, we evaluated it on WiFi gesture recognition, Millimeter Wave (mmWave) activity recognition, and acoustic fall detection. All the systems exhibited high generalization capability to unseen domains, including new users, locations, and environments, without new data or retraining.

Leveraging LLM Agents for Automated Optimization Modeling for SASP Problems: A Graph-RAG based Approach

Jan 30, 2025

Abstract: Automated optimization modeling (AOM) has evoked considerable interest with the rapid evolution of large language models (LLMs). Existing approaches predominantly rely on prompt engineering, utilizing meticulously designed expert response chains or structured guidance. However, prompt-based techniques have failed to perform well in the sensor array signal processing (SASP) area due to the lack of specific domain knowledge. To address this issue, we propose an automated modeling approach based on the retrieval-augmented generation (RAG) technique, which consists of two principal components: a multi-agent (MA) structure and a graph-based RAG (Graph-RAG) process. The MA structure is tailored for the architectural AOM process, with each agent being designed based on principles of the human modeling procedure. The Graph-RAG process serves to match user queries with specific SASP modeling knowledge, thereby enhancing the modeling result. Results on ten classical signal processing problems demonstrate that the proposed approach (termed MAG-RAG) outperforms several AOM benchmarks.

MapExpert: Online HD Map Construction with Simple and Efficient Sparse Map Element Expert

Dec 17, 2024

Abstract: Constructing online High-Definition (HD) maps is crucial for the static environment perception of autonomous driving systems (ADS). Existing solutions typically attempt to detect vectorized HD map elements with unified models; however, these methods often overlook the distinct characteristics of different non-cubic map elements, making accurate distinction challenging. To address these issues, we introduce an expert-based online HD map method, termed MapExpert. MapExpert utilizes sparse experts, distributed by our routers, to describe various non-cubic map elements accurately. Additionally, we propose an auxiliary balance loss function to distribute the load evenly across experts. Furthermore, we theoretically analyze the limitations of prevalent bird's-eye view (BEV) feature temporal fusion methods and introduce an efficient temporal fusion module called Learnable Weighted Moving Descentage. This module effectively integrates relevant historical information into the final BEV features. Combined with an enhanced slice head branch, the proposed MapExpert achieves state-of-the-art performance and maintains good efficiency on both nuScenes and Argoverse2 datasets.
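The abstract does not give the form of the auxiliary balance loss. As a hedged illustration of the general idea, the sketch below uses a common load-balancing formulation from sparse mixture-of-experts models (number of experts times the sum over experts of routed fraction times mean router probability). This is a substituted, well-known technique and not necessarily MapExpert's loss; the function name and input layout are hypothetical.

```python
def load_balance_loss(router_probs):
    """Auxiliary load-balancing loss for top-1 routing (assumed formulation).

    router_probs: one list of per-expert probabilities for each routed element.
    Returns n_experts * sum_e(fraction_routed[e] * mean_prob[e]); the value is
    1.0 when routing is perfectly uniform and grows as experts are overloaded.
    """
    n_items = len(router_probs)
    n_experts = len(router_probs[0])

    # Fraction of elements whose highest-probability expert is e.
    counts = [0] * n_experts
    for probs in router_probs:
        chosen = max(range(n_experts), key=probs.__getitem__)
        counts[chosen] += 1
    fraction_routed = [c / n_items for c in counts]

    # Mean router probability assigned to each expert.
    mean_prob = [
        sum(probs[e] for probs in router_probs) / n_items for e in range(n_experts)
    ]

    return n_experts * sum(f * m for f, m in zip(fraction_routed, mean_prob))
```

With two experts, evenly split routing such as `[[0.6, 0.4], [0.4, 0.6]]` gives a loss of 1.0, while routing that collapses onto a single expert, such as `[[0.9, 0.1], [0.8, 0.2]]`, gives 1.7, so minimising this term pushes the router toward an even load.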

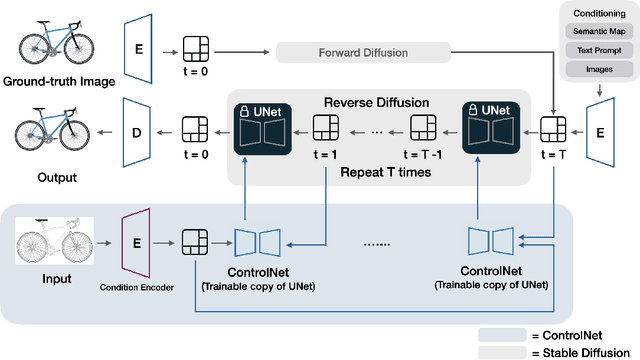

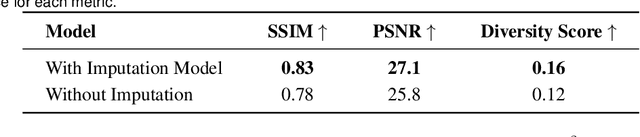

Parametric-ControlNet: Multimodal Control in Foundation Models for Precise Engineering Design Synthesis

Dec 06, 2024

Abstract: This paper introduces a generative model designed for multimodal control over text-to-image foundation generative AI models such as Stable Diffusion, specifically tailored for engineering design synthesis. Our model proposes parametric, image, and text control modalities to enhance design precision and diversity. Firstly, it handles both partial and complete parametric inputs using a diffusion model that acts as a design autocomplete co-pilot, coupled with a parametric encoder to process the information. Secondly, the model utilizes assembly graphs to systematically assemble input component images, which are then processed through a component encoder to capture essential visual data. Thirdly, textual descriptions are integrated via CLIP encoding, ensuring a comprehensive interpretation of design intent. These diverse inputs are synthesized through a multimodal fusion technique, creating a joint embedding that acts as the input to a module inspired by ControlNet. This integration allows the model to apply robust multimodal control to foundation models, facilitating the generation of complex and precise engineering designs. This approach broadens the capabilities of AI-driven design tools and demonstrates significant advancements in precise control based on diverse data modalities for enhanced design generation.
