Jie Zou

Unleashing the Potential of Neighbors: Diffusion-based Latent Neighbor Generation for Session-based Recommendation

Jan 07, 2026

Abstract: Session-based recommendation aims to predict the next item that an anonymous user may be interested in, based on the interactions in the current session. Recent studies have demonstrated that retrieving neighbor sessions to augment the current session can effectively alleviate data sparsity and improve recommendation performance. However, existing methods typically rely on explicitly observed session data and neglect latent neighbors (neighbors that are not directly observed but potentially relevant within the interest space), thereby failing to fully exploit the potential of neighbor sessions in recommendation. To address this limitation, we propose a novel model of diffusion-based latent neighbor generation for session-based recommendation, named DiffSBR. Specifically, DiffSBR leverages two diffusion modules, retrieval-augmented diffusion and self-augmented diffusion, to generate high-quality latent neighbors. In the retrieval-augmented diffusion module, we leverage retrieved neighbors as guiding signals to constrain and reconstruct the distribution of latent neighbors. Meanwhile, we adopt a training strategy that enables the retriever to learn from the feedback provided by the generator. In the self-augmented diffusion module, we explicitly guide the generation of latent neighbors by injecting the current session's multi-modal signals through contrastive learning. After obtaining the generated latent neighbors, we utilize them to enhance session representations and thereby improve session-based recommendation. Extensive experiments on four public datasets show that DiffSBR generates effective latent neighbors and improves recommendation performance over state-of-the-art baselines.

HS-SLAM: A Fast and Hybrid Strategy-Based SLAM Approach for Low-Speed Autonomous Driving

May 27, 2025

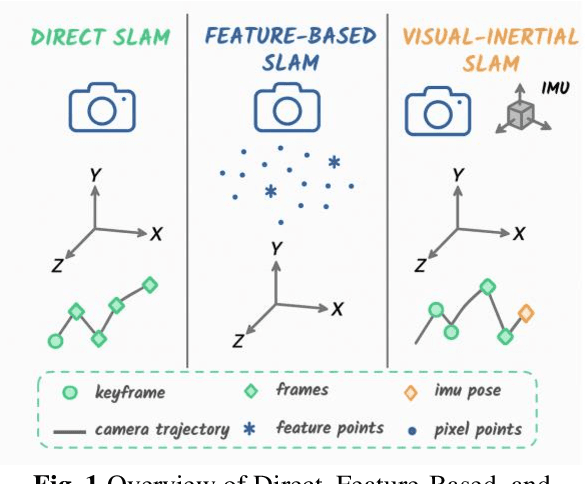

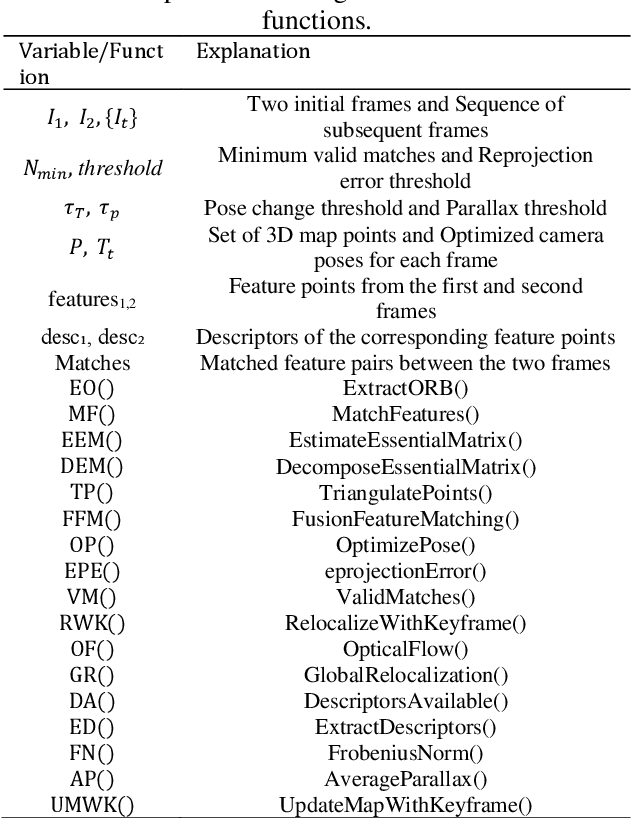

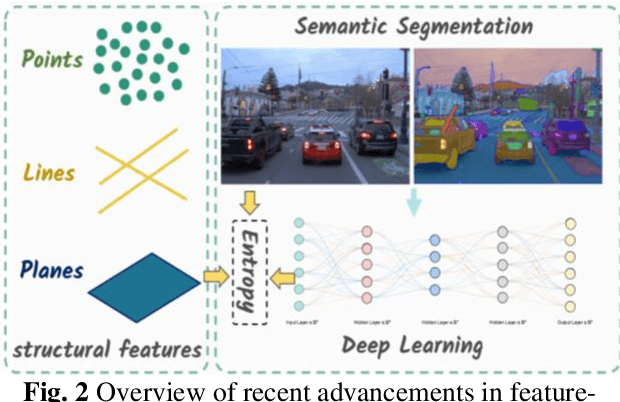

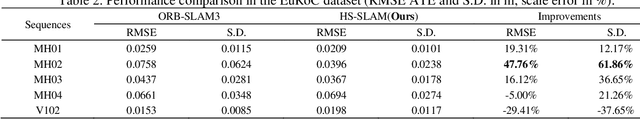

Abstract: Visual-inertial simultaneous localization and mapping (SLAM) is a key module of robotics and low-speed autonomous vehicles, but its high computational burden often limits practical applications. To this end, HS-SLAM, an innovative strategy-based hybrid framework, is proposed to integrate the advantages of direct and feature-based methods for fast computation without degrading performance. It first estimates the relative positions of consecutive frames using IMU pose estimation within the tracking thread. Then, it refines these estimates through a multi-layer direct method, which progressively corrects the relative pose from coarse to fine, ultimately achieving accurate corner-based feature matching. This approach serves as an alternative to the conventional constant-velocity tracking model. By selectively bypassing descriptor extraction for non-critical frames, HS-SLAM significantly improves the tracking speed. Experimental evaluations on the EuRoC MAV dataset demonstrate that HS-SLAM achieves higher localization accuracy than ORB-SLAM3 while improving the average tracking efficiency by 15%.

Beyond Whole Dialogue Modeling: Contextual Disentanglement for Conversational Recommendation

Apr 24, 2025

Abstract: Conversational recommender systems aim to provide personalized recommendations by analyzing and utilizing contextual information related to the dialogue. However, existing methods typically model the dialogue context as a whole, neglecting the inherent complexity and entanglement within the dialogue. Specifically, a dialogue comprises both focus information and background information, which mutually influence each other. Current methods tend to model these two types of information in a mixed manner, which leads to misinterpretation of users' actual needs and lowers the accuracy of recommendations. To address this issue, this paper proposes a novel model, named DisenCRS, that introduces contextual disentanglement to improve conversational recommender systems. DisenCRS employs a dual disentanglement framework, including self-supervised contrastive disentanglement and counterfactual inference disentanglement, to effectively distinguish focus information and background information from the dialogue context under unsupervised conditions. Moreover, we design an adaptive prompt learning module to automatically select the most suitable prompt based on the specific dialogue context, fully leveraging the power of large language models. Experimental results on two widely used public datasets demonstrate that DisenCRS significantly outperforms existing conversational recommendation models, achieving superior performance on both item recommendation and response generation tasks.

Multi-Type Context-Aware Conversational Recommender Systems via Mixture-of-Experts

Apr 18, 2025

Abstract: Conversational recommender systems enable natural language conversations and thus lead to a more engaging and effective recommendation scenario. As the conversations for recommender systems usually contain limited contextual information, many existing conversational recommender systems incorporate external sources to enrich the contextual information. However, how to combine different types of contextual information is still a challenge. In this paper, we propose a multi-type context-aware conversational recommender system, called MCCRS, which effectively fuses multi-type contextual information via mixture-of-experts to improve conversational recommender systems. MCCRS incorporates both structured information and unstructured information, including the structured knowledge graph, unstructured conversation history, and unstructured item reviews. It consists of several experts, with each expert specialized in a particular domain (i.e., one specific type of contextual information). Multiple experts are then coordinated by a ChairBot to generate the final results. MCCRS takes advantage of different types of contextual information, and the specialization of different experts, followed by coordination through the ChairBot, breaks the model's bottleneck on any single type of contextual information. Experimental results demonstrate that our proposed MCCRS method achieves significantly higher performance compared to existing baselines.
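To make the mixture-of-experts idea concrete, here is a minimal sketch of a generic gated fusion of per-expert item scores. It is not the MCCRS implementation: the abstract does not say how the ChairBot coordinates experts, so the softmax gate, the function names (`softmax`, `fuse_expert_scores`), and all score values below are hypothetical.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_expert_scores(expert_scores, gate_logits):
    """Combine per-expert item scores as a softmax-weighted sum.

    expert_scores: one list of item scores per expert (same length each).
    gate_logits:   one unnormalized gate value per expert (assumed input).
    """
    weights = softmax(gate_logits)
    n_items = len(expert_scores[0])
    return [
        sum(w * scores[i] for w, scores in zip(weights, expert_scores))
        for i in range(n_items)
    ]


# Hypothetical scores from three experts over three candidate items.
kg_scores = [0.2, 0.7, 0.1]        # knowledge-graph expert
history_scores = [0.5, 0.4, 0.1]   # conversation-history expert
review_scores = [0.1, 0.8, 0.1]    # item-review expert

# Equal gate logits give each expert the same weight.
fused = fuse_expert_scores(
    [kg_scores, history_scores, review_scores], [0.0, 0.0, 0.0]
)
best_item = max(range(len(fused)), key=fused.__getitem__)
```

In this sketch the gate logits are fixed by hand; in a learned system they would typically depend on the dialogue context.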

MSCRS: Multi-modal Semantic Graph Prompt Learning Framework for Conversational Recommender Systems

Apr 15, 2025

Abstract: Conversational Recommender Systems (CRSs) aim to provide personalized recommendations by interacting with users through conversations. Most existing studies of CRS focus on extracting user preferences from conversational contexts. However, due to the short and sparse nature of conversational contexts, it is difficult to fully capture user preferences from conversational contexts alone. We argue that multi-modal semantic information can enrich user preference expressions from diverse dimensions (e.g., a user preference for a certain movie may stem from its magnificent visual effects and compelling storyline). In this paper, we propose a multi-modal semantic graph prompt learning framework for CRS, named MSCRS. First, we extract textual and image features of items mentioned in the conversational contexts. Second, we capture higher-order semantic associations within different semantic modalities (collaborative, textual, and image) by constructing modality-specific graph structures. Finally, we propose an innovative integration of multi-modal semantic graphs with prompt learning, harnessing the power of large language models to comprehensively explore high-dimensional semantic relationships. Experimental results demonstrate that our proposed method significantly improves accuracy in item recommendation and generates more natural and contextually relevant content in response generation. We have released the code and the expanded multi-modal CRS datasets to facilitate further exploration in related research: https://github.com/BIAOBIAO12138/MSCRS-main

PSCon: Toward Conversational Product Search

Feb 19, 2025

Abstract: Conversational Product Search (CPS) is confined to simulated conversations due to the lack of real-world CPS datasets that reflect human-like language. Additionally, current conversational datasets offer limited support for cross-market and multi-lingual usage. In this paper, we introduce a new CPS data collection protocol and present PSCon, a novel CPS dataset designed to assist product search via human-like conversations. The dataset is constructed using a coached human-to-human data collection protocol and supports two languages and dual markets. The dataset also enables thorough exploration of six subtasks of CPS: user intent detection, keyword extraction, system action prediction, question selection, item ranking, and response generation. Furthermore, we offer an analysis of the dataset and propose a benchmark model on the proposed CPS dataset.

Knowledge-Enhanced Conversational Recommendation via Transformer-based Sequential Modelling

Dec 03, 2024

Abstract: In conversational recommender systems (CRSs), conversations usually involve a set of items and item-related entities or attributes, e.g., a director is a related entity of a movie. These items and item-related entities are often mentioned as a dialog develops, leading to potential sequential dependencies among them. However, most existing CRSs neglect these potential sequential dependencies. In this article, we first propose a Transformer-based sequential conversational recommendation method, named TSCR, to model the sequential dependencies in the conversations to improve CRS. In TSCR, we represent conversations by items and the item-related entities, and construct user sequences to discover user preferences by considering both the mentioned items and item-related entities. Based on the constructed sequences, we deploy a Cloze task to predict the recommended items along a sequence. Meanwhile, in certain domains, knowledge graphs formed by the items and their related entities are readily available, which provide various kinds of associations among them. Given that TSCR does not benefit from such knowledge graphs, we then propose a knowledge graph enhanced version of TSCR, called TSCRKG. Specifically, we leverage the knowledge graph to initialize our model TSCRKG offline, and augment the user sequence of conversations (i.e., the sequence of the mentioned items and item-related entities in the conversation) with multi-hop paths in the knowledge graph. Experimental results demonstrate that our TSCR model significantly outperforms state-of-the-art baselines, and the enhanced version TSCRKG further improves recommendation performance on top of TSCR.
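As an illustration of the Cloze-style objective, here is a minimal sketch that builds one training example by masking an item in a sequence of items and item-related entities. It is not the TSCR implementation: the abstract does not specify the masking scheme, so the mask token, the helper name `make_cloze_example`, and the toy sequence are hypothetical.

```python
import random

MASK = "[MASK]"  # hypothetical mask token


def make_cloze_example(sequence, item_ids, rng):
    """Mask one randomly chosen item; return (masked_seq, position, target).

    Only tokens in item_ids are eligible, so entities (e.g. directors)
    stay visible as context for predicting the masked item.
    """
    item_positions = [i for i, tok in enumerate(sequence) if tok in item_ids]
    if not item_positions:
        raise ValueError("sequence contains no items to mask")
    pos = rng.choice(item_positions)
    masked = list(sequence)
    target = masked[pos]
    masked[pos] = MASK
    return masked, pos, target


# Toy user sequence mixing items (movies) and one item-related entity.
sequence = ["movie:Inception", "director:Christopher Nolan", "movie:Interstellar"]
item_ids = {"movie:Inception", "movie:Interstellar"}

masked, pos, target = make_cloze_example(sequence, item_ids, random.Random(0))
```

A model trained on such examples would be asked to recover `target` at position `pos` from the remaining tokens in `masked`.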

Learning to Ask: Conversational Product Search via Representation Learning

Nov 18, 2024

Abstract: Online shopping platforms, such as Amazon and AliExpress, are increasingly prevalent in society, helping customers purchase products conveniently. With recent progress in natural language processing, researchers and practitioners have shifted their focus from traditional product search to conversational product search. Conversational product search enables user-machine conversations and through them collects explicit user feedback that makes it possible to actively clarify users' product preferences. Therefore, prospective research on an intelligent shopping assistant via conversations is indispensable. Existing publications on conversational product search either model conversations independently from users, queries, and products or lead to a vocabulary mismatch. In this work, we propose a new conversational product search model, ConvPS, to assist users in locating desirable items. The model is first trained to jointly learn the semantic representations of user, query, item, and conversation via a unified generative framework. After these representations are learned, they are integrated to retrieve the target items in the latent semantic space. Meanwhile, we propose a set of greedy and explore-exploit strategies to learn to ask the user a sequence of high-performance questions for conversations. Our proposed ConvPS model can naturally integrate the representation learning of the user, query, item, and conversation into a unified generative framework, which provides a promising avenue for constructing accurate and robust conversational product search systems that are flexible and adaptive. Experimental results demonstrate that our ConvPS model significantly outperforms state-of-the-art baselines.

NoRA: Nested Low-Rank Adaptation for Efficient Fine-Tuning Large Models

Aug 18, 2024

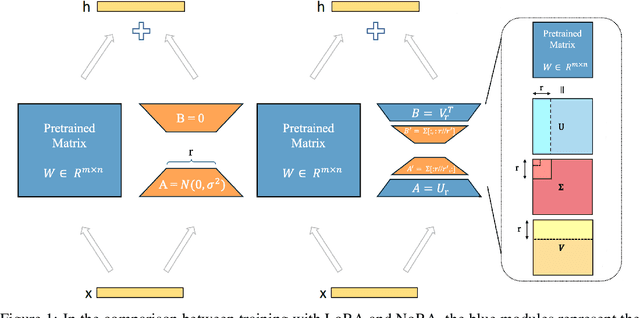

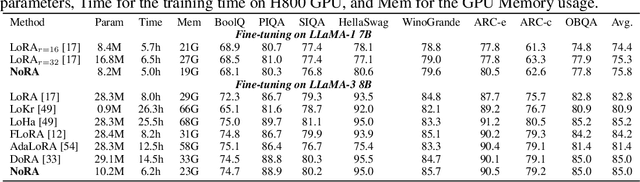

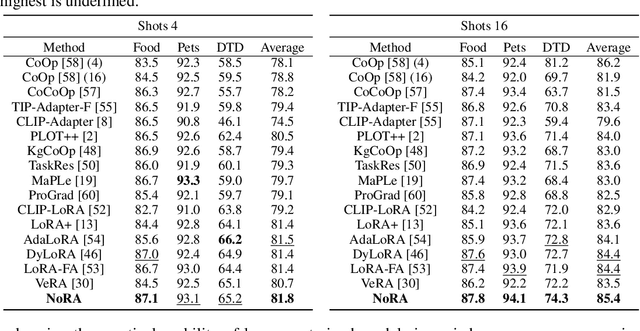

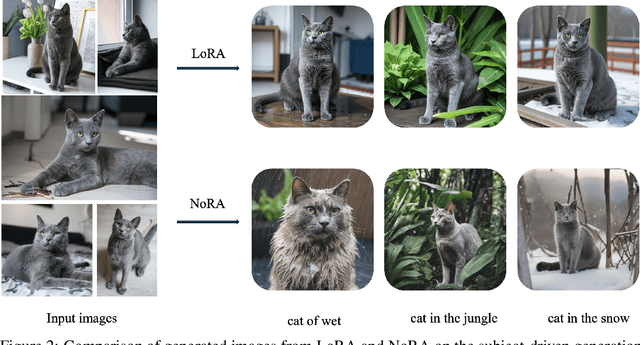

Abstract: In this paper, we introduce Nested Low-Rank Adaptation (NoRA), a novel approach to parameter-efficient fine-tuning that extends the capabilities of Low-Rank Adaptation (LoRA) techniques. Vanilla LoRA overlooks pre-trained weight inheritance and still requires fine-tuning numerous parameters. To address these issues, our NoRA adopts a dual-layer nested structure with Singular Value Decomposition (SVD), effectively leveraging original matrix knowledge while reducing tunable parameters. Specifically, NoRA freezes the outer LoRA weights and utilizes an inner LoRA design, providing enhanced control over model optimization. This approach allows the model to adapt more precisely to specific tasks while maintaining a compact parameter space. Evaluations on tasks including commonsense reasoning with large language models, fine-tuning vision-language models, and subject-driven generation demonstrate NoRA's superiority over LoRA and its variants. Notably, NoRA reduces fine-tuning parameters, training time, and memory usage by 4%, 22.5%, and 20.7%, respectively, compared to LoRA on LLaMA-3 8B, while achieving 2.2% higher performance. Code will be released upon acceptance.
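The parameter savings of low-rank adaptation can be seen with simple counting. The sketch below compares trainable-parameter counts for full fine-tuning of one weight matrix, a standard LoRA pair, and a nested inner pair. The nested shapes are an assumption about one way an inner low-rank pair could sit between frozen outer factors; the abstract does not give NoRA's exact shapes. The layer size and ranks are illustrative and are not meant to reproduce the percentages reported above.

```python
def full_finetune_params(d_out, d_in):
    """Trainable parameters when the whole d_out x d_in matrix is updated."""
    return d_out * d_in


def lora_params(d_out, d_in, rank):
    """Standard LoRA: B is d_out x rank and A is rank x d_in."""
    return rank * (d_out + d_in)


def nested_inner_params(outer_rank, inner_rank):
    """Assumed nested layout: the outer factors are frozen and only an
    inner pair (outer_rank x inner_rank, inner_rank x outer_rank) trains."""
    return 2 * outer_rank * inner_rank


d = 4096  # illustrative hidden size (hypothetical)
full = full_finetune_params(d, d)   # 4096 * 4096 = 16,777,216
lora = lora_params(d, d, 16)        # 16 * (4096 + 4096) = 131,072
inner = nested_inner_params(16, 4)  # 2 * 16 * 4 = 128
```

Under these assumed shapes, the inner pair's size depends only on the two ranks, not on the layer width.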

Evaluating multiple large language models in pediatric ophthalmology

Nov 07, 2023

Abstract: IMPORTANCE The response effectiveness of different large language models (LLMs) and various individuals, including medical students, graduate students, and practicing physicians, in pediatric ophthalmology consultations has not yet been clearly established. OBJECTIVE To design a 100-question exam based on pediatric ophthalmology to evaluate the performance of LLMs in highly specialized scenarios and compare it with the performance of medical students and physicians at different levels. DESIGN, SETTING, AND PARTICIPANTS This survey study assessed three LLMs, namely ChatGPT (GPT-3.5), GPT-4, and PaLM2, alongside three human cohorts (medical students, postgraduate students, and attending physicians) in their ability to answer questions related to pediatric ophthalmology. It was conducted by administering questionnaires in the form of test papers through the LLM network interface, with the valuable participation of volunteers. MAIN OUTCOMES AND MEASURES Mean scores of LLMs and humans on 100 multiple-choice questions, as well as the answer stability, correlation, and response confidence of each LLM. RESULTS GPT-4 performed comparably to attending physicians, while ChatGPT (GPT-3.5) and PaLM2 outperformed medical students but slightly trailed behind postgraduate students. Furthermore, GPT-4 exhibited greater stability and confidence when responding to inquiries compared to ChatGPT (GPT-3.5) and PaLM2. CONCLUSIONS AND RELEVANCE Our results underscore the potential for LLMs to provide medical assistance in pediatric ophthalmology and suggest significant capacity to guide the education of medical students.
