Huan Zhao

PTalker: Personalized Speech-Driven 3D Talking Head Animation via Style Disentanglement and Modality Alignment

Dec 27, 2025

Abstract:Speech-driven 3D talking head generation aims to produce lifelike facial animations precisely synchronized with speech. While considerable progress has been made in achieving high lip-synchronization accuracy, existing methods largely overlook the intricate nuances of individual speaking styles, which limits personalization and realism. In this work, we present a novel framework for personalized 3D talking head animation, namely "PTalker". This framework preserves speaking style through style disentanglement from audio and facial motion sequences and enhances lip-synchronization accuracy through a three-level alignment mechanism between audio and mesh modalities. Specifically, to effectively disentangle style and content, we design disentanglement constraints that encode driven audio and motion sequences into distinct style and content spaces to enhance speaking style representation. To improve lip-synchronization accuracy, we adopt a modality alignment mechanism incorporating three aspects: spatial alignment using Graph Attention Networks to capture vertex connectivity in the 3D mesh structure, temporal alignment using cross-attention to capture and synchronize temporal dependencies, and feature alignment by top-k bidirectional contrastive losses and KL divergence constraints to ensure consistency between speech and mesh modalities. Extensive qualitative and quantitative experiments on public datasets demonstrate that PTalker effectively generates realistic, stylized 3D talking heads that accurately match identity-specific speaking styles, outperforming state-of-the-art methods. The source code and supplementary videos are available at: PTalker.

Foundation Model-based Evaluation of Neuropsychiatric Disorders: A Lifespan-Inclusive, Multi-Modal, and Multi-Lingual Study

Dec 24, 2025

Abstract:Neuropsychiatric disorders, such as Alzheimer's disease (AD), depression, and autism spectrum disorder (ASD), are characterized by linguistic and acoustic abnormalities, offering potential biomarkers for early detection. Despite the promise of multi-modal approaches, challenges like multi-lingual generalization and the absence of a unified evaluation framework persist. To address these gaps, we propose FEND (Foundation model-based Evaluation of Neuropsychiatric Disorders), a comprehensive multi-modal framework integrating speech and text modalities for detecting AD, depression, and ASD across the lifespan. Leveraging 13 multi-lingual datasets spanning English, Chinese, Greek, French, and Dutch, we systematically evaluate multi-modal fusion performance. Our results show that multi-modal fusion excels in AD and depression detection but underperforms in ASD due to dataset heterogeneity. We also identify modality imbalance as a prevalent issue, where multi-modal fusion fails to surpass the best mono-modal models. Cross-corpus experiments reveal robust performance in task- and language-consistent scenarios but noticeable degradation in multi-lingual and task-heterogeneous settings. By providing extensive benchmarks and a detailed analysis of performance-influencing factors, FEND advances the field of automated, lifespan-inclusive, and multi-lingual neuropsychiatric disorder assessment. We encourage researchers to adopt the FEND framework for fair comparisons and reproducible research.

Robotic Grinding Skills Learning Based on Geodesic Length Dynamic Motion Primitives

Apr 24, 2025

Abstract:Learning grinding skills from human craftsmen via imitation learning has become a key research topic in robotic machining. Due to their strong generalization and robustness to external disturbances, Dynamical Movement Primitives (DMPs) offer a promising approach for robotic grinding skill learning. However, directly applying DMPs to grinding tasks faces challenges, such as low orientation accuracy, unsynchronized position-orientation-force, and limited generalization for surface trajectories. To address these issues, this paper proposes a robotic grinding skill learning method based on geodesic length DMPs (Geo-DMPs). First, a normalized 2D weighted Gaussian kernel and intrinsic mean clustering algorithm are developed to extract geometric features from multiple demonstrations. Then, an orientation manifold distance metric removes the time dependency in traditional orientation DMPs, enabling accurate orientation learning via Geo-DMPs. A synchronization encoding framework is further proposed to jointly model position, orientation, and force using a geodesic length-based phase function. This framework enables robotic grinding actions to be generated between any two surface points. Experiments on robotic chamfer grinding and free-form surface grinding validate that the proposed method achieves high geometric accuracy and generalization in skill encoding and generation. To our knowledge, this is the first attempt to use DMPs for jointly learning and generating grinding skills in position, orientation, and force on model-free surfaces, offering a novel path for robotic grinding.

Coordinated Power Smoothing Control for Wind Storage Integrated System with Physics-informed Deep Reinforcement Learning

Dec 17, 2024

Abstract:The Wind Storage Integrated System with Power Smoothing Control (PSC) has emerged as a promising solution to ensure both efficient and reliable wind energy generation. However, existing PSC strategies overlook the intricate interplay and distinct control frequencies between batteries and wind turbines, and lack consideration of wake effect and battery degradation cost. In this paper, a novel coordinated control framework with hierarchical levels is devised to address these challenges effectively, which integrates the wake model and battery degradation model. In addition, after reformulating the problem as a Markov decision process, the multi-agent reinforcement learning method is introduced to overcome the bi-level characteristic of the problem. Moreover, a Physics-informed Neural Network-assisted Multi-agent Deep Deterministic Policy Gradient (PAMA-DDPG) algorithm is proposed to incorporate the power fluctuation differential equation and expedite the learning process. The effectiveness of the proposed methodology is evaluated through simulations conducted in four distinct scenarios using WindFarmSimulator (WFSim). The results demonstrate that the proposed algorithm facilitates approximately an 11% increase in total profit and a 19% decrease in power fluctuation compared to the traditional methods, thereby addressing the dual objectives of economic efficiency and grid-connected energy reliability.

Hard-Synth: Synthesizing Diverse Hard Samples for ASR using Zero-Shot TTS and LLM

Nov 20, 2024

Abstract:Text-to-speech (TTS) models have been widely adopted to enhance automatic speech recognition (ASR) systems using text-only corpora, thereby reducing the cost of labeling real speech data. Existing research primarily utilizes additional text data and predefined speech styles supported by TTS models. In this paper, we propose Hard-Synth, a novel ASR data augmentation method that leverages large language models (LLMs) and advanced zero-shot TTS. Our approach employs LLMs to generate diverse in-domain text through rewriting, without relying on additional text data. Rather than using predefined speech styles, we introduce a hard prompt selection method with zero-shot TTS to clone speech styles that the ASR model finds challenging to recognize. Experiments demonstrate that Hard-Synth significantly enhances the Conformer model, achieving relative word error rate (WER) reductions of 6.5%/4.4% on LibriSpeech dev/test-other subsets. Additionally, we show that Hard-Synth is data-efficient and capable of reducing bias in ASR.

Legal Evaluations and Challenges of Large Language Models

Nov 15, 2024

Abstract:In this paper, we review legal testing methods based on Large Language Models (LLMs), using the OpenAI o1 model as a case study to evaluate the performance of large models in applying legal provisions. We compare current state-of-the-art LLMs, including open-source models, closed-source models, and models trained specifically for the legal domain. Systematic tests are conducted on English and Chinese legal cases, and the results are analyzed in depth. Through this testing of legal cases from common law systems and China, the paper explores the strengths and weaknesses of LLMs in understanding and applying legal texts, reasoning through legal issues, and predicting judgments. The experimental results highlight both the potential and limitations of LLMs in legal applications, particularly the challenges of interpreting legal language and reasoning accurately about the law. Finally, the paper provides a comprehensive analysis of the advantages and disadvantages of various types of models, offering valuable insights and references for the future application of AI in the legal field.

Leveraging Surgical Activity Grammar for Primary Intention Prediction in Laparoscopy Procedures

Sep 29, 2024

Abstract:Surgical procedures are inherently complex and dynamic, with intricate dependencies and various execution paths. Accurate identification of the intentions behind critical actions, referred to as Primary Intentions (PIs), is crucial to understanding and planning the procedure. This paper presents a novel framework that advances PI recognition in instructional videos by combining top-down grammatical structure with bottom-up visual cues. The grammatical structure is based on a rich corpus of surgical procedures, offering a hierarchical perspective on surgical activities. A grammar parser, utilizing the surgical activity grammar, processes visual data obtained from laparoscopic images through surgical action detectors, ensuring a more precise interpretation of the visual information. Experimental results on the benchmark dataset demonstrate that our method outperforms existing surgical activity detectors that rely solely on visual features. Our research provides a promising foundation for developing advanced robotic surgical systems with enhanced planning and automation capabilities.

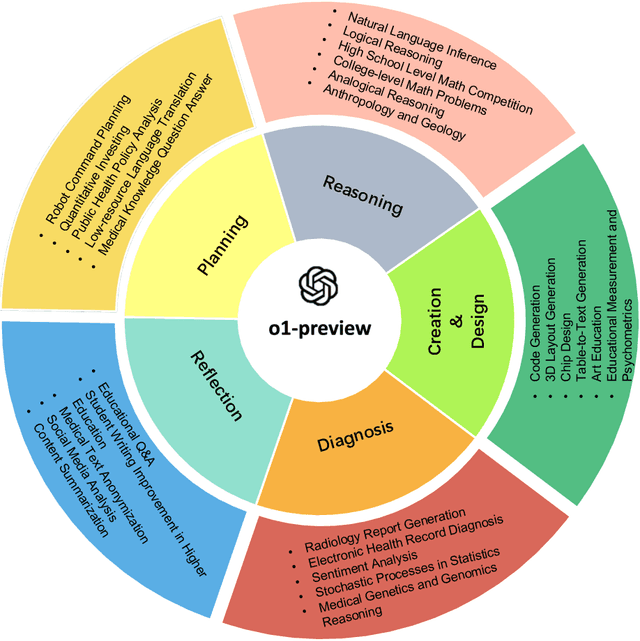

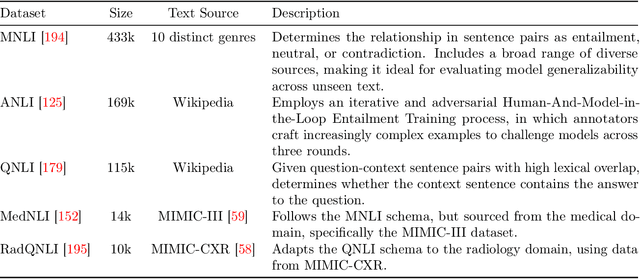

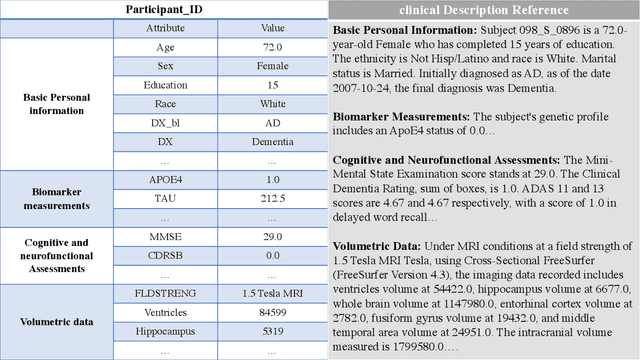

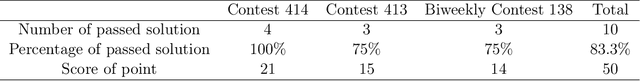

Evaluation of OpenAI o1: Opportunities and Challenges of AGI

Sep 27, 2024

Abstract:This comprehensive study evaluates the performance of OpenAI's o1-preview large language model across a diverse array of complex reasoning tasks, spanning multiple domains, including computer science, mathematics, natural sciences, medicine, linguistics, and social sciences. Through rigorous testing, o1-preview demonstrated remarkable capabilities, often achieving human-level or superior performance in areas ranging from coding challenges to scientific reasoning and from language processing to creative problem-solving. Key findings include:
- 83.3% success rate in solving complex competitive programming problems, surpassing many human experts.
- Superior ability in generating coherent and accurate radiology reports, outperforming other evaluated models.
- 100% accuracy in high school-level mathematical reasoning tasks, providing detailed step-by-step solutions.
- Advanced natural language inference capabilities across general and specialized domains like medicine.
- Impressive performance in chip design tasks, outperforming specialized models in areas such as EDA script generation and bug analysis.
- Remarkable proficiency in anthropology and geology, demonstrating deep understanding and reasoning in these specialized fields.
- Strong capabilities in quantitative investing. O1 has comprehensive financial knowledge and statistical modeling skills.
- Effective performance in social media analysis, including sentiment analysis and emotion recognition.
The model excelled particularly in tasks requiring intricate reasoning and knowledge integration across various fields. While some limitations were observed, including occasional errors on simpler problems and challenges with certain highly specialized concepts, the overall results indicate significant progress towards artificial general intelligence.

ElecBench: a Power Dispatch Evaluation Benchmark for Large Language Models

Jul 07, 2024

Abstract:In response to the urgent demand for grid stability and the complex challenges posed by renewable energy integration and electricity market dynamics, the power sector increasingly seeks innovative technological solutions. In this context, large language models (LLMs) have become a key technology to improve efficiency and promote intelligent progress in the power sector with their excellent natural language processing, logical reasoning, and generalization capabilities. Despite their potential, the absence of a performance evaluation benchmark for LLM in the power sector has limited the effective application of these technologies. Addressing this gap, our study introduces "ElecBench", an evaluation benchmark of LLMs within the power sector. ElecBench aims to overcome the shortcomings of existing evaluation benchmarks by providing comprehensive coverage of sector-specific scenarios, deepening the testing of professional knowledge, and enhancing decision-making precision. The framework categorizes scenarios into general knowledge and professional business, further divided into six core performance metrics: factuality, logicality, stability, security, fairness, and expressiveness, and is subdivided into 24 sub-metrics, offering profound insights into the capabilities and limitations of LLM applications in the power sector. To ensure transparency, we have made the complete test set public, evaluating the performance of eight LLMs across various scenarios and metrics. ElecBench aspires to serve as the standard benchmark for LLM applications in the power sector, supporting continuous updates of scenarios, metrics, and models to drive technological progress and application.

WenetSpeech4TTS: A 12,800-hour Mandarin TTS Corpus for Large Speech Generation Model Benchmark

Jun 11, 2024

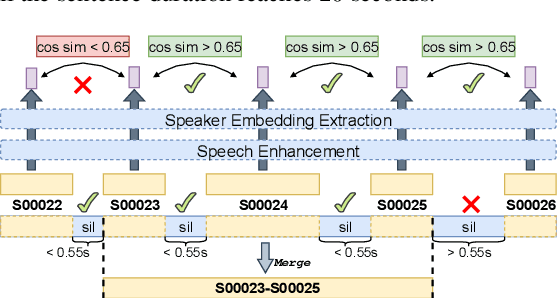

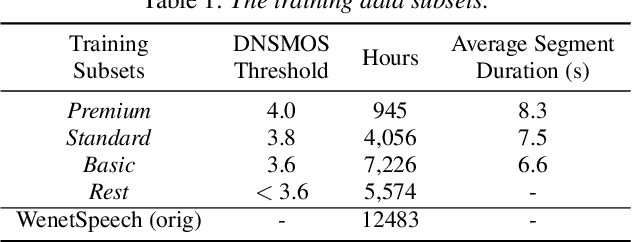

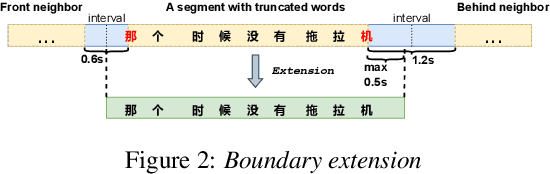

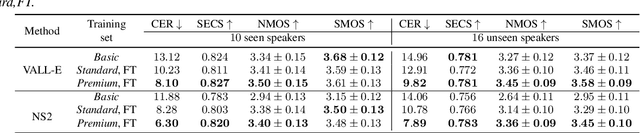

Abstract:With the development of large text-to-speech (TTS) models and scale-up of the training data, state-of-the-art TTS systems have achieved impressive performance. In this paper, we present WenetSpeech4TTS, a multi-domain Mandarin corpus derived from the open-sourced WenetSpeech dataset. Tailored for text-to-speech tasks, we refined WenetSpeech by adjusting segment boundaries, enhancing the audio quality, and eliminating speaker mixing within each segment. Following a more accurate transcription process and a quality-based data filtering process, the resulting WenetSpeech4TTS corpus contains 12,800 hours of paired audio-text data. Furthermore, we have created subsets of varying sizes, categorized by segment quality scores, to allow for TTS model training and fine-tuning. VALL-E and NaturalSpeech 2 systems are trained and fine-tuned on these subsets to validate the usability of WenetSpeech4TTS, establishing benchmark baselines for fair comparison of TTS systems. The corpus and corresponding benchmarks are publicly available on Hugging Face.
