Junhua Zhao

Multi-Objective Large Language Model Unlearning

Dec 29, 2024

Abstract:Machine unlearning for large language models (LLMs), which aims to eliminate undesirable behaviors from LLMs without full retraining from scratch, has attracted great attention recently. In this paper, we explore the Gradient Ascent (GA) approach in LLM unlearning, which is a proactive way to decrease the prediction probability of the model on the target data in order to remove their influence. We analyze two challenges that render the process impractical: gradient explosion and catastrophic forgetting. To address these issues, we propose the Multi-Objective Large Language Model Unlearning (MOLLM) algorithm. We first formulate LLM unlearning as a multi-objective optimization problem, in which the cross-entropy loss is modified to an unlearning version to overcome the gradient explosion issue. A common descent update direction is then calculated, which enables the model to forget the target data while preserving the utility of the LLM. Our empirical results verify that MOLLM outperforms the SOTA GA-based LLM unlearning methods in terms of unlearning effect and model utility preservation.

Federated Unlearning with Gradient Descent and Conflict Mitigation

Dec 28, 2024

Abstract:Federated Learning (FL) has received much attention in recent years. However, although clients are not required to share their data in FL, the global model itself can implicitly remember clients' local data. Therefore, it is necessary to effectively remove the target client's data from the FL global model to ease the risk of privacy leakage and implement "the right to be forgotten". Federated Unlearning (FU) has been considered a promising way to remove data without full retraining. However, model utility easily suffers a significant reduction during unlearning due to gradient conflicts. Furthermore, when post-training is conducted to recover the model utility, the model is prone to move back and revert what has already been unlearned. To address these issues, we propose Federated Unlearning with Orthogonal Steepest Descent (FedOSD). We first design an unlearning Cross-Entropy loss to overcome the convergence issue of gradient ascent. A steepest descent direction for unlearning is then calculated under the condition that it does not conflict with other clients' gradients and is closest to the target client's gradient. This helps the model unlearn efficiently and mitigates the reduction in model utility. After unlearning, we recover the model utility while maintaining the achievement of unlearning. Finally, extensive experiments in several FL scenarios verify that FedOSD outperforms the SOTA FU algorithms in terms of unlearning and model utility.
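
One way to picture a direction that is "non-conflicting with other clients' gradients and closest to the target client's gradient" is an orthogonal projection: remove from the target gradient every component lying in the span of the other clients' gradients. The sketch below is only an illustration under that assumption, not the FedOSD procedure itself, which the abstract does not spell out; the function names `dot` and `orthogonal_direction` and the tolerance `eps` are hypothetical.

```python
from typing import List, Sequence


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def orthogonal_direction(
    target: Sequence[float],
    others: Sequence[Sequence[float]],
    eps: float = 1e-12,  # hypothetical tolerance for dropping dependent gradients
) -> List[float]:
    """Project `target` onto the subspace orthogonal to all vectors in `others`.

    Among all vectors orthogonal to every other client's gradient, the
    orthogonal projection is the one closest to `target` in Euclidean norm.
    """
    # Build an orthonormal basis of span(others) with Gram-Schmidt.
    basis: List[List[float]] = []
    for g in others:
        v = list(g)
        for b in basis:
            c = dot(v, b)
            v = [vi - c * bi for vi, bi in zip(v, b)]
        norm_sq = dot(v, v)
        if norm_sq > eps:  # skip (near-)linearly dependent gradients
            norm = norm_sq ** 0.5
            basis.append([vi / norm for vi in v])

    # Strip the component of the target gradient along each basis vector.
    d = list(target)
    for b in basis:
        c = dot(d, b)
        d = [di - c * bi for di, bi in zip(d, b)]
    return d


if __name__ == "__main__":
    g_target = [1.0, 1.0, 1.0]
    g_others = [[1.0, 0.0, 0.0], [1.0, 1.0, 0.0]]
    d = orthogonal_direction(g_target, g_others)
    # The inner product with each other client's gradient is (numerically) zero.
    print(d, [dot(d, g) for g in g_others])
```

The resulting vector has zero inner product with every other client's gradient, so to first order a step along it leaves their losses unchanged. Whether the update adds or subtracts this vector depends on how the unlearning loss is defined.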

Coordinated Power Smoothing Control for Wind Storage Integrated System with Physics-informed Deep Reinforcement Learning

Dec 17, 2024

Abstract:The Wind Storage Integrated System with Power Smoothing Control (PSC) has emerged as a promising solution to ensure both efficient and reliable wind energy generation. However, existing PSC strategies overlook the intricate interplay and distinct control frequencies between batteries and wind turbines, and lack consideration of wake effect and battery degradation cost. In this paper, a novel coordinated control framework with hierarchical levels is devised to address these challenges effectively, which integrates the wake model and battery degradation model. In addition, after reformulating the problem as a Markov decision process, the multi-agent reinforcement learning method is introduced to overcome the bi-level characteristic of the problem. Moreover, a Physics-informed Neural Network-assisted Multi-agent Deep Deterministic Policy Gradient (PAMA-DDPG) algorithm is proposed to incorporate the power fluctuation differential equation and expedite the learning process. The effectiveness of the proposed methodology is evaluated through simulations conducted in four distinct scenarios using WindFarmSimulator (WFSim). The results demonstrate that the proposed algorithm facilitates approximately an 11% increase in total profit and a 19% decrease in power fluctuation compared to the traditional methods, thereby addressing the dual objectives of economic efficiency and grid-connected energy reliability.

From News to Forecast: Integrating Event Analysis in LLM-Based Time Series Forecasting with Reflection

Sep 26, 2024

Abstract:This paper introduces a novel approach to enhance time series forecasting using Large Language Models (LLMs) and Generative Agents. With language as a medium, our method adaptively integrates various social events into forecasting models, aligning news content with time series fluctuations for enriched insights. Specifically, we utilize LLM-based agents to iteratively filter out irrelevant news and employ human-like reasoning and reflection to evaluate predictions. This enables our model to analyze complex events, such as unexpected incidents and shifts in social behavior, and continuously refine the selection logic of news and the robustness of the agent's output. By compiling selected news with time series data, we fine-tune the LLaMa2 pre-trained model. The results demonstrate significant improvements in forecasting accuracy and suggest a potential paradigm shift in time series forecasting by effectively harnessing unstructured news data.

Carbon Footprint Accounting Driven by Large Language Models and Retrieval-augmented Generation

Aug 20, 2024

Abstract:Carbon footprint accounting (CFA) is crucial for quantifying greenhouse gas emissions and achieving carbon neutrality. The dynamic nature of processes, accounting rules, carbon-related policies, and energy supply structures necessitates real-time updates of CFA. Traditional life cycle assessment methods rely heavily on human expertise, making near-real-time updates challenging. This paper introduces a novel approach integrating large language models (LLMs) with retrieval-augmented generation (RAG) technology to enhance the real-time, professional, and economical aspects of carbon footprint information retrieval and analysis. By leveraging LLMs' logical and language understanding abilities and RAG's efficient retrieval capabilities, the proposed method LLMs-RAG-CFA can retrieve more relevant professional information to assist LLMs, enhancing the model's generative abilities. This method offers broad professional coverage, efficient real-time carbon footprint information acquisition and accounting, and cost-effective automation without frequent updates to LLM parameters. Experimental results across five industries (primary aluminum, lithium battery, photovoltaic, new energy vehicles, and transformers) demonstrate that the LLMs-RAG-CFA method outperforms traditional methods and other LLMs, achieving higher information retrieval rates and significantly lower information deviations and carbon footprint accounting deviations. The economically viable design utilizes RAG technology to balance real-time updates with cost-effectiveness, providing an efficient, reliable, and cost-saving solution for real-time carbon emission management, thereby enhancing environmental sustainability practices.

ElecBench: a Power Dispatch Evaluation Benchmark for Large Language Models

Jul 07, 2024

Abstract:In response to the urgent demand for grid stability and the complex challenges posed by renewable energy integration and electricity market dynamics, the power sector increasingly seeks innovative technological solutions. In this context, large language models (LLMs) have become a key technology to improve efficiency and promote intelligent progress in the power sector with their excellent natural language processing, logical reasoning, and generalization capabilities. Despite their potential, the absence of a performance evaluation benchmark for LLM in the power sector has limited the effective application of these technologies. Addressing this gap, our study introduces "ElecBench", an evaluation benchmark of LLMs within the power sector. ElecBench aims to overcome the shortcomings of existing evaluation benchmarks by providing comprehensive coverage of sector-specific scenarios, deepening the testing of professional knowledge, and enhancing decision-making precision. The framework categorizes scenarios into general knowledge and professional business, further divided into six core performance metrics: factuality, logicality, stability, security, fairness, and expressiveness, and is subdivided into 24 sub-metrics, offering profound insights into the capabilities and limitations of LLM applications in the power sector. To ensure transparency, we have made the complete test set public, evaluating the performance of eight LLMs across various scenarios and metrics. ElecBench aspires to serve as the standard benchmark for LLM applications in the power sector, supporting continuous updates of scenarios, metrics, and models to drive technological progress and application.

Survey on Large Language Model-Enhanced Reinforcement Learning: Concept, Taxonomy, and Methods

Mar 30, 2024

Abstract:With extensive pre-trained knowledge and high-level general capabilities, large language models (LLMs) emerge as a promising avenue to augment reinforcement learning (RL) in aspects such as multi-task learning, sample efficiency, and task planning. In this survey, we provide a comprehensive review of the existing literature in $\textit{LLM-enhanced RL}$ and summarize its characteristics compared to conventional RL methods, aiming to clarify the research scope and directions for future studies. Utilizing the classical agent-environment interaction paradigm, we propose a structured taxonomy to systematically categorize LLMs' functionalities in RL, including four roles: information processor, reward designer, decision-maker, and generator. Additionally, for each role, we summarize the methodologies, analyze the specific RL challenges that are mitigated, and provide insights into future directions. Lastly, potential applications, prospective opportunities and challenges of the $\textit{LLM-enhanced RL}$ are discussed.

Exploring Large Language Model based Intelligent Agents: Definitions, Methods, and Prospects

Jan 07, 2024

Abstract:Intelligent agents stand out as a potential path toward artificial general intelligence (AGI). Thus, researchers have dedicated significant effort to diverse implementations for them. Benefiting from recent progress in large language models (LLMs), LLM-based agents that use universal natural language as an interface exhibit robust generalization capabilities across various applications. From serving as autonomous general-purpose task assistants to applications in coding, social, and economic domains, LLM-based agents offer extensive exploration opportunities. This paper surveys current research to provide an in-depth overview of LLM-based intelligent agents within single-agent and multi-agent systems. It covers their definitions, research frameworks, and foundational components such as their composition, cognitive and planning methods, tool utilization, and responses to environmental feedback. We also delve into the mechanisms of deploying LLM-based agents in multi-agent systems, including multi-role collaboration, message passing, and strategies to alleviate communication issues between agents. The discussions also shed light on popular datasets and application scenarios. We conclude by envisioning prospects for LLM-based agents, considering the evolving landscape of AI and natural language processing.

Applying Large Language Models to Power Systems: Potential Security Threats

Nov 22, 2023

Abstract:Applying large language models (LLMs) to power systems presents a promising avenue for enhancing decision-making and operational efficiency. However, this action may also incur potential security threats, which have not been fully recognized so far. To this end, this letter analyzes potential threats incurred by applying LLMs to power systems, emphasizing the need for urgent research and development of countermeasures.

Fed-NILM: A Federated Learning-based Non-Intrusive Load Monitoring Method for Privacy-Protection

May 24, 2021

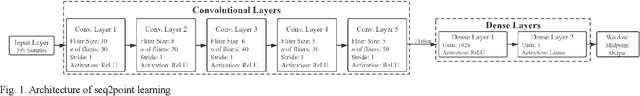

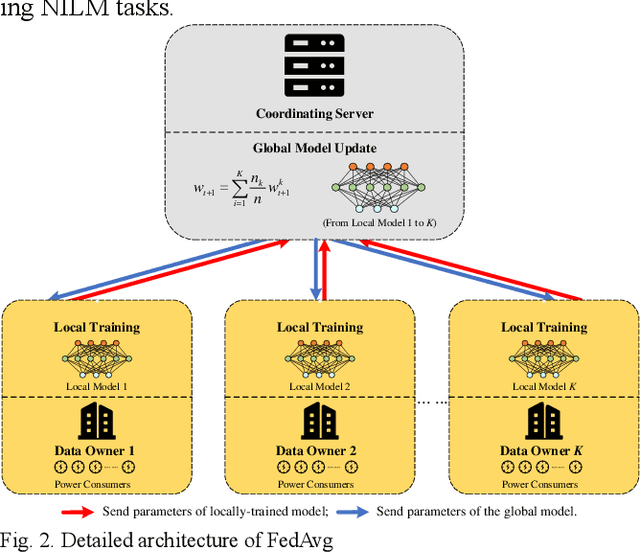

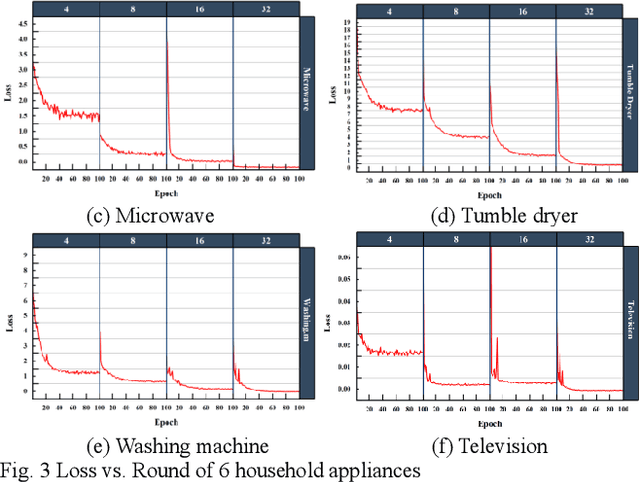

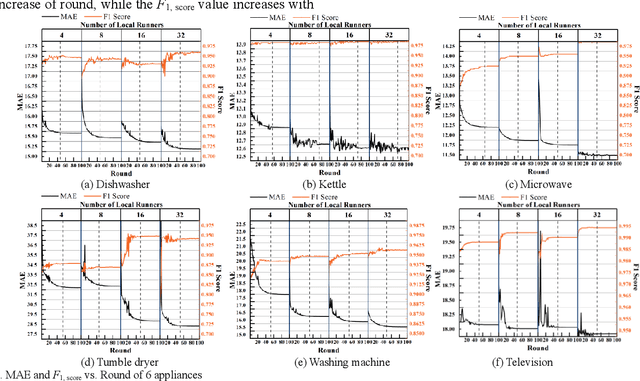

Abstract:Non-intrusive load monitoring (NILM) decomposes the total load reading into appliance-level load signals. Many deep learning-based methods have been developed to accomplish NILM, and the training of deep neural networks (DNN) requires massive load data containing different types of appliances. For local data owners who have inadequate load data but expect promising model performance, effective NILM co-modelling is increasingly important. However, during cooperation among local data owners, data exchange and centralized data storage may increase the risk of power consumer privacy breaches. To eliminate the potential risks, a novel NILM method named Fed-NILM applying Federated Learning (FL) is proposed in this paper. In Fed-NILM, local parameters instead of load data are shared among local data owners. The global model is obtained by taking a weighted average of the parameters. In the experiments, Fed-NILM is validated on two real-world datasets. In addition, Fed-NILM is compared with locally-trained NILMs and the centrally-trained one in both residential and industrial scenarios. The experimental results show that Fed-NILM outperforms locally-trained NILMs and approximates the centrally-trained NILM, which is trained on the entire load dataset without privacy preservation.
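
The aggregation step described above, in which the global model is a weighted average of locally trained parameters, can be sketched in a few lines. This is a minimal illustration only: the abstract does not say how the weights are chosen, so weighting each owner by its number of local samples (as in standard federated averaging) is an assumption here, and the function name `weighted_average` and the parameter layout (a dict of flat float lists) are hypothetical.

```python
from typing import Dict, List, Sequence

Params = Dict[str, List[float]]


def weighted_average(local_params: Sequence[Params], weights: Sequence[float]) -> Params:
    """Combine per-owner parameters into one global parameter set.

    `weights` are assumed (not stated in the abstract) to be each owner's
    local sample count; they are normalized to sum to 1 before averaging.
    """
    if not local_params or len(local_params) != len(weights):
        raise ValueError("need one weight per set of local parameters")
    total = float(sum(weights))
    if total <= 0:
        raise ValueError("weights must sum to a positive value")

    global_params: Params = {}
    for name in local_params[0]:
        size = len(local_params[0][name])
        acc = [0.0] * size
        for params, w in zip(local_params, weights):
            share = w / total
            for i in range(size):
                acc[i] += share * params[name][i]
        global_params[name] = acc
    return global_params


if __name__ == "__main__":
    owner_a = {"layer": [0.0, 4.0]}  # trained on 1 sample (toy numbers)
    owner_b = {"layer": [4.0, 0.0]}  # trained on 3 samples (toy numbers)
    print(weighted_average([owner_a, owner_b], [1, 3]))
```

Only the parameter dictionaries cross the boundary between owners; the raw load data never leaves its owner, which is the privacy property the abstract emphasizes.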
