Jie Lian

A Unified Spoken Language Model with Injected Emotional-Attribution Thinking for Human-like Interaction

Jan 08, 2026

Abstract: This paper presents a unified spoken language model for emotional intelligence, enhanced by a novel data construction strategy termed Injected Emotional-Attribution Thinking (IEAT). IEAT incorporates user emotional states and their underlying causes into the model's internal reasoning process, enabling emotion-aware reasoning to be internalized rather than treated as explicit supervision. The model is trained with a two-stage progressive strategy. The first stage performs speech-text alignment and emotional attribute modeling via self-distillation, while the second stage conducts end-to-end cross-modal joint optimization to ensure consistency between textual and spoken emotional expressions. Experiments on the Human-like Spoken Dialogue Systems Challenge (HumDial) Emotional Intelligence benchmark demonstrate that the proposed approach achieves top-ranked performance across emotional trajectory modeling, emotional reasoning, and empathetic response generation under both LLM-based and human evaluations.

TELEVAL: A Dynamic Benchmark Designed for Spoken Language Models in Chinese Interactive Scenarios

Jul 24, 2025

Abstract: Spoken language models (SLMs) have seen rapid progress in recent years, along with the development of numerous benchmarks for evaluating their performance. However, most existing benchmarks primarily focus on evaluating whether SLMs can perform complex tasks comparable to those tackled by large language models (LLMs), often failing to align with how users naturally interact in real-world conversational scenarios. In this paper, we propose TELEVAL, a dynamic benchmark specifically designed to evaluate SLMs' effectiveness as conversational agents in realistic Chinese interactive settings. TELEVAL defines three evaluation dimensions: Explicit Semantics, Paralinguistic and Implicit Semantics, and System Abilities. It adopts a dialogue format consistent with real-world usage and evaluates text and audio outputs separately. TELEVAL particularly focuses on the model's ability to extract implicit cues from user speech and respond appropriately without additional instructions. Our experiments demonstrate that despite recent progress, existing SLMs still have considerable room for improvement in natural conversational tasks. We hope that TELEVAL can serve as a user-centered evaluation framework that directly reflects the user experience and contributes to the development of more capable dialogue-oriented SLMs.

BoSS: Beyond-Semantic Speech

Jul 23, 2025

Abstract: Human communication involves more than explicit semantics, with implicit signals and contextual cues playing a critical role in shaping meaning. However, modern speech technologies, such as Automatic Speech Recognition (ASR) and Text-to-Speech (TTS), often fail to capture these beyond-semantic dimensions. To better characterize and benchmark the progression of speech intelligence, we introduce Spoken Interaction System Capability Levels (L1-L5), a hierarchical framework illustrating the evolution of spoken dialogue systems from basic command recognition to human-like social interaction. To support these advanced capabilities, we propose Beyond-Semantic Speech (BoSS), which refers to the set of information in speech communication that encompasses but transcends explicit semantics. It conveys emotions and contexts, and modifies or extends meanings through multidimensional features such as affective cues, contextual dynamics, and implicit semantics, thereby enhancing the understanding of communicative intentions and scenarios. We present a formalized framework for BoSS, leveraging cognitive relevance theories and machine learning models to analyze temporal and contextual speech dynamics. Evaluating BoSS-related attributes across five dimensions reveals that current spoken language models (SLMs) struggle to fully interpret beyond-semantic signals. These findings highlight the need for advancing BoSS research to enable richer, more context-aware human-machine communication.

GOAT-TTS: LLM-based Text-To-Speech Generation Optimized via A Dual-Branch Architecture

Apr 15, 2025

Abstract: While large language models (LLMs) have revolutionized text-to-speech (TTS) synthesis through discrete tokenization paradigms, current architectures exhibit fundamental tensions between three critical dimensions: 1) irreversible loss of acoustic characteristics caused by quantization of speech prompts; 2) stringent dependence on precisely aligned prompt speech-text pairs that limit real-world deployment; and 3) catastrophic forgetting of the LLM's native text comprehension during optimization for speech token generation. To address these challenges, we propose an LLM-based text-to-speech Generation approach Optimized via a novel dual-branch ArchiTecture (GOAT-TTS). Our framework introduces two key innovations: (1) The modality-alignment branch combines a speech encoder and projector to capture continuous acoustic embeddings, enabling bidirectional correlation between paralinguistic features (language, timbre, emotion) and semantic text representations without transcript dependency; (2) The speech-generation branch employs modular fine-tuning on top-k layers of an LLM for speech token prediction while freezing the bottom-k layers to preserve foundational linguistic knowledge. Moreover, multi-token prediction is introduced to support real-time streaming TTS synthesis. Experimental results demonstrate that our GOAT-TTS achieves performance comparable to state-of-the-art TTS models while validating the efficacy of synthesized dialect speech data.

Physics-Inspired Degradation Models for Hyperspectral Image Fusion

Feb 04, 2024

Abstract: The fusion of a low-spatial-resolution hyperspectral image (LR-HSI) with a high-spatial-resolution multispectral image (HR-MSI) has garnered increasing research interest. However, most fusion methods focus solely on the fusion algorithm itself and overlook the degradation models, which results in unsatisfactory performance in practical scenarios. To fill this gap, we propose physics-inspired degradation models (PIDM) to model the degradation of LR-HSI and HR-MSI, which comprise a spatial degradation network (SpaDN) and a spectral degradation network (SpeDN). SpaDN and SpeDN are designed based on two insights. First, we employ spatial warping and spectral modulation operations to simulate lens aberrations, thereby introducing non-uniformity into the spatial and spectral degradation processes. Second, we utilize asymmetric downsampling and parallel downsampling operations to separately reduce the spatial and spectral resolutions of the images, thus ensuring that the spatial and spectral degradation processes match specific physical characteristics. Once SpaDN and SpeDN are established, we adopt a self-supervised training strategy to optimize the network parameters and provide a plug-and-play solution for fusion methods. Comprehensive experiments demonstrate that our proposed PIDM can boost the performance of existing fusion methods in practical scenarios.

AdaMedGraph: Adaboosting Graph Neural Networks for Personalized Medicine

Nov 24, 2023

Abstract: Precision medicine tailored to individual patients has gained significant attention in recent times. Machine learning techniques are now employed to process personalized data from various sources, including images, genetics, and assessments. These techniques have demonstrated good outcomes in many clinical prediction tasks. Notably, the approach of constructing graphs by linking similar patients and then applying graph neural networks (GNNs) stands out, because related information from analogous patients is aggregated and considered for prediction. However, selecting the appropriate edge feature to define patient similarity and construct the graph is challenging, given that each patient is depicted by high-dimensional features from diverse sources. Previous studies rely on human expertise to select the edge feature, which is neither scalable nor efficient in pinpointing crucial edge features for complex diseases. In this paper, we propose a novel algorithm named AdaMedGraph, which can automatically select important features to construct multiple patient similarity graphs, and train GNNs based on these graphs as weak learners in adaptive boosting. AdaMedGraph is evaluated on two real-world medical scenarios and shows superior performance.
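As a rough illustration of the adaptive boosting step mentioned in this abstract, the sketch below runs one round of standard discrete AdaBoost for binary labels in {-1, +1}. It is an assumption-laden stand-in: the abstract does not state which boosting variant the paper uses, and the hard-coded `predictions` list is a hypothetical substitute for the output of a GNN weak learner. The function name `adaboost_round` is illustrative only.

```python
import math


def adaboost_round(weights, predictions, labels):
    """One round of discrete AdaBoost for labels in {-1, +1}.

    Returns the weak learner's vote weight (alpha) and the
    renormalized sample weights for the next round.
    """
    # Weighted error: total weight of the misclassified samples.
    err = sum(w for w, p, y in zip(weights, predictions, labels) if p != y)
    # Clamp so the logarithm below stays finite.
    err = min(max(err, 1e-10), 1 - 1e-10)
    alpha = 0.5 * math.log((1 - err) / err)
    # Misclassified samples (y * p == -1) are up-weighted,
    # correctly classified ones (y * p == +1) are down-weighted.
    new_weights = [
        w * math.exp(-alpha * y * p)
        for w, p, y in zip(weights, predictions, labels)
    ]
    total = sum(new_weights)
    return alpha, [w / total for w in new_weights]


labels = [1, 1, -1, -1]
weights = [0.25] * 4
# Hypothetical weak learner (stand-in for a GNN), wrong on the last sample.
predictions = [1, 1, -1, 1]
alpha, weights = adaboost_round(weights, predictions, labels)
```

After the round, the single misclassified sample carries half of the total weight, so the next weak learner is pushed to get it right.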

Eve Said Yes: AirBone Authentication for Head-Wearable Smart Voice Assistant

Sep 26, 2023

Abstract: Recent advances in machine learning and natural language processing have fostered the enormous prosperity of smart voice assistants and their services, e.g., Alexa, Google Home, and Siri. However, voice spoofing attacks are deemed to be one of the major challenges of voice control security, and they continue to evolve, for example through deep-learning-based voice conversion and speech synthesis techniques. To solve this problem outside the acoustic domain, we focus on head-wearable devices, such as earbuds and virtual reality (VR) headsets, which can continuously monitor the bone-conducted voice in the vibration domain. Specifically, we identify that air and bone conduction (AC/BC) from the same vocalization are coupled (or concurrent) and unique at the user level, which makes them suitable behavioral and biometric factors for multi-factor authentication (MFA). The legitimate user can defeat acoustic-domain and even cross-domain spoofing samples with the proposed two-stage AirBone authentication. The first stage answers whether air and bone conduction utterances are time-domain consistent (TC), and the second stage runs bone conduction speaker recognition (BC-SR). The security level is hence increased for two reasons: (1) current acoustic attacks on smart voice assistants cannot affect bone conduction, which is in the vibration domain; (2) even for advanced cross-domain attacks, the unique bone conduction features can detect an adversary's impersonation and machine-induced vibration. Finally, AirBone authentication has good usability (the same level as voice authentication) compared with traditional MFA and with schemes specially designed to enhance smart voice security. Our experimental results show that the proposed AirBone authentication is usable and secure, and can be easily deployed on commercial off-the-shelf head wearables with a good user experience.

Auto robust relative radiometric normalization via latent change noise modelling

Nov 24, 2021

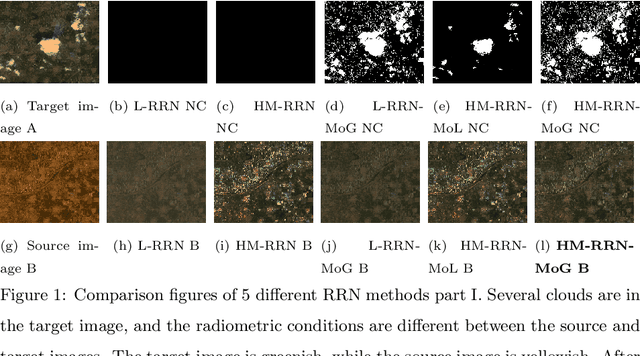

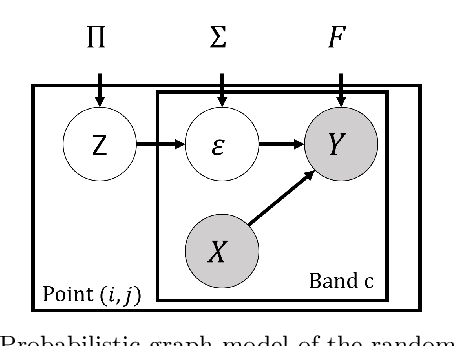

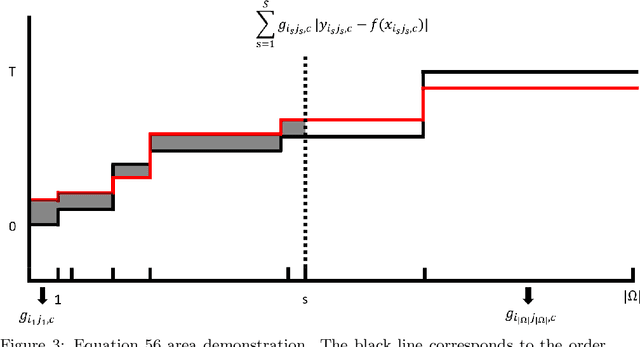

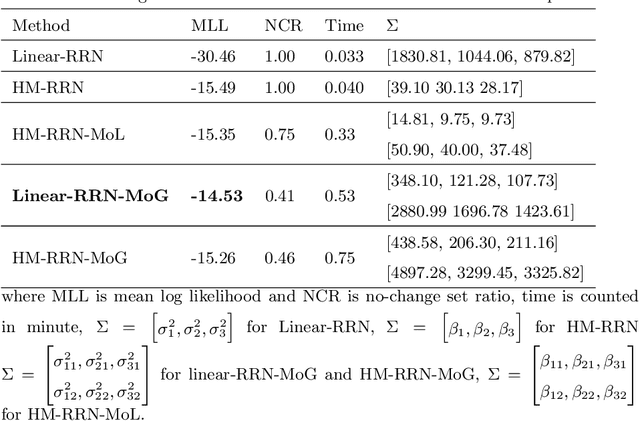

Abstract: Relative radiometric normalization (RRN) of different satellite images of the same terrain is necessary for change detection, object classification/segmentation, and map-making tasks. However, traditional RRN models are not robust because they are disturbed by object changes, and RRN models that explicitly consider object changes cannot robustly obtain the no-change set. This paper proposes auto robust relative radiometric normalization methods via latent change noise modeling. They utilize the prior knowledge that no-change points possess small-scale noise under relative radiometric normalization and that change points possess large-scale radiometric noise after radiometric normalization, combined with the stochastic expectation maximization method, to quickly and robustly extract the no-change set and learn the relative radiometric normalization mapping functions. This grounds our model in probability theory and mathematical deduction. Specifically, when we select histogram matching as the relative radiometric normalization learning scheme and integrate it with mixture-of-Gaussians noise (HM-RRN-MoG), the HM-RRN-MoG model achieves the best performance. Our model is robust against clouds, fog, and changes. Our method naturally generates a robust evaluation indicator for RRN, namely the root mean square error on the no-change set. We apply the HM-RRN-MoG model to the downstream vegetation/water change detection task, where it reduces the radiometric contrast and NDVI/NDWI differences on the no-change set and generates consistent and comparable results. We also apply the no-change set to the building change detection task, efficiently reducing pseudo-changes and boosting precision.
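The evaluation indicator named in this abstract, the root mean square error restricted to the no-change set, can be sketched as follows. This is a minimal illustration assuming flat lists of pixel values and a boolean mask that is already known; how the paper extracts the mask (stochastic expectation maximization with a noise model) is not reproduced here, and the function name `no_change_rmse` and the sample values are hypothetical.

```python
import math


def no_change_rmse(reference, normalized, no_change_mask):
    """RMSE between two images, computed only over no-change pixels."""
    squared_errors = [
        (r - n) ** 2
        for r, n, keep in zip(reference, normalized, no_change_mask)
        if keep
    ]
    if not squared_errors:
        raise ValueError("no-change set is empty")
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Hypothetical pixel values; the last pixel is a changed object
# and is excluded from the indicator by the mask.
reference = [10.0, 20.0, 30.0, 200.0]
normalized = [11.0, 19.0, 30.0, 40.0]
mask = [True, True, True, False]
rmse = no_change_rmse(reference, normalized, mask)
```

Excluding the changed pixel keeps a genuine scene change from being counted as normalization error.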

Automatically eliminating seam lines with Poisson editing in complex relative radiometric normalization mosaicking scenarios

Jun 14, 2021

Abstract: Relative radiometric normalization (RRN) mosaicking among multiple remote sensing images is crucial for downstream tasks, including map-making, image recognition, semantic segmentation, and change detection. However, seam lines often remain on the mosaic boundary together with residual radiometric contrast, especially in complex scenarios, making the mosaic images unsightly and reducing the accuracy of subsequent classification/recognition algorithms. This paper presents a novel automatic approach to eliminate seam lines in complex RRN mosaicking scenarios. It utilizes histogram matching on the overlap area to alleviate radiometric contrast, Poisson editing to remove the seam lines, and a merging procedure to determine the normalization transfer order. Our method can handle mosaicking seam lines with arbitrary shapes and images with extreme topological relationships (with a small intersection area). These conditions make the main feathering or blending methods, e.g., linear weighted blending and Laplacian pyramid blending, inapplicable. In the experiments, our approach visually surpasses the automatic methods without Poisson editing and the manual blurring and feathering method using GIMP software.
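To make the histogram matching step concrete, here is a minimal sketch of classical CDF-based histogram matching on flat lists of 8-bit grey levels. It only illustrates the general technique; the paper's handling of the overlap area, the Poisson editing, and the merging order are not shown, and the function names `cdf` and `match_histogram` are illustrative assumptions.

```python
def cdf(values, levels=256):
    """Cumulative distribution of integer grey levels in [0, levels)."""
    hist = [0] * levels
    for v in values:
        hist[v] += 1
    total = len(values)
    out, running = [], 0
    for count in hist:
        running += count
        out.append(running / total)
    return out


def match_histogram(source, reference, levels=256):
    """Remap source grey levels so their CDF follows the reference CDF."""
    src_cdf = cdf(source, levels)
    ref_cdf = cdf(reference, levels)
    lookup = []
    j = 0
    for i in range(levels):
        # Smallest reference level whose CDF reaches the source CDF at i.
        # Both CDFs are non-decreasing, so j never needs to move back.
        while j < levels - 1 and ref_cdf[j] < src_cdf[i]:
            j += 1
        lookup.append(j)
    return [lookup[v] for v in source]


# Hypothetical overlap-area pixels from a dark and a bright image.
source = [10, 10, 20, 30]
reference = [100, 110, 120, 130]
matched = match_histogram(source, reference)
```

In a mosaicking setting, the lookup table would presumably be estimated from the overlap pixels only and then applied to the whole source image.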

A Structure-Aware Relation Network for Thoracic Diseases Detection and Segmentation

Apr 21, 2021

Abstract: Instance-level detection and segmentation of thoracic diseases or abnormalities are crucial for automatic diagnosis in chest X-ray images. Leveraging constant structure and disease relations extracted from domain knowledge, we propose a structure-aware relation network (SAR-Net) extending Mask R-CNN. The SAR-Net consists of three relation modules: (1) the anatomical structure relation module, which encodes spatial relations between diseases and anatomical parts; (2) the contextual relation module, which aggregates clues based on query-key pairs of disease RoIs and lung fields; and (3) the disease relation module, which propagates co-occurrence and causal relations into disease proposals. Towards making a practical system, we also provide ChestX-Det, a chest X-ray dataset with instance-level annotations (boxes and masks). ChestX-Det is a subset of the public dataset NIH ChestX-ray14. It contains ~3500 images of 13 common disease categories labeled by three board-certified radiologists. We evaluate our SAR-Net on it and on another dataset, DR-Private. Experimental results show that it significantly improves on the strong Mask R-CNN baseline. ChestX-Det is released at https://github.com/Deepwise-AILab/ChestX-Det-Dataset.
