Hui Zhong

ERNIE 5.0 Technical Report

Feb 04, 2026

Abstract:In this report, we introduce ERNIE 5.0, a natively autoregressive foundation model designed for unified multimodal understanding and generation across text, image, video, and audio. All modalities are trained from scratch under a unified next-group-of-tokens prediction objective, based on an ultra-sparse mixture-of-experts (MoE) architecture with modality-agnostic expert routing. To address practical challenges in large-scale deployment under diverse resource constraints, ERNIE 5.0 adopts a novel elastic training paradigm. Within a single pre-training run, the model learns a family of sub-models with varying depths, expert capacities, and routing sparsity, enabling flexible trade-offs among performance, model size, and inference latency in memory- or time-constrained scenarios. Moreover, we systematically address the challenges of scaling reinforcement learning to unified foundation models, thereby guaranteeing efficient and stable post-training under ultra-sparse MoE architectures and diverse multimodal settings. Extensive experiments demonstrate that ERNIE 5.0 achieves strong and balanced performance across multiple modalities. To the best of our knowledge, among publicly disclosed models, ERNIE 5.0 represents the first production-scale realization of a trillion-parameter unified autoregressive model that supports both multimodal understanding and generation. To facilitate further research, we present detailed visualizations of modality-agnostic expert routing in the unified model, alongside comprehensive empirical analysis of elastic training, aiming to offer profound insights to the community.
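
The routing-sparsity knob mentioned in the abstract can be pictured with a generic top-k gating function. This is only a sketch of standard MoE routing, not ERNIE 5.0's router, which the abstract does not describe; the function name `top_k_route` and the example logits are hypothetical.

```python
import math

def top_k_route(logits, k):
    """Pick the k highest-scoring experts and softmax-normalize their weights.

    Generic top-k gating sketch (assumed, not taken from the report).
    A smaller k means sparser routing and fewer active experts per token.
    """
    # Indices of the k largest router logits (ties keep their original order).
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Numerically stable softmax restricted to the selected experts.
    m = max(logits[i] for i in top)
    exps = [math.exp(logits[i] - m) for i in top]
    z = sum(exps)
    return [(i, e / z) for i, e in zip(top, exps)]

# The same router logits evaluated at two sparsity levels.
router_logits = [0.1, 2.0, -1.0, 2.0]
dense_choice = top_k_route(router_logits, k=2)
sparse_choice = top_k_route(router_logits, k=1)
```

Under this reading, a sub-model with lower routing sparsity budget would simply call the same router with a smaller `k`.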

A Physics-informed End-to-End Occupancy Framework for Motion Planning of Autonomous Vehicles

May 08, 2025

Abstract:Accurate and interpretable motion planning is essential for autonomous vehicles (AVs) navigating complex and uncertain environments. While recent end-to-end occupancy prediction methods have improved environmental understanding, they typically lack explicit physical constraints, limiting safety and generalization. In this paper, we propose a unified end-to-end framework that integrates verifiable physical rules into the occupancy learning process. Specifically, we embed artificial potential fields (APF) as physics-informed guidance during network training to ensure that predicted occupancy maps are both data-efficient and physically plausible. Our architecture combines convolutional and recurrent neural networks to capture spatial and temporal dependencies while preserving model flexibility. Experimental results demonstrate that our method improves task completion rate, safety margins, and planning efficiency across diverse driving scenarios, confirming its potential for reliable deployment in real-world AV systems.
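
For readers unfamiliar with artificial potential fields, the classic formulation combines a quadratic attraction toward the goal with a short-range repulsion from obstacles. The sketch below shows only that textbook form; the gains `k_att`, `k_rep` and the influence radius `d0` are assumed values, and how the paper turns the field into training guidance is not stated in the abstract.

```python
import math

def apf_force(pos, goal, obstacles, k_att=1.0, k_rep=100.0, d0=5.0):
    """Return the (fx, fy) force of a textbook artificial potential field.

    pos, goal: (x, y) tuples; obstacles: list of (x, y) tuples.
    All gains and the radius d0 are illustrative assumptions.
    """
    # Attractive term: negative gradient of 0.5 * k_att * ||pos - goal||^2.
    fx = -k_att * (pos[0] - goal[0])
    fy = -k_att * (pos[1] - goal[1])
    for ox, oy in obstacles:
        dx, dy = pos[0] - ox, pos[1] - oy
        d = math.hypot(dx, dy)
        # Repulsion acts only inside the influence radius d0.
        if 0.0 < d < d0:
            # Negative gradient of 0.5 * k_rep * (1/d - 1/d0)^2.
            mag = k_rep * (1.0 / d - 1.0 / d0) / (d * d)
            fx += mag * dx / d
            fy += mag * dy / d
    return fx, fy

# A vehicle at the origin heading to (10, 0) with an obstacle 1 m ahead.
free_force = apf_force((0.0, 0.0), (10.0, 0.0), [])
blocked_force = apf_force((0.0, 0.0), (10.0, 0.0), [(1.0, 0.0)])
```

With no obstacle the force points straight at the goal; with the obstacle directly ahead the repulsive term dominates and the net force along x becomes negative.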

Deployment-friendly Lane-changing Intention Prediction Powered by Brain-inspired Spiking Neural Networks

Feb 09, 2025

Abstract:Accurate and real-time prediction of surrounding vehicles' lane-changing intentions is a critical challenge in deploying safe and efficient autonomous driving systems in open-world scenarios. Existing high-performing methods remain hard to deploy due to their high computational cost, long training times, and excessive memory requirements. Here, we propose an efficient lane-changing intention prediction approach based on brain-inspired Spiking Neural Networks (SNN). By leveraging the event-driven nature of SNN, the proposed approach enables us to encode the vehicle's states in a more efficient manner. Comparison experiments conducted on the HighD and NGSIM datasets demonstrate that our method significantly improves training efficiency and reduces deployment costs while maintaining comparable prediction accuracy. In particular, compared to the baseline, our approach reduces training time by 75% and memory usage by 99.9%. These results validate the efficiency and reliability of our method in lane-changing predictions, highlighting its potential for safe and efficient autonomous driving systems while offering significant advantages in deployment, including reduced training time, lower memory usage, and faster inference.
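
The event-driven encoding referred to above can be illustrated with a leaky integrate-and-fire (LIF) neuron, a common SNN building block. This is a minimal sketch under assumed settings; the time constant `tau`, the threshold `v_th`, and the hard reset are illustrative choices, and the abstract does not say which neuron model the paper uses.

```python
def lif_encode(inputs, tau=5.0, v_th=1.0, v_reset=0.0):
    """Encode a sequence of input currents as a binary spike train.

    A discrete-time leaky integrate-and-fire neuron (assumed model):
    the membrane potential relaxes toward the input and emits a spike
    whenever it crosses the threshold.
    """
    v = v_reset
    spikes = []
    for x in inputs:
        # Leaky integration: move the potential a fraction 1/tau toward x.
        v = v + (x - v) / tau
        if v >= v_th:
            spikes.append(1)
            v = v_reset  # hard reset after a spike
        else:
            spikes.append(0)
    return spikes

# A constant input of 2.0 needs several steps to reach the threshold.
spike_train = lif_encode([2.0, 2.0, 2.0, 2.0])
```

Because the neuron outputs only sparse binary events, downstream layers do work only when a spike occurs, which is the usual argument for the efficiency of SNNs.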

High-resolution on-road air pollution exposure informed by taxi-based mobile monitoring sensors

Dec 13, 2024

Abstract:Air pollutant exposure exhibits significant spatial and temporal variability, with localized hotspots, particularly in traffic microenvironments, posing health risks to commuters. Although widely used for air quality assessment, fixed-site monitoring stations are limited by sparse distribution, high costs, and maintenance needs, making them less effective in capturing on-road pollution levels. This study utilizes a fleet of 314 taxis equipped with sensors to measure NO2, PM2.5, and PM10 concentrations and identify high-exposure hotspots. The findings reveal disparities between mobile and stationary measurements, map the spatiotemporal exposure patterns, and highlight local hotspots. These results demonstrate the potential of mobile monitoring to provide fine-scale, on-road air pollution assessments, offering valuable insights for policymakers to design targeted interventions and protect public health, particularly for sensitive populations.

EcoFollower: An Environment-Friendly Car Following Model Considering Fuel Consumption

Jul 22, 2024

Abstract:To alleviate energy shortages and environmental impacts caused by transportation, this study introduces EcoFollower, a novel eco-car-following model developed using reinforcement learning (RL) to optimize fuel consumption in car-following scenarios. Employing the NGSIM datasets, the performance of EcoFollower was assessed in comparison with the well-established Intelligent Driver Model (IDM). The findings demonstrate that EcoFollower excels in simulating realistic driving behaviors, maintaining smooth vehicle operations, and closely matching the ground truth metrics of time-to-collision (TTC), headway, and comfort. Notably, the model achieved a significant reduction in fuel consumption, lowering it by 10.42% compared to actual driving scenarios. These results underscore the capability of RL-based models like EcoFollower to enhance autonomous vehicle algorithms, promoting safer and more energy-efficient driving strategies.
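
The IDM baseline and the TTC metric named in the abstract both have standard closed forms, sketched below. The parameter defaults (`v0`, `T`, `s0`, `a_max`, `b`, `delta`) are illustrative assumptions, not the calibration used in the study.

```python
import math

def idm_acceleration(v, v_lead, gap, v0=30.0, T=1.5, s0=2.0,
                     a_max=1.0, b=2.0, delta=4.0):
    """Standard Intelligent Driver Model acceleration in m/s^2.

    v, v_lead: follower and leader speeds (m/s); gap: bumper-to-bumper
    distance (m). Parameter defaults are assumed example values.
    """
    dv = v - v_lead  # approach rate, positive when closing in
    # Desired dynamic gap: standstill distance + time headway + braking term.
    s_star = s0 + max(0.0, v * T + v * dv / (2.0 * math.sqrt(a_max * b)))
    return a_max * (1.0 - (v / v0) ** delta - (s_star / gap) ** 2)

def time_to_collision(v, v_lead, gap):
    """TTC in seconds; infinite when the follower is not closing the gap."""
    closing = v - v_lead
    return gap / closing if closing > 0 else math.inf

# Follower at 20 m/s, leader at 10 m/s, 50 m apart.
ttc = time_to_collision(20.0, 10.0, 50.0)
accel = idm_acceleration(20.0, 10.0, 50.0)
```

An RL car-following policy such as the one described would replace `idm_acceleration` with a learned mapping from the same inputs, while TTC remains an evaluation metric.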

Harnessing Inherent Noises for Privacy Preservation in Quantum Machine Learning

Dec 18, 2023

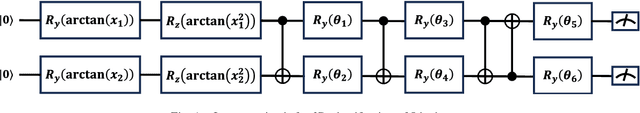

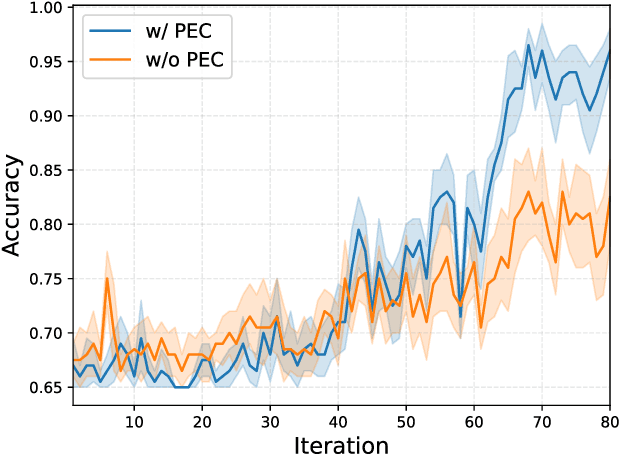

Abstract:Quantum computing revolutionizes the way of solving complex problems and handling vast datasets, which shows great potential to accelerate the machine learning process. However, data leakage in quantum machine learning (QML) may present privacy risks. Although differential privacy (DP), which protects privacy through the injection of artificial noise, is a well-established approach, its application in the QML domain remains under-explored. In this paper, we propose to harness inherent quantum noises to protect data privacy in QML. In particular, considering Noisy Intermediate-Scale Quantum (NISQ) devices, we leverage the unavoidable shot noise and incoherent noise in quantum computing to preserve the privacy of QML models for binary classification. We show analytically that the gradient of quantum circuit parameters in QML follows a Gaussian distribution, and derive the upper and lower bounds on its variance, which can potentially provide the DP guarantee. Through simulations, we show that a target privacy protection level can be achieved by running the quantum circuit a different number of times.
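
The link between the number of circuit runs and the privacy level can be illustrated with two textbook formulas: the shot-noise standard deviation of an estimated ±1 observable, and the classical Gaussian-mechanism bound. This is a rough sketch under assumptions, not the paper's derivation: it ignores incoherent noise, treats the noise level as fixed, and uses the classical bound that is valid only for epsilon below 1. The sensitivity and delta values are hypothetical.

```python
import math

def shot_noise_std(expectation, shots):
    """Std of the mean of `shots` measurements of a +/-1 observable."""
    # Each shot has variance 1 - <Z>^2; averaging divides it by `shots`.
    return math.sqrt((1.0 - expectation ** 2) / shots)

def gaussian_mechanism_epsilon(sigma, sensitivity, delta):
    """Epsilon implied by the classical Gaussian mechanism (valid for eps < 1)."""
    return sensitivity * math.sqrt(2.0 * math.log(1.25 / delta)) / sigma

# Fewer shots -> more noise -> smaller epsilon (stronger privacy).
for shots in (100, 10000):
    sigma = shot_noise_std(0.0, shots)
    eps = gaussian_mechanism_epsilon(sigma, sensitivity=0.01, delta=1e-5)
    print(shots, round(sigma, 4), round(eps, 3))
```

The sketch only shows the direction of the trade-off: reducing the shot count increases the inherent noise, which in turn lowers the achievable epsilon at the cost of a noisier estimate.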

BEVGPT: Generative Pre-trained Large Model for Autonomous Driving Prediction, Decision-Making, and Planning

Oct 16, 2023

Abstract:Prediction, decision-making, and motion planning are essential for autonomous driving. In most contemporary works, they are considered as individual modules or combined into a multi-task learning paradigm with a shared backbone but separate task heads. However, we argue that they should be integrated into a comprehensive framework. Although several recent approaches follow this scheme, they suffer from complicated input representations and redundant framework designs. More importantly, they cannot make long-term predictions about future driving scenarios. To address these issues, we rethink the necessity of each module in an autonomous driving task and incorporate only the required modules into a minimalist autonomous driving framework. We propose BEVGPT, a generative pre-trained large model that integrates driving scenario prediction, decision-making, and motion planning. The model takes bird's-eye-view (BEV) images as the only input source and makes driving decisions based on surrounding traffic scenarios. To ensure driving trajectory feasibility and smoothness, we develop an optimization-based motion planning method. We instantiate BEVGPT on the Lyft Level 5 Dataset and use Woven Planet L5Kit for realistic driving simulation. The effectiveness and robustness of the proposed framework are verified by the fact that it outperforms previous methods in 100% of decision-making metrics and 66% of motion planning metrics. Furthermore, the ability of our framework to accurately generate BEV images over the long term is demonstrated through the task of driving scenario prediction. To the best of our knowledge, this is the first generative pre-trained large model for autonomous driving prediction, decision-making, and motion planning with only BEV images as input.

Eve Said Yes: AirBone Authentication for Head-Wearable Smart Voice Assistant

Sep 26, 2023

Abstract:Recent advances in machine learning and natural language processing have fostered the enormous prosperity of smart voice assistants and their services, e.g., Alexa, Google Home, and Siri. However, voice spoofing attacks are deemed to be one of the major challenges of voice control security, and they keep evolving, for example through deep-learning-based voice conversion and speech synthesis techniques. To solve this problem outside the acoustic domain, we focus on head-wearable devices, such as earbuds and virtual reality (VR) headsets, which can continuously monitor the bone-conducted voice in the vibration domain. Specifically, we identify that air and bone conduction (AC/BC) from the same vocalization are coupled (or concurrent) and user-level unique, which makes them suitable behavior and biometric factors for multi-factor authentication (MFA). The legitimate user can defeat acoustic domain and even cross-domain spoofing samples with the proposed two-stage AirBone authentication. The first stage answers whether air and bone conduction utterances are time domain consistent (TC) and the second stage runs bone conduction speaker recognition (BC-SR). The security level is hence increased for two reasons: (1) current acoustic attacks on smart voice assistants cannot affect bone conduction, which is in the vibration domain; (2) even for advanced cross-domain attacks, the unique bone conduction features can detect an adversary's impersonation and machine-induced vibration. Finally, AirBone authentication has good usability (the same level as voice authentication) compared with traditional MFA and those specially designed to enhance smart voice security. Our experimental results show that the proposed AirBone authentication is usable and secure, and can be easily deployed on commercial off-the-shelf head wearables with good user experience.

Simple and Effective Relation-based Embedding Propagation for Knowledge Representation Learning

May 13, 2022

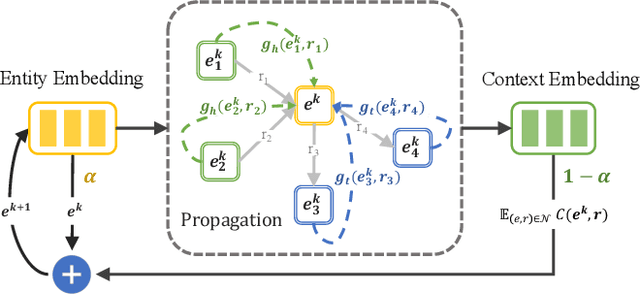

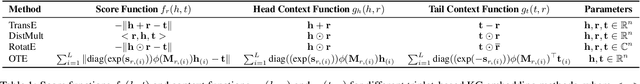

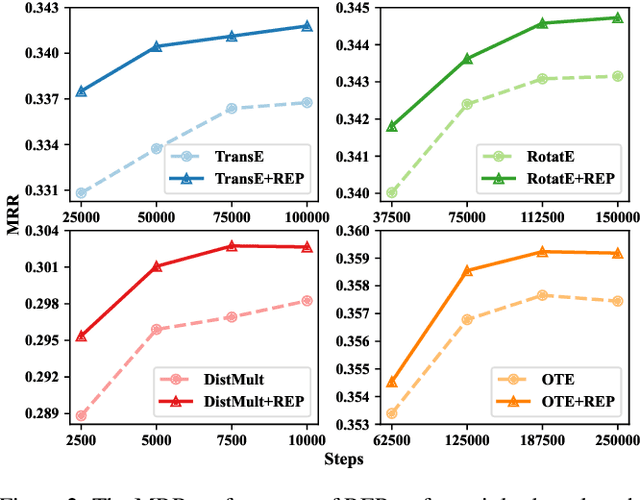

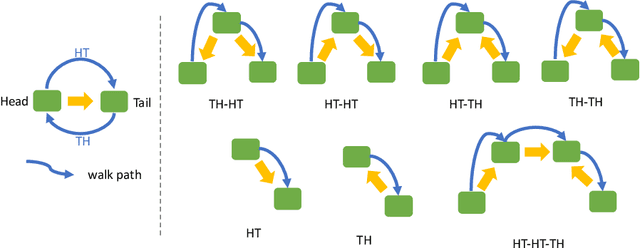

Abstract:Relational graph neural networks have garnered particular attention to encode graph context in knowledge graphs (KGs). Although they achieved competitive performance on small KGs, how to efficiently and effectively utilize graph context for large KGs remains an open problem. To this end, we propose the Relation-based Embedding Propagation (REP) method. It is a post-processing technique to adapt pre-trained KG embeddings with graph context. As relations in KGs are directional, we model the incoming head context and the outgoing tail context separately. Accordingly, we design relational context functions with no external parameters. Besides, we use averaging to aggregate context information, making REP more computation-efficient. We theoretically prove that such designs can avoid information distortion during propagation. Extensive experiments also demonstrate that REP has significant scalability while improving or maintaining prediction quality. Notably, on average it brings about a 10% relative improvement to triplet-based embedding methods on OGBL-WikiKG2 and takes 5%-83% of the time to achieve results comparable to the state-of-the-art GC-OTE.
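
The propagation idea, parameter-free relational context functions plus averaging, can be sketched for a TransE-style embedding. The context functions `h + r` and `t - r`, the mixing weight `alpha`, and the function name `rep_step` are assumptions made for illustration; the abstract does not give the exact update rule.

```python
def rep_step(ent, rel, triples, alpha=0.5):
    """One propagation step over pre-trained embeddings (no new parameters).

    ent, rel: dicts mapping ids to lists of floats; triples: (h, r, t) ids.
    Assumes TransE-style translation, where h + r should be close to t.
    """
    context = {e: [] for e in ent}
    for h, r, t in triples:
        # The tail receives the incoming head context h + r.
        context[t].append([a + b for a, b in zip(ent[h], rel[r])])
        # The head receives the outgoing tail context t - r.
        context[h].append([a - b for a, b in zip(ent[t], rel[r])])
    updated = {}
    for e, vec in ent.items():
        if not context[e]:
            updated[e] = list(vec)  # isolated entity: keep the embedding
            continue
        n = len(context[e])
        # Aggregate by plain averaging, then blend with the old embedding.
        mean = [sum(col) / n for col in zip(*context[e])]
        updated[e] = [(1 - alpha) * x + alpha * m for x, m in zip(vec, mean)]
    return updated

entities = {"a": [0.0, 0.0], "b": [1.0, 1.0], "c": [3.0, 3.0]}
relations = {"r": [1.0, 0.0]}
new_entities = rep_step(entities, relations, [("a", "r", "b")])
```

Since the step uses only additions and means over existing vectors, it needs no gradient updates, which is consistent with the post-processing framing in the abstract.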

NOTE: Solution for KDD-CUP 2021 WikiKG90M-LSC

Jul 05, 2021

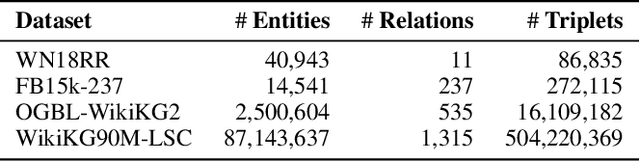

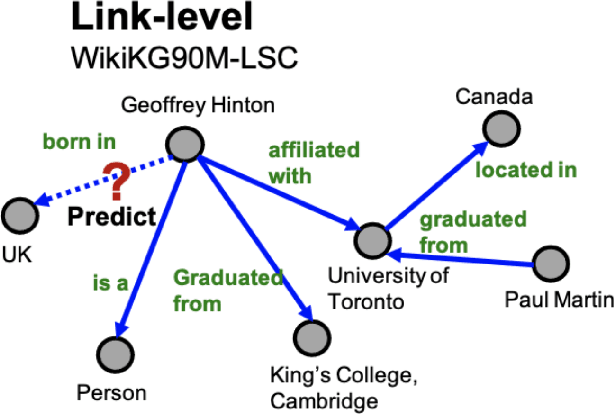

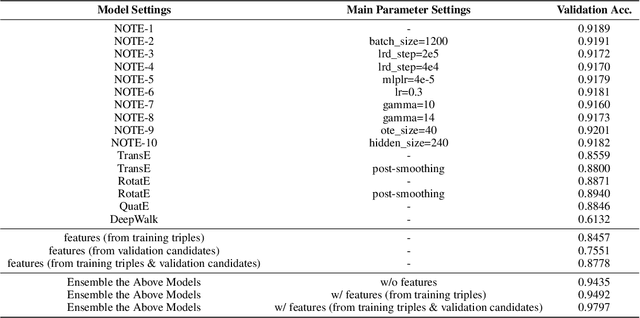

Abstract:WikiKG90M in KDD Cup 2021 is a large encyclopedic knowledge graph, which could benefit various downstream applications such as question answering and recommender systems. Participants are invited to complete the knowledge graph by predicting missing triplets. Recent representation learning methods have achieved great success on standard datasets like FB15k-237. Thus, we train the advanced algorithms in different domains to learn the triplets, including OTE, QuatE, RotatE and TransE. Significantly, we modified OTE into NOTE (short for Norm-OTE) for better performance. Besides, we use both DeepWalk and the post-smoothing technique to capture the graph structure for supplementation. In addition to the representations, we also use various statistical probabilities among the head entities, the relations and the tail entities for the final prediction. Experimental results show that the ensemble of state-of-the-art representation learning methods could draw on each other's strengths. We also develop feature engineering from validation candidates for further improvements. Please note that we apply the same strategy on the test set for final inference. These features may not be practical in the real world when considering ranking against all the entities.
