Dian Shi

Eve Said Yes: AirBone Authentication for Head-Wearable Smart Voice Assistant

Sep 26, 2023

Abstract: Recent advances in machine learning and natural language processing have driven the rapid growth of smart voice assistants and their services, e.g., Alexa, Google Home, and Siri. However, voice spoofing attacks are regarded as one of the major challenges to voice control security, and they keep evolving, for example through deep-learning-based voice conversion and speech synthesis techniques. To solve this problem outside the acoustic domain, we focus on head-wearable devices, such as earbuds and virtual reality (VR) headsets, which can continuously monitor the bone-conducted voice in the vibration domain. Specifically, we identify that air and bone conduction (AC/BC) from the same vocalization are coupled (or concurrent) and unique to each user, which makes them suitable behavioral and biometric factors for multi-factor authentication (MFA). With the proposed two-stage AirBone authentication, the legitimate user can defeat acoustic-domain and even cross-domain spoofing samples. The first stage answers *whether air and bone conduction utterances are time-domain consistent (TC)*, and the second stage runs *bone conduction speaker recognition (BC-SR)*. The security level is hence increased for two reasons: (1) current acoustic attacks on smart voice assistants cannot affect bone conduction, which is in the vibration domain; (2) even for advanced cross-domain attacks, the unique bone conduction features can detect an adversary's impersonation and machine-induced vibration. Finally, AirBone authentication offers good usability (the same level as voice authentication) compared with traditional MFA and with schemes specially designed to enhance smart voice security. Our experimental results show that the proposed AirBone authentication is usable and secure, and can be easily deployed on commercial off-the-shelf head wearables with good user experience.
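The first-stage idea, checking that the air- and bone-conducted recordings of one utterance line up in time, can be illustrated with a generic signal-processing sketch. This is not the paper's method: the function name `time_consistency_score` and the use of a normalized cross-correlation peak are assumptions chosen only to show what a time-consistency test might look like.

```python
import numpy as np

def time_consistency_score(air, bone):
    """Peak of the normalized cross-correlation of two equal-rate signals.

    Hypothetical helper (not from the paper). Returns a value in [0, 1];
    values near 1 mean one signal is close to a scaled, shifted copy of
    the other.
    """
    air = np.asarray(air, dtype=float)
    bone = np.asarray(bone, dtype=float)
    # Remove the DC offset so a constant bias does not inflate the score.
    air = air - air.mean()
    bone = bone - bone.mean()
    denom = np.linalg.norm(air) * np.linalg.norm(bone)
    if denom == 0.0:
        return 0.0  # a silent channel carries no evidence of consistency
    # Correlation at every lag; Cauchy-Schwarz bounds each value by denom.
    xcorr = np.correlate(air, bone, mode="full")
    return float(np.max(np.abs(xcorr)) / denom)
```

A verifier built on such a score would compare it against a threshold tuned on real recordings; the threshold and any preprocessing (for example, comparing amplitude envelopes rather than raw waveforms) are left open here.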

Energy and Spectrum Efficient Federated Learning via High-Precision Over-the-Air Computation

Aug 15, 2022

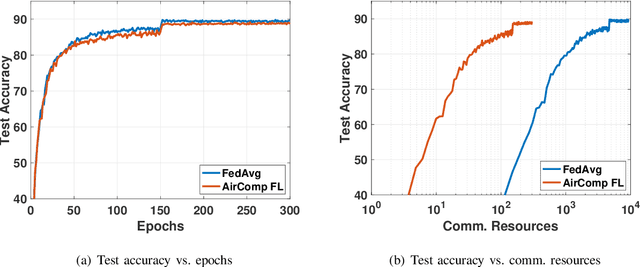

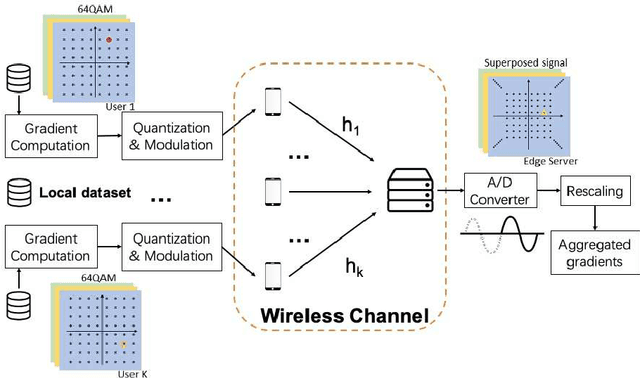

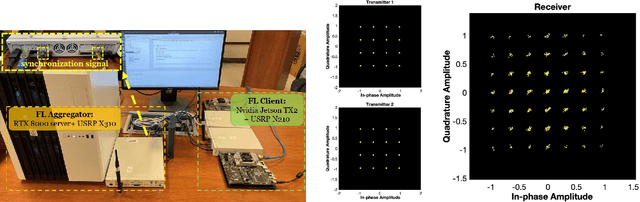

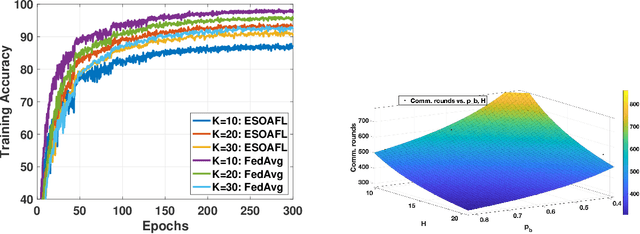

Abstract: Federated learning (FL) enables mobile devices to collaboratively learn a shared prediction model while keeping data local. However, there are two major research challenges in practically deploying FL over mobile devices: (i) frequent wireless updates of large gradients vs. limited spectrum resources, and (ii) energy-hungry FL communication and local computing during training vs. battery-constrained mobile devices. To address these challenges, in this paper, we propose a novel multi-bit over-the-air computation (M-AirComp) approach for spectrum-efficient aggregation of local model updates in FL and further present an energy-efficient FL design for mobile devices. Specifically, a high-precision digital modulation scheme is designed and incorporated in M-AirComp, allowing mobile devices to upload model updates at the selected positions simultaneously in the multi-access channel. Moreover, we theoretically analyze the convergence property of our FL algorithm. Guided by the FL convergence analysis, we formulate a joint transmission probability and local computing control optimization, aiming to minimize the overall energy consumption (i.e., iterative local computing + multi-round communications) of mobile devices in FL. Extensive simulation results show that our proposed scheme outperforms existing ones in terms of spectrum utilization, energy efficiency, and learning accuracy.

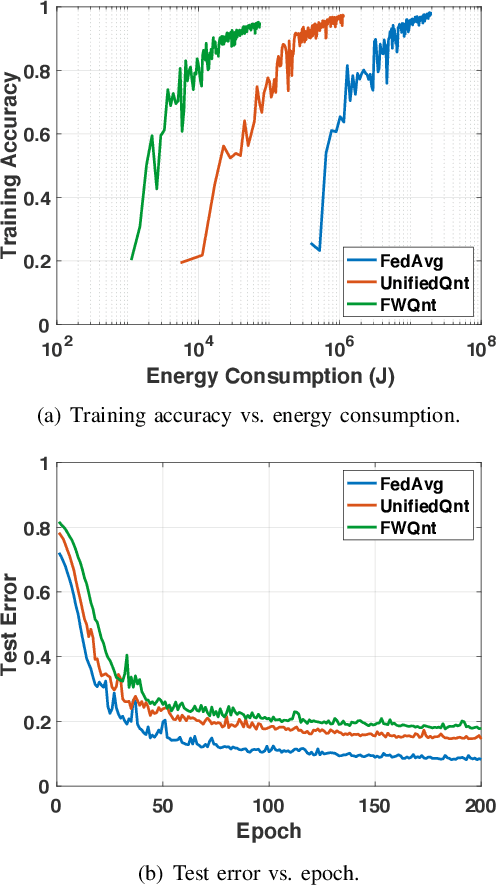

Service Delay Minimization for Federated Learning over Mobile Devices

May 19, 2022

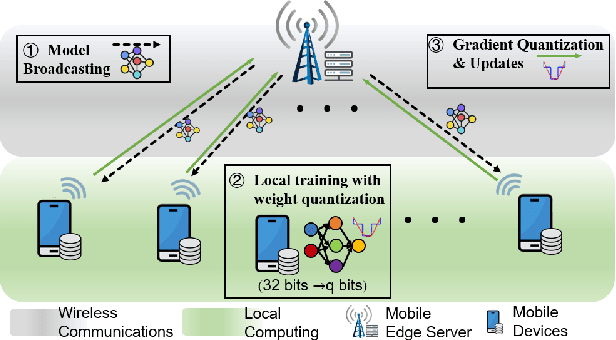

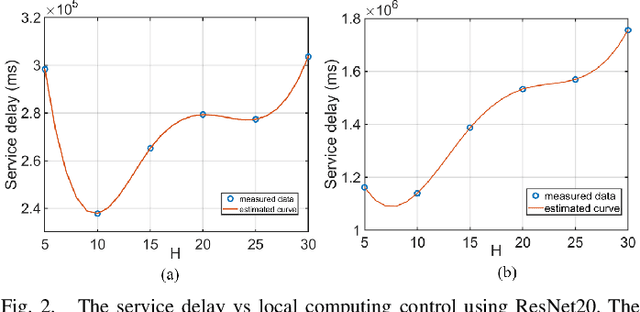

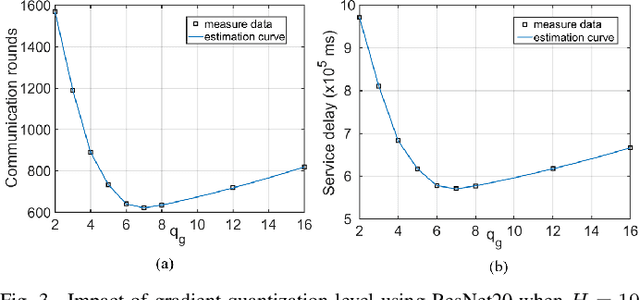

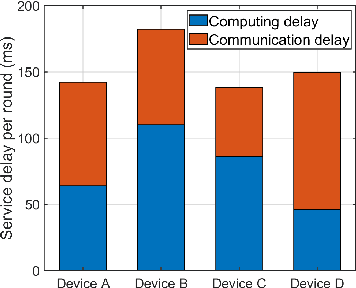

Abstract: Federated learning (FL) over mobile devices has fostered numerous intriguing applications/services, many of which are delay-sensitive. In this paper, we propose a service-delay-efficient FL (SDEFL) scheme over mobile devices. Unlike traditional communication-efficient FL, which regards wireless communications as the bottleneck, we find that in many situations the local computing delay is comparable to the communication delay during the FL training process, given the development of high-speed wireless transmission techniques. Thus, the service delay in FL should be the computing delay plus the communication delay over all training rounds. To minimize the service delay of FL, simply reducing the local computing or communication delay independently is not enough; the delay trade-off between local computing and wireless communications must be considered. In addition, we empirically study the impacts of local computing control and compression strategies (i.e., the number of local updates, weight quantization, and gradient quantization) on computing, communication, and service delays. Based on these trade-off observations and empirical studies, we develop an optimization scheme to minimize the service delay of FL over heterogeneous devices. We establish testbeds and conduct extensive emulations/experiments to verify our theoretical analysis. The results show that SDEFL notably reduces service delay with a small accuracy drop compared to peer designs.
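The delay accounting described in this abstract (service delay as computing delay plus communication delay, summed over training rounds) can be made concrete with a toy model. The sketch below is not the SDEFL optimization: the class `RoundCost`, the function `service_delay`, and every number in the usage lines are hypothetical and only illustrate why the two delays must be traded off jointly.

```python
from dataclasses import dataclass

@dataclass
class RoundCost:
    """Hypothetical per-device costs, in seconds."""
    compute_s_per_local_update: float  # time for one local update step
    comm_s_per_round: float            # time to upload/download one model update

def service_delay(rounds, local_updates, cost):
    """Total delay = rounds * (local computing delay + communication delay)."""
    per_round = (local_updates * cost.compute_s_per_local_update
                 + cost.comm_s_per_round)
    return rounds * per_round

# Made-up scenario: doing more local updates per round lengthens each round
# but is assumed to reduce the number of rounds needed to converge.
cost = RoundCost(compute_s_per_local_update=0.25, comm_s_per_round=1.0)
few_local_steps = service_delay(rounds=100, local_updates=1, cost=cost)
many_local_steps = service_delay(rounds=40, local_updates=5, cost=cost)
```

Under these assumed numbers the second configuration finishes sooner even though each of its rounds is slower, which is the kind of trade-off the abstract argues cannot be captured by minimizing either delay on its own.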

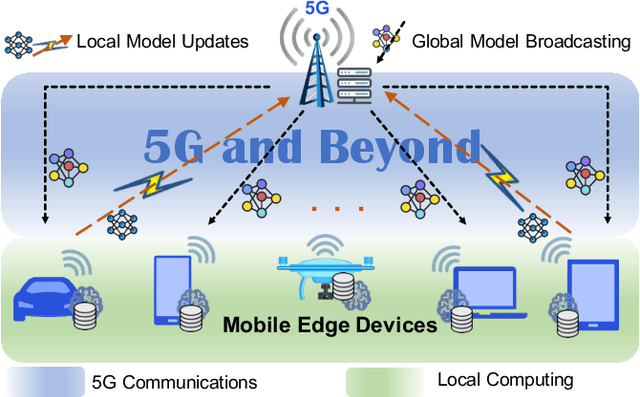

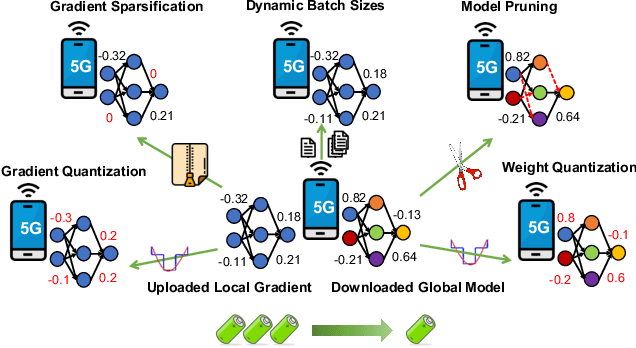

Towards Energy Efficient Federated Learning over 5G+ Mobile Devices

Jan 13, 2021

Abstract: The continuous convergence of machine learning algorithms, 5G and beyond (5G+) wireless communications, and artificial intelligence (AI) hardware implementation hastens the birth of federated learning (FL) over 5G+ mobile devices, which pushes AI functions to mobile devices and initiates a new era of on-device AI applications. Despite the remarkable progress made in FL, huge energy consumption is one of the most significant obstacles restricting the development of FL over battery-constrained 5G+ mobile devices. To address this issue, in this paper, we investigate how to develop energy-efficient FL over 5G+ mobile devices by making a trade-off between the energy consumed for "working" (i.e., local computing) and that for "talking" (i.e., wireless communications) in order to boost the overall energy efficiency. Specifically, we first examine energy consumption models for graphics processing unit (GPU) computation and wireless transmissions. Then, we overview the state of the art in integrating the FL procedure with energy-efficient learning techniques (e.g., gradient sparsification, weight quantization, and pruning). Finally, we present several potential future research directions for FL over 5G+ mobile devices from the perspective of energy efficiency.
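Of the energy-efficient learning techniques listed in this abstract, gradient sparsification is the simplest to illustrate. The sketch below shows the widely used top-k variant, which transmits only the k largest-magnitude gradient entries; it is a generic illustration rather than a scheme taken from the paper, and the function name `top_k_sparsify` is an assumption.

```python
import numpy as np

def top_k_sparsify(grad, k):
    """Keep the k largest-magnitude entries of grad and zero out the rest.

    Generic top-k sparsification (hypothetical helper, not from the paper).
    """
    grad = np.asarray(grad, dtype=float)
    flat = grad.ravel()
    if k <= 0:
        return np.zeros_like(grad)
    if k >= flat.size:
        return grad.copy()
    # argpartition places the indices of the k largest magnitudes last.
    keep = np.argpartition(np.abs(flat), -k)[-k:]
    sparse = np.zeros_like(flat)
    sparse[keep] = flat[keep]
    return sparse.reshape(grad.shape)
```

Sending fewer entries reduces the energy spent on "talking", usually at the cost of extra training iterations, and therefore extra "working", to reach the same accuracy.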

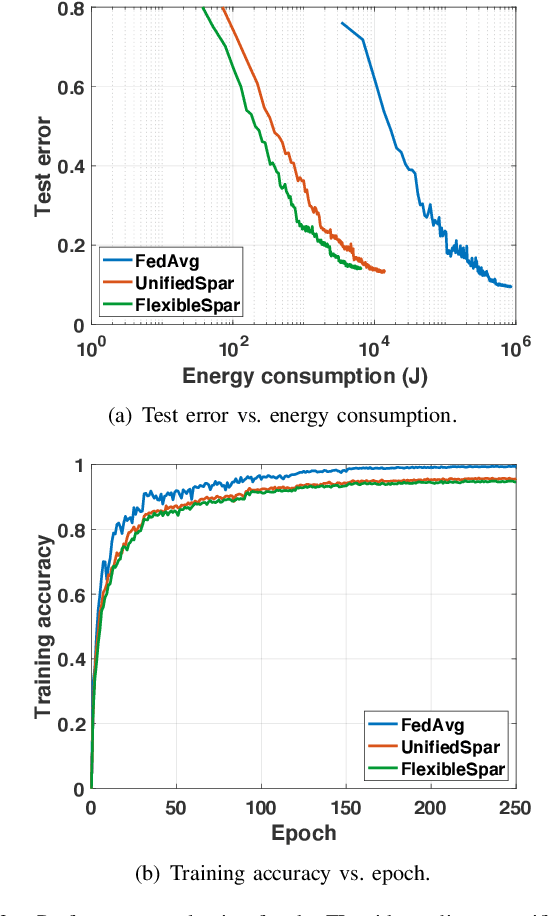

To Talk or to Work: Flexible Communication Compression for Energy Efficient Federated Learning over Heterogeneous Mobile Edge Devices

Dec 22, 2020

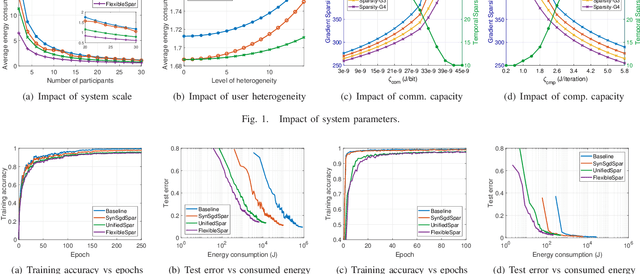

Abstract: Recent advances in machine learning, wireless communication, and mobile hardware technologies enable federated learning (FL) over massive numbers of mobile edge devices, which opens new horizons for numerous intelligent mobile applications. Despite the potential benefits, FL imposes heavy communication and computation burdens on participating devices due to periodic global synchronization and continuous local training, raising great challenges for battery-constrained mobile devices. In this work, we aim to improve the energy efficiency of FL over mobile edge networks to accommodate heterogeneous participating devices without sacrificing the learning performance. To this end, we develop a convergence-guaranteed FL algorithm enabling flexible communication compression. Guided by the derived convergence bound, we design a compression control scheme to balance the energy consumption of local computing (i.e., "working") and wireless communication (i.e., "talking") from the long-term learning perspective. In particular, the compression parameters are carefully chosen for FL participants according to their computing and communication environments. Extensive simulations are conducted using various datasets to validate our theoretical analysis, and the results also demonstrate the efficacy of the proposed scheme in saving energy.
