Dongqi Han

VidGuard-R1: AI-Generated Video Detection and Explanation via Reasoning MLLMs and RL

Oct 02, 2025

Abstract: With the rapid advancement of AI-generated videos, there is an urgent need for effective detection tools to mitigate societal risks such as misinformation and reputational harm. In addition to accurate classification, it is essential that detection models provide interpretable explanations to ensure transparency for regulators and end users. To address these challenges, we introduce VidGuard-R1, the first video authenticity detector that fine-tunes a multi-modal large language model (MLLM) using group relative policy optimization (GRPO). Our model delivers both highly accurate judgments and insightful reasoning. We curate a challenging dataset of 140k real and AI-generated videos produced by state-of-the-art generation models, carefully designing the generation process to maximize discrimination difficulty. We then fine-tune Qwen-VL using GRPO with two specialized reward models that target temporal artifacts and generation complexity. Extensive experiments demonstrate that VidGuard-R1 achieves state-of-the-art zero-shot performance on existing benchmarks, with additional training pushing accuracy above 95%. Case studies further show that VidGuard-R1 produces precise and interpretable rationales behind its predictions. The code is publicly available at https://VidGuard-R1.github.io.

Do Not Let Low-Probability Tokens Over-Dominate in RL for LLMs

May 19, 2025

Abstract: Reinforcement learning (RL) has become a cornerstone for enhancing the reasoning capabilities of large language models (LLMs), with recent innovations such as Group Relative Policy Optimization (GRPO) demonstrating exceptional effectiveness. In this study, we identify a critical yet underexplored issue in RL training: low-probability tokens disproportionately influence model updates due to their large gradient magnitudes. This dominance hinders the effective learning of high-probability tokens, whose gradients are essential for LLMs' performance but are substantially suppressed. To mitigate this interference, we propose two novel methods: Advantage Reweighting and Low-Probability Token Isolation (Lopti), both of which effectively attenuate gradients from low-probability tokens while emphasizing parameter updates driven by high-probability tokens. Our approaches promote balanced updates across tokens with varying probabilities, thereby enhancing the efficiency of RL training. Experimental results demonstrate that they substantially improve the performance of GRPO-trained LLMs, achieving up to a 46.2% improvement in K&K Logic Puzzle reasoning tasks. Our implementation is available at https://github.com/zhyang2226/AR-Lopti.
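The claim that low-probability tokens carry larger gradients can be illustrated at the level of logits alone. The sketch below is not the paper's analysis or implementation: it only uses the standard identity that the gradient of log p(token) with respect to the softmax logits equals onehot(token) - p, and the logit values are made up for illustration.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def grad_log_prob_norm(logits, token):
    """L2 norm of d log p(token) / d logits, which equals onehot(token) - p."""
    probs = softmax(logits)
    grad = [(1.0 if i == token else 0.0) - p for i, p in enumerate(probs)]
    return math.sqrt(sum(g * g for g in grad))

# Hypothetical logits: token 0 is very likely, token 3 is very unlikely.
logits = [4.0, 1.0, 0.0, -1.0]
probs = softmax(logits)
high_norm = grad_log_prob_norm(logits, 0)  # gradient norm for the likely token
low_norm = grad_log_prob_norm(logits, 3)   # gradient norm for the unlikely token

print(f"p(high)={probs[0]:.3f}, grad norm={high_norm:.3f}")
print(f"p(low)={probs[3]:.3f}, grad norm={low_norm:.3f}")
```

In this toy setting the unlikely token produces a much larger logit-gradient norm than the likely one. How that carries over to parameter gradients in a full LLM is what the paper itself examines.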

Habitizing Diffusion Planning for Efficient and Effective Decision Making

Feb 10, 2025

Abstract: Diffusion models have shown great promise in decision-making, an approach known as diffusion planning. However, their slow inference speed limits their potential for broader real-world applications. Here, we introduce Habi, a general framework that transforms powerful but slow diffusion planning models into fast decision-making models, mimicking the cognitive process in the brain by which costly goal-directed behavior gradually transitions to efficient habitual behavior through repetitive practice. Even using a laptop CPU, the habitized model can achieve an average 800+ Hz decision-making frequency (faster than previous diffusion planners by orders of magnitude) on the standard offline reinforcement learning benchmark D4RL, while maintaining comparable or even higher performance compared to its corresponding diffusion planner. Our work proposes a fresh perspective on leveraging powerful diffusion models for real-world decision-making tasks. We also provide robust evaluations and analysis, offering insights from both biological and engineering perspectives for efficient and effective decision-making.

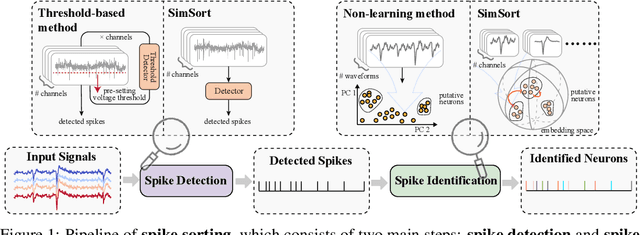

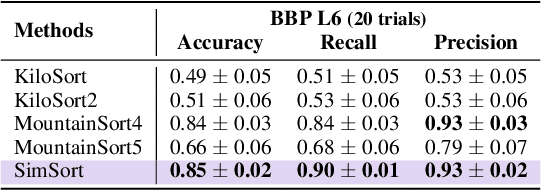

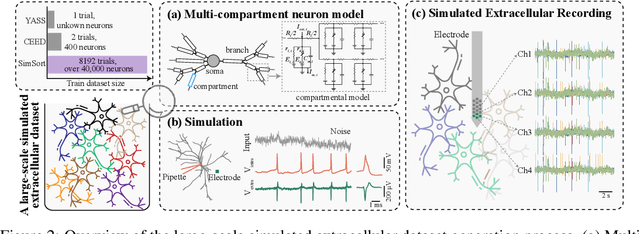

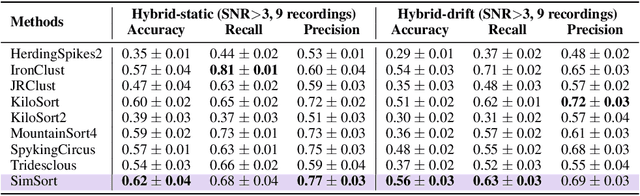

SimSort: A Powerful Framework for Spike Sorting by Large-Scale Electrophysiology Simulation

Feb 05, 2025

Abstract: Spike sorting is an essential process in neural recording, which identifies and separates electrical signals from individual neurons recorded by electrodes in the brain, enabling researchers to study how specific neurons communicate and process information. Although there exist a number of spike sorting methods which have contributed to significant neuroscientific breakthroughs, many are heuristically designed, making it challenging to verify their correctness due to the difficulty of obtaining ground truth labels from real-world neural recordings. In this work, we explore a data-driven, deep learning-based approach. We begin by creating a large-scale dataset through electrophysiology simulations using biologically realistic computational models. We then present SimSort, a pretraining framework for spike sorting. Remarkably, when trained on our simulated dataset, SimSort demonstrates strong zero-shot generalization to real-world spike sorting tasks, significantly outperforming existing methods. Our findings underscore the potential of data-driven techniques to enhance the reliability and scalability of spike sorting in experimental neuroscience.

Toward Relative Positional Encoding in Spiking Transformers

Jan 28, 2025

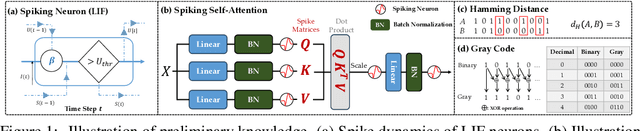

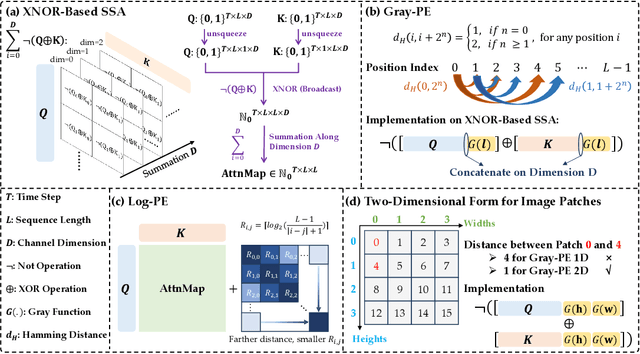

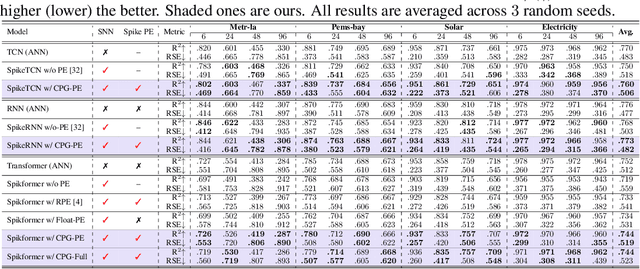

Abstract: Spiking neural networks (SNNs) are bio-inspired networks that model how neurons in the brain communicate through discrete spikes, and they have great potential in various tasks due to their energy efficiency and temporal processing capabilities. SNNs with self-attention mechanisms (Spiking Transformers) have recently shown great advancements in various tasks such as sequential modeling and image classification. However, integrating positional information, which is essential for capturing sequential relationships in data, remains a challenge in Spiking Transformers. In this paper, we introduce an approximate method for relative positional encoding (RPE) in Spiking Transformers, leveraging Gray Code as the foundation for our approach. We provide a comprehensive proof of the method's effectiveness in partially capturing relative positional information for sequential tasks. Additionally, we extend our RPE approach by adapting it to a two-dimensional form suitable for image patch processing. We evaluate the proposed RPE methods on several tasks, including time series forecasting, text classification, and patch-based image classification. Our experimental results demonstrate that the incorporation of RPE significantly enhances performance by effectively capturing relative positional information.
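For readers unfamiliar with Gray code, the sketch below shows the standard binary-reflected construction and the property that makes it attractive for binary (spike-like) position codes: adjacent positions differ in exactly one bit. The abstract does not describe how the paper builds its RPE from Gray code, so this illustrates only the underlying code, and the helper names `gray` and `hamming` are chosen here for illustration.

```python
def gray(n: int) -> int:
    """Binary-reflected Gray code of a non-negative integer."""
    return n ^ (n >> 1)

def hamming(a: int, b: int) -> int:
    """Number of bit positions in which a and b differ."""
    return bin(a ^ b).count("1")

# Gray codes for positions 0..7, shown as 3-bit strings.
codes = [gray(i) for i in range(8)]
print([format(c, "03b") for c in codes])

# Consecutive positions always differ in exactly one bit.
adjacent = [hamming(codes[i], codes[i + 1]) for i in range(7)]
print(adjacent)
```

Plain binary counting lacks this property: positions 3 (`011`) and 4 (`100`) differ in all three bits, whereas their Gray codes `010` and `110` differ in one.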

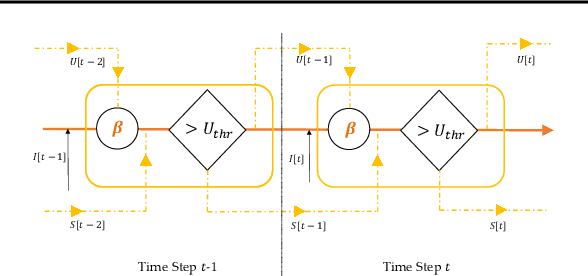

The VEP Booster: A Closed-Loop AI System for Visual EEG Biomarker Auto-generation

Jul 21, 2024

Abstract: Effective visual brain-machine interfaces (BMIs) are based on reliable and stable EEG biomarkers. However, traditional adaptive filter-based approaches may suffer from individual variations in EEG signals, while deep neural network-based approaches may be hindered by the non-stationarity of EEG signals caused by biomarker attenuation and background oscillations. To address these challenges, we propose the Visual Evoked Potential Booster (VEP Booster), a novel closed-loop AI framework that generates reliable and stable EEG biomarkers under visual stimulation protocols. Our system leverages an image generator to refine stimulus images based on real-time feedback from human EEG signals, generating visual stimuli tailored to the preferences of primary visual cortex (V1) neurons and enabling effective targeting of neurons most responsive to stimuli. We validated our approach by implementing a system and employing steady-state visual evoked potential (SSVEP) visual protocols in five human subjects. Our results show significant enhancements in the reliability and utility of EEG biomarkers for all individuals, with the largest improvement in SSVEP response being 105%, the smallest being 28%, and the average increase being 76.5%. These promising results have implications for both clinical and technological applications.

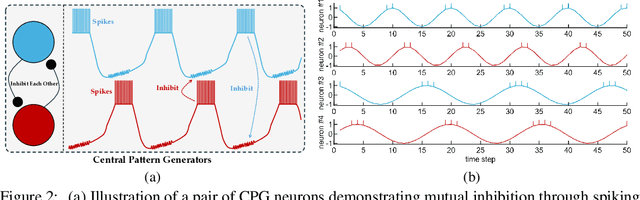

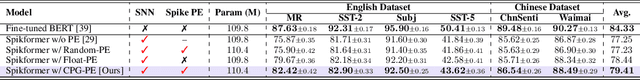

Advancing Spiking Neural Networks for Sequential Modeling with Central Pattern Generators

May 23, 2024

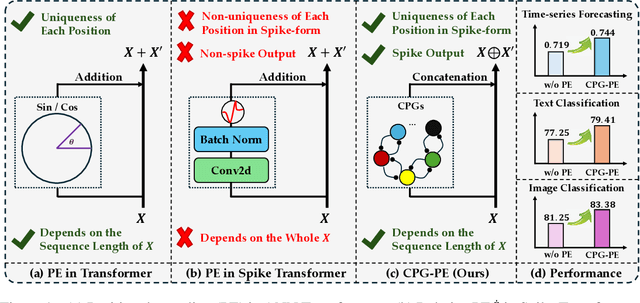

Abstract: Spiking neural networks (SNNs) represent a promising approach to developing artificial neural networks that are both energy-efficient and biologically plausible. However, applying SNNs to sequential tasks, such as text classification and time-series forecasting, has been hindered by the challenge of creating an effective and hardware-friendly spike-form positional encoding (PE) strategy. Drawing inspiration from the central pattern generators (CPGs) in the human brain, which produce rhythmic patterned outputs without requiring rhythmic inputs, we propose a novel PE technique for SNNs, termed CPG-PE. We demonstrate that the commonly used sinusoidal PE is mathematically a specific solution to the membrane potential dynamics of a particular CPG. Moreover, extensive experiments across various domains, including time-series forecasting, natural language processing, and image classification, show that SNNs with CPG-PE outperform their conventional counterparts. Additionally, we perform analysis experiments to elucidate the mechanism through which SNNs encode positional information and to explore the function of CPGs in the human brain. This investigation may offer valuable insights into the fundamental principles of neural computation.

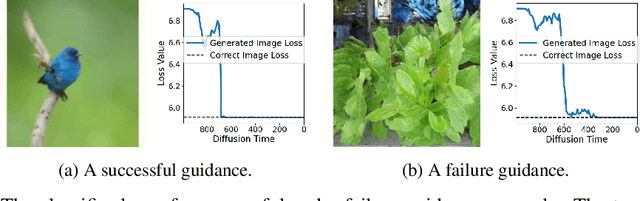

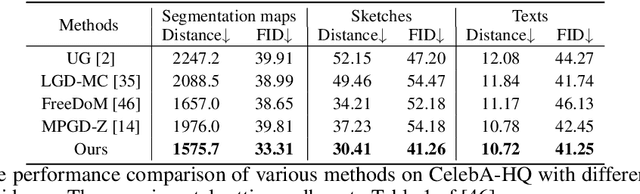

Understanding Training-free Diffusion Guidance: Mechanisms and Limitations

Mar 19, 2024

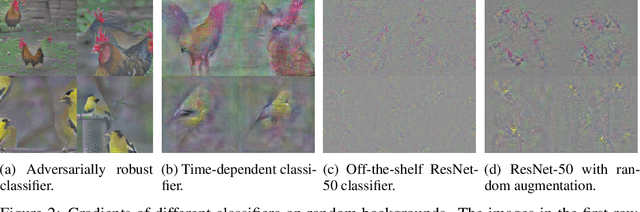

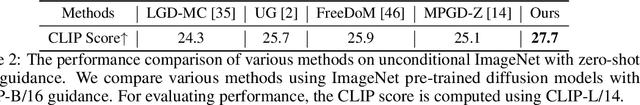

Abstract: Adding control to pretrained diffusion models has become an increasingly popular research area, with extensive applications in computer vision, reinforcement learning, and AI for science. Recently, several studies have proposed training-free diffusion guidance by using off-the-shelf networks pretrained on clean images. This approach enables zero-shot conditional generation for universal control formats, which appears to offer a free lunch in diffusion guidance. In this paper, we aim to develop a deeper understanding of the operational mechanisms and fundamental limitations of training-free guidance. We offer a theoretical analysis that supports training-free guidance from the perspective of optimization, distinguishing it from classifier-based (or classifier-free) guidance. To elucidate their drawbacks, we theoretically demonstrate that training-free methods are more susceptible to adversarial gradients and exhibit slower convergence rates compared to classifier guidance. We then introduce a collection of techniques designed to overcome the limitations, accompanied by theoretical rationale and empirical evidence. Our experiments in image and motion generation confirm the efficacy of these techniques.

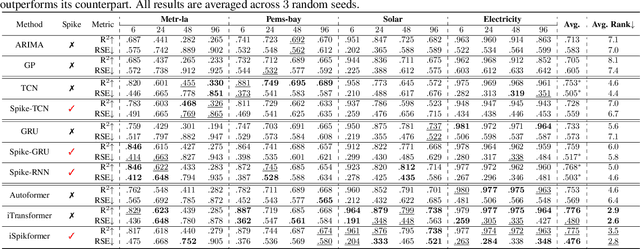

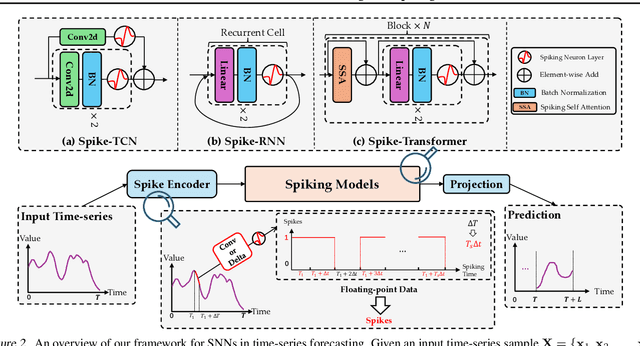

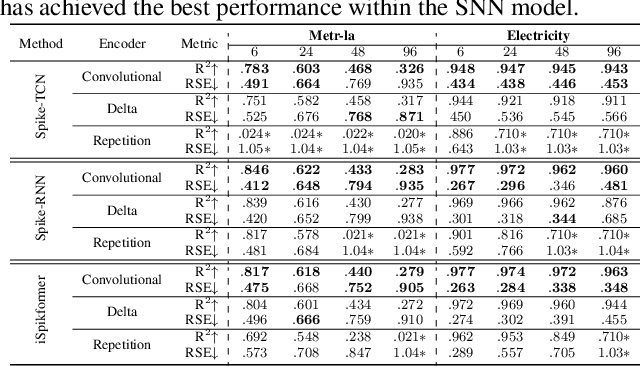

Efficient and Effective Time-Series Forecasting with Spiking Neural Networks

Feb 02, 2024

Abstract: Spiking neural networks (SNNs), inspired by the spiking behavior of biological neurons, provide a unique pathway for capturing the intricacies of temporal data. However, applying SNNs to time-series forecasting is challenging due to difficulties in effective temporal alignment, complexities in encoding processes, and the absence of standardized guidelines for model selection. In this paper, we propose a framework for SNNs in time-series forecasting tasks, leveraging the efficiency of spiking neurons in processing temporal information. Through a series of experiments, we demonstrate that our proposed SNN-based approaches achieve comparable or superior results to traditional time-series forecasting methods on diverse benchmarks with much less energy consumption. Furthermore, we conduct detailed analysis experiments to assess the SNN's capacity to capture temporal dependencies within time-series data, offering valuable insights into its nuanced strengths and effectiveness in modeling the intricate dynamics of temporal data. Our study contributes to the expanding field of SNNs and offers a promising alternative for time-series forecasting tasks, presenting a pathway for the development of more biologically inspired and temporally aware forecasting models.

Toward Open-ended Embodied Tasks Solving

Dec 10, 2023

Abstract: Empowering embodied agents, such as robots, with Artificial Intelligence (AI) has become increasingly important in recent years. A major challenge is task open-endedness. In practice, robots often need to perform tasks with novel goals that are multifaceted, dynamic, lack a definitive "end-state", and were not encountered during training. To tackle this problem, this paper introduces Diffusion for Open-ended Goals (DOG), a novel framework designed to enable embodied AI to plan and act flexibly and dynamically for open-ended task goals. DOG synergizes the generative prowess of diffusion models with state-of-the-art, training-free guidance techniques to adaptively perform online planning and control. Our evaluations demonstrate that DOG can handle various kinds of novel task goals not seen during training, in both maze navigation and robot control problems. Our work sheds light on enhancing embodied AI's adaptability and competency in tackling open-ended goals.
