Junwen Luo

The VEP Booster: A Closed-Loop AI System for Visual EEG Biomarker Auto-generation

Jul 21, 2024

Abstract:Effective visual brain-machine interfaces (BMIs) are based on reliable and stable EEG biomarkers. However, traditional adaptive filter-based approaches may suffer from individual variations in EEG signals, while deep neural network-based approaches may be hindered by the non-stationarity of EEG signals caused by biomarker attenuation and background oscillations. To address these challenges, we propose the Visual Evoked Potential Booster (VEP Booster), a novel closed-loop AI framework that generates reliable and stable EEG biomarkers under visual stimulation protocols. Our system leverages an image generator to refine stimulus images based on real-time feedback from human EEG signals, generating visual stimuli tailored to the preferences of primary visual cortex (V1) neurons and enabling effective targeting of the neurons most responsive to the stimuli. We validated our approach by implementing a system and employing steady-state visual evoked potential (SSVEP) visual protocols in five human subjects. Our results show significant enhancements in the reliability and utility of EEG biomarkers for all individuals, with the largest improvement in SSVEP response being 105%, the smallest being 28%, and the average increase being 76.5%. These promising results have implications for both clinical and technological applications.

A Consumer-tier based Visual-Brain Machine Interface for Augmented Reality Glasses Interactions

Aug 29, 2023

Abstract:Objective. Visual-Brain Machine Interfaces (V-BMI) have provided a novel interaction technique for the Augmented Reality (AR) industry. Several state-of-the-art works have demonstrated their high accuracy and real-time interaction capabilities. However, most studies employ EEG devices that are rigid and difficult to apply in real-life AR glasses application scenarios. Here we develop a consumer-tier Visual-Brain Machine Interface (V-BMI) system specialized for Augmented Reality (AR) glasses interactions. Approach. The developed system consists of wearable hardware that offers fast set-up, reliable recording and a comfortable wearing experience tailored to AR glasses applications. Complementing this hardware, we have devised a software framework that facilitates real-time interactions within the system while accommodating a modular configuration to enhance scalability. Main results. The developed hardware weighs only 110 g, measures 120 x 85 x 23 mm, and has an impedance of 1 TΩ and a peak-to-peak voltage below 1.5 µV. A V-BMI-based Angry Birds game and an Internet of Things (IoT) AR application were designed, with which we demonstrated the technology's merits of intuitive experience and efficient interaction. The real-time interaction accuracy is between 85% and 96% on commercial AR glasses (DTI of 2.24 s and ITR of 65 bits/min). Significance. Our study indicates that the developed system can provide an essential hardware-software framework for consumer-based V-BMI AR glasses. We also derive several pivotal design factors for a consumer-grade V-BMI-based AR system: 1) dynamic adaptation of stimulation patterns and classification methods via computer vision algorithms is necessary for AR glasses applications; and 2) algorithmic localization is needed to foster system stability and latency reduction.
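For readers unfamiliar with the ITR figure quoted above: information transfer rate in BMI studies is often computed with the Wolpaw formula, which combines the number of selectable targets, the selection accuracy and the time per selection. The abstract does not say which formula or how many targets were used, so the sketch below is only an illustration of that common definition. The function name and the example inputs (8 targets, 90% accuracy) are hypothetical and are not taken from the paper.

```python
import math


def wolpaw_itr_bits_per_min(n_targets, accuracy, seconds_per_selection):
    """Information transfer rate (bits/min) under the Wolpaw definition.

    n_targets: number of selectable targets (assumed equally likely).
    accuracy: probability of a correct selection, in [0, 1].
    seconds_per_selection: average time needed for one selection.
    """
    if n_targets < 2:
        raise ValueError("n_targets must be at least 2")
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must lie in [0, 1]")
    if seconds_per_selection <= 0:
        raise ValueError("seconds_per_selection must be positive")

    # Bits per selection: log2(N) + P*log2(P) + (1-P)*log2((1-P)/(N-1)).
    bits = math.log2(n_targets)
    if accuracy > 0.0:
        bits += accuracy * math.log2(accuracy)
    if accuracy < 1.0:
        bits += (1.0 - accuracy) * math.log2((1.0 - accuracy) / (n_targets - 1))

    # Convert bits per selection to bits per minute.
    return bits * 60.0 / seconds_per_selection


# Hypothetical inputs: 8 targets and 90% accuracy are assumptions,
# only the 2.24 s interval appears in the abstract.
example_itr = wolpaw_itr_bits_per_min(8, 0.90, 2.24)
```

Under this definition, ITR rises with accuracy and target count and falls as each selection takes longer, which is why the abstract reports accuracy, timing and ITR together.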

The Spike Gating Flow: A Hierarchical Structure Based Spiking Neural Network for Online Gesture Recognition

Jun 07, 2022

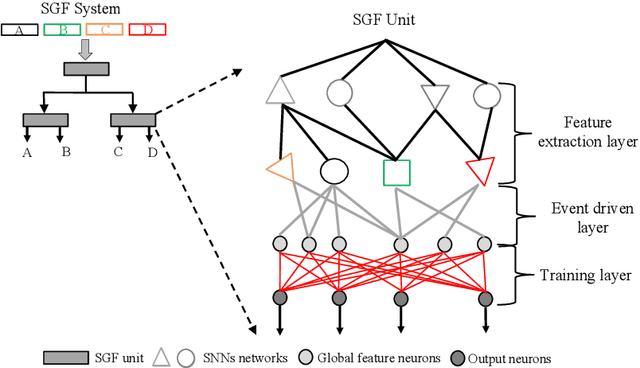

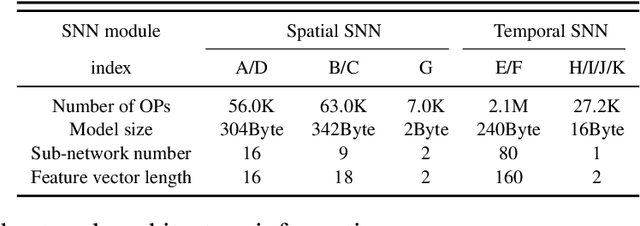

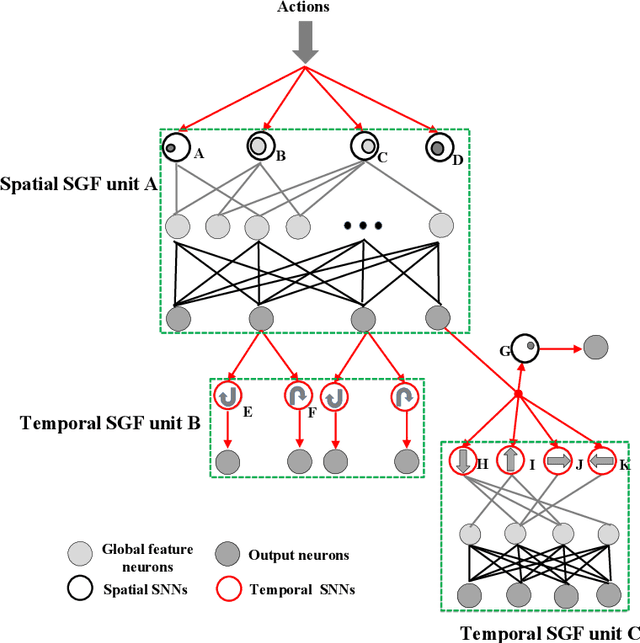

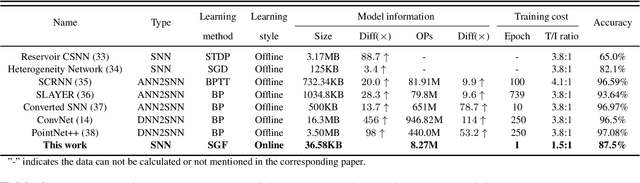

Abstract:Action recognition is an exciting research avenue for artificial intelligence since it may be a game changer in emerging industrial fields such as robotic vision and automobiles. However, current deep learning faces major challenges in such applications because of its huge computational cost and inefficient learning. Hence, we develop a novel brain-inspired Spiking Neural Network (SNN) based system titled Spiking Gating Flow (SGF) for online action learning. The developed system consists of multiple SGF units which are assembled in a hierarchical manner. A single SGF unit involves three layers: a feature extraction layer, an event-driven layer and a histogram-based training layer. To demonstrate the capabilities of the developed system, we employ a standard Dynamic Vision Sensor (DVS) gesture classification task as a benchmark. The results indicate that we can achieve 87.5% accuracy, which is comparable with Deep Learning (DL), but at a smaller training/inference data ratio of 1.5:1, and only a single training epoch is required during the learning process. To the best of our knowledge, this is the highest accuracy among non-backpropagation-based SNNs. Finally, we summarize the few-shot learning paradigm of the developed network: 1) a hierarchical structure-based network design involves human prior knowledge; 2) SNNs for content-based global dynamic feature detection.

Load-balanced Gather-scatter Patterns for Sparse Deep Neural Networks

Dec 20, 2021

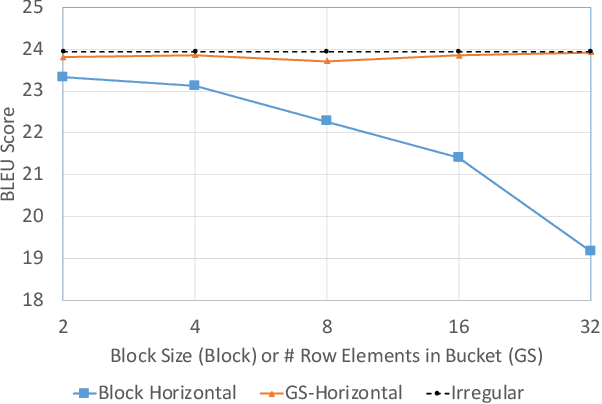

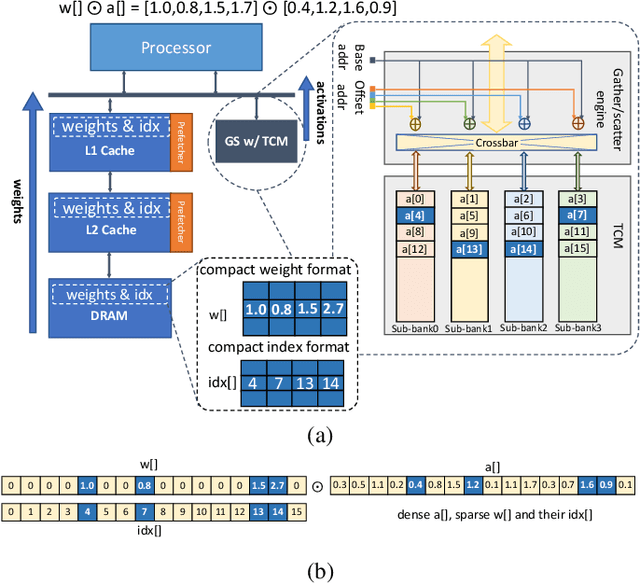

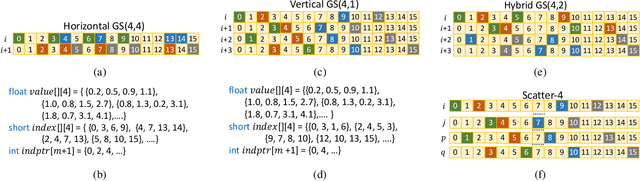

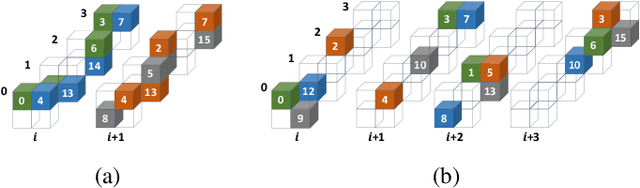

Abstract:Deep neural networks (DNNs) have been proven to be effective in solving many real-life problems, but their high computation cost prevents those models from being deployed to edge devices. Pruning, as a method to introduce zeros into model weights, has been shown to provide good trade-offs between model accuracy and computation efficiency, and is widely used to generate compressed models. However, the granularity of pruning involves important trade-offs. At the same sparsity level, a coarse-grained structured sparse pattern is more efficient on conventional hardware but results in worse accuracy, while a fine-grained unstructured sparse pattern can achieve better accuracy but is inefficient on existing hardware. On the other hand, some modern processors are equipped with fast on-chip scratchpad memories and gather/scatter engines that perform indirect load and store operations on such memories. In this work, we propose a set of novel sparse patterns, named gather-scatter (GS) patterns, to utilize the scratchpad memories and gather/scatter engines to speed up neural network inference. Correspondingly, we present a compact sparse format. The proposed set of sparse patterns, along with a novel pruning methodology, addresses the load imbalance issue and results in models with quality close to unstructured sparse models and computation efficiency close to structured sparse models. Our experiments show that GS patterns consistently make better trade-offs between accuracy and computation efficiency compared to conventional structured sparse patterns. GS patterns can reduce the runtime of the DNN components by two to three times at the same accuracy levels. This is confirmed on three different deep learning tasks and popular models, namely, GNMT for machine translation, ResNet50 for image recognition, and Jasper for acoustic speech recognition.
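The general idea of load-balanced pruning with gather-based evaluation can be illustrated with a small sketch. It is not the paper's GS pattern or its compact format, whose details the abstract does not give. It only assumes a simple scheme in which every row keeps the same number of largest-magnitude weights (so each row does equal work) and stores them as value and column-index pairs, with the matrix-vector product gathering the needed inputs through those indices. The function names `prune_balanced` and `sparse_matvec` are hypothetical.

```python
def prune_balanced(weights, keep):
    """Keep the `keep` largest-magnitude entries in every row.

    Returns (values, indices): two lists of rows, each of length `keep`,
    so every row carries the same amount of work (load balance).
    """
    values, indices = [], []
    for row in weights:
        # Column positions ordered by decreasing magnitude, then truncated.
        top = sorted(range(len(row)), key=lambda j: -abs(row[j]))[:keep]
        top.sort()  # restore ascending column order for regular access
        indices.append(top)
        values.append([row[j] for j in top])
    return values, indices


def sparse_matvec(values, indices, x):
    """Multiply the pruned matrix by x, gathering x[j] via stored indices."""
    return [
        sum(v * x[j] for v, j in zip(value_row, index_row))
        for value_row, index_row in zip(values, indices)
    ]


# Illustrative data only: a 2x4 weight matrix pruned to 2 weights per row.
weights = [[0.1, -2.0, 0.3, 4.0],
           [5.0, 0.2, -0.1, 1.0]]
values, indices = prune_balanced(weights, keep=2)
result = sparse_matvec(values, indices, [1.0, 2.0, 3.0, 4.0])
```

The indexed read `x[j]` is the software analogue of an indirect (gather) load. On hardware with gather/scatter engines, such reads can be served from scratchpad memory instead of being emulated with scalar loads.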

An Internal Clock Based Space-time Neural Network for Motion Speed Recognition

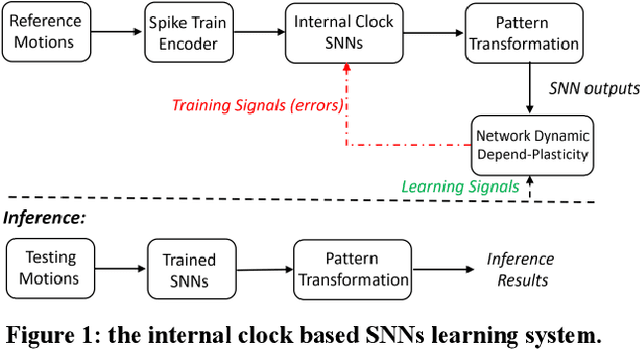

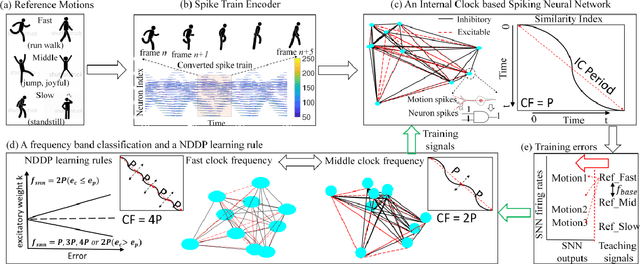

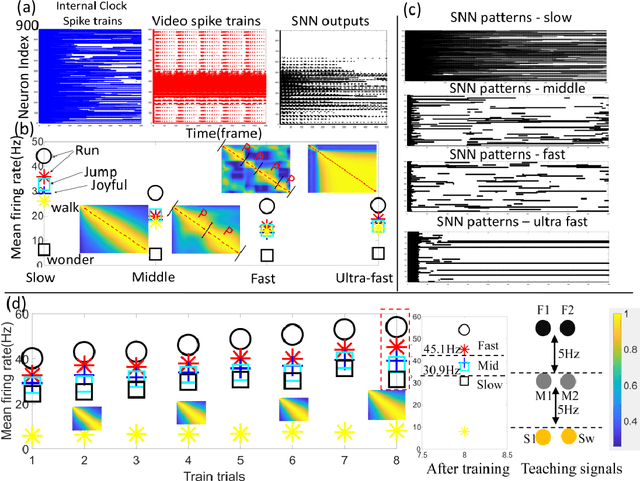

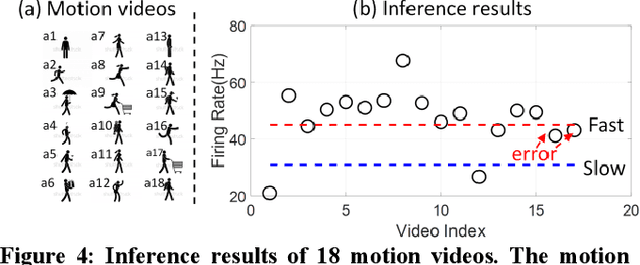

Jan 28, 2020

Abstract:In this work we present a novel internal clock based space-time neural network for motion speed recognition. The developed system has a spike train encoder, a Spiking Neural Network (SNN) with internal clocking behaviors, a pattern transformation block and a Network Dynamic Dependent Plasticity (NDDP) learning block. The core principle is that the developed SNN automatically tunes its network pattern frequency (internal clock frequency) to recognize human motions in the speed domain. We employed both cartoons and real-world videos as training benchmarks. The results demonstrate that our system can recognize not only motions with considerable speed differences (e.g. run, walk, jump, wonder (think) and standstill), but also motions with subtle speed gaps such as run and fast walk. The inference accuracy can reach 83.3% (cartoon videos) and 75% (real-world videos). Meanwhile, the system requires only six video datasets in the learning stage and up to 42 training trials. Hardware performance estimation indicates that the training time is 0.84-4.35 s and the power consumption is 33.26-201 mW (based on an ARM Cortex M4 processor). Therefore, our system offers distinctive learning advantages, namely a small dataset requirement, quick learning and low power consumption, which show great potential for edge or scalable AI-based applications.
