An Internal Clock Based Space-time Neural Network for Motion Speed Recognition


Jan 28, 2020

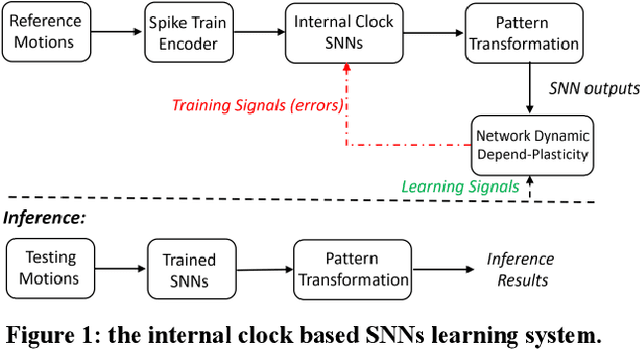

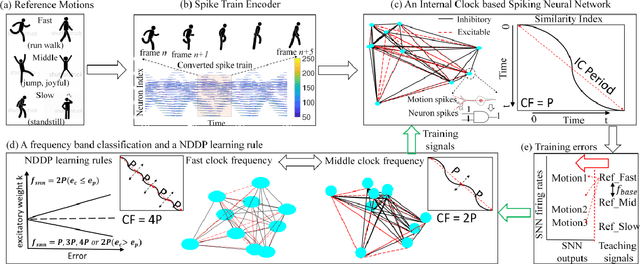

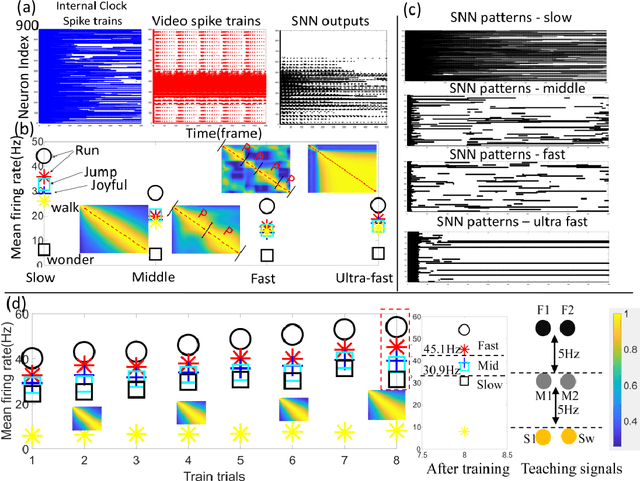

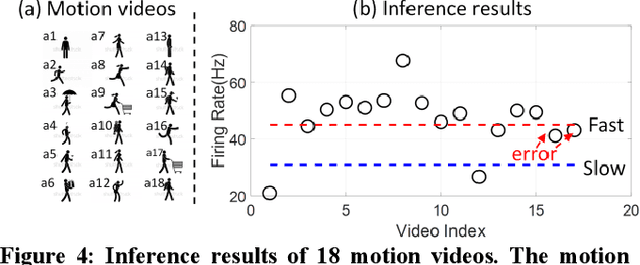

In this work, we present a novel internal-clock-based space-time neural network for motion speed recognition. The system comprises a spike train encoder, a Spiking Neural Network (SNN) with internal clocking behavior, a pattern transformation block, and a Network Dynamic Dependent Plasticity (NDDP) learning block. The core principle is that the SNN automatically tunes its network pattern frequency (its internal clock frequency) to recognize human motions in the speed domain. We used both cartoons and real-world videos as training benchmarks. The results demonstrate that the system can recognize not only motions with considerable speed differences (e.g. run, walk, jump, wonder (think) and standstill), but also motions with subtle speed gaps, such as run and fast walk. Inference accuracy reaches up to 83.3% on cartoon videos and 75% on real-world videos. Moreover, the system requires only six video datasets in the learning stage and up to 42 training trials. Hardware performance estimation indicates a training time of 0.84-4.35 s and a power consumption of 33.26-201 mW (based on an ARM Cortex M4 processor). The system therefore combines small dataset requirements, fast learning, and low power consumption, which shows great potential for edge or scalable AI-based applications.
