Xiaoan Wang

A Consumer-tier based Visual-Brain Machine Interface for Augmented Reality Glasses Interactions

Aug 29, 2023

Abstract: Objective. Visual-Brain Machine Interfaces (V-BMI) have provided a novel interaction technique for the Augmented Reality (AR) industry. Several state-of-the-art works have demonstrated their high accuracy and real-time interaction capabilities. However, most studies employ EEG devices that are rigid and difficult to apply in real-life AR glasses application scenarios. Here we develop a consumer-tier V-BMI system specialized for AR glasses interactions. Approach. The developed system consists of wearable hardware that offers fast set-up, reliable recording, and a comfortable wearing experience, designed specifically for AR glasses applications. Complementing this hardware, we have devised a software framework that facilitates real-time interactions within the system while accommodating a modular configuration to enhance scalability. Main results. The developed hardware weighs only 110 g and measures 120 x 85 x 23 mm; it is specified at 1 TΩ, with a peak-to-peak voltage below 1.5 µV. A V-BMI-based Angry Birds game and an Internet of Things (IoT) AR application were designed, through which we demonstrated the technology's merits of intuitive experience and efficient interaction. The real-time interaction accuracy is between 85% and 96% on commercial AR glasses (DTI of 2.24 s and ITR of 65 bits/min). Significance. Our study indicates that the developed system can provide an essential hardware-software framework for consumer-grade V-BMI AR glasses. We also derive two pivotal design factors for a consumer-grade V-BMI-based AR system: 1) dynamic adaptation of stimulation patterns and classification methods via computer vision algorithms is necessary for AR glasses applications; and 2) algorithmic localization is needed to foster system stability and reduce latency.
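For readers unfamiliar with how accuracy and selection time combine into an information transfer rate (ITR) in bits per minute, the sketch below uses the widely cited Wolpaw formula. The abstract does not state which ITR definition the authors used, how many selectable targets the system offers, or whether DTI is the time per selection, so `n_targets = 4` and the use of 2.24 s as `seconds_per_selection` are assumptions made purely for illustration.

```python
import math


def wolpaw_itr_bits_per_min(n_targets, accuracy, seconds_per_selection):
    """Wolpaw ITR: bits conveyed per selection, scaled to selections per minute.

    This is a common BCI metric; it is an assumption that the paper uses it.
    """
    if n_targets < 2:
        raise ValueError("n_targets must be at least 2")
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be in [0, 1]")
    if seconds_per_selection <= 0:
        raise ValueError("seconds_per_selection must be positive")

    bits = math.log2(n_targets)
    if accuracy > 0.0:
        # P * log2(P) term; it vanishes in the limit P -> 0.
        bits += accuracy * math.log2(accuracy)
    if accuracy < 1.0:
        # Error term; it vanishes in the limit P -> 1.
        error = 1.0 - accuracy
        bits += error * math.log2(error / (n_targets - 1))
    return bits * 60.0 / seconds_per_selection


# Hypothetical setup: 4 targets, one selection every 2.24 s.
for acc in (0.85, 0.96):
    itr = wolpaw_itr_bits_per_min(n_targets=4, accuracy=acc,
                                  seconds_per_selection=2.24)
    print(f"accuracy={acc:.2f} -> ITR={itr:.1f} bits/min")
```

Because the result depends strongly on the assumed target count, these printed values should not be read as a reproduction of the reported 65 bits/min.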

The Spike Gating Flow: A Hierarchical Structure Based Spiking Neural Network for Online Gesture Recognition

Jun 07, 2022

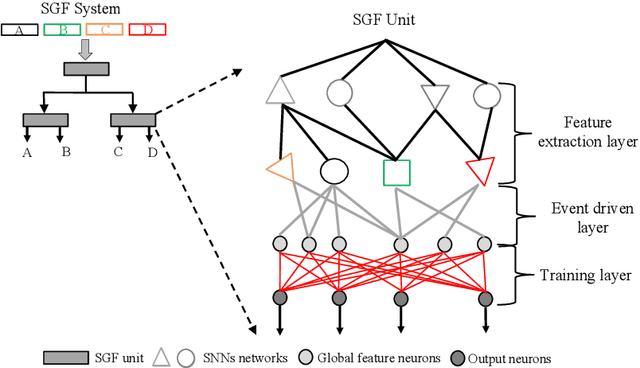

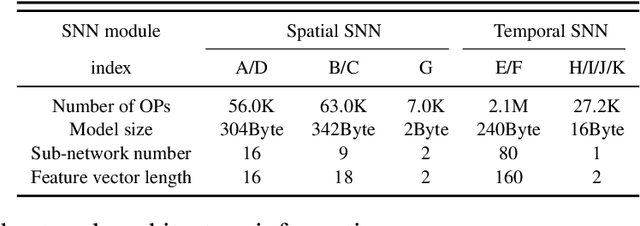

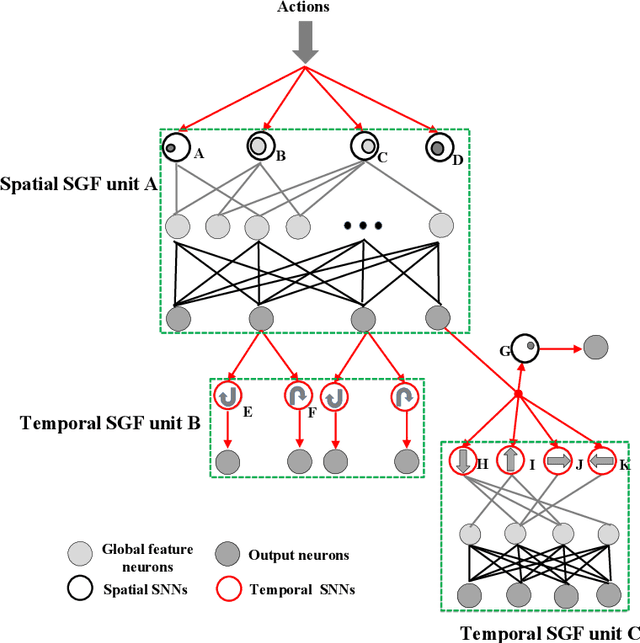

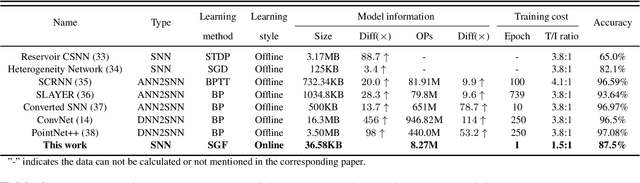

Abstract: Action recognition is an exciting research avenue for artificial intelligence, since it may be a game changer in emerging industrial fields such as robotic vision and automobiles. However, current deep learning faces major challenges for such applications because of its huge computational cost and inefficient learning. Hence, we develop a novel brain-inspired Spiking Neural Network (SNN) based system titled Spiking Gating Flow (SGF) for online action learning. The developed system consists of multiple SGF units which are assembled in a hierarchical manner. A single SGF unit involves three layers: a feature extraction layer, an event-driven layer, and a histogram-based training layer. To demonstrate the capabilities of the developed system, we employ a standard Dynamic Vision Sensor (DVS) gesture classification task as a benchmark. The results indicate that we can achieve 87.5% accuracy, which is comparable with Deep Learning (DL), but at a smaller training/inference data ratio of 1.5:1, and only a single training epoch is required during the learning process. Meanwhile, to the best of our knowledge, this is the highest accuracy among non-backpropagation-based SNNs. Finally, we summarize the few-shot learning paradigm of the developed network: 1) a hierarchical structure-based network design involves human prior knowledge; 2) SNNs are used for content-based global dynamic feature detection.
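The abstract does not describe how the histogram-based training layer works. As a rough intuition for why histogram-style learning needs only one pass over the data and no backpropagation, here is a generic nearest-histogram classifier. It is not the SGF training rule; the function names, the string feature identifiers, and the scoring scheme are all hypothetical choices made for illustration.

```python
from collections import Counter, defaultdict


def train_single_pass(samples):
    """Accumulate one feature-count histogram per label in a single epoch.

    `samples` is an iterable of (label, feature_ids) pairs, where feature_ids
    lists the (hypothetical) features that fired for that sample.
    """
    histograms = defaultdict(Counter)
    for label, feature_ids in samples:
        histograms[label].update(feature_ids)
    return histograms


def classify(histograms, feature_ids):
    """Return the label whose normalized histogram best matches the input."""
    observed = Counter(feature_ids)

    def score(label):
        hist = histograms[label]
        total = sum(hist.values())
        if total == 0:
            return 0.0
        # Sum of observed counts weighted by the label's feature frequency.
        return sum(count * hist[f] / total for f, count in observed.items())

    return max(histograms, key=score)


# Toy data with made-up feature names standing in for detected motion events.
model = train_single_pass([
    ("wave", ["left", "right", "left"]),
    ("clap", ["inward", "outward", "inward"]),
])
print(classify(model, ["left", "right"]))
```

Training here is just counting, which is why a single epoch suffices in this toy setting; the actual SGF layers operate on spike events and are not modeled by this sketch.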
