Hong Zhu

RelayGR: Scaling Long-Sequence Generative Recommendation via Cross-Stage Relay-Race Inference

Jan 05, 2026

Abstract:Real-time recommender systems execute multi-stage cascades (retrieval, pre-processing, fine-grained ranking) under strict tail-latency SLOs, leaving only tens of milliseconds for ranking. Generative recommendation (GR) models can improve quality by consuming long user-behavior sequences, but in production their online sequence length is tightly capped by the ranking-stage P99 budget. We observe that the majority of GR tokens encode user behaviors that are independent of the item candidates, suggesting an opportunity to pre-infer a user-behavior prefix once and reuse it during ranking rather than recomputing it on the critical path. Realizing this idea at industrial scale is non-trivial: the prefix cache must survive across multiple pipeline stages before the final ranking instance is determined, the user population implies cache footprints far beyond a single device, and indiscriminate pre-inference would overload shared resources under high QPS. We present RelayGR, a production system that enables in-HBM relay-race inference for GR. RelayGR selectively pre-infers long-term user prefixes, keeps their KV caches resident in HBM over the request lifecycle, and ensures the subsequent ranking can consume them without remote fetches. RelayGR combines three techniques: 1) a sequence-aware trigger that admits only at-risk requests under a bounded cache footprint and pre-inference load, 2) an affinity-aware router that co-locates cache production and consumption by routing both the auxiliary pre-infer signal and the ranking request to the same instance, and 3) a memory-aware expander that uses server-local DRAM to capture short-term cross-request reuse while avoiding redundant reloads. We implement RelayGR on Huawei Ascend NPUs and evaluate it with real queries. Under a fixed P99 SLO, RelayGR supports up to 1.5$\times$ longer sequences and improves SLO-compliant throughput by up to 3.6$\times$.
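The affinity-aware routing idea in the abstract above can be illustrated with a minimal sketch. The abstract does not say how RelayGR picks an instance, so the hash-based mapping, the `pick_instance` helper, and the instance names below are all assumptions made for illustration. The only property shown is that the pre-infer signal and the later ranking request for the same user resolve to the same instance.

```python
import hashlib


def pick_instance(user_id: str, instances: list[str]) -> str:
    """Hypothetical helper: map a user to one instance deterministically.

    This is an assumed hash-based scheme, not RelayGR's actual router.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    # Use the first 8 bytes of the digest as a stable integer key.
    return instances[int.from_bytes(digest[:8], "big") % len(instances)]


# Assumed instance names, for illustration only.
instances = ["npu-0", "npu-1", "npu-2", "npu-3"]

# The pre-infer signal and the ranking request are routed independently,
# yet both resolve to the same instance because the mapping depends only
# on the user id and the instance list.
pre_infer_target = pick_instance("user-42", instances)
ranking_target = pick_instance("user-42", instances)
assert pre_infer_target == ranking_target
```

A plain modulo mapping like this reshuffles most users whenever the instance list changes; a production router would likely need something more stable, which the abstract does not describe.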

Multi-Sensor Fusion for Extended Object Tracking Exploiting Active and Passive Radio Signals

Sep 03, 2025

Abstract:Reliable and robust positioning of radio devices remains a challenging task due to multipath propagation, hardware impairments, and interference from other radio transmitters. A frequently overlooked but critical factor is the agent itself, e.g., the user carrying the device, which potentially obstructs line-of-sight (LOS) links to the base stations (anchors). This paper addresses the problem of accurate positioning in scenarios where LOS links are partially blocked by the agent. The agent is modeled as an extended object (EO) that scatters, attenuates, and blocks radio signals. We propose a Bayesian method that fuses "active" measurements (between device and anchors) with "passive" multistatic radar-type measurements (between anchors, reflected by the EO). To handle measurement origin uncertainty, we introduce a multi-sensor and multiple-measurement probabilistic data association (PDA) algorithm that jointly fuses all EO-related measurements. Furthermore, we develop an EO model tailored to agents such as human users, accounting for multiple reflections scattered off the body surface, and propose a simplified variant for low-complexity implementation. Evaluation on both synthetic and real radio measurements demonstrates that the proposed algorithm outperforms conventional PDA methods based on point target assumptions, particularly during and after obstructed line-of-sight (OLOS) conditions.

Multi-Sensor Fusion of Active and Passive Measurements for Extended Object Tracking

Apr 25, 2025

Abstract:This paper addresses the challenge of achieving robust and reliable positioning of a radio device carried by an agent, in scenarios where direct line-of-sight (LOS) radio links are obstructed by the agent. We propose a Bayesian estimation algorithm that integrates active measurements between the radio device and anchors with passive measurements in-between anchors reflecting off the agent. A geometry-based scattering measurement model is introduced for multi-sensor structures, and multiple object-related measurements are incorporated to formulate an extended object probabilistic data association (PDA) algorithm, where the agent that blocks, scatters and attenuates radio signals is modeled as an extended object (EO). The proposed approach significantly improves the accuracy during and after obstructed LOS conditions, outperforming the conventional PDA (which is based on the point-target-assumption) and methods relying solely on active measurements.

Separated Contrastive Learning for Matching in Cross-domain Recommendation with Curriculum Scheduling

Feb 22, 2025

Abstract:Cross-domain recommendation (CDR) is a task that aims to improve the recommendation performance in a target domain by leveraging the information from source domains. Contrastive learning methods have been widely adopted among intra-domain (intra-CL) and inter-domain (inter-CL) users/items for their representation learning and knowledge transfer during the matching stage of CDR. However, we observe that directly employing contrastive learning on mixed-up intra-CL and inter-CL tasks ignores the difficulty of learning from inter-domain over learning from intra-domain, and thus could cause severe training instability. This instability in turn deteriorates the representation learning process and hurts the quality of generated embeddings. To this end, we propose a novel framework named SCCDR built upon a separated intra-CL and inter-CL paradigm and a stop-gradient operation to handle the drawback. Specifically, SCCDR comprises two specialized curriculum stages: intra-inter separation and inter-domain curriculum scheduling. The former stage explicitly uses two distinct contrastive views for the intra-CL task in the source and target domains, respectively. Meanwhile, the latter stage deliberately tackles the inter-CL tasks with a curriculum scheduling strategy that derives effective curricula by accounting for the difficulty of negative samples anchored by overlapping users. Empirical experiments on various open-source datasets and an offline proprietary industrial dataset extracted from a real-world recommender system, together with an online A/B test, verify that SCCDR achieves state-of-the-art performance over multiple baselines.

FuXi-$α$: Scaling Recommendation Model with Feature Interaction Enhanced Transformer

Feb 05, 2025

Abstract:Inspired by scaling laws and large language models, research on large-scale recommendation models has gained significant attention. Recent advancements have shown that expanding sequential recommendation models to large-scale recommendation models can be an effective strategy. Current state-of-the-art sequential recommendation models primarily use self-attention mechanisms for explicit feature interactions among items, while implicit interactions are managed through Feed-Forward Networks (FFNs). However, these models often inadequately integrate temporal and positional information, either by adding them to attention weights or by blending them with latent representations, which limits their expressive power. A recent model, HSTU, further reduces the focus on implicit feature interactions, constraining its performance. We propose a new model called FuXi-$\alpha$ to address these issues. This model introduces an Adaptive Multi-channel Self-attention mechanism that distinctly models temporal, positional, and semantic features, along with a Multi-stage FFN to enhance implicit feature interactions. Our offline experiments demonstrate that our model outperforms existing models, with its performance continuously improving as the model size increases. Additionally, we conducted an online A/B test within the Huawei Music app, which showed a $4.76\%$ increase in the average number of songs played per user and a $5.10\%$ increase in the average listening duration per user. Our code has been released at https://github.com/USTC-StarTeam/FuXi-alpha.

LIBER: Lifelong User Behavior Modeling Based on Large Language Models

Nov 22, 2024

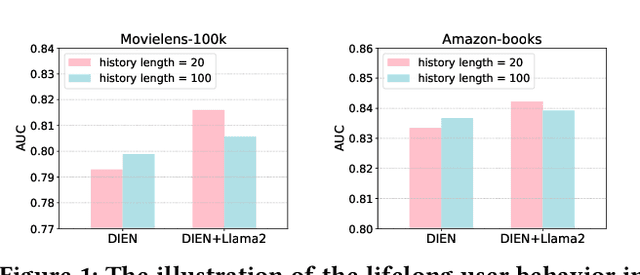

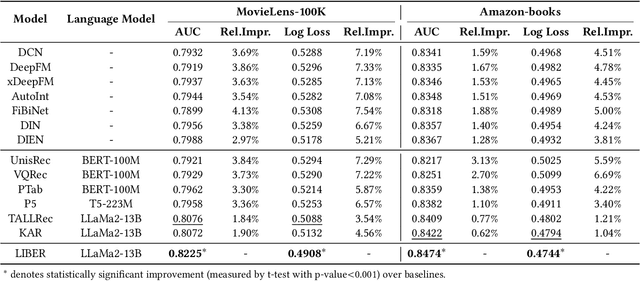

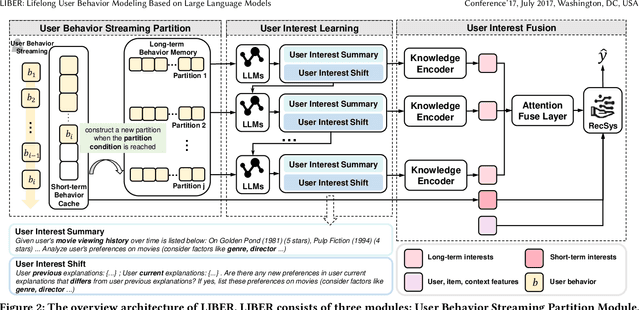

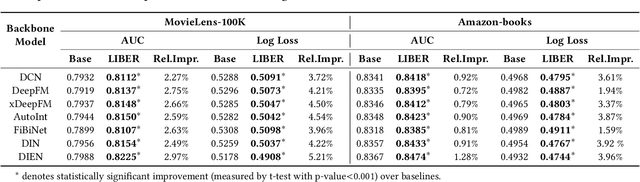

Abstract:CTR prediction plays a vital role in recommender systems. Recently, large language models (LLMs) have been applied in recommender systems due to their emergent abilities. While leveraging semantic information from LLMs has shown some improvements in the performance of recommender systems, two notable limitations persist in these studies. First, LLM-enhanced recommender systems encounter challenges in extracting valuable information from lifelong user behavior sequences within textual contexts for recommendation tasks. Second, the inherent variability in human behaviors leads to a constant stream of new behaviors and irregularly fluctuating user interests. This characteristic imposes two significant challenges on existing models. On the one hand, it presents difficulties for LLMs in effectively capturing the dynamic shifts in user interests within these sequences, and on the other hand, there exists the issue of substantial computational overhead if the LLMs necessitate recurrent calls upon each update to the user sequences. In this work, we propose Lifelong User Behavior Modeling (LIBER) based on large language models, which includes three modules: (1) User Behavior Streaming Partition (UBSP), (2) User Interest Learning (UIL), and (3) User Interest Fusion (UIF). Initially, UBSP is employed to condense lengthy user behavior sequences into shorter partitions in an incremental paradigm, facilitating more efficient processing. Subsequently, UIL leverages LLMs in a cascading way to infer insights from these partitions. Finally, UIF integrates the textual outputs generated by the aforementioned processes to construct a comprehensive representation, which can be incorporated by any recommendation model to enhance performance. LIBER has been deployed on Huawei's music recommendation service and achieved substantial improvements in users' play count and play time of 3.01% and 7.69%, respectively.

SimpleBEV: Improved LiDAR-Camera Fusion Architecture for 3D Object Detection

Nov 08, 2024

Abstract:More and more research works fuse the LiDAR and camera information to improve the 3D object detection of the autonomous driving system. Recently, a simple yet effective fusion framework has achieved an excellent detection performance, fusing the LiDAR and camera features in a unified bird's-eye-view (BEV) space. In this paper, we propose a LiDAR-camera fusion framework, named SimpleBEV, for accurate 3D object detection, which follows the BEV-based fusion framework and improves the camera and LiDAR encoders, respectively. Specifically, we perform the camera-based depth estimation using a cascade network and rectify the depth results with the depth information derived from the LiDAR points. Meanwhile, an auxiliary branch that implements the 3D object detection using only the camera-BEV features is introduced to exploit the camera information during the training phase. Besides, we improve the LiDAR feature extractor by fusing the multi-scaled sparse convolutional features. Experimental results demonstrate the effectiveness of our proposed method. Our method achieves 77.6% NDS accuracy on the nuScenes dataset, showcasing superior performance in the 3D object detection track.

Efficient and Deployable Knowledge Infusion for Open-World Recommendations via Large Language Models

Aug 20, 2024

Abstract:Recommender systems (RSs) play a pervasive role in today's online services, yet their closed-loop nature constrains their access to open-world knowledge. Recently, large language models (LLMs) have shown promise in bridging this gap. However, previous attempts to directly implement LLMs as recommenders fall short in meeting the requirements of industrial RSs, particularly in terms of online inference latency and offline resource efficiency. Thus, we propose REKI to acquire two types of external knowledge about users and items from LLMs. Specifically, we introduce factorization prompting to elicit accurate knowledge reasoning on user preferences and items. We develop individual knowledge extraction and collective knowledge extraction tailored for different scales of scenarios, effectively reducing offline resource consumption. Subsequently, generated knowledge undergoes efficient transformation and condensation into augmented vectors through a hybridized expert-integrated network, ensuring compatibility. The obtained vectors can then be used to enhance any conventional recommendation model. We also ensure efficient inference by preprocessing and prestoring the knowledge from LLMs. Experiments demonstrate that REKI outperforms state-of-the-art baselines and is compatible with many recommendation algorithms and tasks. REKI has been deployed to Huawei's news and music recommendation platforms and gained 7% and 1.99% improvements, respectively, during the online A/B test.

SimpleLLM4AD: An End-to-End Vision-Language Model with Graph Visual Question Answering for Autonomous Driving

Jul 31, 2024

Abstract:Many fields could benefit from the rapid development of large language models (LLMs). End-to-end autonomous driving (e2eAD) is one of the typical fields facing new opportunities as LLMs have come to support more and more modalities. Here, by utilizing a vision-language model (VLM), we propose an e2eAD method called SimpleLLM4AD. In our method, the e2eAD task is divided into four stages: perception, prediction, planning, and behavior. Each stage consists of several visual question answering (VQA) pairs, and the VQA pairs interconnect with each other, constructing a graph called Graph VQA (GVQA). By reasoning over each VQA pair in the GVQA through the VLM stage by stage, our method can achieve e2e driving with language. In our method, vision transformer (ViT) models are employed to process nuScenes visual data, while the VLM is utilized to interpret and reason about the information extracted from the visual inputs. In the perception stage, the system identifies and classifies objects from the driving environment. The prediction stage involves forecasting the potential movements of these objects. The planning stage utilizes the gathered information to develop a driving strategy, ensuring the safety and efficiency of the autonomous vehicle. Finally, the behavior stage translates the planned actions into executable commands for the vehicle. Our experiments demonstrate that SimpleLLM4AD achieves competitive performance in complex driving scenarios.

ScenEval: A Benchmark for Scenario-Based Evaluation of Code Generation

Jun 18, 2024

Abstract:In the scenario-based evaluation of machine learning models, a key problem is how to construct test datasets that represent various scenarios. The methodology proposed in this paper is to construct a benchmark and attach metadata to each test case. Then a test system can be constructed with test morphisms that filter the test cases based on metadata to form a dataset. The paper demonstrates this methodology with large language models for code generation. A benchmark called ScenEval is constructed from problems in textbooks, an online tutorial website and Stack Overflow. Filtering by scenario is demonstrated and the test sets are used to evaluate ChatGPT for Java code generation. Our experiments found that the performance of ChatGPT decreases with the complexity of the coding task. It is weakest for advanced topics like multi-threading, data structure algorithms and recursive methods. The Java code generated by ChatGPT tends to be much shorter than the reference solution in terms of number of lines, while it is more likely to be more complex in both cyclomatic and cognitive complexity metrics, if the generated code is correct. However, the generated code is more likely to be less complex than the reference solution if the code is incorrect.
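The metadata-based filtering described in the abstract above might look roughly like the following sketch. The `BenchmarkCase` fields, the `filter_cases` helper, and the sample entries are hypothetical; the abstract does not give ScenEval's actual metadata schema or the interface of its test morphisms.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BenchmarkCase:
    """Hypothetical test case carrying scenario metadata."""
    task_id: str
    source: str        # e.g. textbook, tutorial site, Stack Overflow
    topics: frozenset  # scenario tags attached to the task


def filter_cases(cases, topic):
    """Select the cases whose metadata mentions the given topic."""
    return [case for case in cases if topic in case.topics]


# Made-up sample entries, for illustration only.
cases = [
    BenchmarkCase("t1", "textbook", frozenset({"recursion"})),
    BenchmarkCase("t2", "stack-overflow", frozenset({"multi-threading"})),
    BenchmarkCase("t3", "tutorial", frozenset({"recursion", "strings"})),
]

recursion_set = filter_cases(cases, "recursion")
print([case.task_id for case in recursion_set])  # prints ['t1', 't3']
```

Keeping the metadata on each case means a scenario-specific test set is just a filter over the one benchmark, so new scenarios need no new data collection.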
