Ziqing Wang

RAPTOR: Ridge-Adaptive Logistic Probes

Jan 29, 2026

Abstract: Probing studies what information is encoded in a frozen LLM's layer representations by training a lightweight predictor on top of them. Beyond analysis, probes are often used operationally in probe-then-steer pipelines: a learned concept vector is extracted from a probe and injected via additive activation steering by adding it to a layer representation during the forward pass. The effectiveness of this pipeline hinges on estimating concept vectors that are accurate, directionally stable under ablation, and inexpensive to obtain. Motivated by these desiderata, we propose RAPTOR (Ridge-Adaptive Logistic Probe), a simple L2-regularized logistic probe whose validation-tuned ridge strength yields concept vectors from normalized weights. Across extensive experiments on instruction-tuned LLMs and human-written concept datasets, RAPTOR matches or exceeds strong baselines in accuracy while achieving competitive directional stability and substantially lower training cost; these quantitative results are supported by qualitative downstream steering demonstrations. Finally, using the Convex Gaussian Min-max Theorem (CGMT), we provide a mechanistic characterization of ridge logistic regression in an idealized Gaussian teacher-student model in the high-dimensional few-shot regime, explaining how penalty strength mediates probe accuracy and concept-vector stability and yielding structural predictions that qualitatively align with trends observed on real LLM embeddings.
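The probe-then-steer idea described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the function names (`fit_ridge_logistic`, `ridge_adaptive_probe`, `steer`), the plain gradient-descent solver, the candidate ridge grid, and the synthetic Gaussian data standing in for LLM activations are all assumptions made for illustration. It fits an L2-regularized logistic probe for several ridge strengths, keeps the one with the best validation accuracy, normalizes the weights into a concept vector, and adds a scaled copy of that vector to a representation.

```python
import numpy as np

def fit_ridge_logistic(X, y, lam, steps=500, lr=0.5):
    """Gradient descent on mean logistic loss + (lam / 2) * ||w||^2, labels in {0, 1}."""
    n, d = X.shape
    w, b = np.zeros(d), 0.0
    for _ in range(steps):
        z = np.clip(X @ w + b, -30.0, 30.0)   # clip logits for numerical safety
        p = 1.0 / (1.0 + np.exp(-z))          # predicted probabilities
        w -= lr * (X.T @ (p - y) / n + lam * w)
        b -= lr * np.mean(p - y)
    return w, b

def accuracy(X, y, w, b):
    return float(np.mean(((X @ w + b) > 0) == (y == 1)))

def ridge_adaptive_probe(X_tr, y_tr, X_val, y_val, lams=(1e-3, 1e-2, 1e-1, 1.0)):
    """Pick the ridge strength by validation accuracy; return the unit-norm weights."""
    best = None
    for lam in lams:                           # hypothetical candidate grid
        w, b = fit_ridge_logistic(X_tr, y_tr, lam)
        acc = accuracy(X_val, y_val, w, b)
        if best is None or acc > best[0]:
            best = (acc, lam, w)
    acc, lam, w = best
    return w / np.linalg.norm(w), lam, acc

def steer(h, concept, alpha):
    """Additive activation steering: shift a representation along the concept vector."""
    return h + alpha * concept

# Synthetic stand-in for layer activations (assumption: Gaussian features, one true direction).
rng = np.random.default_rng(0)
d = 32
true_dir = rng.normal(size=d)
true_dir /= np.linalg.norm(true_dir)

def make_split(n):
    X = rng.normal(size=(n, d))
    y = (X @ true_dir + 0.1 * rng.normal(size=n) > 0).astype(float)
    return X, y

X_tr, y_tr = make_split(200)
X_val, y_val = make_split(200)
concept, lam, val_acc = ridge_adaptive_probe(X_tr, y_tr, X_val, y_val)
steered = steer(X_val[0], concept, alpha=4.0)
print(f"lambda={lam}, val_acc={val_acc:.3f}, cosine={concept @ true_dir:.3f}")
```

Because the concept vector has unit norm, `alpha` directly sets how far the representation moves along the probed direction. In a real pipeline the features would be hidden states from a chosen layer, and the steered vector would be written back during the forward pass.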

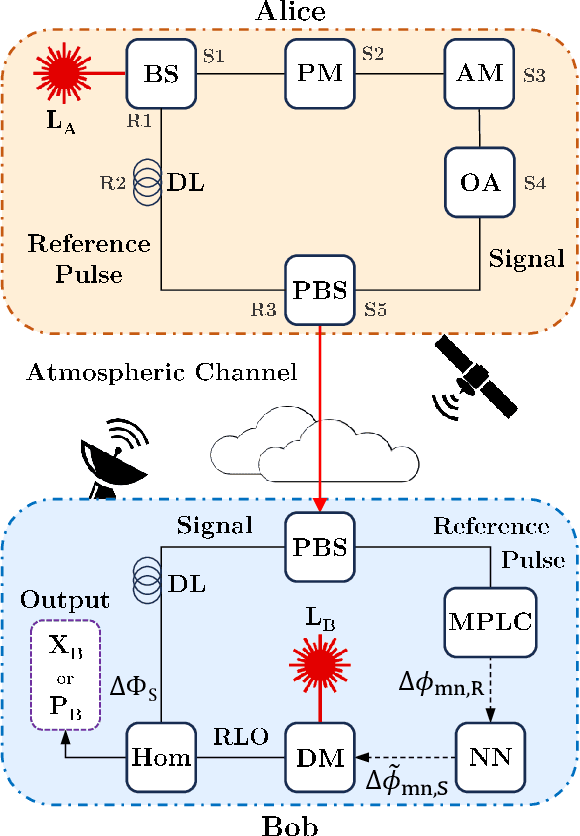

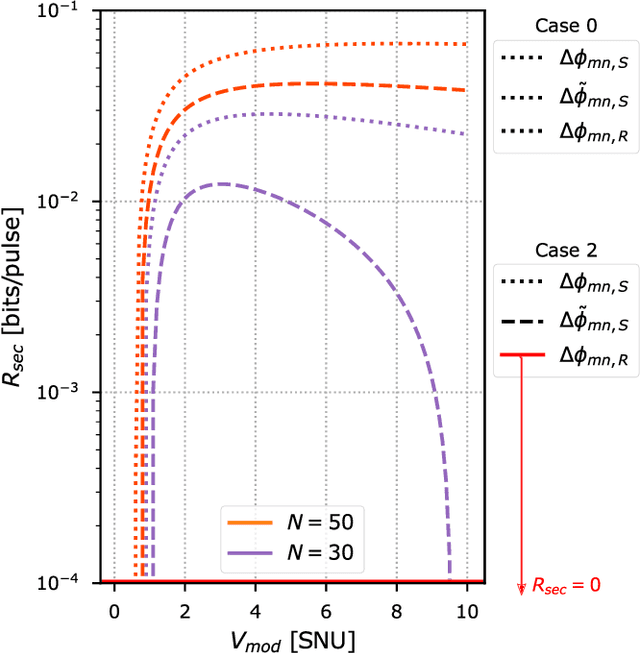

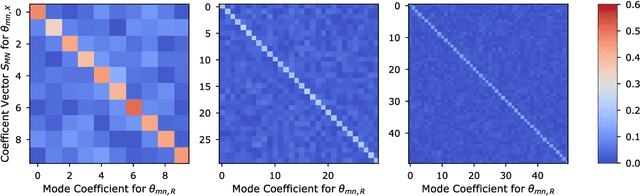

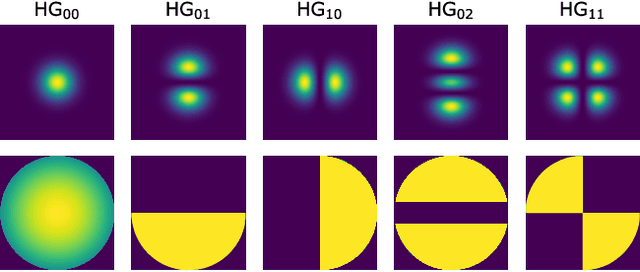

Quantum Wavefront Correction via Machine Learning for Satellite-to-Earth CV-QKD

Aug 14, 2025

Abstract: State-of-the-art free-space continuous-variable quantum key distribution (CV-QKD) protocols use phase reference pulses to modulate the wavefront of a real local oscillator at the receiver, thereby compensating for wavefront distortions caused by atmospheric turbulence. It is normally assumed that the wavefront distortion in these phase reference pulses is identical to the wavefront distortion in the quantum signals, which are multiplexed during transmission. However, in many real-world deployments, there can exist a relative wavefront error (WFE) between the reference pulses and quantum signals, which, among other deleterious effects, can severely limit secure key transfer in satellite-to-Earth CV-QKD. In this work, we introduce novel machine learning-based wavefront correction algorithms, which utilize multi-plane light conversion for decomposition of the reference pulses and quantum signals into the Hermite-Gaussian (HG) basis, then estimate the difference in HG mode phase measurements, effectively eliminating this problem. Through detailed simulations of the Earth-satellite channel, we demonstrate that our new algorithm can rapidly identify and compensate for any relative WFEs that may exist, whilst causing no harm when WFEs are similar across both the reference pulses and quantum signals. We quantify the gains available in our algorithm in terms of the CV-QKD secure key rate. We show channels where positive secure key rates are obtained using our algorithms, while information loss without wavefront correction would result in null key rates.

Spiking Transformers Need High Frequency Information

May 24, 2025

Abstract: Spiking Transformers offer an energy-efficient alternative to conventional deep learning by transmitting information solely through binary (0/1) spikes. However, there remains a substantial performance gap compared to artificial neural networks. A common belief is that their binary and sparse activation transmission leads to information loss, thus degrading feature representation and accuracy. In this work, however, we reveal for the first time that spiking neurons preferentially propagate low-frequency information. We hypothesize that the rapid dissipation of high-frequency components is the primary cause of performance degradation. For example, on CIFAR-100, adopting Avg-Pooling (low-pass) for token mixing lowers performance to 76.73%; interestingly, replacing it with Max-Pooling (high-pass) pushes the top-1 accuracy to 79.12%, surpassing the well-tuned Spikformer baseline by 0.97%. Accordingly, we introduce Max-Former that restores high-frequency signals through two frequency-enhancing operators: extra Max-Pooling in patch embedding and Depth-Wise Convolution in place of self-attention. Notably, our Max-Former (63.99 M) hits the top-1 accuracy of 82.39% on ImageNet, showing a +7.58% improvement over Spikformer with comparable model size (74.81%, 66.34 M). We hope this simple yet effective solution inspires future research to explore the distinctive nature of spiking neural networks, beyond the established practice in standard deep learning.

A Survey of Large Language Models for Text-Guided Molecular Discovery: from Molecule Generation to Optimization

May 22, 2025

Abstract: Large language models (LLMs) are introducing a paradigm shift in molecular discovery by enabling text-guided interaction with chemical spaces through natural language and symbolic notations, with emerging extensions to incorporate multi-modal inputs. To advance the new field of LLM for molecular discovery, this survey provides an up-to-date and forward-looking review of the emerging use of LLMs for two central tasks: molecule generation and molecule optimization. Based on our proposed taxonomy for both problems, we analyze representative techniques in each category, highlighting how LLM capabilities are leveraged across different learning settings. In addition, we include the commonly used datasets and evaluation protocols. We conclude by discussing key challenges and future directions, positioning this survey as a resource for researchers working at the intersection of LLMs and molecular science. A continuously updated reading list is available at https://github.com/REAL-Lab-NU/Awesome-LLM-Centric-Molecular-Discovery.

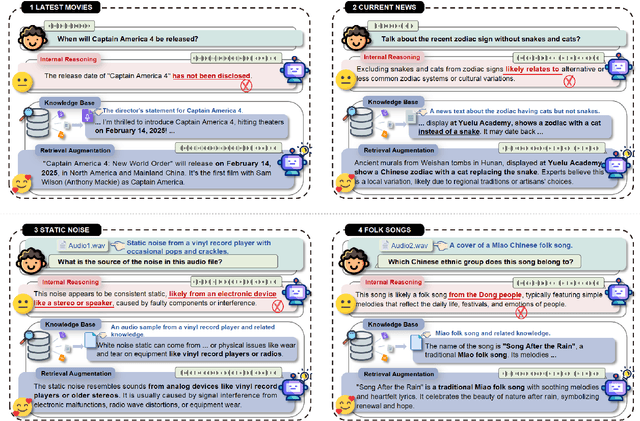

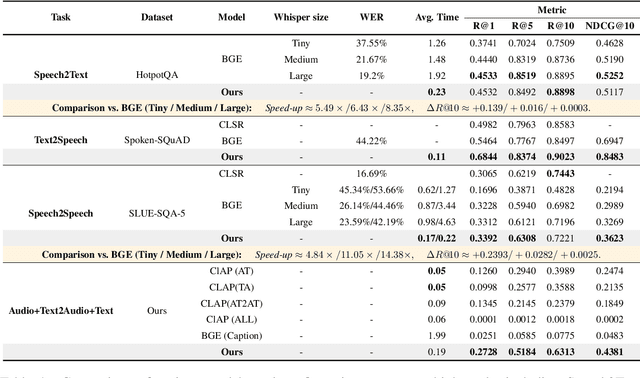

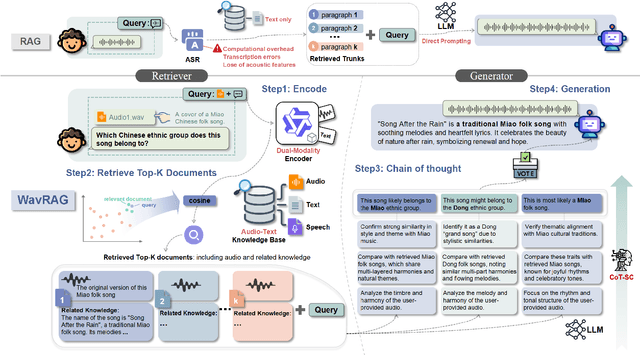

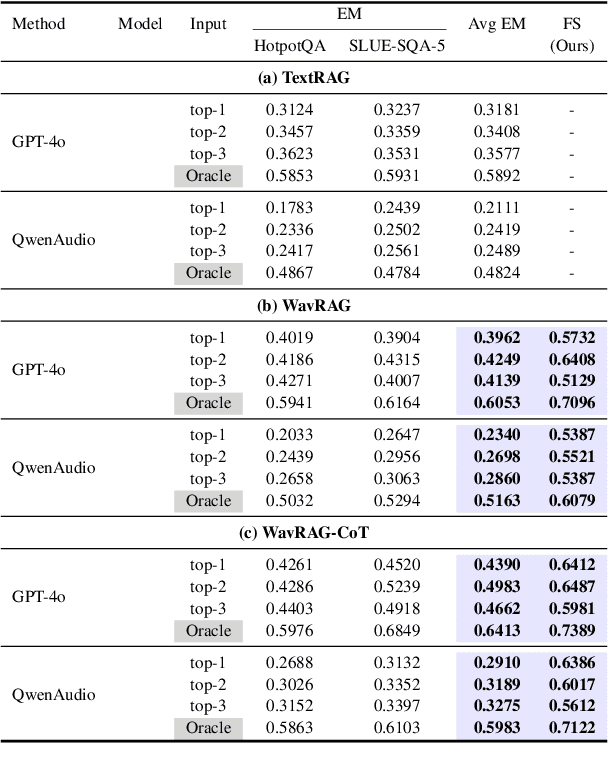

WavRAG: Audio-Integrated Retrieval Augmented Generation for Spoken Dialogue Models

Feb 20, 2025

Abstract: Retrieval Augmented Generation (RAG) has gained widespread adoption owing to its capacity to empower large language models (LLMs) to integrate external knowledge. However, existing RAG frameworks are primarily designed for text-based LLMs and rely on Automatic Speech Recognition to process speech input, which discards crucial audio information, risks transcription errors, and increases computational overhead. Therefore, we introduce WavRAG, the first retrieval augmented generation framework with native, end-to-end audio support. WavRAG offers two key features: 1) Bypassing ASR, WavRAG directly processes raw audio for both embedding and retrieval. 2) WavRAG integrates audio and text into a unified knowledge representation. Specifically, we propose the WavRetriever to facilitate the retrieval from a text-audio hybrid knowledge base, and further enhance the in-context capabilities of spoken dialogue models through the integration of chain-of-thought reasoning. In comparison to state-of-the-art ASR-Text RAG pipelines, WavRAG achieves comparable retrieval performance while delivering a 10x acceleration. Furthermore, WavRAG's unique text-audio hybrid retrieval capability extends the boundaries of RAG to the audio modality.

Adaptive Calibration: A Unified Conversion Framework of Spiking Neural Network

Dec 18, 2024

Abstract: Spiking Neural Networks (SNNs) are seen as an energy-efficient alternative to traditional Artificial Neural Networks (ANNs), but the performance gap remains a challenge. While this gap is narrowing through ANN-to-SNN conversion, substantial computational resources are still needed, and the energy efficiency of converted SNNs cannot be ensured. To address this, we present a unified training-free conversion framework that significantly enhances both the performance and efficiency of converted SNNs. Inspired by the biological nervous system, we propose a novel Adaptive-Firing Neuron Model (AdaFire), which dynamically adjusts firing patterns across different layers to substantially reduce the Unevenness Error, the primary source of error in converted SNNs within limited inference timesteps. We further introduce two efficiency-enhancing techniques: the Sensitivity Spike Compression (SSC) technique for reducing spike operations, and the Input-aware Adaptive Timesteps (IAT) technique for decreasing latency. These methods collectively enable our approach to achieve state-of-the-art performance while delivering significant energy savings of up to 70.1%, 60.3%, and 43.1% on CIFAR-10, CIFAR-100, and ImageNet datasets, respectively. Extensive experiments across 2D, 3D, and event-driven classification tasks, as well as object detection and segmentation tasks, demonstrate the effectiveness of our method in various domains. The code is available at: https://github.com/bic-L/burst-ann2snn.

Spiking Neural Network as Adaptive Event Stream Slicer

Oct 03, 2024

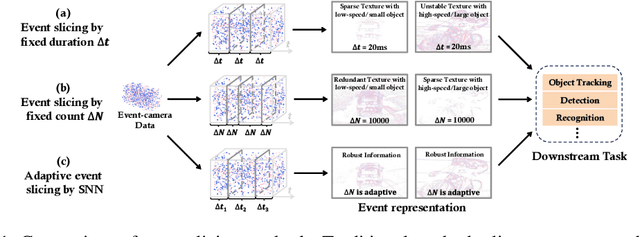

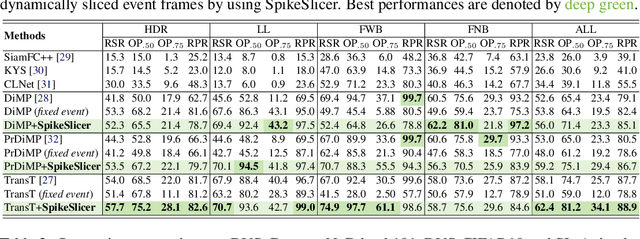

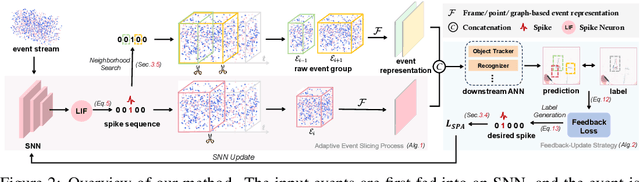

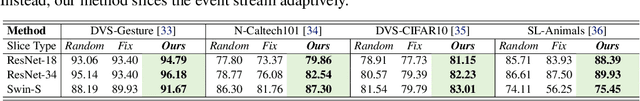

Abstract: Event-based cameras are attracting significant interest as they provide rich edge information, high dynamic range, and high temporal resolution. Many state-of-the-art event-based algorithms rely on splitting the events into fixed groups, resulting in the omission of crucial temporal information, particularly when dealing with diverse motion scenarios (e.g., high/low speed). In this work, we propose SpikeSlicer, a newly designed plug-and-play event processing method capable of splitting event streams adaptively. SpikeSlicer utilizes a lightweight (0.41M) and low-energy spiking neural network (SNN) to trigger event slicing. To guide the SNN to fire spikes at optimal time steps, we propose the Spiking Position-aware Loss (SPA-Loss) to modulate the neuron's state. Additionally, we develop a Feedback-Update training strategy that refines the slicing decisions using feedback from the downstream artificial neural network (ANN). Extensive experiments demonstrate that our method yields significant performance improvements in event-based object tracking and recognition. Notably, SpikeSlicer provides a brand-new SNN-ANN cooperation paradigm, where the SNN acts as an efficient, low-energy data processor to assist the ANN in improving downstream performance, injecting new perspectives and potential avenues of exploration.

LayerKV: Optimizing Large Language Model Serving with Layer-wise KV Cache Management

Oct 01, 2024

Abstract: The expanding context windows in large language models (LLMs) have greatly enhanced their capabilities in various applications, but they also introduce significant challenges in maintaining low latency, particularly in Time to First Token (TTFT). This paper identifies that the sharp rise in TTFT as context length increases is predominantly driven by queuing delays, which are caused by the growing demands for GPU Key-Value (KV) cache allocation clashing with the limited availability of KV cache blocks. To address this issue, we propose LayerKV, a simple yet effective plug-in method that reduces TTFT without requiring additional hardware or compromising output performance, while seamlessly integrating with existing parallelism strategies and scheduling techniques. Specifically, LayerKV introduces layer-wise KV block allocation, management, and offloading for fine-grained control over system memory, coupled with an SLO-aware scheduler to optimize overall Service Level Objectives (SLOs). Comprehensive evaluations on representative models, ranging from 7B to 70B parameters, across various GPU configurations, demonstrate that LayerKV improves TTFT latency by up to 11x and reduces SLO violation rates by 28.7%, significantly enhancing the user experience.

Spiking Diffusion Models

Aug 29, 2024

Abstract: Recent years have witnessed Spiking Neural Networks (SNNs) gaining attention for their ultra-low energy consumption and high biological plausibility compared with traditional Artificial Neural Networks (ANNs). Despite their distinguished properties, the application of SNNs in the computationally intensive field of image generation is still under exploration. In this paper, we propose the Spiking Diffusion Models (SDMs), an innovative family of SNN-based generative models that excel in producing high-quality samples with significantly reduced energy consumption. In particular, we propose a Temporal-wise Spiking Mechanism (TSM) that allows SNNs to capture more temporal features from a bio-plasticity perspective. In addition, we propose a threshold-guided strategy that can further improve performance by up to 16.7% without any additional training. We also make the first attempt to use the ANN-SNN approach for SNN-based generation tasks. Extensive experimental results reveal that our approach not only exhibits comparable performance to its ANN counterpart with few spiking time steps, but also outperforms previous SNN-based generative models by a large margin. Moreover, we also demonstrate the high-quality generation ability of SDM on large-scale datasets, e.g., LSUN bedroom. This development marks a pivotal advancement in the capabilities of SNN-based generation, paving the way for future research avenues to realize low-energy and low-latency generative applications. Our code is available at https://github.com/AndyCao1125/SDM.

Exploiting Spatial Diversity in Earth-to-Satellite Quantum-Classical Communications

Jul 02, 2024

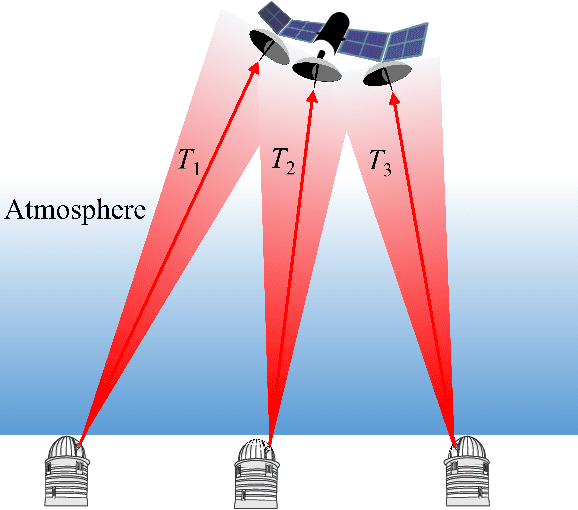

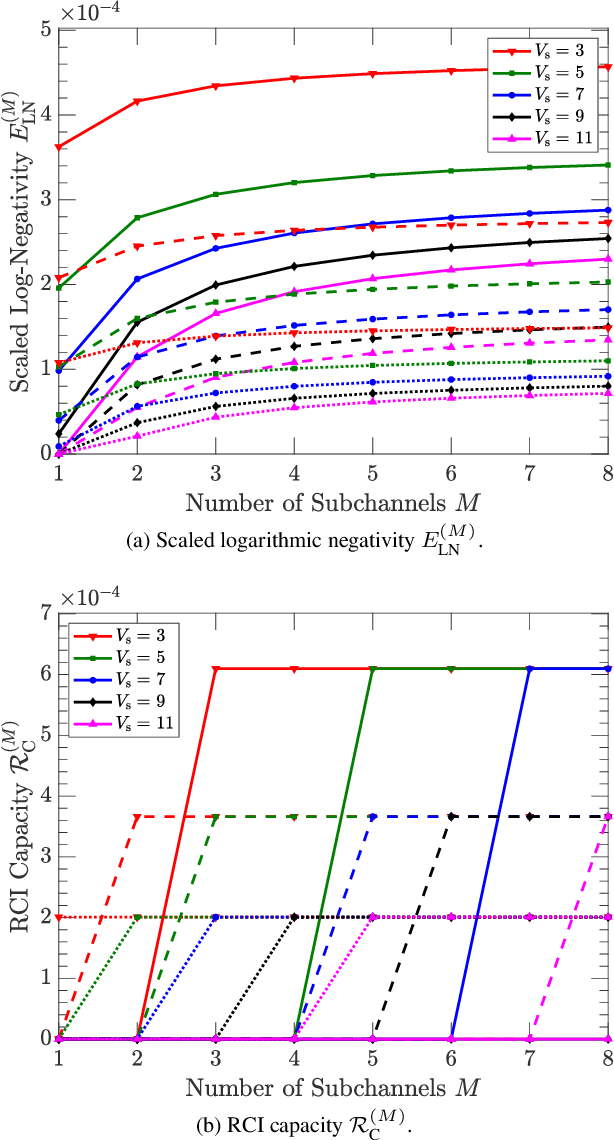

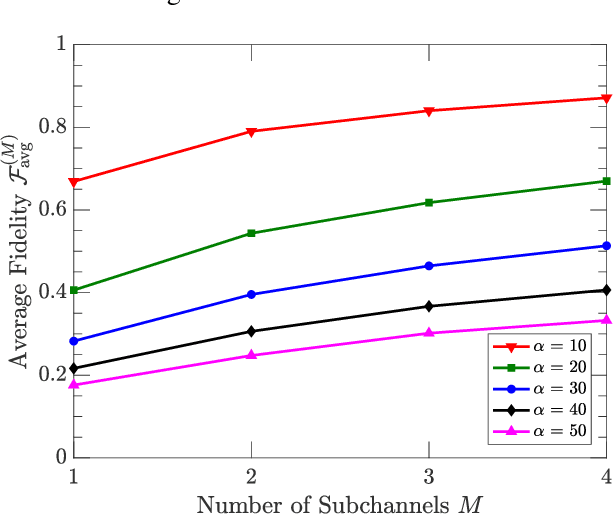

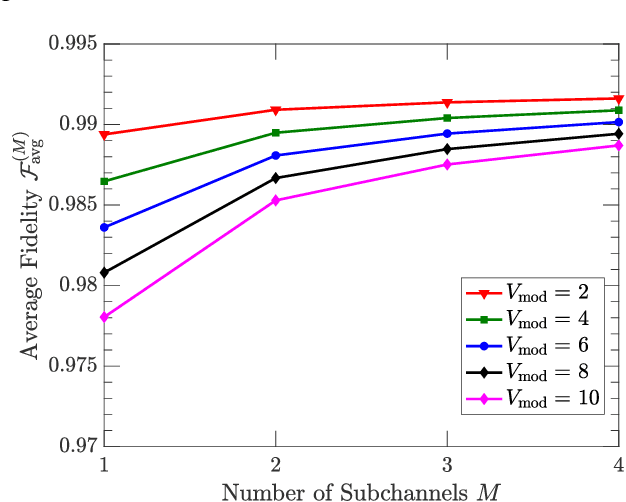

Abstract: Despite being an integral part of the vision of quantum Internet, Earth-to-satellite (uplink) quantum communications have been considered more challenging than their satellite-to-Earth (downlink) counterparts due to the severe channel-loss fluctuations (fading) induced by atmospheric turbulence. The question of how to address the negative impact of fading on Earth-to-satellite quantum communications remains largely an open issue. In this work, we explore the feasibility of exploiting spatial diversity as a means of fading mitigation in Earth-to-satellite Continuous-Variable (CV) quantum-classical optical communications. We demonstrate, via both our theoretical analyses of quantum-state evolution and our detailed numerical simulations of uplink optical channels, that the use of spatial diversity can improve the effectiveness of entanglement distribution through the use of multiple transmitting ground stations and a single satellite with multiple receiving apertures. We further show that the transfer of both large (classically-encoded) and small (quantum-modulated) coherent states can benefit from the use of diversity over fading channels. Our work represents the first quantitative investigation into the use of spatial diversity for satellite-based quantum communications in the uplink direction, showing under what circumstances this fading-mitigation paradigm, which has been widely adopted in classical communications, can be helpful within the context of Earth-to-satellite CV quantum communications.
