Haoxiao Wang

TransDiff: Diffusion-Based Method for Manipulating Transparent Objects Using a Single RGB-D Image

Mar 17, 2025

Abstract: Manipulating transparent objects presents significant challenges because their reflection and refraction properties considerably hinder accurate estimation of their 3D shapes. To address these challenges, we propose TransDiff, a single-view RGB-D depth completion framework that leverages Denoising Diffusion Probabilistic Models (DDPM) to achieve material-agnostic object grasping in tabletop settings. Specifically, we use features extracted from RGB images, including semantic segmentation, edge maps, and normal maps, to condition the depth map generation process. Our method learns an iterative denoising process that transforms a random depth distribution into a depth map, guided by initially refined depth information, which yields more accurate depth estimation in scenes containing transparent objects. Additionally, we propose a novel training method that better aligns the noisy depth with the RGB image features used as conditions to refine the depth estimate step by step. Finally, we use an improved inference process to accelerate the denoising procedure. Through comprehensive experimental validation, we demonstrate that our method significantly outperforms the baselines on both synthetic and real-world benchmarks with acceptable inference time. The demo of our method can be found at https://wang-haoxiao.github.io/TransDiff/
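
The abstract describes an iterative denoising process that turns random noise into a depth map under RGB-derived conditions. The sketch below shows the generic DDPM ancestral sampling loop that such a method builds on. It assumes a linear noise schedule and the sigma_t^2 = beta_t variance choice from the original DDPM paper. `predict_noise` is a hypothetical stand-in for the conditional network, and `zero_noise` exists only to make the sketch runnable. The loop omits TransDiff's training scheme and its accelerated inference, so it is not the authors' implementation.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced noise variances beta_1..beta_T (num_steps >= 2)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def ddpm_sample(predict_noise, cond, size, num_steps=50, seed=0):
    """Generic DDPM ancestral sampling over a flattened depth map.

    predict_noise(x_t, t, cond) is a hypothetical conditional network that
    returns one predicted noise value per pixel; `cond` stands in for the
    RGB-derived features and coarse depth used as conditions.
    """
    rng = random.Random(seed)
    betas = linear_beta_schedule(num_steps)
    alphas = [1.0 - b for b in betas]
    alpha_bars, prod = [], 1.0
    for a in alphas:
        prod *= a
        alpha_bars.append(prod)  # cumulative product alpha_bar_t

    x = [rng.gauss(0.0, 1.0) for _ in range(size)]  # x_T ~ N(0, I)
    for t in reversed(range(num_steps)):
        eps = predict_noise(x, t, cond)
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        # Model mean: (x_t - beta_t / sqrt(1 - alpha_bar_t) * eps) / sqrt(alpha_t)
        mean = [(xi - coef * ei) / math.sqrt(alphas[t]) for xi, ei in zip(x, eps)]
        if t > 0:
            sigma = math.sqrt(betas[t])  # the sigma_t^2 = beta_t choice
            x = [m + sigma * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean  # no noise is added at the final step
    return x


def zero_noise(x, t, cond):
    """Placeholder predictor used only to make the sketch runnable."""
    return [0.0] * len(x)


depth = ddpm_sample(zero_noise, cond=None, size=16, num_steps=10, seed=1)
print(len(depth))  # 16
```

In a real depth-completion model, `predict_noise` would be a network that consumes the noisy depth together with the conditioning features. Accelerated samplers shorten this loop by skipping timesteps.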

WavRAG: Audio-Integrated Retrieval Augmented Generation for Spoken Dialogue Models

Feb 20, 2025

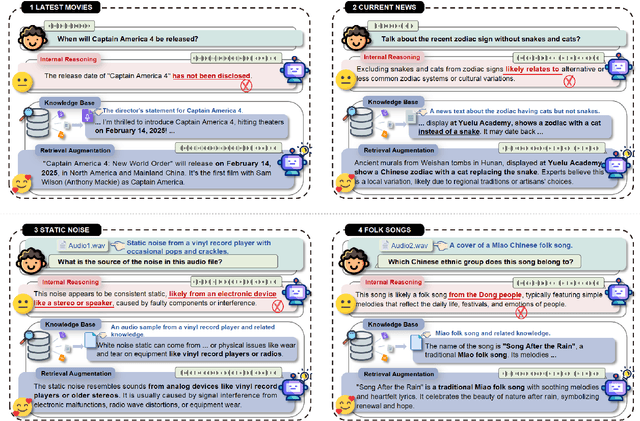

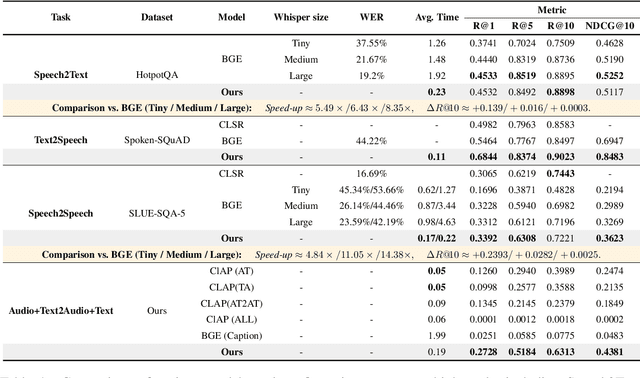

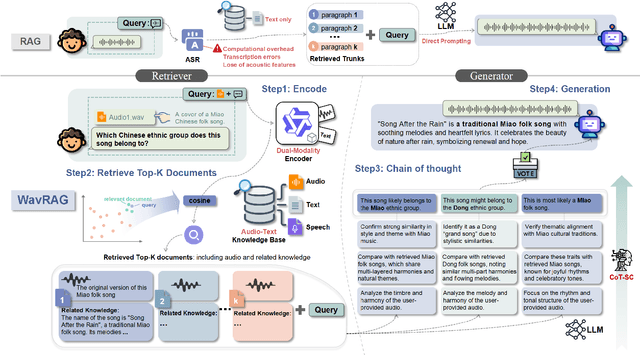

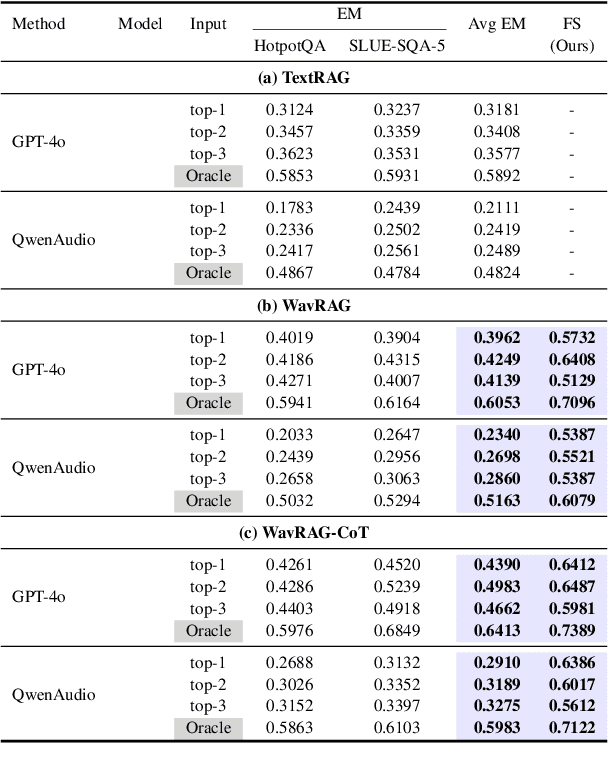

Abstract: Retrieval Augmented Generation (RAG) has gained widespread adoption owing to its capacity to let large language models (LLMs) integrate external knowledge. However, existing RAG frameworks are primarily designed for text-based LLMs and rely on Automatic Speech Recognition (ASR) to process speech input, which discards crucial audio information, risks transcription errors, and increases computational overhead. We therefore introduce WavRAG, the first retrieval augmented generation framework with native, end-to-end audio support. WavRAG offers two key features: 1) bypassing ASR, WavRAG directly processes raw audio for both embedding and retrieval; 2) WavRAG integrates audio and text into a unified knowledge representation. Specifically, we propose the WavRetriever to facilitate retrieval from a text-audio hybrid knowledge base, and we further enhance the in-context capabilities of spoken dialogue models by integrating chain-of-thought reasoning. Compared with state-of-the-art ASR-Text RAG pipelines, WavRAG achieves comparable retrieval performance while delivering a 10x speedup. Furthermore, WavRAG's unique text-audio hybrid retrieval capability extends RAG to the audio modality.
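
The abstract describes retrieval from a text-audio hybrid knowledge base but does not give the WavRetriever's internals. The sketch below assumes the simplest form of dense retrieval. Every entry, text or audio, is taken to be already embedded into one shared vector space by some encoder, which is hypothetical here, and entries are ranked against the query embedding by cosine similarity. `Entry`, `retrieve`, the file names, and the toy vectors are illustrative only.

```python
import math
from dataclasses import dataclass


@dataclass
class Entry:
    modality: str            # "text" or "audio"
    source: str              # hypothetical identifier, e.g. a file name
    embedding: list[float]   # vector from a hypothetical shared text/audio encoder


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return 0.0 if na == 0 or nb == 0 else dot / (na * nb)


def retrieve(query_embedding, knowledge_base, k=2):
    """Return the k entries most similar to the query, regardless of modality."""
    ranked = sorted(knowledge_base,
                    key=lambda e: cosine(query_embedding, e.embedding),
                    reverse=True)
    return ranked[:k]


kb = [
    Entry("text", "doc_a.txt", [1.0, 0.0, 0.0]),
    Entry("audio", "clip_b.wav", [0.9, 0.1, 0.0]),
    Entry("text", "doc_c.txt", [0.0, 0.0, 1.0]),
]
# The query would be an embedding of raw speech, with no ASR transcript step.
hits = retrieve([1.0, 0.0, 0.0], kb, k=2)
print([h.source for h in hits])  # ['doc_a.txt', 'clip_b.wav']
```

Because text and audio share one embedding space in this sketch, a single ranking mixes both modalities. A production system would replace the linear scan with an approximate nearest-neighbor index.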

AdaFSNet: Time Series Classification Based on Convolutional Network with a Adaptive and Effective Kernel Size Configuration

Apr 28, 2024

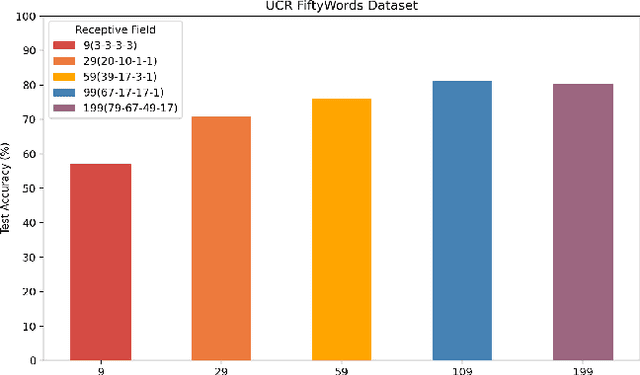

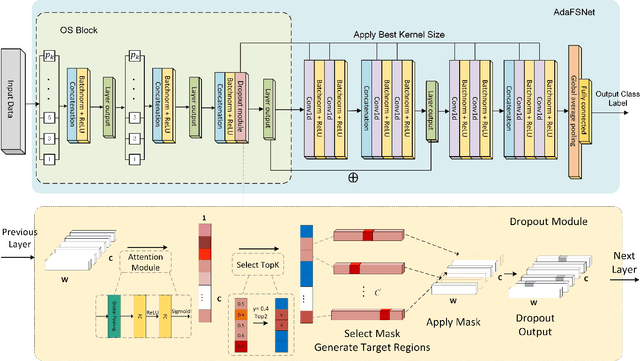

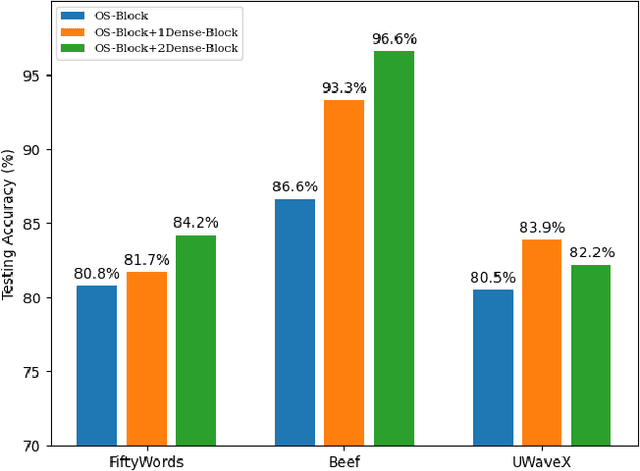

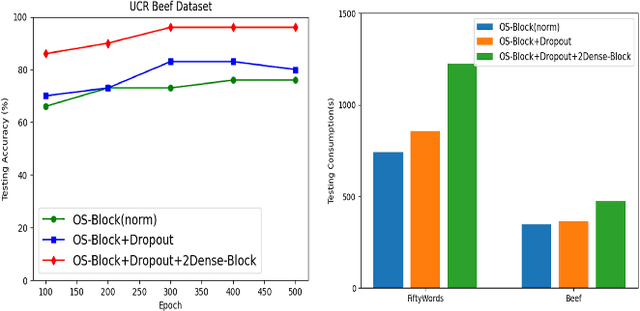

Abstract: Time series classification is one of the most critical and challenging problems in data mining; it arises in many fields and holds significant research importance. Despite extensive research and notable achievements with successful real-world applications, capturing the appropriate receptive field (RF) size from one-dimensional or multi-dimensional time series of varying lengths remains a persistent challenge: the right RF size greatly affects performance and varies considerably across datasets. In this paper, we propose an Adaptive and Effective Full-Scope Convolutional Neural Network (AdaFSNet) to improve the accuracy of time series classification. The network includes two Dense Blocks. In particular, it can dynamically choose a range of kernel sizes that effectively encompasses the optimal RF size for various datasets by incorporating multiple prime numbers corresponding to the time series length. We also design a TargetDrop block, which reduces redundancy while extracting a more effective RF. To assess the effectiveness of AdaFSNet, we conducted comprehensive experiments on the UCR and UEA datasets, which contain one-dimensional and multi-dimensional time series data, respectively. Our model surpassed the baseline models in classification accuracy, underscoring AdaFSNet's efficiency and effectiveness in time series classification.
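
The abstract does not spell out how prime numbers tied to the series length become kernel sizes. The sketch below is one plausible reading, not AdaFSNet's published configuration. It assumes the kernel sizes are 1 plus every prime up to a cap proportional to the series length; the `fraction=0.25` knob is hypothetical. It also assumes a three-layer stack of (prime, prime, {1, 2}) kernels. The sketch then checks which receptive-field sizes that stack can reach. For stride-1, undilated convolutions, the RF is the sum of kernel sizes minus (layers - 1). Sums of a few small primes cover a contiguous range of RF sizes, which is one reason primes are attractive here.

```python
def primes_up_to(n):
    """Sieve of Eratosthenes: all primes <= n."""
    if n < 2:
        return []
    is_prime = [True] * (n + 1)
    is_prime[0] = is_prime[1] = False
    for i in range(2, int(n ** 0.5) + 1):
        if is_prime[i]:
            for j in range(i * i, n + 1, i):
                is_prime[j] = False
    return [i for i, flag in enumerate(is_prime) if flag]


def prime_kernel_sizes(series_length, fraction=0.25):
    """Kernel sizes: 1 plus every prime up to a length-dependent cap.

    `fraction` is a hypothetical knob, not a value taken from AdaFSNet.
    """
    cap = max(2, int(series_length * fraction))
    return [1] + primes_up_to(cap)


def receptive_fields(first, second, last=(1, 2)):
    """RF sizes reachable by three stacked stride-1, undilated conv layers.

    For such a stack the RF is k1 + k2 + k3 - 2.
    """
    return {a + b + c - 2 for a in first for b in second for c in last}


sizes = prime_kernel_sizes(40)
print(sizes)  # [1, 2, 3, 5, 7]
print(sorted(receptive_fields(sizes, sizes)))  # every size from 1 through 14
```

With a 40-step series, five kernel sizes per layer already reach every RF size from 1 to 14. Enumerating all of those kernel sizes directly would be far more expensive.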

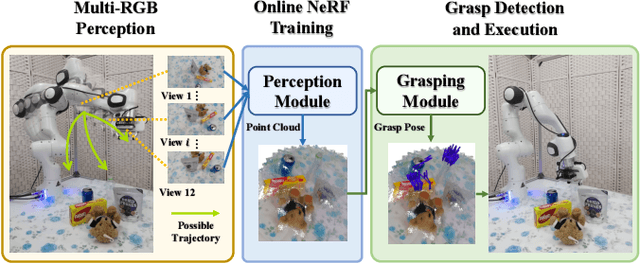

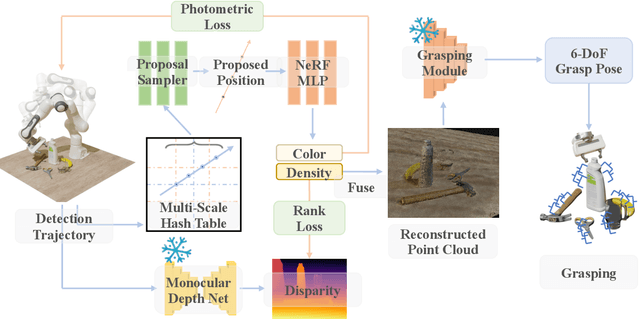

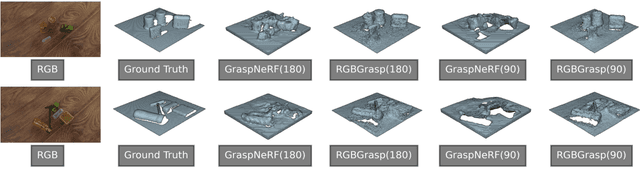

RGBGrasp: Image-based Object Grasping by Capturing Multiple Views during Robot Arm Movement with Neural Radiance Fields

Nov 28, 2023

Abstract: Grasping objects of varied shapes, materials, and textures remains a significant hurdle in robotics research. Unlike many prior works that rely heavily on specialized point-cloud cameras or abundant RGB views to gather 3D information for object grasping, this paper introduces a new approach called RGBGrasp. The method relies on a limited set of RGB views to perceive 3D scenes containing transparent and specular objects and to achieve accurate grasping. It uses pre-trained depth prediction models to establish geometry constraints, enabling precise 3D structure estimation even when only a few views are available. Finally, we integrate hash encoding and a proposal sampler strategy to significantly accelerate the 3D reconstruction process. These innovations significantly enhance the adaptability and effectiveness of our algorithm in real-world scenarios. Through comprehensive experimental validation, we demonstrate that RGBGrasp achieves remarkable success across a wide spectrum of object-grasping scenarios, establishing it as a promising solution for real-world robotic manipulation tasks. The demo of our method can be found at: https://sites.google.com/view/rgbgrasp
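
The abstract names hash encoding as one of the accelerations without giving details. A widely used form is the multiresolution hash encoding of Instant-NGP (Müller et al., 2022), and the sketch below assumes that variant with hypothetical hyperparameters. It is simplified: it hashes at every level, whereas the original indexes coarse levels directly when the grid fits in the table. It also leaves the tables untrained. The proposal sampler is not shown, and none of this is RGBGrasp's code.

```python
import math
import random

# Per-axis constants of the Instant-NGP spatial hash.
HASH_PRIMES = (1, 2654435761, 805459861)


def spatial_hash(coords, table_size):
    """XOR of (coordinate * prime) per axis, modulo the table size."""
    h = 0
    for c, p in zip(coords, HASH_PRIMES):
        h ^= c * p
    return h % table_size


class HashEncoding:
    """Simplified multiresolution hash encoding of 3D points in [0, 1]^3."""

    def __init__(self, levels=4, table_size=2 ** 12, features=2,
                 n_min=16, n_max=256, seed=0):
        rng = random.Random(seed)
        # Geometric growth of grid resolution from n_min to n_max.
        growth = math.exp((math.log(n_max) - math.log(n_min)) / (levels - 1))
        self.resolutions = [math.floor(n_min * growth ** l) for l in range(levels)]
        self.table_size = table_size
        # One feature table per level; during training these entries are learned.
        self.tables = [
            [[rng.uniform(-1e-4, 1e-4) for _ in range(features)]
             for _ in range(table_size)]
            for _ in range(levels)
        ]

    def encode(self, point):
        """Concatenate trilinearly interpolated features from every level."""
        out = []
        for res, table in zip(self.resolutions, self.tables):
            scaled = [c * res for c in point]
            base = [math.floor(s) for s in scaled]
            frac = [s - b for s, b in zip(scaled, base)]
            acc = [0.0] * len(table[0])
            for corner in range(8):  # the 8 corners of the enclosing voxel
                offs = [(corner >> axis) & 1 for axis in range(3)]
                weight = 1.0
                for f, o in zip(frac, offs):
                    weight *= f if o else 1.0 - f
                idx = spatial_hash([b + o for b, o in zip(base, offs)], self.table_size)
                for i, v in enumerate(table[idx]):
                    acc[i] += weight * v
            out.extend(acc)
        return out


enc = HashEncoding()
print(len(enc.encode((0.3, 0.5, 0.7))))  # 8 = 4 levels x 2 features
```

The speedup comes from replacing a large coordinate MLP with small table lookups. The concatenated features would feed a much smaller network that predicts density and color.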
