Zhengyu Zhang

A Deployment-Friendly Foundational Framework for Efficient Computational Pathology

Feb 15, 2026

Abstract:Pathology foundation models (PFMs) have enabled robust generalization in computational pathology through large-scale datasets and expansive architectures, but their substantial computational cost, particularly for gigapixel whole slide images, limits clinical accessibility and scalability. Here, we present LitePath, a deployment-friendly foundational framework designed to mitigate model over-parameterization and patch-level redundancy. LitePath integrates LiteFM, a compact model distilled from three large PFMs (Virchow2, H-Optimus-1 and UNI2) using 190 million patches, and the Adaptive Patch Selector (APS), a lightweight component for task-specific patch selection. The framework reduces model parameters by 28x and lowers FLOPs by 403.5x relative to Virchow2, enabling deployment on low-power edge hardware such as the NVIDIA Jetson Orin Nano Super. On this device, LitePath processes 208 slides per hour, 104.5x faster than Virchow2, and consumes 0.36 kWh per 3,000 slides, 171x lower than Virchow2 on an RTX3090 GPU. We validated accuracy using 37 cohorts (26 internal, 9 external, and 2 prospective) across four organs and 26 tasks, comprising 15,672 slides from 9,808 patients disjoint from the pretraining data. LitePath ranks second among 19 evaluated models and outperforms larger models including H-Optimus-1, mSTAR, UNI2 and GPFM, while retaining 99.71% of the AUC of Virchow2 on average. To quantify the balance between accuracy and efficiency, we propose the Deployability Score (D-Score), defined as the weighted geometric mean of normalized AUC and normalized FLOPs, where LitePath achieves the highest value, surpassing Virchow2 by 10.64%. These results demonstrate that LitePath enables rapid, cost-effective and energy-efficient pathology image analysis on accessible hardware while maintaining accuracy comparable to state-of-the-art PFMs and reducing the carbon footprint of AI deployment.
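
The D-Score is described only as a weighted geometric mean of a normalized accuracy term and a normalized compute term. The following is a minimal sketch of that general form, not the authors' implementation. The function name `d_score`, the default weight of 0.5, and the convention that both inputs are already scaled to (0, 1] with higher meaning better are all assumptions made for illustration; the abstract does not state the weights or the normalization.

```python
def d_score(norm_auc: float, norm_efficiency: float, auc_weight: float = 0.5) -> float:
    """Weighted geometric mean of two normalized scores.

    Assumptions (not taken from the abstract): both inputs lie in (0, 1],
    higher is better for both, and the two weights sum to 1.
    """
    if not (0.0 < norm_auc <= 1.0 and 0.0 < norm_efficiency <= 1.0):
        raise ValueError("normalized scores must lie in (0, 1]")
    if not 0.0 <= auc_weight <= 1.0:
        raise ValueError("auc_weight must lie in [0, 1]")
    # Geometric mean with exponents auc_weight and (1 - auc_weight).
    return (norm_auc ** auc_weight) * (norm_efficiency ** (1.0 - auc_weight))


if __name__ == "__main__":
    # Hypothetical values, chosen only to show the call.
    print(d_score(0.81, 1.0))
```

A geometric mean penalizes a model that is strong on one axis but very weak on the other more heavily than an arithmetic mean would, which is one plausible reason to prefer it for an accuracy/efficiency trade-off.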

Artificial Intelligence Empowered Channel Prediction: A New Paradigm for Propagation Channel Modeling

Jan 14, 2026

Abstract:This paper proposes a novel paradigm centered on Artificial Intelligence (AI)-empowered propagation channel prediction to address the limitations of traditional channel modeling. We present a comprehensive framework that deeply integrates heterogeneous environmental data and physical propagation knowledge into AI models for site-specific channel prediction, which is referred to as channel inference. By leveraging AI to infer site-specific wireless channel states, the proposed paradigm enables accurate prediction of channel characteristics at both link and area levels, capturing the spatio-temporal evolution of radio propagation. Several novel strategies to realize the paradigm are introduced and discussed, including AI-native and AI-hybrid inference approaches. This paper also investigates how to enhance model generalization through transfer learning and improve interpretability via explainable AI techniques. Our approach demonstrates significant practical efficacy, achieving an average path loss prediction root mean square error (RMSE) of approximately 4 dB and reducing training time by 60%-75%. This new modeling paradigm provides a foundational pathway toward high-fidelity, generalizable, and physically consistent propagation channel prediction for future communication networks.

MambaMIL+: Modeling Long-Term Contextual Patterns for Gigapixel Whole Slide Image

Dec 19, 2025

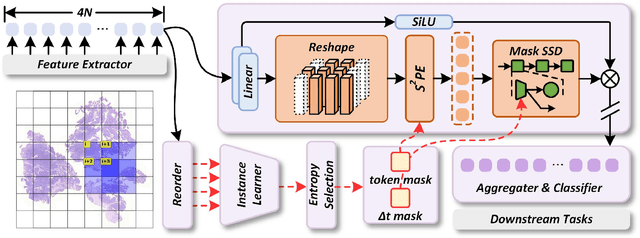

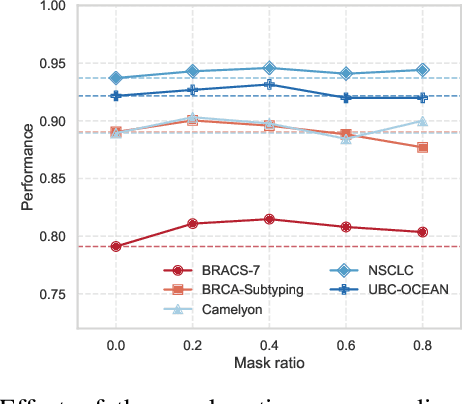

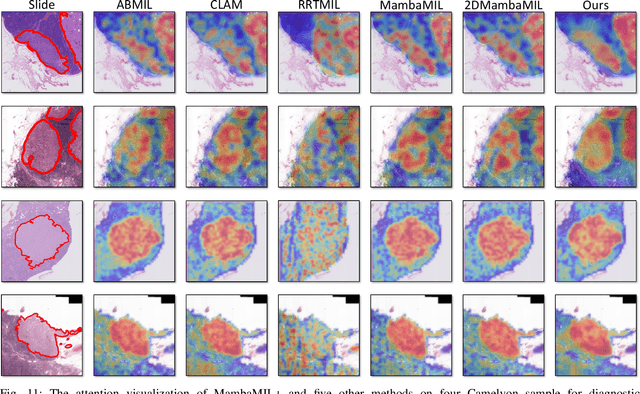

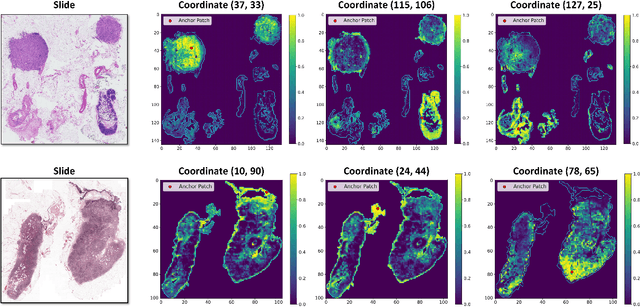

Abstract:Whole-slide images (WSIs) are an important data modality in computational pathology, yet their gigapixel resolution and lack of fine-grained annotations challenge conventional deep learning models. Multiple instance learning (MIL) offers a solution by treating each WSI as a bag of patch-level instances, but effectively modeling ultra-long sequences with rich spatial context remains difficult. Recently, Mamba has emerged as a promising alternative for long sequence learning, scaling linearly to thousands of tokens. However, despite its efficiency, it still suffers from limited spatial context modeling and memory decay, constraining its effectiveness for WSI analysis. To address these limitations, we propose MambaMIL+, a new MIL framework that explicitly integrates spatial context while maintaining long-range dependency modeling without memory forgetting. Specifically, MambaMIL+ introduces 1) overlapping scanning, which restructures the patch sequence to embed spatial continuity and instance correlations; 2) a selective stripe position encoder (S2PE) that encodes positional information while mitigating the biases of fixed scanning orders; and 3) a contextual token selection (CTS) mechanism, which leverages supervisory knowledge to dynamically enlarge the contextual memory for stable long-range modeling. Extensive experiments on 20 benchmarks across diagnostic classification, molecular prediction, and survival analysis demonstrate that MambaMIL+ consistently achieves state-of-the-art performance under three feature extractors (ResNet-50, PLIP, and CONCH), highlighting its effectiveness and robustness for large-scale computational pathology.

Meta Lattice: Model Space Redesign for Cost-Effective Industry-Scale Ads Recommendations

Dec 15, 2025

Abstract:The rapidly evolving landscape of products, surfaces, policies, and regulations poses significant challenges for deploying state-of-the-art recommendation models at industry scale, primarily due to data fragmentation across domains and escalating infrastructure costs that hinder sustained quality improvements. To address these challenges, we propose Lattice, a recommendation framework centered around model space redesign that extends Multi-Domain, Multi-Objective (MDMO) learning beyond models and learning objectives. This redesign combines cross-domain knowledge sharing, data consolidation, model unification, distillation, and system optimizations to achieve significant improvements in both quality and cost-efficiency. Our deployment of Lattice at Meta has resulted in a 10% gain in revenue-driving top-line metrics, an 11.5% improvement in user satisfaction, and a 6% boost in conversion rate, with a 20% capacity saving.

A Geometry Map-Based Site-Specific Propagation Channel Model for Urban Scenarios

Nov 19, 2025

Abstract:With the rapid deployment of 5G and 6G networks, accurate modeling of urban radio propagation has become critical for system design and network planning. However, conventional statistical or empirical models fail to fully capture the influence of detailed geometric features on site-specific channel variances in dense urban environments. In this paper, we propose a geometry map-based propagation channel model that directly extracts key parameters from a 3D geometry map and incorporates the Uniform Theory of Diffraction (UTD) to recursively compute multiple diffraction fields, thereby enabling accurate prediction of site-specific large-scale path loss and time-varying Doppler characteristics in urban scenarios. A well-designed identification algorithm is developed to efficiently detect buildings that significantly affect signal propagation. The proposed model is validated using urban measurement data, showing excellent agreement of path loss in both line-of-sight (LOS) and non-line-of-sight (NLOS) conditions. In particular, for NLOS scenarios with complex diffractions, it outperforms the 3GPP and simplified models, reducing the RMSE by 7.1 dB and 3.18 dB, respectively. Doppler analysis further demonstrates its accuracy in capturing time-varying propagation characteristics, confirming the scalability and generalization of the model in urban environments.

ExplainableGuard: Interpretable Adversarial Defense for Large Language Models Using Chain-of-Thought Reasoning

Nov 15, 2025

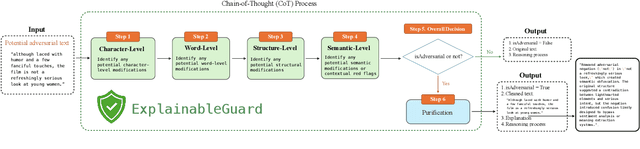

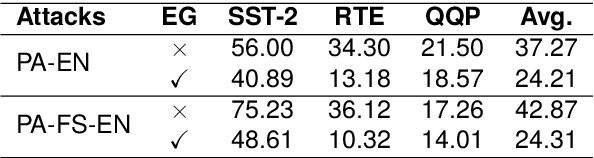

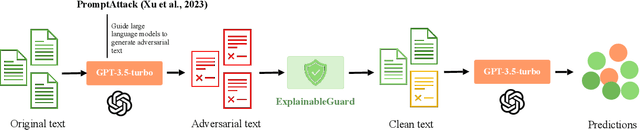

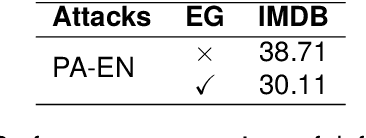

Abstract:Large Language Models (LLMs) are increasingly vulnerable to adversarial attacks that can subtly manipulate their outputs. While various defense mechanisms have been proposed, many operate as black boxes, lacking transparency in their decision-making. This paper introduces ExplainableGuard, an interpretable adversarial defense framework leveraging the chain-of-thought (CoT) reasoning capabilities of DeepSeek-Reasoner. Our approach not only detects and neutralizes adversarial perturbations in text but also provides step-by-step explanations for each defense action. We demonstrate how tailored CoT prompts guide the LLM to perform a multi-faceted analysis (character, word, structural, and semantic) and generate a purified output along with a human-readable justification. Preliminary results on the GLUE Benchmark and IMDB Movie Reviews dataset show promising defense efficacy. Additionally, a human evaluation study reveals that ExplainableGuard's explanations outperform ablated variants in clarity, specificity, and actionability, with a 72.5% deployability-trust rating, underscoring its potential for more trustworthy LLM deployments.

Deep Learning Based Dynamic Environment Reconstruction for Vehicular ISAC Scenarios

Aug 07, 2025

Abstract:Integrated Sensing and Communication (ISAC) technology plays a critical role in future intelligent transportation systems by enabling vehicles to perceive and reconstruct the surrounding environment through reuse of wireless signals, thereby reducing or even eliminating the need for additional sensors such as LiDAR or radar. However, existing ISAC-based reconstruction methods often lack the ability to track dynamic scenes with sufficient accuracy and temporal consistency, limiting their real-world applicability. To address this limitation, we propose a deep learning-based framework for vehicular environment reconstruction using ISAC channels. We first establish a joint channel-environment dataset based on multi-modal measurements from real-world urban street scenarios. Then, a multistage deep learning network is developed to reconstruct the environment. Specifically, a scene decoder identifies the environmental context, such as buildings and trees; a cluster center decoder predicts coarse spatial layouts by localizing dominant scattering centers; and a point cloud decoder recovers the fine-grained geometry and structure of the surrounding environment. Experimental results demonstrate that the proposed method achieves high-quality dynamic environment reconstruction with a Chamfer Distance of 0.29 and an F-Score@1% of 0.87. In addition, complexity analysis demonstrates the efficiency and practical applicability of the method in real-time scenarios. This work provides a pathway toward low-cost environment reconstruction based on ISAC for future intelligent transportation.
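
For readers unfamiliar with the two point cloud metrics quoted above, the sketch below shows one common way to compute them. It is an illustration under stated assumptions, not the paper's evaluation code: Chamfer Distance is taken here as the sum of the two directional mean nearest-neighbor Euclidean distances (other variants use squared distances or averages), and the F-score uses an absolute distance threshold `tau`, whereas the abstract's "@1%" threshold is not defined there. The function names are hypothetical.

```python
import math


def _nearest(point, cloud):
    """Euclidean distance from one point to its nearest neighbor in a cloud."""
    return min(math.dist(point, other) for other in cloud)


def chamfer_distance(pred, gt):
    """Symmetric Chamfer Distance (assumed variant: sum of directional means)."""
    pred_to_gt = sum(_nearest(p, gt) for p in pred) / len(pred)
    gt_to_pred = sum(_nearest(g, pred) for g in gt) / len(gt)
    return pred_to_gt + gt_to_pred


def f_score(pred, gt, tau):
    """F-score at an absolute distance threshold tau (an assumed convention)."""
    # Precision: share of predicted points that lie within tau of the ground truth.
    precision = sum(_nearest(p, gt) <= tau for p in pred) / len(pred)
    # Recall: share of ground-truth points that lie within tau of the prediction.
    recall = sum(_nearest(g, pred) <= tau for g in gt) / len(gt)
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Tiny hypothetical clouds, for illustration only.
    predicted = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
    reference = [(0.0, 0.0, 0.0)]
    print(chamfer_distance(predicted, reference), f_score(predicted, reference, 0.5))
```

The brute-force nearest-neighbor search is quadratic in the number of points; a spatial index such as a k-d tree would be the usual choice for realistic cloud sizes.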

A Versatile Pathology Co-pilot via Reasoning Enhanced Multimodal Large Language Model

Jul 23, 2025

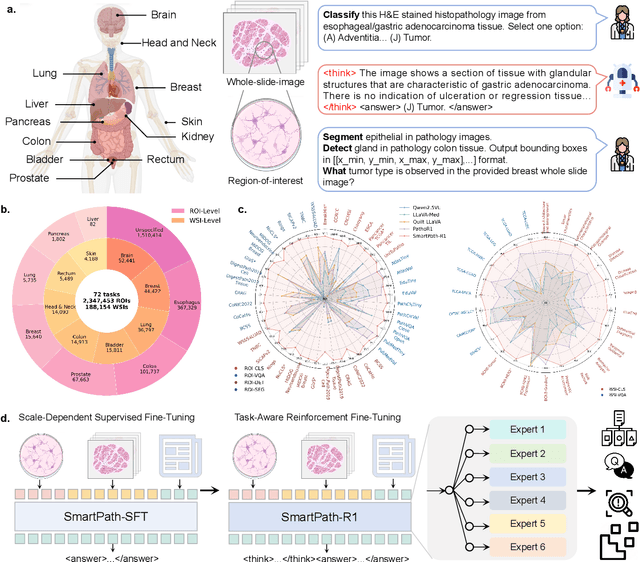

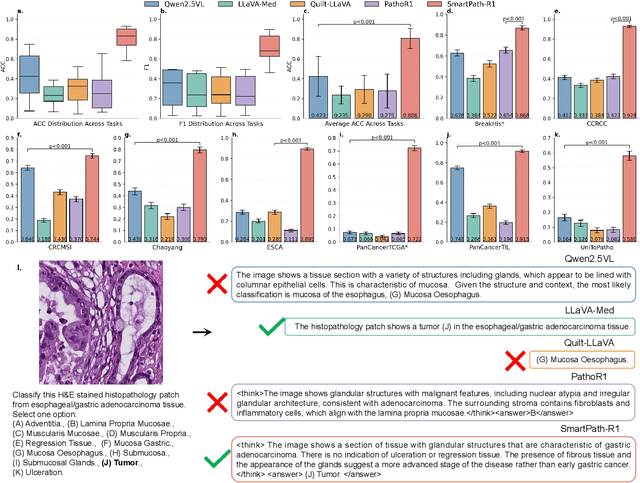

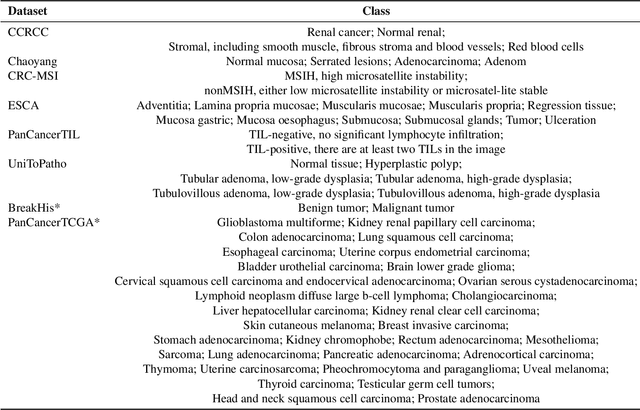

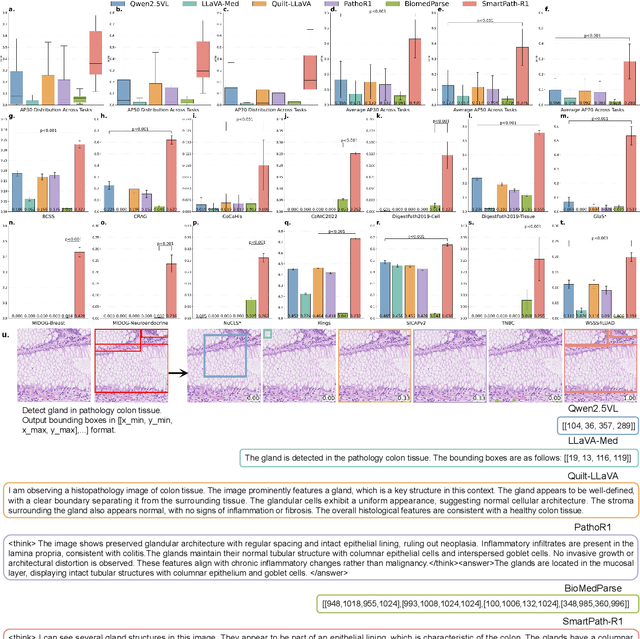

Abstract:Multimodal large language models (MLLMs) have emerged as powerful tools for computational pathology, offering unprecedented opportunities to integrate pathological images with language context for comprehensive diagnostic analysis. These models hold particular promise for automating complex tasks that traditionally require expert interpretation by pathologists. However, current MLLM approaches in pathology demonstrate significantly constrained reasoning capabilities, primarily due to their reliance on expensive chain-of-thought annotations. Additionally, existing methods remain limited to visual question answering (VQA) at the region-of-interest (ROI) level, failing to address the full spectrum of diagnostic needs in clinical practice, such as ROI classification, detection, segmentation, whole-slide-image (WSI) classification and VQA. In this study, we present SmartPath-R1, a versatile MLLM capable of simultaneously addressing both ROI-level and WSI-level tasks while demonstrating robust pathological reasoning capability. Our framework combines scale-dependent supervised fine-tuning and task-aware reinforcement fine-tuning, which circumvents the requirement for chain-of-thought supervision by leveraging the intrinsic knowledge within the MLLM. Furthermore, SmartPath-R1 integrates multiscale and multitask analysis through a mixture-of-experts mechanism, enabling dynamic processing for diverse tasks. We curate a large-scale dataset comprising 2.3M ROI samples and 188K WSI samples for training and evaluation. Extensive experiments across 72 tasks validate the effectiveness and superiority of the proposed approach. This work represents a significant step toward developing versatile, reasoning-enhanced AI systems for precision pathology.

Prototypical Progressive Alignment and Reweighting for Generalizable Semantic Segmentation

Jul 16, 2025

Abstract:Generalizable semantic segmentation aims to perform well on unseen target domains, a critical challenge due to real-world applications requiring high generalizability. Class-wise prototypes, representing class centroids, serve as domain-invariant cues that benefit generalization due to their stability and semantic consistency. However, this approach faces three challenges. First, existing methods often adopt coarse prototypical alignment strategies, which may hinder performance. Second, naive prototypes computed by averaging source batch features are prone to overfitting and may be negatively affected by unrelated source data. Third, most methods treat all source samples equally, ignoring the fact that different features have varying adaptation difficulties. To address these limitations, we propose a novel framework for generalizable semantic segmentation: Prototypical Progressive Alignment and Reweighting (PPAR), leveraging the strong generalization ability of the CLIP model. Specifically, we define two prototypes: the Original Text Prototype (OTP) and Visual Text Prototype (VTP), generated via CLIP to serve as a solid base for alignment. We then introduce a progressive alignment strategy that aligns features in an easy-to-difficult manner, reducing domain gaps gradually. Furthermore, we propose a prototypical reweighting mechanism that estimates the reliability of source data and adjusts its contribution, mitigating the effect of irrelevant or harmful features (i.e., reducing negative transfer). We also provide a theoretical analysis showing the alignment between our method and domain generalization theory. Extensive experiments across multiple benchmarks demonstrate that PPAR achieves state-of-the-art performance, validating its effectiveness.
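
The "naive prototypes" that the abstract contrasts with PPAR are class centroids obtained by averaging source features per class. The sketch below illustrates only that baseline averaging step, not the OTP/VTP prototypes, the progressive alignment, or the reweighting mechanism. The function name `class_prototypes` and the toy feature vectors are hypothetical.

```python
def class_prototypes(features, labels):
    """Return the per-class mean feature vector (a naive class centroid)."""
    sums = {}
    counts = {}
    for vector, label in zip(features, labels):
        if label not in sums:
            sums[label] = [0.0] * len(vector)
            counts[label] = 0
        # Accumulate the feature vector into the running sum for its class.
        for i, value in enumerate(vector):
            sums[label][i] += value
        counts[label] += 1
    # Divide each accumulated sum by the number of samples in that class.
    return {
        label: [total / counts[label] for total in vector_sum]
        for label, vector_sum in sums.items()
    }


if __name__ == "__main__":
    # Toy 2-D features with made-up class names, for illustration only.
    feats = [[1.0, 0.0], [3.0, 2.0], [0.0, 4.0]]
    labs = ["road", "road", "sky"]
    print(class_prototypes(feats, labs))
```

Because every sample contributes equally to its centroid, a few unrelated or noisy source features can shift the prototype, which is the weakness the abstract attributes to this averaging scheme.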

Deep Learning-based Human Gesture Channel Modeling for Integrated Sensing and Communication Scenarios

Jul 09, 2025

Abstract:With the development of Integrated Sensing and Communication (ISAC) for Sixth-Generation (6G) wireless systems, contactless human recognition has emerged as one of the key application scenarios. Since human gesture motion induces subtle and random variations in wireless multipath propagation, how to accurately model human gesture channels has become a crucial issue for the design and validation of ISAC systems. To this end, this paper proposes a deep learning-based human gesture channel modeling framework for ISAC scenarios, in which the human body is decomposed into multiple body parts, and the mapping between human gestures and their corresponding multipath characteristics is learned from real-world measurements. Specifically, a Poisson neural network is employed to predict the number of Multi-Path Components (MPCs) for each human body part, while Conditional Variational Auto-Encoders (C-VAEs) are reused to generate the scattering points, which are further used to reconstruct continuous channel impulse responses and micro-Doppler signatures. Simulation results demonstrate that the proposed method achieves high accuracy and generalization across different gestures and subjects, providing an interpretable approach for data augmentation and the evaluation of gesture-based ISAC systems.
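
A network that predicts a count, such as the number of MPCs per body part, is commonly trained by having it output a Poisson rate and minimizing the Poisson negative log-likelihood. The abstract does not state the loss actually used, so the sketch below is an assumption that shows only the standard per-sample formula, rate - k*log(rate) + log(k!). The function name `poisson_nll` is hypothetical.

```python
import math


def poisson_nll(count: int, rate: float) -> float:
    """Negative log-likelihood of an observed count under Poisson(rate)."""
    if rate <= 0.0:
        raise ValueError("rate must be positive")
    if count < 0:
        raise ValueError("count must be non-negative")
    # -log P(k | rate) = rate - k*log(rate) + log(k!), with log(k!) = lgamma(k + 1).
    return rate - count * math.log(rate) + math.lgamma(count + 1)


if __name__ == "__main__":
    # Hypothetical example: 3 observed multipath components, two candidate rates.
    print(poisson_nll(3, 3.0), poisson_nll(3, 1.0))
```

The loss is smallest when the predicted rate equals the observed count, so a rate of 3.0 scores better than 1.0 for an observation of 3.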
