Jiawei Sheng

Hyperbolic-PDE GNN: Spectral Graph Neural Networks in the Perspective of A System of Hyperbolic Partial Differential Equations

May 29, 2025

Abstract:Graph neural networks (GNNs) leverage message passing mechanisms to learn the topological features of graph data. Traditional GNNs learn node features in a spatial domain unrelated to the topology, which can hardly ensure topological features. In this paper, we formulate message passing as a system of hyperbolic partial differential equations (hyperbolic PDEs), constituting a dynamical system that explicitly maps node representations into a particular solution space. This solution space is spanned by a set of eigenvectors describing the topological structure of graphs. Within this system, for any moment in time, a node's features can be decomposed into a superposition of the basis of eigenvectors. This not only enhances the interpretability of message passing but also enables the explicit extraction of fundamental characteristics about the topological structure. Furthermore, by solving this system of hyperbolic partial differential equations, we establish a connection with spectral graph neural networks (spectral GNNs), serving as a message passing enhancement paradigm for spectral GNNs. We further introduce polynomials to approximate arbitrary filter functions. Extensive experiments demonstrate that the paradigm of hyperbolic PDEs not only exhibits strong flexibility but also significantly enhances the performance of various spectral GNNs across diverse graph tasks.

* 18 pages, 2 figures, published to ICML 2025
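
As a rough illustration of the polynomial filter approximation mentioned in the abstract above, the following minimal sketch applies a filter h(L) = sum_k theta_k L^k to a graph signal using only repeated multiplication by the Laplacian. The three-node path graph, the combinatorial Laplacian L = D - A, the monomial basis, and the names `laplacian`, `matvec`, `poly_filter`, and `theta` are all assumptions made for illustration; the paper may well use a different Laplacian and polynomial basis.

```python
def laplacian(adj):
    """Combinatorial Laplacian L = D - A of an undirected graph (dense lists)."""
    n = len(adj)
    return [[(sum(adj[i]) if i == j else 0) - adj[i][j] for j in range(n)]
            for i in range(n)]


def matvec(mat, vec):
    """Dense matrix-vector product."""
    return [sum(m * v for m, v in zip(row, vec)) for row in mat]


def poly_filter(lap, signal, theta):
    """Apply h(L) x = sum_k theta[k] * L^k x without any eigendecomposition."""
    out = [0.0] * len(signal)
    power = list(signal)  # starts as L^0 x = x
    for coeff in theta:
        out = [o + coeff * p for o, p in zip(out, power)]
        power = matvec(lap, power)  # advance to the next power of L
    return out


# Hypothetical toy graph: a path 0 - 1 - 2.
ADJ = [[0, 1, 0],
       [1, 0, 1],
       [0, 1, 0]]
LAP = laplacian(ADJ)
```

A constant signal lies in the null space of this Laplacian, so only the zeroth coefficient affects it; this is a quick sanity check that the filter acts on the graph spectrum rather than on node positions.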

Graph Wave Networks

May 26, 2025

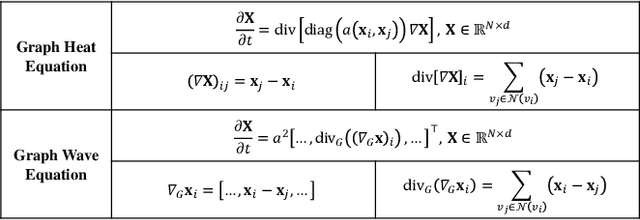

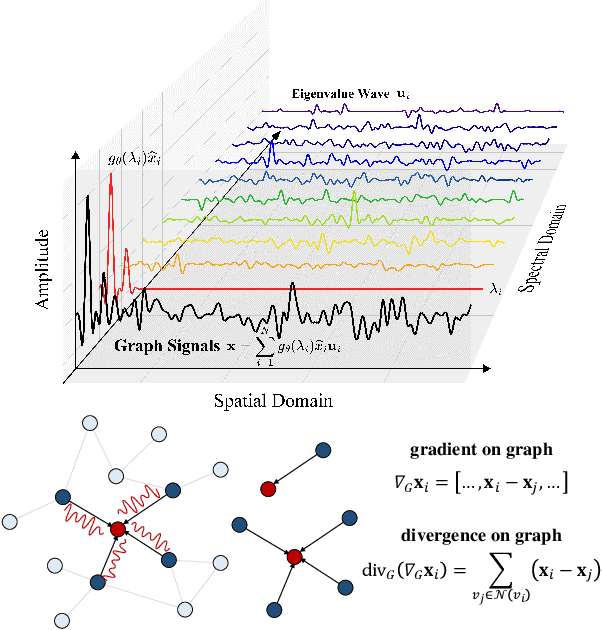

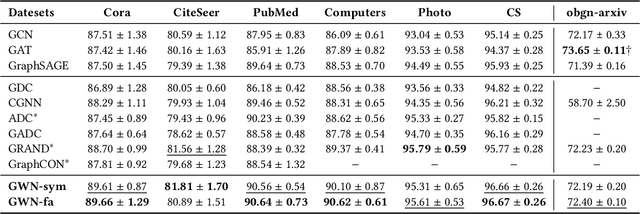

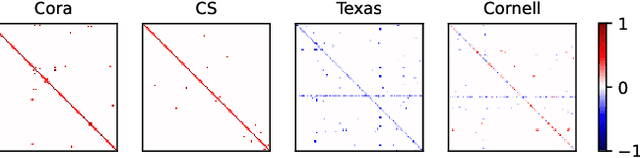

Abstract:Dynamics modeling has been introduced as a novel paradigm in message passing (MP) of graph neural networks (GNNs). Existing methods consider MP between nodes as a heat diffusion process, and leverage the heat equation to model the temporal evolution of nodes in the embedding space. However, the heat equation can hardly depict the wave nature of graph signals in graph signal processing. Besides, the heat equation is essentially a partial differential equation (PDE) involving a first partial derivative of time, whose numerical solution usually has low stability and leads to inefficient model training. In this paper, we would like to depict more wave details in MP, since graph signals are essentially wave signals that can be seen as a superposition of a series of waves in the form of eigenvectors. This motivates us to consider MP as a wave propagation process to capture the temporal evolution of wave signals in the space. Based on the wave equation in physics, we innovatively develop a graph wave equation to leverage the wave propagation on graphs. In detail, we demonstrate that the graph wave equation can be connected to traditional spectral GNNs, facilitating the design of graph wave networks (GWNs) based on various Laplacians and enhancing the performance of the spectral GNNs. Besides, the graph wave equation is a PDE involving a second partial derivative of time, which has stronger stability on graphs than the heat equation that involves a first partial derivative of time. Additionally, we theoretically prove that the numerical solution derived from the graph wave equation is constantly stable, which significantly enhances model efficiency while ensuring its performance. Extensive experiments show that GWNs achieve SOTA and efficient performance on benchmark datasets, and exhibit outstanding performance in addressing challenging graph problems, such as over-smoothing and heterophily.

* 15 pages, 8 figures, published to WWW 2025
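
To make the contrast between a first-order-in-time and a second-order-in-time update concrete, here is a minimal sketch of one explicit step of each on a small graph. It assumes the forms dx/dt = -Lx for heat diffusion and d²x/dt² = -Lx for wave propagation, a combinatorial Laplacian, and plain finite differences; the names `heat_step`, `wave_step`, and `PATH_LAP` are hypothetical, and the discretization actually used in the paper is not given in the abstract and may differ.

```python
def matvec(mat, vec):
    """Dense matrix-vector product."""
    return [sum(m * v for m, v in zip(row, vec)) for row in mat]


def heat_step(lap, x, dt):
    """Forward-Euler step of dx/dt = -L x (first derivative in time)."""
    lx = matvec(lap, x)
    return [xi - dt * li for xi, li in zip(x, lx)]


def wave_step(lap, x, x_prev, dt):
    """Central-difference step of d2x/dt2 = -L x (second derivative in time).

    Needs the two most recent states, x and x_prev.
    """
    lx = matvec(lap, x)
    return [2 * xi - pi - dt * dt * li for xi, pi, li in zip(x, x_prev, lx)]


# Hypothetical toy graph: Laplacian of the path 0 - 1 - 2.
PATH_LAP = [[1, -1, 0],
            [-1, 2, -1],
            [0, -1, 1]]
```

The heat update depends only on the current state, while the wave update also carries the previous state, which is what lets a signal oscillate instead of only smoothing out. This sketch does not reproduce the stability analysis claimed in the abstract.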

Mitigating Modality Bias in Multi-modal Entity Alignment from a Causal Perspective

Apr 29, 2025

Abstract:Multi-Modal Entity Alignment (MMEA) aims to retrieve equivalent entities from different Multi-Modal Knowledge Graphs (MMKGs), a critical information retrieval task. Existing studies have explored various fusion paradigms and consistency constraints to improve the alignment of equivalent entities, while overlooking that the visual modality may not always contribute positively. Empirically, entities with low-similarity images usually generate unsatisfactory performance, highlighting the limitation of overly relying on visual features. We believe the model can be biased toward the visual modality, leading to a shortcut image-matching task. To address this, we propose a counterfactual debiasing framework for MMEA, termed CDMEA, which investigates visual modality bias from a causal perspective. Our approach aims to leverage both visual and graph modalities to enhance MMEA while suppressing the direct causal effect of the visual modality on model predictions. By estimating the Total Effect (TE) of both modalities and excluding the Natural Direct Effect (NDE) of the visual modality, we ensure that the model predicts based on the Total Indirect Effect (TIE), effectively utilizing both modalities and reducing visual modality bias. Extensive experiments on 9 benchmark datasets show that CDMEA outperforms 14 state-of-the-art methods, especially in low-similarity, high-noise, and low-resource data scenarios.
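
The relationship between the three effects can be illustrated with a toy calculation. This sketch assumes the standard mediation-analysis identity TIE = TE - NDE applied per candidate entity, with both effects measured against a common baseline; the score values, the candidate ordering, and the helper names `total_indirect_effect` and `rank` are made up for illustration, and the abstract does not specify how CDMEA estimates each term.

```python
def total_indirect_effect(te_scores, nde_scores):
    """TIE = TE - NDE, computed per candidate entity."""
    return [te - nde for te, nde in zip(te_scores, nde_scores)]


def rank(scores):
    """Candidate indices sorted from highest to lowest score."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)


# Hypothetical alignment scores for three candidate entities.
# te: effect of the full model that sees both visual and graph modalities.
# nde: direct effect of the visual modality alone (the image-matching shortcut).
te = [0.9, 0.8, 0.3]
nde = [0.7, 0.2, 0.25]

tie = total_indirect_effect(te, nde)
biased_ranking = rank(te)      # candidate 0 wins, mostly on image similarity
debiased_ranking = rank(tie)   # candidate 1 wins once the shortcut is removed
```

In this made-up case, candidate 0 ranks first under the total effect largely because of its image score; subtracting the direct visual effect moves candidate 1 to the top.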

Inner Thinking Transformer: Leveraging Dynamic Depth Scaling to Foster Adaptive Internal Thinking

Feb 19, 2025

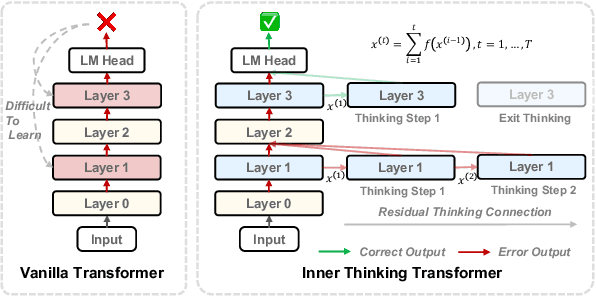

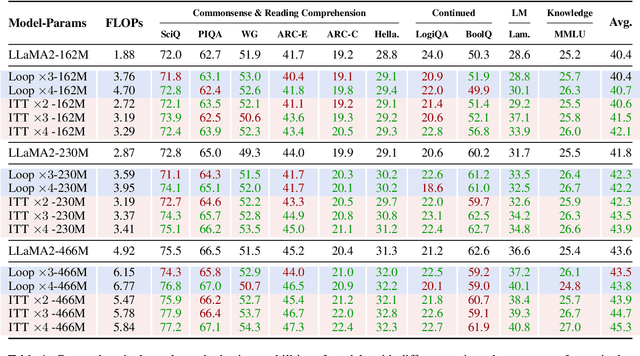

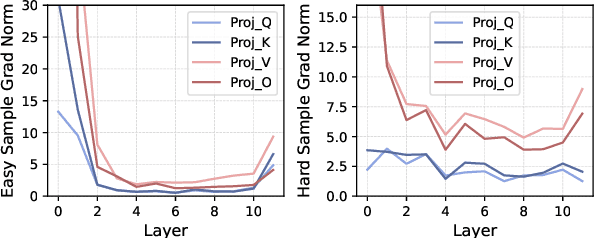

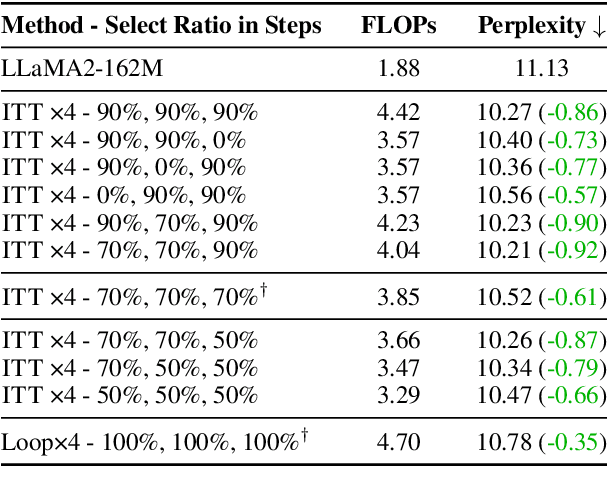

Abstract:Large language models (LLMs) face inherent performance bottlenecks under parameter constraints, particularly in processing critical tokens that demand complex reasoning. Empirical analysis reveals challenging tokens induce abrupt gradient spikes across layers, exposing architectural stress points in standard Transformers. Building on this insight, we propose Inner Thinking Transformer (ITT), which reimagines layer computations as implicit thinking steps. ITT dynamically allocates computation through Adaptive Token Routing, iteratively refines representations via Residual Thinking Connections, and distinguishes reasoning phases using Thinking Step Encoding. ITT enables deeper processing of critical tokens without parameter expansion. Evaluations across 162M-466M parameter models show ITT achieves 96.5% performance of a 466M Transformer using only 162M parameters, reduces training data by 43.2%, and outperforms Transformer/Loop variants in 11 benchmarks. By enabling elastic computation allocation during inference, ITT balances performance and efficiency through architecture-aware optimization of implicit thinking pathways.

FARM: Frequency-Aware Model for Cross-Domain Live-Streaming Recommendation

Feb 13, 2025

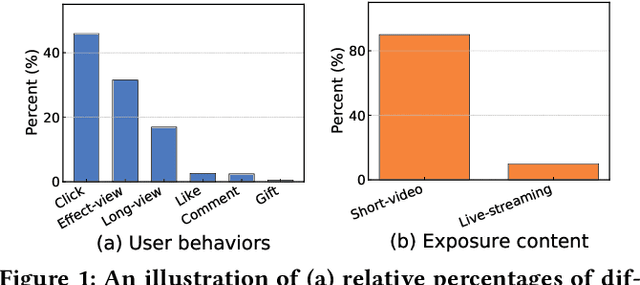

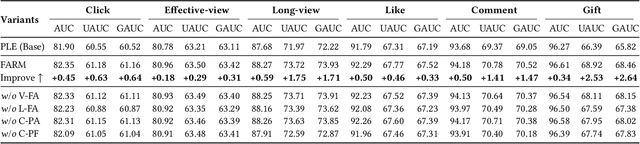

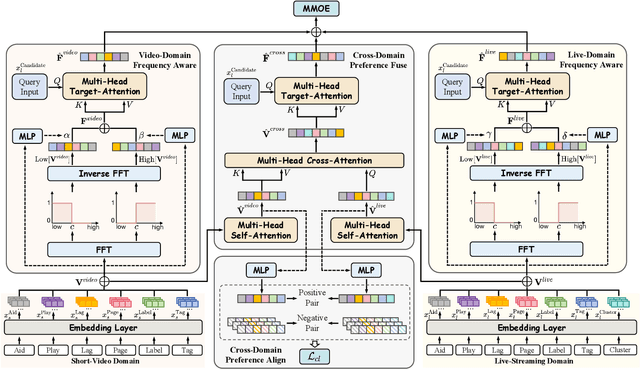

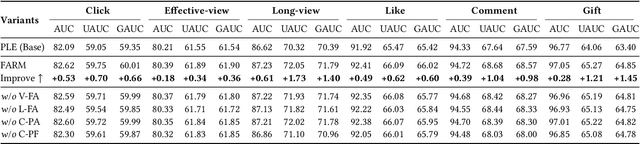

Abstract:Live-streaming services have attracted widespread popularity due to their real-time interactivity and entertainment value. Users can engage with live-streaming authors by participating in live chats, posting likes, or sending virtual gifts to convey their preferences and support. However, live-streaming services face a serious data-sparsity problem, which can be attributed to the following two points: (1) Users' valuable behaviors, e.g., likes, comments and gifts, are usually sparse and easily overlooked by the model, making it difficult to describe users' personalized preferences. (2) The main exposed content on our platform is short video, with exposure 9 times higher than that of live-streaming, so live-streaming content cannot fully model user preference. To this end, we propose a Frequency-Aware Model for Cross-Domain Live-Streaming Recommendation, termed FARM. Specifically, we first present the intra-domain frequency-aware module to enable our model to perceive users' sparse yet valuable behaviors, i.e., high-frequency information, supported by the Discrete Fourier Transform (DFT). To transfer user preference across the short-video and live-streaming domains, we propose a novel preference align-before-fuse strategy, which consists of two parts: the cross-domain preference align module to align user preference in both domains with contrastive learning, and the cross-domain preference fuse module to further fuse user preference in both domains using a series of tailor-designed attention mechanisms. Extensive offline experiments and online A/B testing on Kuaishou live-streaming services demonstrate the effectiveness and superiority of FARM. Our FARM has been deployed in online live-streaming services and currently serves hundreds of millions of users on Kuaishou.
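
To show what treating sparse behaviors as high-frequency information can look like, the following minimal sketch isolates the high-frequency part of a behavior sequence with a plain DFT. The cutoff rule, the toy sequences, and the function names `dft`, `inverse_dft`, and `high_frequency_part` are assumptions for illustration; the actual frequency-aware module in FARM is not described in enough detail in the abstract to reproduce.

```python
import cmath


def dft(seq):
    """Discrete Fourier Transform of a real or complex sequence."""
    n = len(seq)
    return [sum(x * cmath.exp(-2j * cmath.pi * k * t / n)
                for t, x in enumerate(seq))
            for k in range(n)]


def inverse_dft(spec):
    """Inverse Discrete Fourier Transform."""
    n = len(spec)
    return [sum(c * cmath.exp(2j * cmath.pi * k * t / n)
                for k, c in enumerate(spec)) / n
            for t in range(n)]


def high_frequency_part(seq, cutoff):
    """Zero out frequency bins below `cutoff` and transform back.

    Bin k and bin n - k represent the same frequency for real input,
    so both are compared against the cutoff.
    """
    n = len(seq)
    spec = dft(seq)
    kept = [c if min(k, n - k) >= cutoff else 0 for k, c in enumerate(spec)]
    return [value.real for value in inverse_dft(kept)]
```

With `cutoff=1` only the constant (mean) component is removed: a user who acts the same way at every step yields an all-zero output, while a single isolated action such as `[0, 0, 1, 0]` survives as a clear spike.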

Exploring Preference-Guided Diffusion Model for Cross-Domain Recommendation

Jan 20, 2025

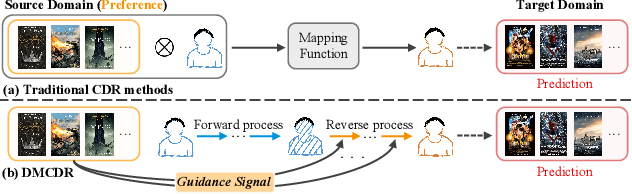

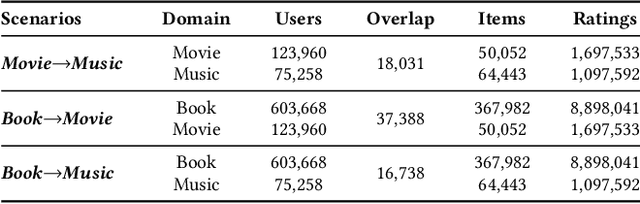

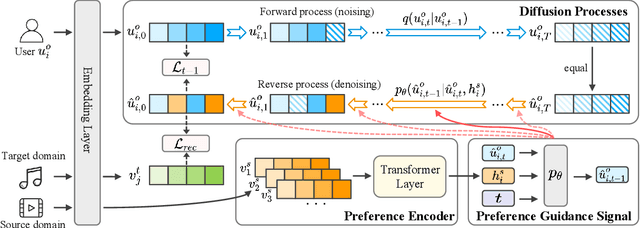

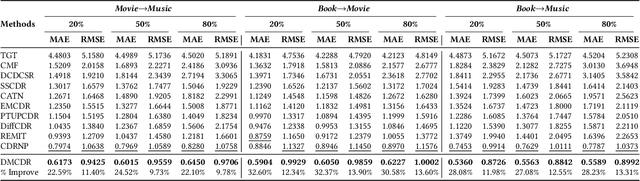

Abstract:Cross-domain recommendation (CDR) has proven to be a promising way to alleviate the cold-start issue, in which the most critical problem is how to draw an informative user representation in the target domain via the transfer of user preference existing in the source domain. Prior efforts mostly follow the embedding-and-mapping paradigm, which first integrates the preference into the user representation in the source domain, and then applies a mapping function to this representation to obtain one in the target domain. However, they focus on mapping features across domains, neglecting to explicitly model the preference integration process, which may lead to learning coarse user representations. Diffusion models (DMs), which contribute to more accurate user/item representations due to their explicit information injection capability, have achieved promising performance in recommendation systems. Nevertheless, these DM-based methods cannot directly account for valuable user preference in other domains, leading to challenges in adapting to the transfer of preference for cold-start users. Consequently, the feasibility of DMs for CDR remains underexplored. To this end, we explore utilizing the explicit information injection capability of DMs for user preference integration and propose a Preference-Guided Diffusion Model for CDR to cold-start users, termed DMCDR. Specifically, we leverage a preference encoder to establish the preference guidance signal with the user's interaction history in the source domain. Then, we explicitly inject the preference guidance signal into the user representation step by step to guide the reverse process, and ultimately generate the personalized user representation in the target domain, thus achieving the transfer of user preference across domains. Furthermore, we comprehensively explore the impact of six DM-based variants on CDR.

Mixture of Hidden-Dimensions Transformer

Dec 10, 2024

Abstract:Transformer models encounter challenges in scaling hidden dimensions efficiently, as uniformly increasing them inflates computational and memory costs while failing to emphasize the most relevant features for each token. For further understanding, we study hidden dimension sparsity and observe that trained Transformers utilize only a small fraction of token dimensions, revealing an "activation flow" pattern. Notably, there are shared sub-dimensions with sustained activation across multiple consecutive tokens and specialized sub-dimensions uniquely activated for each token. To better model token-relevant sub-dimensions, we propose MoHD (Mixture of Hidden Dimensions), a sparse conditional activation architecture. Particularly, MoHD employs shared sub-dimensions for common token features and a routing mechanism to dynamically activate specialized sub-dimensions. To mitigate potential information loss from sparsity, we design activation scaling and group fusion mechanisms to preserve activation flow. In this way, MoHD expands hidden dimensions with negligible increases in computation or parameters, enabling efficient training and inference while maintaining performance. Evaluations across 10 NLP tasks show that MoHD surpasses Vanilla Transformers in parameter efficiency and task performance. It achieves 1.7% higher performance with 50% fewer activation parameters and 3.7% higher performance with a 3x parameter expansion at constant activation cost. MoHD offers a new perspective for scaling the model, showcasing the potential of hidden dimension sparsity to boost efficiency.

Revealing the Challenge of Detecting Character Knowledge Errors in LLM Role-Playing

Sep 18, 2024

Abstract:Large language model (LLM) role-playing has gained widespread attention, where authentic character knowledge is crucial for constructing realistic LLM role-playing agents. However, existing works usually overlook the exploration of LLMs' ability to detect characters' known knowledge errors (KKE) and unknown knowledge errors (UKE) while playing roles, which would lead to low-quality automatic construction of trainable character corpora. In this paper, we propose a probing dataset to evaluate LLMs' ability to detect errors in KKE and UKE. The results indicate that even the latest LLMs struggle to effectively detect these two types of errors, especially when it comes to familiar knowledge. We experiment with various reasoning strategies and propose an agent-based reasoning method, Self-Recollection and Self-Doubt (S2RD), to further explore the potential for improving error detection capabilities. Experiments show that our method effectively improves the LLMs' ability to detect erroneous character knowledge, but it remains an issue that requires ongoing attention.

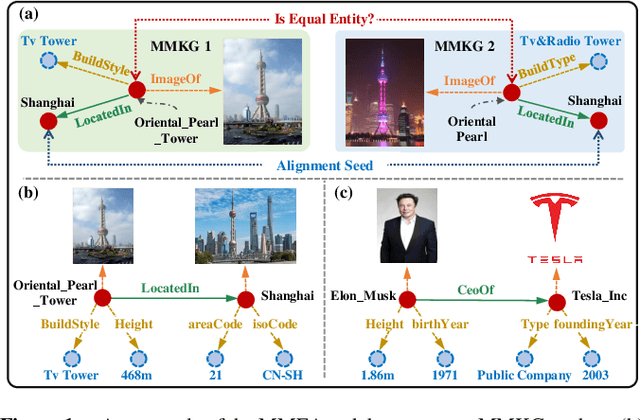

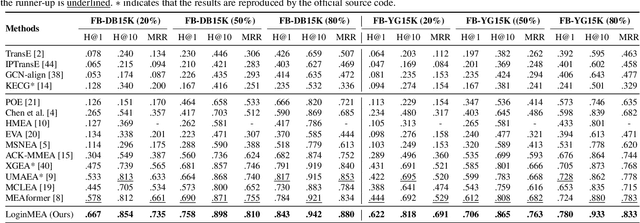

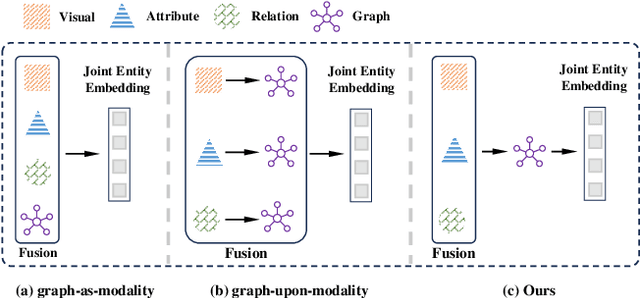

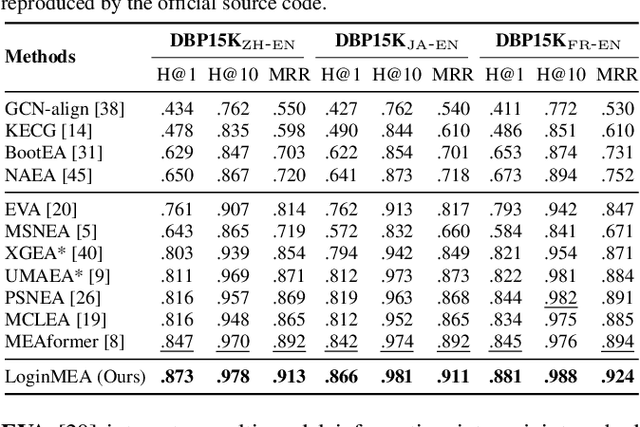

LoginMEA: Local-to-Global Interaction Network for Multi-modal Entity Alignment

Jul 29, 2024

Abstract:Multi-modal entity alignment (MMEA) aims to identify equivalent entities between two multi-modal knowledge graphs (MMKGs), whose entities can be associated with relational triples and related images. Most previous studies treat the graph structure as a special modality, and fuse different modality information with separate uni-modal encoders, neglecting valuable relational associations in modalities. Other studies refine each uni-modal information with graph structures, but may introduce unnecessary relations in specific modalities. To this end, we propose a novel local-to-global interaction network for MMEA, termed LoginMEA. Particularly, we first fuse local multi-modal interactions to generate holistic entity semantics and then refine them with global relational interactions of entity neighbors. In this design, the uni-modal information is fused adaptively, and can be refined with relations accordingly. To enrich local interactions of multi-modal entity information, we devise modality weights and low-rank interactive fusion, allowing diverse impacts and element-level interactions among modalities. To capture global interactions of graph structures, we adopt relation reflection graph attention networks, which fully capture relational associations between entities. Extensive experiments demonstrate superior results of our method over 5 cross-KG or bilingual benchmark datasets, indicating the effectiveness of capturing local and global interactions.

IBMEA: Exploring Variational Information Bottleneck for Multi-modal Entity Alignment

Jul 27, 2024

Abstract:Multi-modal entity alignment (MMEA) aims to identify equivalent entities between multi-modal knowledge graphs (MMKGs), where the entities can be associated with related images. Most existing studies integrate multi-modal information heavily relying on the automatically-learned fusion module, rarely suppressing the redundant information for MMEA explicitly. To this end, we explore variational information bottleneck for multi-modal entity alignment (IBMEA), which emphasizes the alignment-relevant information and suppresses the alignment-irrelevant information in generating entity representations. Specifically, we devise multi-modal variational encoders to generate modal-specific entity representations as probability distributions. Then, we propose four modal-specific information bottleneck regularizers, limiting the misleading clues in refining modal-specific entity representations. Finally, we propose a modal-hybrid information contrastive regularizer to integrate all the refined modal-specific representations, enhancing the entity similarity between MMKGs to achieve MMEA. We conduct extensive experiments on two cross-KG and three bilingual MMEA datasets. Experimental results demonstrate that our model consistently outperforms previous state-of-the-art methods, and also shows promising and robust performance in low-resource and high-noise data scenarios.
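
An information bottleneck regularizer on a representation modeled as a probability distribution is often implemented as a KL divergence to a fixed prior. The sketch below shows that term under two assumptions that are not stated in the abstract: each modal-specific representation is a diagonal Gaussian parameterized by `mean` and `log_var`, and the prior is a standard normal. The function name `kl_to_standard_normal` is hypothetical, and the regularizers in IBMEA may be defined differently.

```python
import math


def kl_to_standard_normal(mean, log_var):
    """KL( N(mean, diag(exp(log_var))) || N(0, I) ) for one representation.

    Closed form: 0.5 * sum(mean^2 + var - 1 - log(var)).
    """
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv
                     for m, lv in zip(mean, log_var))
```

The term is zero exactly when the encoder outputs the prior itself (`mean` all zeros, `log_var` all zeros) and grows as the representation encodes more about its input, which is why weighting it in the loss limits how much modality-specific detail passes through.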
