Yun Fu

Seeing Through Words: Controlling Visual Retrieval Quality with Language Models

Feb 24, 2026

Abstract: Text-to-image retrieval is a fundamental task in vision-language learning, yet in real-world scenarios it is often challenged by short and underspecified user queries. Such queries are typically only one or two words long, rendering them semantically ambiguous, prone to collisions across diverse visual interpretations, and lacking explicit control over the quality of retrieved images. To address these issues, we propose a new paradigm of quality-controllable retrieval, which enriches short queries with contextual details while incorporating explicit notions of image quality. Our key idea is to leverage a generative language model as a query completion function, extending underspecified queries into descriptive forms that capture fine-grained visual attributes such as pose, scene, and aesthetics. We introduce a general framework that conditions query completion on discretized quality levels, derived from relevance and aesthetic scoring models, so that query enrichment is not only semantically meaningful but also quality-aware. The resulting system provides three key advantages: 1) flexibility: it is compatible with any pretrained vision-language model (VLM) without modification; 2) transparency: enriched queries are explicitly interpretable by users; and 3) controllability: retrieval results can be steered toward user-preferred quality levels. Extensive experiments demonstrate that our proposed approach significantly improves retrieval results and provides effective quality control, bridging the gap between the expressive capacity of modern VLMs and the underspecified nature of short user queries. Our code is available at https://github.com/Jianglin954/QCQC.
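
The abstract describes conditioning query completion on discretized quality levels. As a rough illustration only, the sketch below bins continuous scores into levels and prepends them to a completion prompt. The quantile binning, the control-tag format, and all function names are assumptions made for this example; they are not taken from the paper or its repository.

```python
from bisect import bisect_right


def quantile_edges(scores, num_levels):
    """Return num_levels - 1 cut points that split the sorted scores into roughly equal bins."""
    ordered = sorted(scores)
    n = len(ordered)
    return [ordered[(i * n) // num_levels] for i in range(1, num_levels)]


def to_level(score, edges):
    """Map a continuous score to a discrete level in 0..len(edges)."""
    return bisect_right(edges, score)


def build_completion_prompt(query, relevance_level, aesthetic_level):
    """Prefix the short query with hypothetical quality-control tags."""
    return (
        f"<relevance={relevance_level}> <aesthetic={aesthetic_level}> "
        f"Complete the image search query: {query}"
    )


# Made-up aesthetic scores standing in for the output of a scoring model.
aesthetic_scores = [2.1, 3.4, 4.8, 5.0, 5.9, 6.3, 7.2, 8.1, 8.8]
edges = quantile_edges(aesthetic_scores, num_levels=3)

low = to_level(2.1, edges)
high = to_level(8.8, edges)
prompt = build_completion_prompt("dog", relevance_level=2, aesthetic_level=high)
print(edges, low, high)
print(prompt)
```

A language model conditioned on such a prompt would then produce the enriched query, which any pretrained retrieval model can consume unchanged.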

Fine-T2I: An Open, Large-Scale, and Diverse Dataset for High-Quality T2I Fine-Tuning

Feb 10, 2026

Abstract: High-quality and open datasets remain a major bottleneck for text-to-image (T2I) fine-tuning. Despite rapid progress in model architectures and training pipelines, most publicly available fine-tuning datasets suffer from low resolution, poor text-image alignment, or limited diversity, resulting in a clear performance gap between open research models and enterprise-grade models. In this work, we present Fine-T2I, a large-scale, high-quality, and fully open dataset for T2I fine-tuning. Fine-T2I spans 10 task combinations, 32 prompt categories, 11 visual styles, and 5 prompt templates, and combines synthetic images generated by strong modern models with carefully curated real images from professional photographers. All samples are rigorously filtered for text-image alignment, visual fidelity, and prompt quality, with over 95% of initial candidates removed. The final dataset contains over 6 million text-image pairs, around 2 TB on disk, approaching the scale of pretraining datasets while maintaining fine-tuning-level quality. Across a diverse set of pretrained diffusion and autoregressive models, fine-tuning on Fine-T2I consistently improves both generation quality and instruction adherence, as validated by human evaluation, visual comparison, and automatic metrics. We release Fine-T2I under an open license to help close the data gap in T2I fine-tuning in the open community.

IIR-VLM: In-Context Instance-level Recognition for Large Vision-Language Models

Jan 20, 2026

Abstract: Instance-level recognition (ILR) concerns distinguishing individual instances from one another, with person re-identification as a prominent example. Despite the impressive visual perception capabilities of modern VLMs, we find their performance on ILR unsatisfactory, often dramatically underperforming domain-specific ILR models. This limitation hinders many practical applications of VLMs, e.g., where recognizing familiar people and objects is crucial for effective visual understanding. Existing solutions typically learn to recognize instances one at a time using instance-specific datasets, which not only incur substantial data collection and training costs but also struggle with fine-grained discrimination. In this work, we propose IIR-VLM, a VLM enhanced for In-context Instance-level Recognition. We integrate pre-trained ILR expert models as auxiliary visual encoders to provide specialized features for learning diverse instances, which enables VLMs to learn new instances in-context in a one-shot manner. Further, IIR-VLM leverages this knowledge for instance-aware visual understanding. We validate IIR-VLM's efficacy on existing instance personalization benchmarks. Finally, we demonstrate its superior ILR performance on a challenging new benchmark, which assesses ILR capabilities across varying difficulty and diverse categories, with person, face, pet and general objects as the instances at task.

Diffusion-DRF: Differentiable Reward Flow for Video Diffusion Fine-Tuning

Jan 07, 2026

Abstract: Direct Preference Optimization (DPO) has recently improved Text-to-Video (T2V) generation by enhancing visual fidelity and text alignment. However, current methods rely on non-differentiable preference signals from human annotations or learned reward models. This reliance makes training label-intensive, bias-prone, and easy-to-game, which often triggers reward hacking and unstable training. We propose Diffusion-DRF, a differentiable reward flow for fine-tuning video diffusion models using a frozen, off-the-shelf Vision-Language Model (VLM) as a training-free critic. Diffusion-DRF directly backpropagates VLM feedback through the diffusion denoising chain, converting logit-level responses into token-aware gradients for optimization. We propose an automated, aspect-structured prompting pipeline to obtain reliable multi-dimensional VLM feedback, while gradient checkpointing enables efficient updates through the final denoising steps. Diffusion-DRF improves video quality and semantic alignment while mitigating reward hacking and collapse -- without additional reward models or preference datasets. It is model-agnostic and readily generalizes to other diffusion-based generative tasks.

Rethinking Fine-Tuning: Unlocking Hidden Capabilities in Vision-Language Models

Dec 28, 2025

Abstract: Explorations in fine-tuning Vision-Language Models (VLMs), such as Low-Rank Adaptation (LoRA) from Parameter Efficient Fine-Tuning (PEFT), have made impressive progress. However, most approaches rely on explicit weight updates, overlooking the extensive representational structures already encoded in pre-trained models that remain underutilized. Recent works have demonstrated that Mask Fine-Tuning (MFT) can be a powerful and efficient post-training paradigm for language models. Instead of updating weights, MFT assigns learnable gating scores to each weight, allowing the model to reorganize its internal subnetworks for downstream task adaptation. In this paper, we rethink fine-tuning for VLMs from a structural reparameterization perspective grounded in MFT. We apply MFT to the language and projector components of VLMs with different language backbones and compare against strong PEFT baselines. Experiments show that MFT consistently surpasses LoRA variants and even full fine-tuning, achieving high performance without altering the frozen backbone. Our findings reveal that effective adaptation can emerge not only from updating weights but also from reestablishing connections among the model's existing knowledge. Code available at: https://github.com/Ming-K9/MFT-VLM
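
To make the idea of per-weight gating scores concrete, here is a minimal forward-pass sketch of a masked linear layer in plain Python. The hard threshold on the scores and the function names are assumptions for illustration; the abstract does not specify how scores become gates, and training the scores would additionally need a gradient estimator that is not shown here.

```python
def binary_mask(scores, threshold=0.0):
    """Turn per-weight gating scores into a 0/1 mask (assumed hard threshold)."""
    return [[1.0 if s > threshold else 0.0 for s in row] for row in scores]


def masked_linear(x, weights, scores, threshold=0.0):
    """Compute y = (W * mask) x, leaving the frozen weights W untouched."""
    mask = binary_mask(scores, threshold)
    return [
        sum(w * m * xi for w, m, xi in zip(w_row, m_row, x))
        for w_row, m_row in zip(weights, mask)
    ]


# Frozen pretrained weights (2 outputs, 3 inputs) and toy gating scores.
frozen_weights = [[0.5, -1.0, 2.0], [1.5, 0.25, -0.5]]
scores = [[1.0, -1.0, 1.0], [-1.0, 1.0, 1.0]]
x = [1.0, 2.0, 3.0]

y = masked_linear(x, frozen_weights, scores)
print(y)
```

Only `scores` would be optimized in this picture, so adaptation selects a subnetwork of the existing weights rather than changing their values.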

SimpleCall: A Lightweight Image Restoration Agent in Label-Free Environments with MLLM Perceptual Feedback

Dec 21, 2025

Abstract: Complex image restoration aims to recover high-quality images from inputs affected by multiple degradations such as blur, noise, rain, and compression artifacts. Recent restoration agents, powered by vision-language models and large language models, offer promising restoration capabilities but suffer from significant efficiency bottlenecks due to reflection, rollback, and iterative tool searching. Moreover, their performance heavily depends on degradation recognition models that require extensive annotations for training, limiting their applicability in label-free environments. To address these limitations, we propose a policy optimization-based restoration framework that learns a lightweight agent to determine tool-calling sequences. The agent operates in a sequential decision process, selecting the most appropriate restoration operation at each step to maximize final image quality. To enable training within label-free environments, we introduce a novel reward mechanism driven by multimodal large language models, which act as human-aligned evaluators and provide perceptual feedback for policy improvement. Once trained, our agent executes deterministic restoration plans without redundant tool invocations, significantly accelerating inference while maintaining high restoration quality. Extensive experiments show that despite using no supervision, our method matches SOTA performance on full-reference metrics and surpasses existing approaches on no-reference metrics across diverse degradation scenarios.

LinkedOut: Linking World Knowledge Representation Out of Video LLM for Next-Generation Video Recommendation

Dec 18, 2025

Abstract: Video Large Language Models (VLLMs) unlock world-knowledge-aware video understanding through pretraining on internet-scale data and have already shown promise on tasks such as movie analysis and video question answering. However, deploying VLLMs for downstream tasks such as video recommendation remains challenging, since real systems require multi-video inputs, lightweight backbones, low-latency sequential inference, and rapid response. In practice, (1) decode-only generation yields high latency for sequential inference, (2) typical interfaces do not support multi-video inputs, and (3) constraining outputs to language discards fine-grained visual details that matter for downstream vision tasks. We argue that these limitations stem from the absence of a representation that preserves pixel-level detail while leveraging world knowledge. We present LinkedOut, a representation that extracts VLLM world knowledge directly from video to enable fast inference, supports multi-video histories, and removes the language bottleneck. LinkedOut extracts semantically grounded, knowledge-aware tokens from raw frames using VLLMs, guided by promptable queries and optional auxiliary modalities. We introduce a cross-layer knowledge fusion MoE that selects the appropriate level of abstraction from the rich VLLM features, enabling personalized, interpretable, and low-latency recommendation. To our knowledge, LinkedOut is the first VLLM-based video recommendation method that operates on raw frames without handcrafted labels, achieving state-of-the-art results on standard benchmarks. Interpretability studies and ablations confirm the benefits of layer diversity and layer-wise fusion, pointing to a practical path that fully leverages VLLM world-knowledge priors and visual reasoning for downstream vision tasks such as recommendation.

MTS-DMAE: Dual-Masked Autoencoder for Unsupervised Multivariate Time Series Representation Learning

Sep 19, 2025

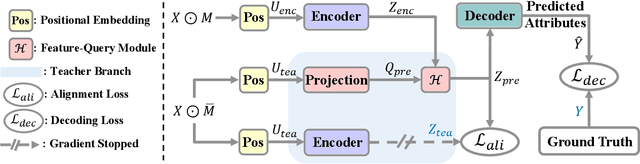

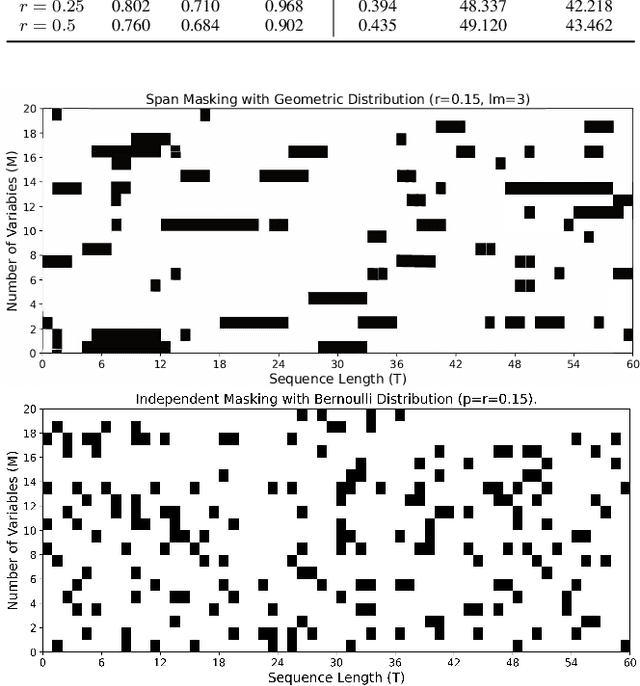

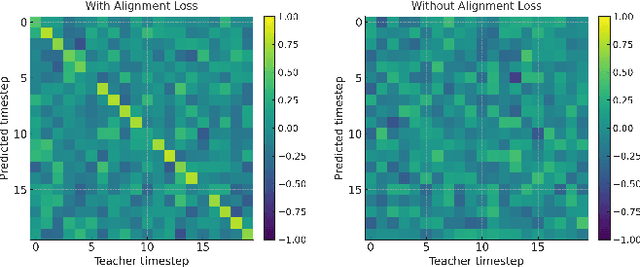

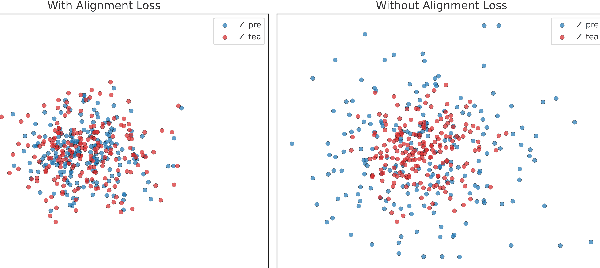

Abstract: Unsupervised multivariate time series (MTS) representation learning aims to extract compact and informative representations from raw sequences without relying on labels, enabling efficient transfer to diverse downstream tasks. In this paper, we propose Dual-Masked Autoencoder (DMAE), a novel masked time-series modeling framework for unsupervised MTS representation learning. DMAE formulates two complementary pretext tasks: (1) reconstructing masked values based on visible attributes, and (2) estimating latent representations of masked features, guided by a teacher encoder. To further improve representation quality, we introduce a feature-level alignment constraint that encourages the predicted latent representations to align with the teacher's outputs. By jointly optimizing these objectives, DMAE learns temporally coherent and semantically rich representations. Comprehensive evaluations across classification, regression, and forecasting tasks demonstrate that our approach achieves consistent and superior performance over competitive baselines.
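
The joint objective described above can be pictured as a value-space reconstruction term plus a latent-space term, both evaluated only at masked positions. The sketch below is a deliberately simplified assumption: each time step carries one scalar value and one scalar latent, both terms are mean squared errors, and the weighting `align_weight` is hypothetical. The paper's actual losses, encoders, and masking strategy are not given in the abstract.

```python
import random


def make_mask(length, mask_ratio, rng):
    """Mark a random subset of positions as masked (True)."""
    num_masked = int(round(length * mask_ratio))
    masked = set(rng.sample(range(length), num_masked))
    return [i in masked for i in range(length)]


def masked_mse(pred, target, mask):
    """Mean squared error over masked positions only."""
    errors = [(p - t) ** 2 for p, t, m in zip(pred, target, mask) if m]
    return sum(errors) / len(errors) if errors else 0.0


def dual_objective(recon, values, pred_latent, teacher_latent, mask, align_weight=1.0):
    """Value reconstruction loss plus weighted latent alignment with the teacher."""
    value_loss = masked_mse(recon, values, mask)
    latent_loss = masked_mse(pred_latent, teacher_latent, mask)
    return value_loss + align_weight * latent_loss


# Toy example with a fixed mask over four time steps.
mask = [False, True, False, True]
loss = dual_objective(
    recon=[1.0, 2.0, 3.0, 4.0],
    values=[1.0, 0.0, 3.0, 2.0],
    pred_latent=[0.0, 1.0, 0.0, 1.0],
    teacher_latent=[0.0, 0.0, 0.0, 0.0],
    mask=mask,
    align_weight=0.5,
)
random_mask = make_mask(10, 0.3, random.Random(0))
print(loss, sum(random_mask))
```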

AdaSports-Traj: Role- and Domain-Aware Adaptation for Multi-Agent Trajectory Modeling in Sports

Sep 19, 2025

Abstract: Trajectory prediction in multi-agent sports scenarios is inherently challenging due to the structural heterogeneity across agent roles (e.g., players vs. ball) and dynamic distribution gaps across different sports domains. Existing unified frameworks often fail to capture these structured distributional shifts, resulting in suboptimal generalization across roles and domains. We propose AdaSports-Traj, an adaptive trajectory modeling framework that explicitly addresses both intra-domain and inter-domain distribution discrepancies in sports. At its core, AdaSports-Traj incorporates a Role- and Domain-Aware Adapter to conditionally adjust latent representations based on agent identity and domain context. Additionally, we introduce a Hierarchical Contrastive Learning objective, which separately supervises role-sensitive and domain-aware representations to encourage disentangled latent structures without introducing optimization conflict. Experiments on three diverse sports datasets, Basketball-U, Football-U, and Soccer-U, demonstrate the effectiveness of our adaptive design, achieving strong performance in both unified and cross-domain trajectory prediction settings.

Out-of-Sight Trajectories: Tracking, Fusion, and Prediction

Sep 18, 2025

Abstract: Trajectory prediction is a critical task in computer vision and autonomous systems, playing a key role in autonomous driving, robotics, surveillance, and virtual reality. Existing methods often rely on complete and noise-free observational data, overlooking the challenges associated with out-of-sight objects and the inherent noise in sensor data caused by limited camera coverage, obstructions, and the absence of ground truth for denoised trajectories. These limitations pose safety risks and hinder reliable prediction in real-world scenarios. In this extended work, we present advancements in Out-of-Sight Trajectory (OST), a novel task that predicts the noise-free visual trajectories of out-of-sight objects using noisy sensor data. Building on our previous research, we broaden the scope of Out-of-Sight Trajectory Prediction (OOSTraj) to include pedestrians and vehicles, extending its applicability to autonomous driving, robotics, surveillance, and virtual reality. Our enhanced Vision-Positioning Denoising Module leverages camera calibration to establish a vision-positioning mapping, addressing the lack of visual references, while effectively denoising noisy sensor data in an unsupervised manner. Through extensive evaluations on the Vi-Fi and JRDB datasets, our approach achieves state-of-the-art performance in both trajectory denoising and prediction, significantly surpassing previous baselines. Additionally, we introduce comparisons with traditional denoising methods, such as Kalman filtering, and adapt recent trajectory prediction models to our task, providing a comprehensive benchmark. This work represents the first initiative to integrate vision-positioning projection for denoising noisy sensor trajectories of out-of-sight agents, paving the way for future advances. The code and preprocessed datasets are available at github.com/Hai-chao-Zhang/OST
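
For readers unfamiliar with the Kalman filtering baseline mentioned above, the following is a minimal one-dimensional constant-velocity Kalman filter in plain Python. It is a generic textbook sketch, not the benchmark configuration from the paper: the motion model, the diagonal process noise, and the variance values `process_var` and `meas_var` are all assumptions chosen for illustration.

```python
def kalman_denoise_1d(measurements, dt=1.0, process_var=1e-2, meas_var=1.0):
    """Smooth noisy 1D positions with a constant-velocity Kalman filter."""
    if not measurements:
        return []
    # State: position p and velocity v; covariance P stored as four scalars.
    p, v = measurements[0], 0.0
    p00, p01, p10, p11 = 1.0, 0.0, 0.0, 1.0
    filtered = [p]
    for z in measurements[1:]:
        # Predict: x = F x, P = F P F^T + Q with F = [[1, dt], [0, 1]].
        p = p + dt * v
        n00 = p00 + dt * (p10 + p01) + dt * dt * p11 + process_var
        n01 = p01 + dt * p11
        n10 = p10 + dt * p11
        n11 = p11 + process_var
        p00, p01, p10, p11 = n00, n01, n10, n11
        # Update with the position measurement z (H = [1, 0]).
        innovation_var = p00 + meas_var
        k0, k1 = p00 / innovation_var, p10 / innovation_var
        residual = z - p
        p += k0 * residual
        v += k1 * residual
        p00, p01, p10, p11 = (
            (1 - k0) * p00,
            (1 - k0) * p01,
            p10 - k1 * p00,
            p11 - k1 * p01,
        )
        filtered.append(p)
    return filtered


steady = kalman_denoise_1d([5.0] * 6)
spike = kalman_denoise_1d([0.0, 0.0, 10.0])
print(steady)
print(spike)
```

A 2D trajectory can be handled by running the filter independently on each coordinate, which is a common simplification when the axes are assumed uncorrelated.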
