Yu Hu

Beyond Endpoints: Path-Centric Reasoning for Vectorized Off-Road Network Extraction

Dec 12, 2025

Abstract:Deep learning has advanced vectorized road extraction in urban settings, yet off-road environments remain underexplored and challenging. A significant domain gap causes advanced models to fail in wild terrains due to two key issues: lack of large-scale vectorized datasets and structural weakness in prevailing methods. Models such as SAM-Road employ a node-centric paradigm that reasons at sparse endpoints, making them fragile to occlusions and ambiguous junctions in off-road scenes, leading to topological errors. This work addresses these limitations in two complementary ways. First, we release WildRoad, a global off-road road network dataset constructed efficiently with a dedicated interactive annotation tool tailored for road-network labeling. Second, we introduce MaGRoad (Mask-aware Geodesic Road network extractor), a path-centric framework that aggregates multi-scale visual evidence along candidate paths to infer connectivity robustly. Extensive experiments show that MaGRoad achieves state-of-the-art performance on our challenging WildRoad benchmark while generalizing well to urban datasets. A streamlined pipeline also yields roughly 2.5x faster inference, improving practical applicability. Together, the dataset and path-centric paradigm provide a stronger foundation for mapping roads in the wild. We release both the dataset and code at https://github.com/xiaofei-guan/MaGRoad.

Mastering Diverse, Unknown, and Cluttered Tracks for Robust Vision-Based Drone Racing

Dec 11, 2025

Abstract:Most reinforcement learning (RL)-based methods for drone racing target fixed, obstacle-free tracks, leaving the generalization to unknown, cluttered environments largely unaddressed. This challenge stems from the need to balance racing speed and collision avoidance, from limited feasible space that traps policy exploration in local optima during training, and from perceptual ambiguity between gates and obstacles in depth maps, especially when gate positions are only coarsely specified. To overcome these issues, we propose a two-phase learning framework: an initial soft-collision training phase that preserves policy exploration for high-speed flight, followed by a hard-collision refinement phase that enforces robust obstacle avoidance. An adaptive, noise-augmented curriculum with an asymmetric actor-critic architecture gradually shifts the policy's reliance from privileged gate-state information to depth-based visual input. We further impose Lipschitz constraints and integrate a track-primitive generator to enhance motion stability and cross-environment generalization. We evaluate our framework through extensive simulation and ablation studies, and validate it in real-world experiments on a computationally constrained quadrotor. The system achieves agile flight while remaining robust to gate-position errors, yielding a generalizable drone racing framework that can operate in diverse, partially unknown, and cluttered environments. https://yufengsjtu.github.io/MasterRacing.github.io/

UNIV: Unified Foundation Model for Infrared and Visible Modalities

Sep 19, 2025

Abstract:The demand for joint RGB-visible and infrared perception is growing rapidly, particularly to achieve robust performance under diverse weather conditions. Although pre-trained models for RGB-visible and infrared data excel in their respective domains, they often underperform in multimodal scenarios, such as autonomous vehicles equipped with both sensors. To address this challenge, we propose a biologically inspired UNified foundation model for Infrared and Visible modalities (UNIV), featuring two key innovations. First, we introduce Patch-wise Cross-modality Contrastive Learning (PCCL), an attention-guided distillation framework that mimics retinal horizontal cells' lateral inhibition, which enables effective cross-modal feature alignment while remaining compatible with any transformer-based architecture. Second, our dual-knowledge preservation mechanism emulates the retina's bipolar cell signal routing by combining LoRA adapters (2% added parameters) with synchronous distillation to prevent catastrophic forgetting, thereby replicating the retina's photopic (cone-driven) and scotopic (rod-driven) functionality. To support cross-modal learning, we introduce the MVIP dataset, the most comprehensive visible-infrared benchmark to date. It contains 98,992 precisely aligned image pairs spanning diverse scenarios. Extensive experiments demonstrate UNIV's superior performance on infrared tasks (+1.7 mIoU in semantic segmentation and +0.7 mAP in object detection) while maintaining 99%+ of the baseline performance on visible RGB tasks. Our code is available at https://github.com/fangyuanmao/UNIV.

ROD: RGB-Only Fast and Efficient Off-road Freespace Detection

Aug 12, 2025

Abstract:Off-road freespace detection is more challenging than on-road scenarios because of the blurred boundaries of traversable areas. Previous state-of-the-art (SOTA) methods employ multi-modal fusion of RGB images and LiDAR data. However, because calculating surface normal maps from LiDAR data significantly increases inference time, multi-modal methods are not suitable for real-time applications, particularly in real-world scenarios that require a higher FPS than slow navigation does. This paper presents a novel RGB-only approach for off-road freespace detection, named ROD, eliminating the reliance on LiDAR data and its computational demands. Specifically, we utilize a pre-trained Vision Transformer (ViT) to extract rich features from RGB images. We pair it with a lightweight yet efficient decoder; together they improve both precision and inference speed. ROD establishes a new SOTA on the ORFD and RELLIS-3D datasets and reaches an inference speed of 50 FPS, significantly outperforming prior models.

EGGCodec: A Robust Neural Encodec Framework for EGG Reconstruction and F0 Extraction

Aug 12, 2025

Abstract:This letter introduces EGGCodec, a robust neural Encodec framework engineered for electroglottography (EGG) signal reconstruction and F0 extraction. We propose a multi-scale frequency-domain loss function to capture the nuanced relationship between original and reconstructed EGG signals, complemented by a time-domain correlation loss to improve generalization and accuracy. Unlike conventional Encodec models that extract F0 directly from features, EGGCodec leverages reconstructed EGG signals, which more closely correspond to F0. By removing the conventional GAN discriminator, we streamline EGGCodec's training process without compromising efficiency, incurring only negligible performance degradation. Extensive evaluations of EGGCodec, trained on a widely used EGG-inclusive dataset, demonstrate that it outperforms state-of-the-art F0 extraction schemes, reducing mean absolute error (MAE) from 14.14 Hz to 13.69 Hz and improving voicing decision error (VDE) by 38.2%. Moreover, extensive ablation experiments validate the contribution of each component of EGGCodec.
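The two metrics quoted above can be illustrated with a minimal sketch. It assumes the common frame-level definitions (an F0 of 0 marks an unvoiced frame, VDE is the share of frames with a wrong voiced/unvoiced decision, and MAE is averaged over frames that both tracks mark as voiced). The abstract does not spell out the letter's exact evaluation protocol, so the function names and conventions here are illustrative assumptions.

```python
def voicing_decision_error(ref_f0, est_f0):
    """Fraction of frames whose voiced/unvoiced decision differs.

    Assumption: F0 == 0 encodes an unvoiced frame in both tracks.
    """
    if len(ref_f0) != len(est_f0):
        raise ValueError("F0 tracks must have the same number of frames")
    if not ref_f0:
        return 0.0
    wrong = sum((r > 0) != (e > 0) for r, e in zip(ref_f0, est_f0))
    return wrong / len(ref_f0)


def f0_mean_absolute_error(ref_f0, est_f0):
    """Mean |ref - est| in Hz over frames that both tracks mark as voiced."""
    if len(ref_f0) != len(est_f0):
        raise ValueError("F0 tracks must have the same number of frames")
    errors = [abs(r - e) for r, e in zip(ref_f0, est_f0) if r > 0 and e > 0]
    return sum(errors) / len(errors) if errors else 0.0


# Toy F0 tracks in Hz (made-up values, not data from the letter).
reference = [0.0, 100.0, 110.0, 120.0, 0.0]
estimate = [0.0, 102.0, 0.0, 117.0, 90.0]

vde = voicing_decision_error(reference, estimate)
mae = f0_mean_absolute_error(reference, estimate)
```

In the toy tracks, two of the five frames disagree on voicing, and the two frames voiced in both tracks differ by 2 Hz and 3 Hz.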

MedCite: Can Language Models Generate Verifiable Text for Medicine?

Jun 07, 2025

Abstract:Existing LLM-based medical question-answering systems lack citation generation and evaluation capabilities, raising concerns about their adoption in practice. In this work, we introduce MedCite, the first end-to-end framework that facilitates the design and evaluation of citation generation with LLMs for medical tasks. We also introduce a novel multi-pass retrieval-citation method that generates high-quality citations. Our evaluation highlights the challenges and opportunities of citation generation for medical tasks, while identifying important design choices that have a significant impact on the final citation quality. Our proposed method achieves superior citation precision and recall compared to strong baseline methods, and we show that evaluation results correlate well with annotation results from professional experts.

Enhancing User-Oriented Proactivity in Open-Domain Dialogues with Critic Guidance

May 18, 2025

Abstract:Open-domain dialogue systems aim to generate natural and engaging conversations, providing significant practical value in real applications such as social robotics and personal assistants. The advent of large language models (LLMs) has greatly advanced this field by improving context understanding and conversational fluency. However, existing LLM-based dialogue systems often fall short in proactively understanding the user's chatting preferences and guiding conversations toward user-centered topics. This lack of user-oriented proactivity can lead users to feel unappreciated, reducing their satisfaction and willingness to continue the conversation in human-computer interactions. To address this issue, we propose a User-oriented Proactive Chatbot (UPC) to enhance user-oriented proactivity. Specifically, we first construct a critic, inspired by the LLM-as-a-judge strategy, to evaluate this proactivity. Given the scarcity of high-quality training data, we then employ the critic to guide dialogues between the chatbot and user agents, generating a corpus with enhanced user-oriented proactivity. To ensure the diversity of user backgrounds, we introduce ISCO-800, a diverse user background dataset for constructing user agents. Moreover, considering that communication difficulty varies among users, we propose an iterative curriculum learning method that trains the chatbot from easy-to-communicate users to more challenging ones, thereby gradually enhancing its performance. Experiments demonstrate that our proposed training method is applicable to different LLMs, improving user-oriented proactivity and attractiveness in open-domain dialogues.

Exploiting Inter-Sample Correlation and Intra-Sample Redundancy for Partially Relevant Video Retrieval

Apr 28, 2025

Abstract:Partially Relevant Video Retrieval (PRVR) aims to retrieve the target video that is partially relevant to the text query. The primary challenge in PRVR arises from the semantic asymmetry between textual and visual modalities, as videos often contain substantial content irrelevant to the query. Existing methods coarsely align paired videos and text queries to construct the semantic space, neglecting the critical cross-modal dual nature inherent in this task: inter-sample correlation and intra-sample redundancy. To this end, we propose a novel PRVR framework to systematically exploit these two characteristics. Our framework consists of three core modules. First, the Inter Correlation Enhancement (ICE) module captures inter-sample correlation by identifying semantically similar yet unpaired text queries and video moments, combining them to form pseudo-positive pairs for more robust semantic space construction. Second, the Intra Redundancy Mining (IRM) module mitigates intra-sample redundancy by mining redundant video moment features and treating them as hard negative samples, thereby encouraging the model to learn more discriminative representations. Finally, to reinforce these modules, we introduce the Temporal Coherence Prediction (TCP) module, which enhances feature discrimination by training the model to predict the original temporal order of randomly shuffled video frames and moments. Extensive experiments on three datasets demonstrate the superiority of our approach compared to previous methods, achieving state-of-the-art results.
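The order-prediction idea behind the TCP module can be sketched as a data-preparation step: shuffle a sequence and keep the original indices as the prediction target. This is only an illustration under simple assumptions, not the authors' implementation; the function names are hypothetical, and the "features" are plain strings standing in for frame or moment embeddings.

```python
import random


def make_order_prediction_sample(features, rng):
    """Shuffle a sequence and return (shuffled, target_order).

    target_order[pos] is the original index of the item now at position pos,
    which is what an order-prediction head would be trained to output.
    """
    order = list(range(len(features)))
    rng.shuffle(order)
    shuffled = [features[i] for i in order]
    return shuffled, order


def restore_original_order(shuffled, predicted_order):
    """Undo the shuffle given a (predicted) original index for every position."""
    restored = [None] * len(shuffled)
    for pos, original_index in enumerate(predicted_order):
        restored[original_index] = shuffled[pos]
    return restored


# Stand-in "frame features"; a real pipeline would use embedding vectors.
frames = ["f0", "f1", "f2", "f3", "f4"]
shuffled_frames, target_order = make_order_prediction_sample(frames, random.Random(0))

# A perfect prediction of target_order recovers the original sequence.
recovered = restore_original_order(shuffled_frames, target_order)
```

A model trained on such pairs has to rely on temporal cues in the features, since the target is defined entirely by the original ordering.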

Dynamic Compressing Prompts for Efficient Inference of Large Language Models

Apr 15, 2025

Abstract:Large Language Models (LLMs) have shown outstanding performance across a variety of tasks, partly due to advanced prompting techniques. However, these techniques often require lengthy prompts, which increase computational costs and can hinder performance because of the limited context windows of LLMs. While prompt compression is a straightforward solution, existing methods confront the challenges of retaining essential information, adapting to context changes, and remaining effective across different tasks. To tackle these issues, we propose a task-agnostic method called Dynamic Compressing Prompts (LLM-DCP). Our method reduces the number of prompt tokens while aiming to preserve the performance as much as possible. We model prompt compression as a Markov Decision Process (MDP), enabling the DCP-Agent to sequentially remove redundant tokens by adapting to dynamic contexts and retaining crucial content. We develop a reward function for training the DCP-Agent that balances the compression rate, the quality of the LLM output, and the retention of key information. This allows for prompt token reduction without needing an external black-box LLM. Inspired by the progressive difficulty adjustment in curriculum learning, we introduce a Hierarchical Prompt Compression (HPC) training strategy that gradually increases the compression difficulty, enabling the DCP-Agent to learn an effective compression method that maintains information integrity. Experiments demonstrate that our method outperforms state-of-the-art techniques, especially at higher compression rates. The code for our approach will be available at https://github.com/Fhujinwu/DCP.
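To make the three-way trade-off in the reward concrete, here is a minimal sketch of one compression step and a reward that combines compression rate, output quality, and key-information retention. Everything specific is an assumption for illustration: the weighted-sum form, the weights, the token-overlap retention proxy, and the function names are hypothetical, and the output-quality score is simply passed in as a number because the abstract does not say how it is computed.

```python
def apply_action(tokens, keep_mask):
    """One MDP step: keep the tokens whose mask entry is True, drop the rest."""
    if len(tokens) != len(keep_mask):
        raise ValueError("keep_mask must have one entry per token")
    return [t for t, keep in zip(tokens, keep_mask) if keep]


def compression_rate(original_tokens, compressed_tokens):
    """Fraction of tokens removed (0.0 = nothing removed)."""
    if not original_tokens:
        return 0.0
    return 1.0 - len(compressed_tokens) / len(original_tokens)


def key_retention(key_tokens, compressed_tokens):
    """Share of designated key tokens that survive (hypothetical proxy)."""
    if not key_tokens:
        return 1.0
    kept = set(compressed_tokens)
    return sum(1 for t in key_tokens if t in kept) / len(key_tokens)


def reward(original_tokens, compressed_tokens, key_tokens, output_quality,
           w_rate=1.0, w_quality=1.0, w_keep=1.0):
    """Weighted sum of the three terms; the weights are illustrative assumptions."""
    return (w_rate * compression_rate(original_tokens, compressed_tokens)
            + w_quality * output_quality
            + w_keep * key_retention(key_tokens, compressed_tokens))


# Toy prompt of ten single-letter "tokens" (made-up data).
prompt = list("abcdefghij")
mask = [True, False, True, False, True, False, True, False, False, False]
compressed = apply_action(prompt, mask)

# output_quality=0.8 is a placeholder score in [0, 1], not a measured value.
step_reward = reward(prompt, compressed, key_tokens=["a", "b"], output_quality=0.8)
```

Raising `w_rate` in this sketch pushes an agent toward more aggressive removal, while `w_keep` penalizes dropping key tokens such as `"b"` in the toy example.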

Mapless Collision-Free Flight via MPC using Dual KD-Trees in Cluttered Environments

Mar 13, 2025

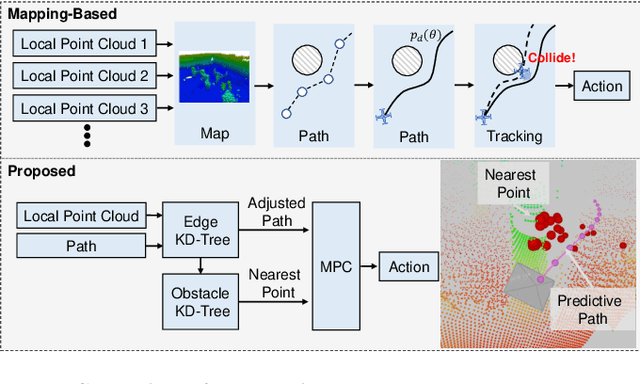

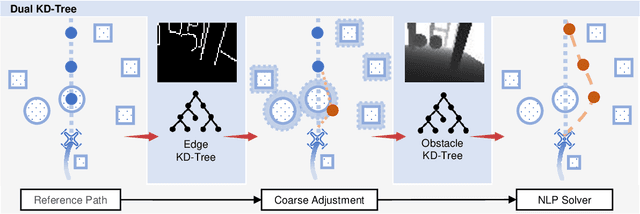

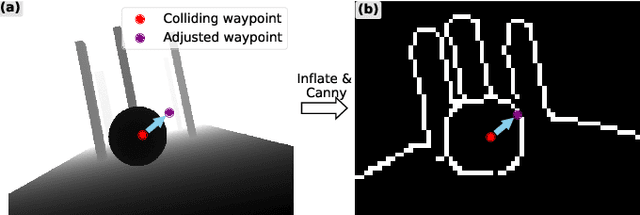

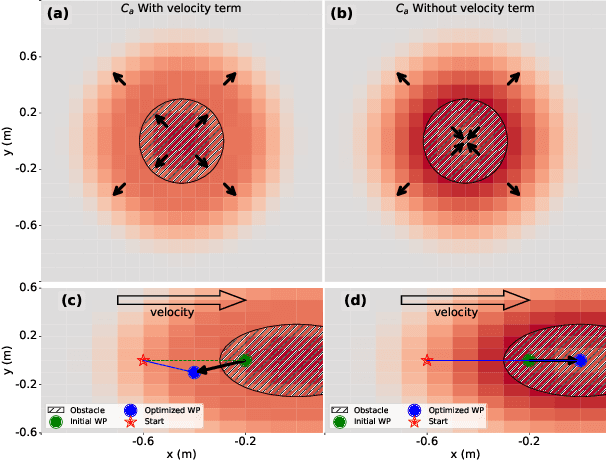

Abstract:Collision-free flight in cluttered environments is a critical capability for autonomous quadrotors. Traditional methods often rely on detailed 3D map construction, trajectory generation, and tracking. However, this cascade pipeline can introduce accumulated errors and computational delays, limiting flight agility and safety. In this paper, we propose a novel method for enabling collision-free flight in cluttered environments without explicitly constructing 3D maps or generating and tracking collision-free trajectories. Instead, we leverage Model Predictive Control (MPC) to directly produce safe actions from sparse waypoints and point clouds from a depth camera. These sparse waypoints are dynamically adjusted online based on nearby obstacles detected from point clouds. To achieve this, we introduce a dual KD-Tree mechanism: the Obstacle KD-Tree quickly identifies the nearest obstacle for avoidance, while the Edge KD-Tree provides a robust initial guess for the MPC solver, preventing it from getting stuck in local minima during obstacle avoidance. We validate our approach through extensive simulations and real-world experiments. The results show that our approach significantly outperforms the mapping-based methods and is also superior to imitation learning-based methods, demonstrating reliable obstacle avoidance at up to 12 m/s in simulations and 6 m/s in real-world tests. Our method provides a simple and robust alternative to existing methods.
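The nearest-obstacle lookup that a KD-tree enables can be sketched in a few lines of pure Python. This is a generic KD-tree over point-cloud coordinates, offered as an illustration only: it is not the authors' Obstacle KD-Tree, it does not cover the Edge KD-Tree or the MPC solver, and the function names and sample points are hypothetical.

```python
import math


def build_kdtree(points, depth=0):
    """Recursively build a KD-tree (nested dicts) from equal-length tuples."""
    if not points:
        return None
    axis = depth % len(points[0])  # cycle through x, y, z, ...
    ordered = sorted(points, key=lambda p: p[axis])
    mid = len(ordered) // 2
    return {
        "point": ordered[mid],
        "axis": axis,
        "left": build_kdtree(ordered[:mid], depth + 1),
        "right": build_kdtree(ordered[mid + 1:], depth + 1),
    }


def nearest(node, query, best=None):
    """Return (distance, point) for the stored point closest to query."""
    if node is None:
        return best
    dist = math.dist(node["point"], query)
    if best is None or dist < best[0]:
        best = (dist, node["point"])
    diff = query[node["axis"]] - node["point"][node["axis"]]
    near, far = (node["left"], node["right"]) if diff < 0 else (node["right"], node["left"])
    best = nearest(near, query, best)
    # Search the far side only if the splitting plane is closer than the best hit.
    if abs(diff) < best[0]:
        best = nearest(far, query, best)
    return best


# Made-up obstacle points (metres) standing in for a depth-camera point cloud.
obstacles = [(1.0, 2.0, 0.5), (4.0, 0.0, 1.0), (2.5, 2.5, 2.5), (0.0, 5.0, 1.0)]
tree = build_kdtree(obstacles)
distance, closest = nearest(tree, (2.0, 2.0, 1.0))
```

The pruning test on the splitting plane is what lets a query skip most of the point cloud, which matters when the lookup runs inside a control loop.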