Kai Zhou

PositionOCR: Augmenting Positional Awareness in Multi-Modal Models via Hybrid Specialist Integration

Feb 22, 2026

Abstract:In recent years, Multi-modal Large Language Models (MLLMs) have achieved strong performance in OCR-centric Visual Question Answering (VQA) tasks, illustrating their capability to process heterogeneous data and exhibit adaptability across varied contexts. However, these MLLMs rely on a Large Language Model (LLM) as the decoder, which is primarily designed for linguistic processing, and thus inherently lacks the positional reasoning required for precise visual tasks, such as text spotting and text grounding. Additionally, the extensive parameters of MLLMs necessitate substantial computational resources and large-scale data for effective training. Conversely, text spotting specialists achieve state-of-the-art coordinate predictions but lack semantic reasoning capabilities. This dichotomy motivates our key research question: Can we synergize the efficiency of specialists with the contextual power of LLMs to create a positionally-accurate MLLM? To overcome these challenges, we introduce PositionOCR, a parameter-efficient hybrid architecture that seamlessly integrates a text spotting model's positional strengths with an LLM's contextual reasoning. Comprising 131M trainable parameters, this framework demonstrates outstanding multi-modal processing capabilities, particularly excelling in tasks such as text grounding and text spotting, consistently surpassing traditional MLLMs.

Context Forcing: Consistent Autoregressive Video Generation with Long Context

Feb 05, 2026

Abstract:Recent approaches to real-time long video generation typically employ streaming tuning strategies, attempting to train a long-context student using a short-context (memoryless) teacher. In these frameworks, the student performs long rollouts but receives supervision from a teacher limited to short 5-second windows. This structural discrepancy creates a critical student-teacher mismatch: the teacher's inability to access long-term history prevents it from guiding the student on global temporal dependencies, effectively capping the student's context length. To resolve this, we propose Context Forcing, a novel framework that trains a long-context student via a long-context teacher. By ensuring the teacher is aware of the full generation history, we eliminate the supervision mismatch, enabling the robust training of models capable of long-term consistency. To make this computationally feasible for extreme durations (e.g., 2 minutes), we introduce a context management system that transforms the linearly growing context into a Slow-Fast Memory architecture, significantly reducing visual redundancy. Extensive results demonstrate that our method enables effective context lengths exceeding 20 seconds, 2 to 10 times longer than state-of-the-art methods like LongLive and Infinite-RoPE. By leveraging this extended context, Context Forcing preserves superior consistency across long durations, surpassing state-of-the-art baselines on various long video evaluation metrics.

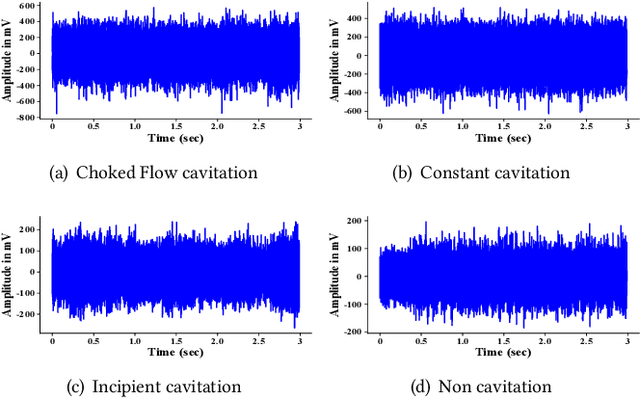

A Review of Machine Learning for Cavitation Intensity Recognition in Complex Industrial Systems

Nov 19, 2025

Abstract:Cavitation intensity recognition (CIR) is a critical technology for detecting and evaluating cavitation phenomena in hydraulic machinery, with significant implications for operational safety, performance optimization, and maintenance cost reduction in complex industrial systems. Despite substantial research progress, a comprehensive review that systematically traces the development trajectory and provides explicit guidance for future research is still lacking. To bridge this gap, this paper presents a thorough review and analysis of hundreds of publications on intelligent CIR across various types of mechanical equipment from 2002 to 2025, summarizing its technological evolution and offering insights for future development. The early stages were dominated by traditional machine learning approaches that relied on manually engineered features guided by domain expert knowledge. The advent of deep learning has driven the development of end-to-end models capable of automatically extracting features from multi-source signals, thereby significantly improving recognition performance and robustness. Recently, physics-informed diagnostic models have been proposed to embed domain knowledge into deep learning models, which can enhance interpretability and cross-condition generalization. In the future, transfer learning, multi-modal fusion, lightweight network architectures, and the deployment of industrial agents are expected to propel CIR technology into a new stage, addressing challenges in multi-source data acquisition, standardized evaluation, and industrial implementation. The paper aims to systematically outline the evolution of CIR technology and highlight the emerging trend of integrating deep learning with physical knowledge. This provides a significant reference for researchers and practitioners in the field of intelligent cavitation diagnosis in complex industrial systems.

Stealthy Dual-Trigger Backdoors: Attacking Prompt Tuning in LM-Empowered Graph Foundation Models

Oct 16, 2025

Abstract:The emergence of graph foundation models (GFMs), particularly those incorporating language models (LMs), has revolutionized graph learning and demonstrated remarkable performance on text-attributed graphs (TAGs). However, compared to traditional GNNs, these LM-empowered GFMs introduce unique security vulnerabilities during the unsecured prompt tuning phase that remain understudied in current research. Through empirical investigation, we reveal a significant performance degradation in traditional graph backdoor attacks when operating in attribute-inaccessible constrained TAG systems without explicit trigger node attribute optimization. To address this, we propose a novel dual-trigger backdoor attack framework that operates at both text-level and struct-level, enabling effective attacks without explicit optimization of trigger node text attributes through the strategic utilization of a pre-established text pool. Extensive experimental evaluations demonstrate that our attack maintains superior clean accuracy while achieving outstanding attack success rates, including scenarios with highly concealed single-trigger nodes. Our work highlights critical backdoor risks in web-deployed LM-empowered GFMs and contributes to the development of more robust supervision mechanisms for open-source platforms in the era of foundation models.

Hierarchical knowledge guided fault intensity diagnosis of complex industrial systems

Aug 17, 2025

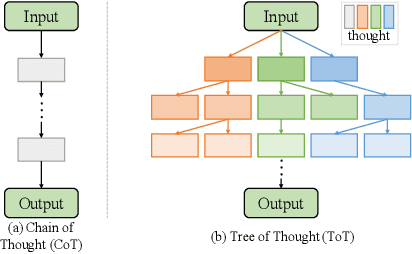

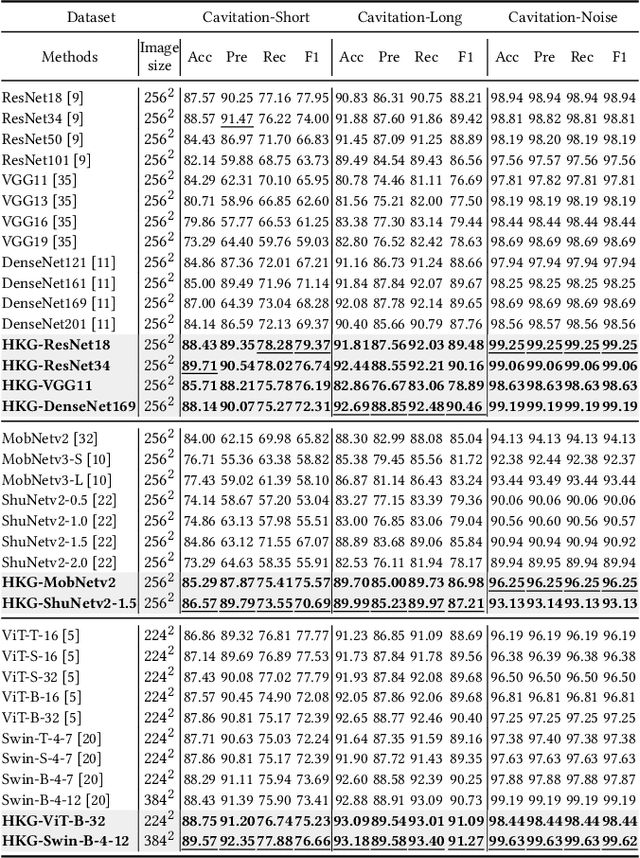

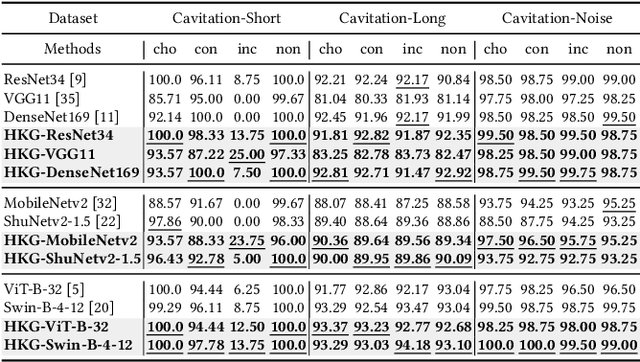

Abstract:Fault intensity diagnosis (FID) plays a pivotal role in monitoring and maintaining mechanical devices within complex industrial systems. Current FID methods are based on chain of thought and do not consider dependencies among target classes. To capture and explore these dependencies, we propose a hierarchical knowledge guided fault intensity diagnosis framework (HKG) inspired by the tree of thought, which is amenable to any representation learning method. The HKG uses graph convolutional networks to map the hierarchical topological graph of class representations into a set of interdependent global hierarchical classifiers, where each node is denoted by the word embeddings of a class. These global hierarchical classifiers are applied to learned deep features extracted by representation learning, allowing the entire model to be end-to-end learnable. In addition, we develop a re-weighted hierarchical knowledge correlation matrix (Re-HKCM) scheme by embedding inter-class hierarchical knowledge into a data-driven statistical correlation matrix (SCM), which effectively guides the information sharing of nodes in graph convolutional networks and avoids over-smoothing issues. The Re-HKCM is derived from the SCM through a series of mathematical transformations. Extensive experiments are performed on four real-world datasets from different industrial domains (three cavitation datasets from SAMSON AG and one publicly available dataset) for FID, all showing superior results and outperforming recent state-of-the-art FID methods.

* 12 pages

Real-Time Cascade Mitigation in Power Systems Using Influence Graph Improved by Reinforcement Learning

Jun 10, 2025

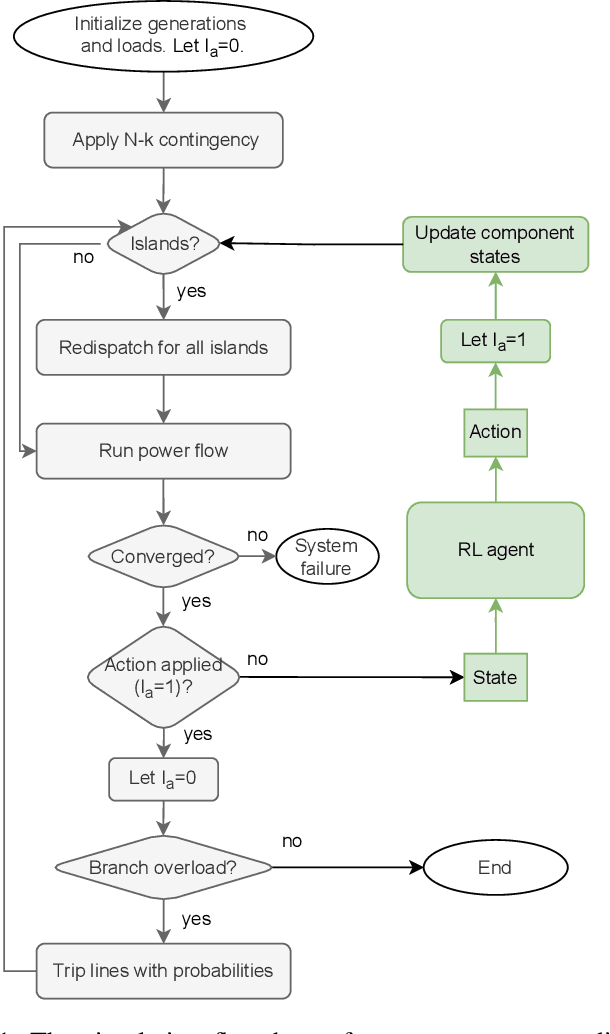

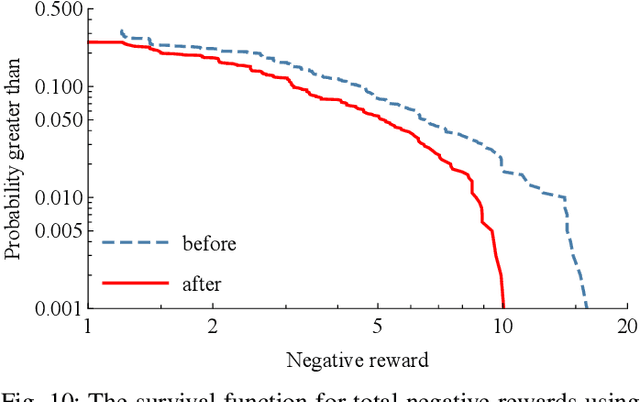

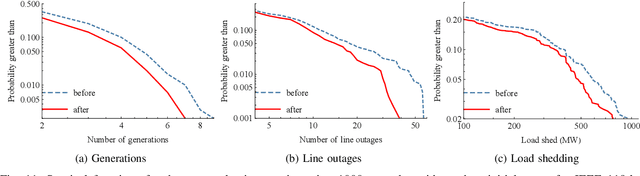

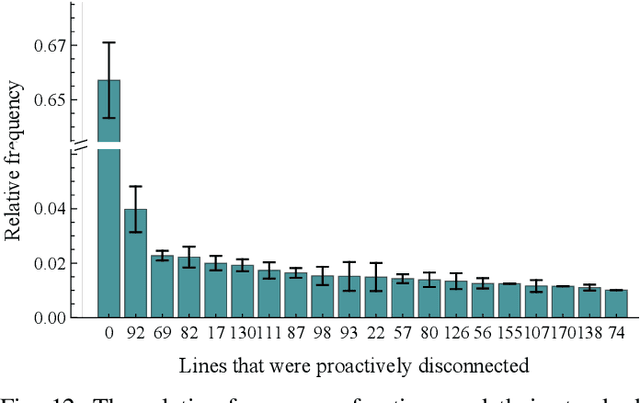

Abstract:Despite high reliability, modern power systems with growing renewable penetration face an increasing risk of cascading outages. Real-time cascade mitigation requires fast, complex operational decisions under uncertainty. In this work, we extend the influence graph into a Markov decision process model (MDP) for real-time mitigation of cascading outages in power transmission systems, accounting for uncertainties in generation, load, and initial contingencies. The MDP includes a do-nothing action to allow for conservative decision-making and is solved using reinforcement learning. We present a policy gradient learning algorithm initialized with a policy corresponding to the unmitigated case and designed to handle invalid actions. The proposed learning method converges faster than the conventional algorithm. Through careful reward design, we learn a policy that takes conservative actions without deteriorating system conditions. The model is validated on the IEEE 14-bus and IEEE 118-bus systems. The results show that proactive line disconnections can effectively reduce cascading risk, and certain lines consistently emerge as critical in mitigating cascade propagation.
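The abstract mentions a policy gradient method "designed to handle invalid actions" but does not say how. One common way to do this, shown here purely as an illustrative assumption and not as the authors' algorithm, is to mask invalid actions out of the policy's softmax so they receive zero probability. The function name `masked_softmax` and its arguments are hypothetical.

```python
import math
from typing import List, Sequence


def masked_softmax(logits: Sequence[float], valid: Sequence[bool]) -> List[float]:
    """Turn policy logits into action probabilities, giving invalid actions probability 0.

    Illustrative assumption: this is a generic masking technique, not the
    method described in the paper.
    """
    if len(logits) != len(valid):
        raise ValueError("logits and valid must have the same length")
    if not any(valid):
        raise ValueError("at least one action must be valid")
    # Subtract the largest valid logit for numerical stability.
    peak = max(l for l, ok in zip(logits, valid) if ok)
    exps = [math.exp(l - peak) if ok else 0.0 for l, ok in zip(logits, valid)]
    total = sum(exps)
    return [e / total for e in exps]


# Example: the second action (say, a line that is already disconnected) is invalid.
probs = masked_softmax([1.0, 2.0, 3.0], [True, False, True])
```

Because the masked entries contribute nothing to the normalizer, the remaining probabilities still sum to one, and sampling from `probs` can never select an invalid action.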

Unifying Physics- and Data-Driven Modeling via Novel Causal Spatiotemporal Graph Neural Network for Interpretable Epidemic Forecasting

Apr 07, 2025

Abstract:Accurate epidemic forecasting is crucial for effective disease control and prevention. Traditional compartmental models often struggle to estimate temporally and spatially varying epidemiological parameters, while deep learning models typically overlook disease transmission dynamics and lack interpretability in the epidemiological context. To address these limitations, we propose a novel Causal Spatiotemporal Graph Neural Network (CSTGNN), a hybrid framework that integrates a Spatio-Contact SIR model with Graph Neural Networks (GNNs) to capture the spatiotemporal propagation of epidemics. Inter-regional human mobility exhibits continuous and smooth spatiotemporal patterns, leading to adjacent graph structures that share underlying mobility dynamics. To model these dynamics, we employ an adaptive static connectivity graph to represent the stable components of human mobility and utilize a temporal dynamics model to capture fluctuations within these patterns. By integrating the adaptive static connectivity graph with the temporal dynamics graph, we construct a dynamic graph that encapsulates the comprehensive properties of human mobility networks. Additionally, to capture temporal trends and variations in infectious disease spread, we introduce a temporal decomposition model to handle temporal dependence. This model is then integrated with a dynamic graph convolutional network for epidemic forecasting. We validate our model using real-world datasets at the provincial level in China and the state level in Germany. Extensive studies demonstrate that our method effectively models the spatiotemporal dynamics of infectious diseases, providing a valuable tool for forecasting and intervention strategies. Furthermore, analysis of the learned parameters offers insights into disease transmission mechanisms, enhancing the interpretability and practical applicability of our model.
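For readers unfamiliar with compartmental models, the sketch below shows one forward-Euler step of the classic single-region SIR model. It is not the Spatio-Contact SIR variant from the paper, whose equations the abstract does not give; the function name `sir_step` and the parameter values are assumptions chosen for illustration.

```python
from typing import Tuple


def sir_step(
    s: float, i: float, r: float, beta: float, gamma: float, dt: float = 1.0
) -> Tuple[float, float, float]:
    """Advance the classic SIR model by one forward-Euler step of length dt.

    beta is the transmission rate and gamma the recovery rate. In a hybrid
    model these would be estimated per region and time step; here they are
    fixed constants (an assumption for illustration).
    """
    n = s + i + r
    new_infections = beta * s * i / n * dt
    new_recoveries = gamma * i * dt
    return (
        s - new_infections,
        i + new_infections - new_recoveries,
        r + new_recoveries,
    )


# Example: 1000 people, 10 initially infected.
s, i, r = sir_step(990.0, 10.0, 0.0, beta=0.3, gamma=0.1)
```

Each step only moves people between compartments, so the total population `s + i + r` is conserved.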

AuditVotes: A Framework Towards More Deployable Certified Robustness for Graph Neural Networks

Mar 29, 2025

Abstract:Despite advancements in Graph Neural Networks (GNNs), adaptive attacks continue to challenge their robustness. Certified robustness based on randomized smoothing has emerged as a promising solution, offering provable guarantees that a model's predictions remain stable under adversarial perturbations within a specified range. However, existing methods face a critical trade-off between accuracy and robustness, as achieving stronger robustness requires introducing greater noise into the input graph. This excessive randomization degrades data quality and disrupts prediction consistency, limiting the practical deployment of certifiably robust GNNs in real-world scenarios where both accuracy and robustness are essential. To address this challenge, we propose AuditVotes, the first framework to achieve both high clean accuracy and certifiably robust accuracy for GNNs. It integrates randomized smoothing with two key components, **au**gmentation and con**dit**ional smoothing, aiming to improve data quality and prediction consistency. The augmentation, acting as a pre-processing step, de-noises the randomized graph, significantly improving data quality and clean accuracy. The conditional smoothing, serving as a post-processing step, employs a filtering function to selectively count votes, thereby filtering low-quality predictions and improving voting consistency. Extensive experimental results demonstrate that AuditVotes significantly enhances clean accuracy, certified robustness, and empirical robustness while maintaining high computational efficiency. Notably, compared to baseline randomized smoothing, AuditVotes improves clean accuracy by 437.1% and certified accuracy by 409.3% when the attacker can arbitrarily insert 20 edges on the Cora-ML dataset, representing a substantial step toward deploying certifiably robust GNNs in real-world applications.
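The idea of "selectively counting votes" can be sketched as a filtered majority vote over predictions made on randomized copies of the graph. This is a minimal illustration, not the AuditVotes implementation: the abstract does not specify the filtering function, so the confidence threshold used below, along with the names `conditional_majority_vote` and `keep`, is an assumption.

```python
from collections import Counter
from typing import Callable, Optional, Sequence, Tuple

Vote = Tuple[int, float]  # (predicted class, confidence score)


def conditional_majority_vote(
    votes: Sequence[Vote], keep: Callable[[Vote], bool]
) -> Optional[int]:
    """Return the majority class among the votes accepted by the filter.

    Returns None when the filter rejects every vote. The filter is a
    hypothetical stand-in for the paper's filtering function.
    """
    kept = [label for label, confidence in votes if keep((label, confidence))]
    if not kept:
        return None
    return Counter(kept).most_common(1)[0][0]


# Predictions on five randomized graph samples for one node.
votes = [(0, 0.9), (1, 0.4), (1, 0.45), (0, 0.8), (1, 0.3)]

plain = conditional_majority_vote(votes, keep=lambda v: True)
filtered = conditional_majority_vote(votes, keep=lambda v: v[1] >= 0.5)
```

In this toy input, plain voting picks class 1 on the strength of three low-confidence votes, while the filtered vote keeps only the two confident predictions and picks class 0.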

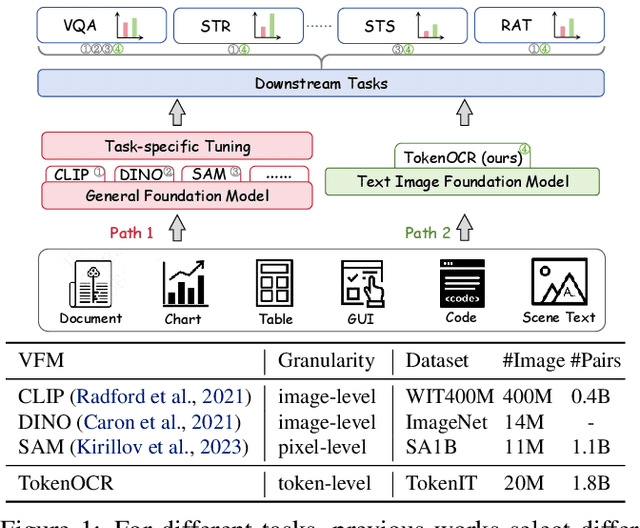

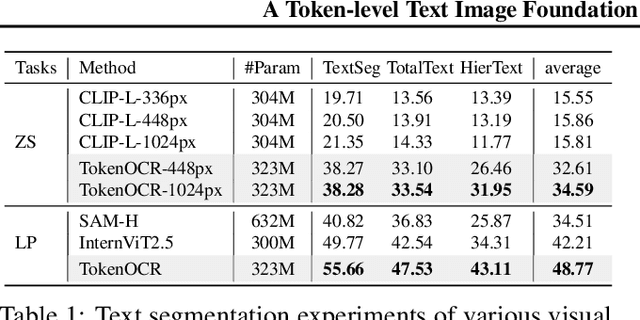

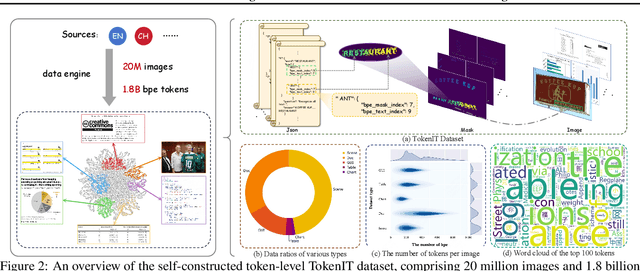

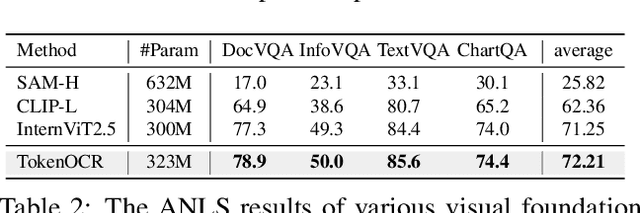

A Token-level Text Image Foundation Model for Document Understanding

Mar 04, 2025

Abstract:In recent years, general visual foundation models (VFMs) have witnessed increasing adoption, particularly as image encoders for popular multi-modal large language models (MLLMs). However, without semantically fine-grained supervision, these models still encounter fundamental prediction errors in the context of downstream text-image-related tasks, i.e., perception, understanding and reasoning with images containing small and dense texts. To bridge this gap, we develop TokenOCR, the first token-level visual foundation model specifically tailored for text-image-related tasks, designed to support a variety of traditional downstream applications. To facilitate the pretraining of TokenOCR, we also devise a high-quality data production pipeline that constructs the first token-level image text dataset, TokenIT, comprising 20 million images and 1.8 billion token-mask pairs. Furthermore, leveraging this foundation with exceptional image-as-text capability, we seamlessly replace previous VFMs with TokenOCR to construct a document-level MLLM, TokenVL, for VQA-based document understanding tasks. Finally, extensive experiments demonstrate the effectiveness of TokenOCR and TokenVL. Code, datasets, and weights will be available at https://token-family.github.io/TokenOCR_project.

Multimodal Large Language Models for Text-rich Image Understanding: A Comprehensive Review

Feb 23, 2025

Abstract:The recent emergence of Multi-modal Large Language Models (MLLMs) has introduced a new dimension to the Text-rich Image Understanding (TIU) field, with models demonstrating impressive and inspiring performance. However, their rapid evolution and widespread adoption have made it increasingly challenging to keep up with the latest advancements. To address this, we present a systematic and comprehensive survey to facilitate further research on TIU MLLMs. Initially, we outline the timeline, architecture, and pipeline of nearly all TIU MLLMs. Then, we review the performance of selected models on mainstream benchmarks. Finally, we explore promising directions, challenges, and limitations within the field.
