Yiu-ming Cheung

Bridging the Semantic Gap for Categorical Data Clustering via Large Language Models

Jan 03, 2026

Abstract: Categorical data are prevalent in domains such as healthcare, marketing, and bioinformatics, where clustering serves as a fundamental tool for pattern discovery. A core challenge in categorical data clustering lies in measuring similarity among attribute values that lack inherent ordering or distance. Without appropriate similarity measures, values are often treated as equidistant, creating a semantic gap that obscures latent structures and degrades clustering quality. Although existing methods infer value relationships from within-dataset co-occurrence patterns, such inference becomes unreliable when samples are limited, leaving the semantic context of the data underexplored. To bridge this gap, we present ARISE (Attention-weighted Representation with Integrated Semantic Embeddings), which draws on external semantic knowledge from Large Language Models (LLMs) to construct semantic-aware representations that complement the metric space of categorical data for accurate clustering. Specifically, an LLM is adopted to describe attribute values for representation enhancement, and the LLM-enhanced embeddings are combined with the original data to explore semantically prominent clusters. Experiments on eight benchmark datasets demonstrate consistent improvements over seven representative counterparts, with gains of 19-27%. Code is available at https://github.com/develop-yang/ARISE
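As a minimal sketch of the "equidistant" problem mentioned in this abstract (not part of ARISE itself), the snippet below one-hot encodes three hypothetical attribute values and shows that every pair of distinct values ends up at the same Euclidean distance, regardless of meaning. The value names are illustrative assumptions.

```python
import math

def one_hot(value, vocabulary):
    """Encode a categorical value as a one-hot list over the vocabulary."""
    return [1.0 if value == v else 0.0 for v in vocabulary]

def euclidean(a, b):
    """Plain Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

# Hypothetical attribute values, chosen only for illustration.
vocabulary = ["flu", "cold", "fracture"]
encoded = {v: one_hot(v, vocabulary) for v in vocabulary}

# "flu" is semantically closer to "cold" than to "fracture",
# yet one-hot encoding places both pairs at the same distance.
d_flu_cold = euclidean(encoded["flu"], encoded["cold"])
d_flu_fracture = euclidean(encoded["flu"], encoded["fracture"])
print(d_flu_cold, d_flu_fracture)
```

Both distances equal the square root of 2, which is the gap that external semantic knowledge is meant to close.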

Break the Tie: Learning Cluster-Customized Category Relationships for Categorical Data Clustering

Nov 12, 2025

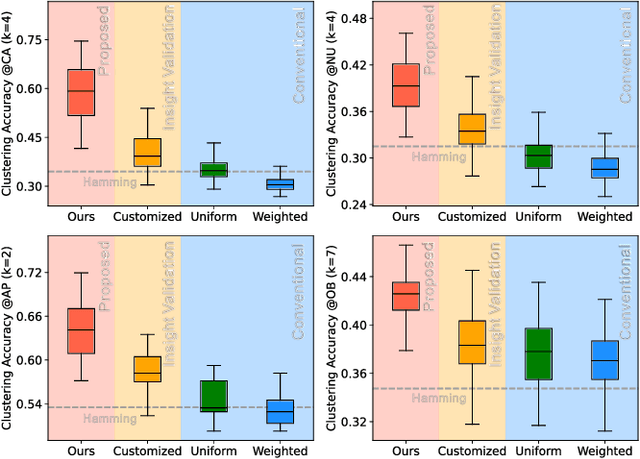

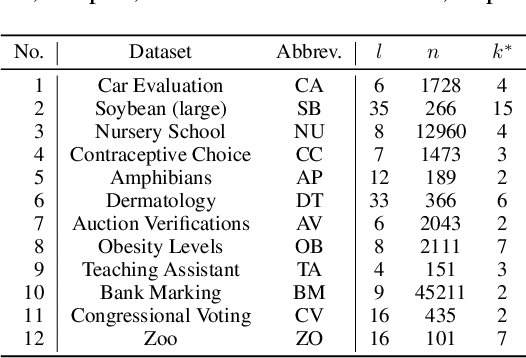

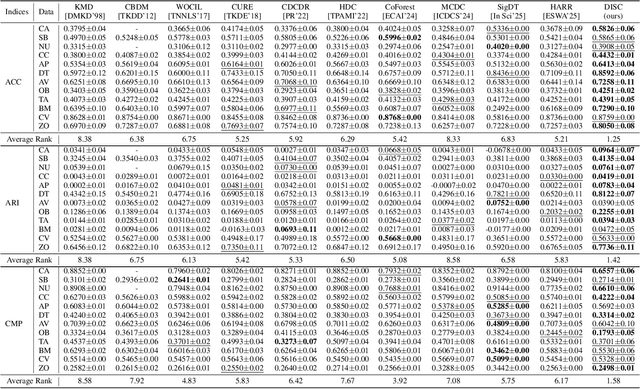

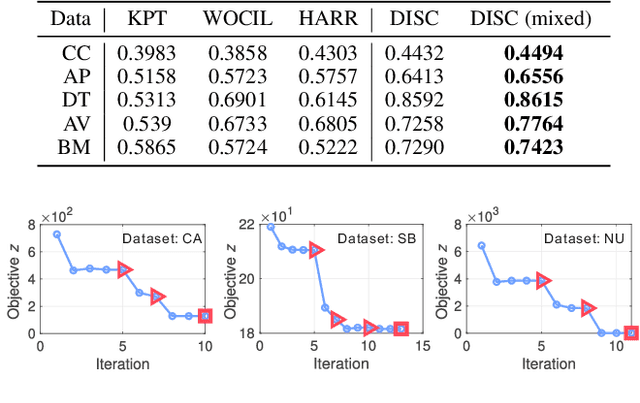

Abstract: Categorical attributes with qualitative values are ubiquitous in cluster analysis of real datasets. Unlike numerical attributes, which can be compared with the Euclidean distance, categorical attributes lack well-defined relationships among their possible values (also called categories), which hampers the exploration of compact categorical data clusters. Although most existing efforts focus on developing appropriate distance metrics, they typically assume a fixed topological relationship between categories when learning distance metrics, which limits their adaptability to varying cluster structures and often leads to suboptimal clustering performance. This paper, therefore, breaks the intrinsic relationship tie of attribute categories and learns customized distance metrics suitable for flexibly and accurately revealing various cluster distributions. As a result, the fitting ability of the clustering algorithm is significantly enhanced, benefiting from the learnable category relationships. Moreover, the learned category relationships are proven to be compatible with the Euclidean distance metric, enabling a seamless extension to mixed datasets that include both numerical and categorical attributes. Comparative experiments on 12 real benchmark datasets with significance tests show the superior clustering accuracy of the proposed method, with an average ranking of 1.25, which is significantly better than the 5.21 average ranking of the current best-performing method.

CADM: Cluster-customized Adaptive Distance Metric for Categorical Data Clustering

Nov 08, 2025

Abstract: An appropriate distance metric is crucial for categorical data clustering, as the distance between categorical data cannot be directly calculated. However, the distances between attribute values usually vary across clusters because of their different distributions, which has not been taken into account, thus leading to unreasonable distance measurement. Therefore, we propose a cluster-customized distance metric for categorical data clustering, which can competitively update distances based on the different distributions of attributes in each cluster. In addition, we extend the proposed distance metric to mixed data that contain both numerical and categorical attributes. Experiments demonstrate the efficacy of the proposed method, i.e., achieving an average ranking close to first on fourteen datasets. The source code is available at https://anonymous.4open.science/r/CADM-47D8

TYrPPG: Uncomplicated and Enhanced Learning Capability rPPG for Remote Heart Rate Estimation

Nov 08, 2025

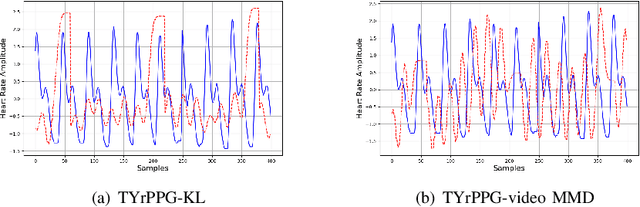

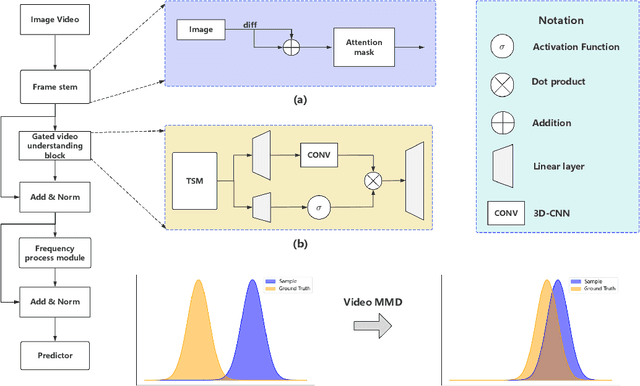

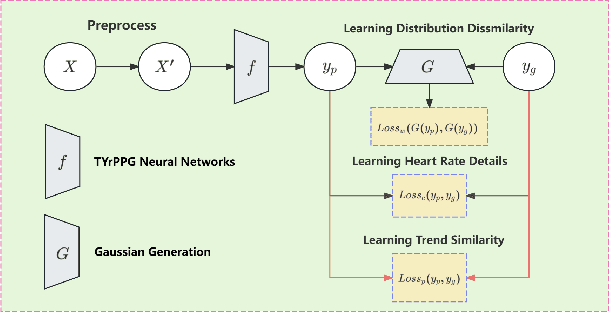

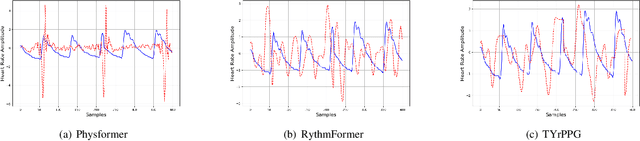

Abstract: Remote photoplethysmography (rPPG) can remotely extract physiological signals from RGB video, which offers advantages for heart rate detection such as low cost and non-invasiveness. Existing rPPG models are usually based on transformer modules, which have low computational efficiency. Recently, the Mamba model has garnered increasing attention due to its efficient performance in natural language processing tasks, demonstrating potential as a substitute for transformer-based algorithms. However, the Mambaout model and its variants show that the SSM module, which is the core component of the Mamba model, is unnecessary for vision tasks. Therefore, we aim to demonstrate the feasibility of using a Mambaout-based module to remotely learn the heart rate. Specifically, we propose a novel rPPG algorithm called uncomplicated and enhanced learning capability rPPG (TYrPPG). This paper introduces an innovative gated video understanding block (GVB) designed for efficient analysis of RGB videos. Based on the Mambaout structure, this block integrates 2D-CNN and 3D-CNN to enhance video understanding. In addition, we propose a comprehensive supervised loss function (CSL) to improve the model's learning capability, along with its weakly supervised variants. The experiments show that TYrPPG achieves state-of-the-art performance on commonly used datasets, indicating its prospects and superiority in remote heart rate estimation. The source code is available at https://github.com/Taixi-CHEN/TYrPPG.

Classifying Long-tailed and Label-noise Data via Disentangling and Unlearning

Mar 14, 2025

Abstract: In real-world datasets, the challenges of long-tailed distributions and noisy labels often coexist, posing obstacles to model training and performance. Existing studies on long-tailed noisy label learning (LTNLL) typically assume that the generation of noisy labels is independent of the long-tailed distribution, which may not be true from a practical perspective. In real-world situations, we observe that tail class samples are more likely to be mislabeled as head classes, exacerbating the original degree of imbalance. We call this phenomenon "tail-to-head (T2H)" noise. T2H noise severely degrades model performance by polluting the head classes and forcing the model to learn the tail samples as head. To address this challenge, we investigate the dynamic misleading process of the noisy labels and propose a novel method called Disentangling and Unlearning for Long-tailed and Label-noisy data (DULL). It first employs Inner-Feature Disentangling (IFD) to disentangle features internally. Based on this, Inner-Feature Partial Unlearning (IFPU) is then applied to weaken and unlearn incorrect feature regions correlated with wrong classes. This method prevents the model from being misled by noisy labels, enhancing the model's robustness against noise. To provide a controlled experimental environment, we further propose a new noise addition algorithm to simulate T2H noise. Extensive experiments on both simulated and real-world datasets demonstrate the effectiveness of our proposed method.
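To make the T2H idea concrete, here is a rough sketch of one way such noise could be simulated. It is not the noise addition algorithm proposed in the paper, whose details are not given in this abstract. The function name `add_t2h_noise`, the uniform choice of head class, and the single `flip_prob` parameter are all assumptions made for illustration.

```python
import random
from collections import Counter

def add_t2h_noise(labels, head_classes, tail_classes, flip_prob, seed=0):
    """Hypothetical T2H noise: relabel tail samples as a head class.

    Each tail-class label is replaced, with probability flip_prob,
    by a head class drawn uniformly at random. Head labels are untouched.
    """
    rng = random.Random(seed)
    head = list(head_classes)
    tail = set(tail_classes)
    noisy = []
    for y in labels:
        if y in tail and rng.random() < flip_prob:
            noisy.append(rng.choice(head))
        else:
            noisy.append(y)
    return noisy

# Toy long-tailed label set: class 0 is the head, classes 1 and 2 are tails.
labels = [0] * 900 + [1] * 80 + [2] * 20
noisy = add_t2h_noise(labels, head_classes=[0], tail_classes=[1, 2], flip_prob=0.3)
print(Counter(labels), Counter(noisy))
```

Because flips only move samples from tail to head, the head count can only grow, which mirrors the abstract's point that T2H noise worsens the original imbalance.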

Iterative Prompt Relocation for Distribution-Adaptive Visual Prompt Tuning

Mar 10, 2025

Abstract: Visual prompt tuning (VPT) provides an efficient and effective solution for adapting pre-trained models to various downstream tasks by incorporating learnable prompts. However, most prior art indiscriminately applies a fixed prompt distribution across different tasks, neglecting that the importance of each block differs depending on the task. In this paper, we investigate adaptive distribution optimization (ADO) by addressing two key questions: (1) How to appropriately and formally define ADO, and (2) How to design an adaptive distribution strategy guided by this definition? Through in-depth analysis, we provide an affirmative answer that properly adjusting the distribution significantly improves VPT performance, and further uncover a key insight that a nested relationship exists between ADO and VPT. Based on these findings, we propose a new VPT framework, termed PRO-VPT (iterative Prompt RelOcation-based VPT), which adaptively adjusts the distribution building upon a nested optimization formulation. Specifically, we develop a prompt relocation strategy for ADO derived from this formulation, comprising two optimization steps: identifying and pruning idle prompts, followed by determining the optimal blocks for their relocation. By iteratively performing prompt relocation and VPT, our proposal adaptively learns the optimal prompt distribution, thereby unlocking the full potential of VPT. Extensive experiments demonstrate that our proposal significantly outperforms state-of-the-art VPT methods, e.g., PRO-VPT surpasses VPT by 1.6% average accuracy, leading prompt-based methods to state-of-the-art performance on the VTAB-1k benchmark. The code is available at https://github.com/ckshang/PRO-VPT.

ReCon: Enhancing True Correspondence Discrimination through Relation Consistency for Robust Noisy Correspondence Learning

Feb 27, 2025

Abstract: Can we accurately identify the true correspondences from multimodal datasets containing mismatched data pairs? Existing methods primarily emphasize the similarity matching between the representations of objects across modalities, potentially neglecting the crucial relation consistency within modalities, which is particularly important for distinguishing true from false correspondences. Such an omission often runs the risk of misidentifying negatives as positives, thus leading to unanticipated performance degradation. To address this problem, we propose a general Relation Consistency learning framework, namely ReCon, to accurately discriminate the true correspondences among the multimodal data and thus effectively mitigate the adverse impact caused by mismatches. Specifically, ReCon leverages a novel relation consistency learning scheme to ensure dual alignment of both the cross-modal relation consistency between different modalities and the intra-modal relation consistency within modalities. Thanks to such dual constraints on relations, ReCon significantly enhances its effectiveness for true correspondence discrimination and therefore reliably filters out the mismatched pairs to mitigate the risks of wrong supervision. Extensive experiments on three widely used benchmark datasets, including Flickr30K, MS-COCO, and Conceptual Captions, are conducted to demonstrate the effectiveness and superiority of ReCon compared with other SOTAs. The code is available at: https://github.com/qxzha/ReCon.

Asynchronous Federated Clustering with Unknown Number of Clusters

Dec 29, 2024

Abstract: Federated Clustering (FC) is crucial to mining knowledge from unlabeled non-Independent and Identically Distributed (non-IID) data provided by multiple clients while preserving their privacy. Most existing attempts learn cluster distributions at local clients, and then securely pass the desensitized information to the server for aggregation. However, some tricky but common FC problems are still relatively unexplored, including the heterogeneity of clients' communication capacity and the unknown number of proper clusters $k^*$. To further bridge the gap between FC and real application scenarios, this paper first shows that the clients' communication asynchrony and unknown $k^*$ are complex coupled problems, and then proposes an Asynchronous Federated Cluster Learning (AFCL) method accordingly. It spreads an excessive number of seed points to the clients as a learning medium and coordinates them across the clients to form a consensus. To alleviate the distribution imbalance accumulated due to unforeseen asynchronous uploading from the heterogeneous clients, we also design a balancing mechanism for seed updating. As a result, the seeds gradually adapt to each other to reveal a proper number of clusters. Extensive experiments demonstrate the efficacy of AFCL.

Implementing Trust in Non-Small Cell Lung Cancer Diagnosis with a Conformalized Uncertainty-Aware AI Framework in Whole-Slide Images

Dec 28, 2024

Abstract: Ensuring trustworthiness is fundamental to the development of artificial intelligence (AI) that is considered societally responsible, particularly in cancer diagnostics, where a misdiagnosis can have dire consequences. Current digital pathology AI models lack systematic solutions to address trustworthiness concerns arising from model limitations and data discrepancies between model deployment and development environments. To address this issue, we developed TRUECAM, a framework designed to ensure both data and model trustworthiness in non-small cell lung cancer subtyping with whole-slide images. TRUECAM integrates 1) a spectral-normalized neural Gaussian process for identifying out-of-scope inputs and 2) an ambiguity-guided elimination of tiles to filter out highly ambiguous regions, addressing data trustworthiness, as well as 3) conformal prediction to ensure controlled error rates. We systematically evaluated the framework across multiple large-scale cancer datasets, leveraging both task-specific and foundation models, and illustrate that an AI model wrapped with TRUECAM significantly outperforms models that lack such guidance in terms of classification accuracy, robustness, interpretability, and data efficiency, while also achieving improvements in fairness. These findings highlight TRUECAM as a versatile wrapper framework for digital pathology AI models with diverse architectural designs, promoting their responsible and effective application in real-world settings.
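For readers unfamiliar with conformal prediction, the following is a generic sketch of split conformal classification, which is the standard textbook form of the technique and not TRUECAM's implementation. It assumes a nonconformity score of one minus the predicted probability of the true class. The calibration probabilities and labels are made-up toy values, and the function names are hypothetical.

```python
import math

def conformal_threshold(cal_probs, cal_labels, alpha):
    """Compute the score threshold from a held-out calibration set.

    Score = 1 - predicted probability of the true class. The threshold is
    the ceil((n + 1) * (1 - alpha))-th smallest calibration score.
    """
    scores = sorted(1.0 - probs[y] for probs, y in zip(cal_probs, cal_labels))
    n = len(scores)
    rank = math.ceil((n + 1) * (1 - alpha))
    if rank > n:
        # Too few calibration points for this alpha: include every class.
        return float("inf")
    return scores[rank - 1]

def prediction_set(probs, threshold):
    """Return every class whose score does not exceed the threshold."""
    return [k for k, p in enumerate(probs) if 1.0 - p <= threshold]

# Toy two-class calibration data (illustrative values only).
cal_probs = [[0.9, 0.1], [0.8, 0.2], [0.3, 0.7], [0.6, 0.4], [0.2, 0.8],
             [0.55, 0.45], [0.1, 0.9], [0.7, 0.3], [0.4, 0.6]]
cal_labels = [0, 0, 1, 0, 1, 1, 1, 0, 1]

threshold = conformal_threshold(cal_probs, cal_labels, alpha=0.1)
confident = prediction_set([0.95, 0.05], threshold)
ambiguous = prediction_set([0.5, 0.5], threshold)
print(threshold, confident, ambiguous)
```

A confident prediction yields a single-class set, while an ambiguous one yields both classes. Under the usual exchangeability assumption, sets built this way contain the true class with probability at least 1 - alpha.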

GBRIP: Granular Ball Representation for Imbalanced Partial Label Learning

Dec 19, 2024

Abstract: Partial label learning (PLL) is a complicated weakly supervised multi-classification task compounded by class imbalance. Existing methods rely only on inter-class pseudo-labeling from inter-class features, often overlooking the significant impact of intra-class imbalanced features combined with inter-class ones. To address these limitations, we introduce Granular Ball Representation for Imbalanced PLL (GBRIP), a novel framework for imbalanced PLL. GBRIP utilizes coarse-grained granular ball representation and multi-center loss to construct a granular ball-based feature space through unsupervised learning, effectively capturing the feature distribution within each class. GBRIP mitigates the impact of confusing features by systematically refining label disambiguation and estimating imbalance distributions. The novel multi-center loss function enhances learning by emphasizing the relationships between samples and their respective centers within the granular balls. Extensive experiments on standard benchmarks demonstrate that GBRIP outperforms existing state-of-the-art methods, offering a robust solution to the challenges of imbalanced PLL.