Mingjie Zhao

Break the Tie: Learning Cluster-Customized Category Relationships for Categorical Data Clustering

Nov 12, 2025

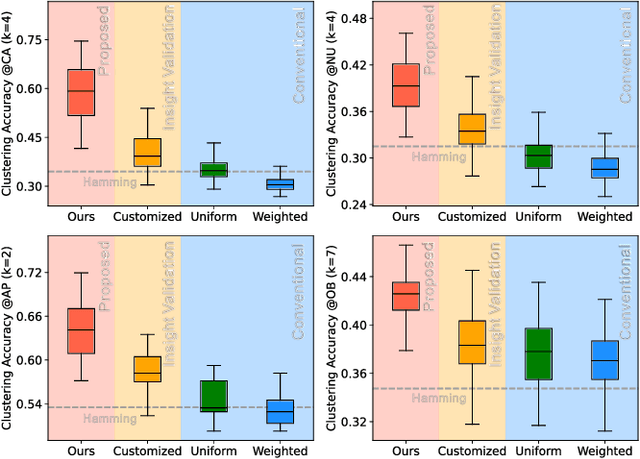

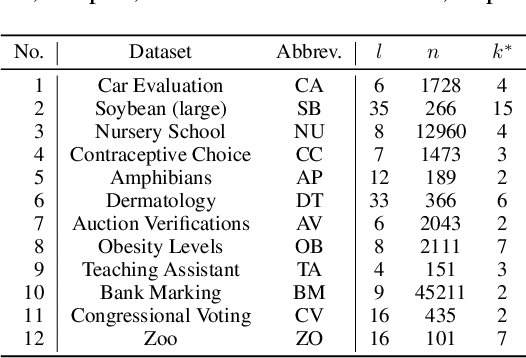

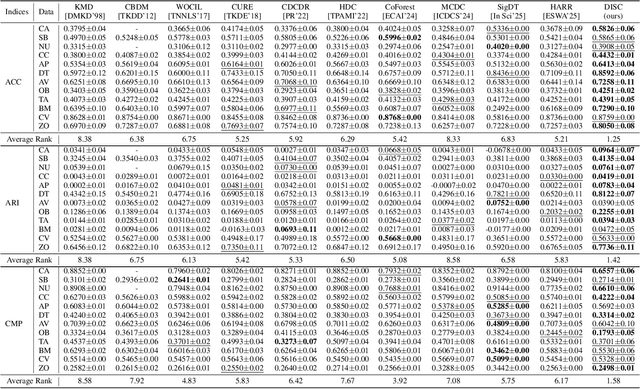

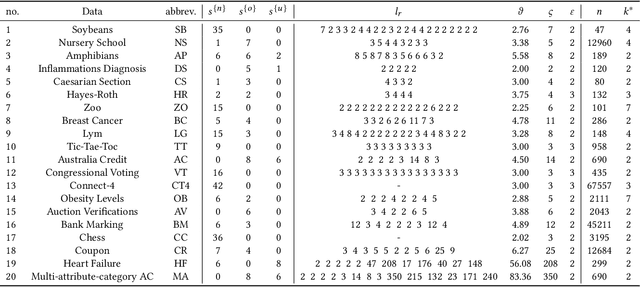

Abstract: Categorical attributes with qualitative values are ubiquitous in cluster analysis of real datasets. Unlike numerical attributes, which come with the Euclidean distance, categorical attributes lack well-defined relationships among their possible values (also called categories), which hampers the discovery of compact clusters in categorical data. Although most existing attempts focus on developing appropriate distance metrics, they typically assume a fixed topological relationship between categories when learning these metrics, which limits their adaptability to varying cluster structures and often leads to suboptimal clustering performance. This paper therefore breaks the intrinsic relationship tie among attribute categories and learns customized distance metrics that flexibly and accurately reveal various cluster distributions. As a result, the fitting ability of the clustering algorithm is significantly enhanced by the learnable category relationships. Moreover, the learned category relationships are proven to be compatible with the Euclidean distance metric, enabling a seamless extension to mixed datasets that include both numerical and categorical attributes. Comparative experiments with significance tests on 12 real benchmark datasets show the superior clustering accuracy of the proposed method, which achieves an average rank of 1.25, significantly better than the 5.21 average rank of the current best-performing method.
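
To make the idea of cluster-customized category relationships concrete, here is a minimal Python sketch, not the authors' algorithm. It assumes that each cluster stores its own table of category-to-category distances for every categorical attribute, and that an object's distance to a cluster is the sum of the squared learned categorical distances and the squared Euclidean distance on the numerical attributes, loosely mirroring the abstract's point about mixed data. The names `pair_distance`, `object_to_cluster_distance`, and `assign`, the table layout, and the toy distances are all hypothetical; how the tables are learned is not shown.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical representation: one lookup table per categorical attribute,
# mapping a category pair to a learned, cluster-specific distance.
DistTable = Dict[Tuple[str, str], float]


def pair_distance(table: DistTable, a: str, b: str) -> float:
    """Symmetric lookup; identical categories are at distance 0."""
    if a == b:
        return 0.0
    return table[(a, b)] if (a, b) in table else table[(b, a)]


def object_to_cluster_distance(
    cat_values: Sequence[str],
    num_values: Sequence[float],
    cluster: Tuple[Sequence[str], Sequence[float], Sequence[DistTable]],
) -> float:
    """Squared distance from a mixed object to one cluster.

    The categorical part uses the cluster's own distance tables (the assumed
    cluster-customized relationships); the numerical part is the ordinary
    squared Euclidean distance to the cluster center.
    """
    modes, center, tables = cluster
    cat_part = sum(
        pair_distance(t, x, m) ** 2 for t, x, m in zip(tables, cat_values, modes)
    )
    num_part = sum((x - c) ** 2 for x, c in zip(num_values, center))
    return cat_part + num_part


def assign(cat_values: Sequence[str], num_values: Sequence[float], clusters: List) -> int:
    """Index of the nearest cluster under the cluster-specific distances."""
    return min(
        range(len(clusters)),
        key=lambda k: object_to_cluster_distance(cat_values, num_values, clusters[k]),
    )


# Toy example: one categorical attribute (a color) and one numerical attribute.
clusters = [
    (["red"], [0.0], [{("red", "orange"): 0.2, ("red", "blue"): 1.0, ("orange", "blue"): 0.9}]),
    (["blue"], [0.0], [{("red", "orange"): 0.9, ("red", "blue"): 1.0, ("orange", "blue"): 0.3}]),
]

# Under simple matching, "orange" is equally far (distance 1) from both modes;
# the cluster-specific tables break that tie in favor of cluster 0.
print(assign(["orange"], [0.0], clusters))  # 0
```

Because each cluster keeps its own table, the same category pair can be close in one cluster and far in another, which is what lets learned relationships break ties that a single fixed metric such as simple matching cannot.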

Order Is All You Need for Categorical Data Clustering

Nov 19, 2024

Abstract: Categorical data composed of nominal-valued attributes are ubiquitous in knowledge discovery and data mining tasks. Due to the lack of a well-defined metric space, categorical data distributions are difficult to understand intuitively. Clustering is a popular technique for data analysis. However, the success of clustering often relies on reasonable distance metrics, which are exactly what categorical data naturally lack. Cluster analysis of categorical data is therefore considered a critical but challenging problem. This paper introduces the new finding that the order relation among attribute values is the decisive factor in clustering accuracy, and is also the key to understanding categorical data clusters. To obtain the orders automatically, we propose a new learning paradigm that allows joint learning of clusters and orders. Clustering with order learning turns out to achieve superior clustering accuracy, and the learned orders provide intuition for understanding the cluster distribution of categorical data. Extensive experiments with statistical evidence and case studies verify the effectiveness of the new "order is all you need" insight and the proposed method.
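
The following minimal Python sketch illustrates how an order over categories can induce a distance. It assumes, for illustration only, that an order places categories at evenly spaced positions and that the distance between two categories is their normalized position gap; the helper names and example orders are hypothetical, and the joint learning of orders and clusters described above is not implemented.

```python
from typing import Sequence


def order_distance(order: Sequence[str], a: str, b: str) -> float:
    """Distance between two categories of one attribute, given an order of its categories.

    The gap in positions is normalized by (number of categories - 1),
    so distances lie in [0, 1].
    """
    if len(order) < 2:
        return 0.0
    pos = {c: i for i, c in enumerate(order)}
    return abs(pos[a] - pos[b]) / (len(order) - 1)


def object_distance(orders: Sequence[Sequence[str]], x: Sequence[str], y: Sequence[str]) -> float:
    """Sum of per-attribute order-based distances between two categorical objects."""
    return sum(order_distance(o, a, b) for o, a, b in zip(orders, x, y))


# Hypothetical orders for two attributes (here fixed by hand, not learned).
orders = [
    ["primary", "secondary", "bachelor", "master", "phd"],
    ["small", "medium", "large"],
]
print(object_distance(orders, ["bachelor", "small"], ["master", "large"]))  # 1.25

# A different order over the same categories changes the distance.
print(order_distance(["small", "large", "medium"], "small", "large"))  # 0.5
```

Reordering the same categories changes the distances, and hence which objects look similar, which is consistent with the abstract's claim that the order relation drives clustering accuracy.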

Contrastive Learning-based User Identification with Limited Data on Smart Textiles

Sep 06, 2024

Abstract: Pressure-sensitive smart textiles are widely applied in healthcare, sports monitoring, and smart homes. The integration of devices embedded with pressure-sensing arrays is expected to enable comprehensive scene coverage and multi-device integration. However, identity recognition, a fundamental function in this context, relies on extensive device-specific datasets because pressure distributions vary across devices. To address this challenge, we propose a novel user identification method based on contrastive learning. We design two parallel branches to support user identification on new and existing devices, respectively, and employ supervised contrastive learning in the feature space to promote domain unification. When a new device is encountered, extensive data collection is not required; instead, users can be identified from limited data consisting of only a few simple postures. In experiments on two 8-subject pressure datasets (BedPressure and ChrPressure), the proposed method achieves user identification across 12 sitting scenarios using a dataset containing only 2 postures. The average recognition accuracy reaches 79.05%, an improvement of 2.62% over the best baseline model.
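
One widely used form of supervised contrastive learning is the SupCon loss of Khosla et al. (2020); the abstract does not state whether the method above uses exactly this formulation. The minimal Python sketch below computes it over a batch of embeddings labeled by user ID, so that samples from the same user, possibly recorded on different devices, are pulled together. The function names, the temperature default of 0.1, and the toy embeddings are assumptions for illustration.

```python
import math
from typing import List, Sequence


def _normalize(v: Sequence[float]) -> List[float]:
    """Project an embedding onto the unit sphere (assumes a non-zero vector)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def _dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def supcon_loss(
    embeddings: Sequence[Sequence[float]], labels: Sequence[int], temperature: float = 0.1
) -> float:
    """Supervised contrastive loss averaged over anchors that have at least one positive.

    Samples sharing a label (here, the same user) are positives for each other;
    every other sample in the batch appears in the softmax denominator.
    """
    z = [_normalize(v) for v in embeddings]
    n = len(z)
    total, anchors = 0.0, 0
    for i in range(n):
        positives = [p for p in range(n) if p != i and labels[p] == labels[i]]
        if not positives:
            continue
        # Stable log-sum-exp over all samples except the anchor itself.
        logits = [_dot(z[i], z[j]) / temperature for j in range(n) if j != i]
        m = max(logits)
        log_denom = m + math.log(sum(math.exp(s - m) for s in logits))
        # Negative log softmax probability of each positive, averaged over positives.
        total += sum(log_denom - _dot(z[i], z[p]) / temperature for p in positives) / len(positives)
        anchors += 1
    return total / anchors if anchors else 0.0


labels = [0, 0, 1, 1]  # toy user IDs
aligned = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]  # same user, similar features
mixed = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [0.1, 0.9]]    # same user, dissimilar features
print(supcon_loss(aligned, labels) < supcon_loss(mixed, labels))  # True
```

Minimizing this loss rewards embeddings in which a user's samples cluster together, which is the property a feature space needs if it is to identify users on a new device from only a few postures.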

MassNet: A Deep Learning Approach for Body Weight Extraction from A Single Pressure Image

Mar 17, 2023

Abstract: Body weight, as an essential physiological trait, is of considerable significance in many applications such as body management, rehabilitation, and drug dosing for patient-specific treatment. Previous work on body weight estimation is mainly vision-based, using 2D/3D, depth, or infrared images, and faces problems with illumination, occlusion, and especially privacy. A pressure-mapping mattress is a non-invasive, privacy-preserving tool for obtaining the pressure distribution image over the bed surface, which strongly correlates with the body weight of the person lying on it. To extract body weight from this image, we propose a deep learning-based model with a dual-branch network that extracts deep features and pose features, respectively. A contrastive learning module is combined with the deep-feature branch to help mine the factors shared across different postures of each subject. The two groups of features are then concatenated for body weight regression. To test the model's performance under different hardware and posture settings, we create a pressure image dataset of 10 subjects and 23 postures using a self-made pressure-sensing bedsheet. This dataset, released publicly with this paper, and an existing public dataset are used for validation. The results show that our model outperforms state-of-the-art algorithms on both datasets. Our research constitutes an important step toward fully automatic weight estimation in both clinical and at-home practice. Our dataset is available for research purposes at: https://github.com/USTCWzy/MassEstimation.
