Zhentao Guo

Marten: Visual Question Answering with Mask Generation for Multi-modal Document Understanding

Mar 18, 2025

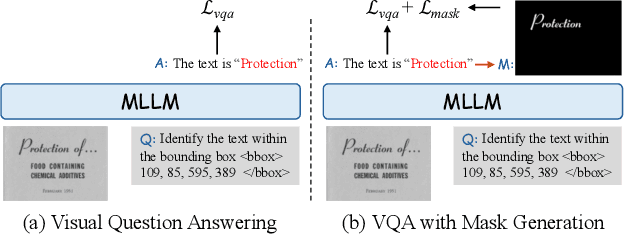

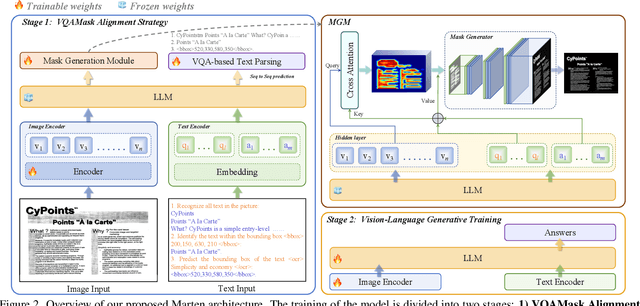

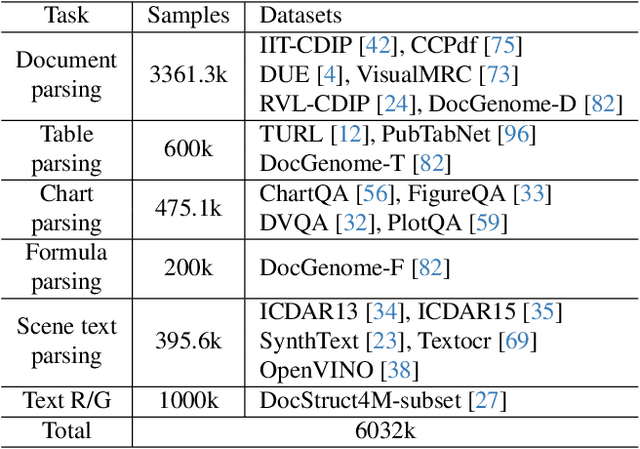

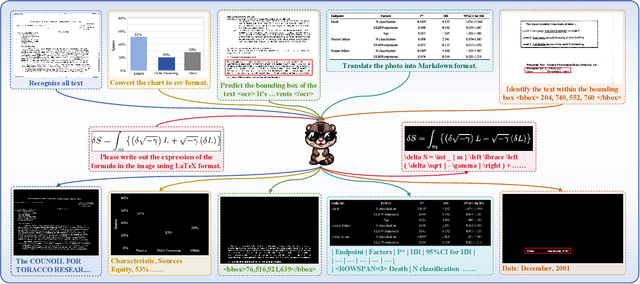

Abstract: Multi-modal Large Language Models (MLLMs) have introduced a novel dimension to document understanding, i.e., they endow large language models with visual comprehension capabilities; however, how to design a suitable image-text pre-training task for bridging the visual and language modality in document-level MLLMs remains underexplored. In this study, we introduce a novel visual-language alignment method that casts the key issue as a Visual Question Answering with Mask generation (VQAMask) task, optimizing two tasks simultaneously: VQA-based text parsing and mask generation. The former allows the model to implicitly align images and text at the semantic level. The latter introduces an additional mask generator (discarded during inference) to explicitly ensure alignment between visual texts within images and their corresponding image regions at a spatially-aware level. Together, they can prevent model hallucinations when parsing visual text and effectively promote spatially-aware feature representation learning. To support the proposed VQAMask task, we construct a comprehensive image-mask generation pipeline and provide a large-scale dataset with 6M data (MTMask6M). Subsequently, we demonstrate that introducing the proposed mask generation task yields competitive document-level understanding performance. Leveraging the proposed VQAMask, we introduce Marten, a training-efficient MLLM tailored for document-level understanding. Extensive experiments show that our Marten consistently achieves significant improvements among 8B-MLLMs in document-centric tasks. Code and datasets are available at https://github.com/PriNing/Marten.

Multimodal Large Language Models for Text-rich Image Understanding: A Comprehensive Review

Feb 23, 2025

Abstract: The recent emergence of Multi-modal Large Language Models (MLLMs) has introduced a new dimension to the Text-rich Image Understanding (TIU) field, with models demonstrating impressive and inspiring performance. However, their rapid evolution and widespread adoption have made it increasingly challenging to keep up with the latest advancements. To address this, we present a systematic and comprehensive survey to facilitate further research on TIU MLLMs. Initially, we outline the timeline, architecture, and pipeline of nearly all TIU MLLMs. Then, we review the performance of selected models on mainstream benchmarks. Finally, we explore promising directions, challenges, and limitations within the field.

Cross-PCR: A Robust Cross-Source Point Cloud Registration Framework

Dec 25, 2024

Abstract: Due to the density inconsistency and distribution difference between cross-source point clouds, previous methods fail in cross-source point cloud registration. We propose a density-robust feature extraction and matching scheme to achieve robust and accurate cross-source registration. To address the density inconsistency between cross-source data, we introduce a density-robust encoder for extracting density-robust features. To tackle the issue of challenging feature matching and few correct correspondences, we adopt a loose-to-strict matching pipeline with a "loose generation, strict selection" idea. Under it, we employ a one-to-many strategy to loosely generate initial correspondences. Subsequently, high-quality correspondences are strictly selected to achieve robust registration through sparse matching and dense matching. On the challenging Kinect-LiDAR scene in the cross-source 3DCSR dataset, our method improves feature matching recall by 63.5 percentage points (pp) and registration recall by 57.6 pp. It also achieves the best performance on 3DMatch, while maintaining robustness under diverse downsampling densities.
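
The "loose generation" step in the Cross-PCR abstract can be illustrated with a minimal sketch. It assumes that one-to-many matching means keeping the k nearest target descriptors for every source descriptor; the function name `one_to_many_matches`, the parameter `k`, and the toy 2-D descriptors are hypothetical and are not taken from the paper or its code.

```python
import math

def one_to_many_matches(src_feats, tgt_feats, k=3):
    """Return (source index, target index) pairs, k candidates per source descriptor."""
    matches = []
    for i, f in enumerate(src_feats):
        # Rank every target descriptor by Euclidean distance to this source descriptor.
        ranked = sorted(range(len(tgt_feats)), key=lambda j: math.dist(f, tgt_feats[j]))
        # Keep the k closest candidates instead of only the single best one.
        matches.extend((i, j) for j in ranked[:k])
    return matches

# Toy descriptors (hypothetical values, 2-D for readability).
src = [(0.0, 0.0), (1.0, 1.0)]
tgt = [(0.1, 0.0), (0.9, 1.0), (5.0, 5.0)]
pairs = one_to_many_matches(src, tgt, k=2)
print(pairs)
```

Keeping several candidates per point raises the chance that the correct correspondence is in the initial set, at the cost of more outliers for the later strict selection stage to reject.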

SGOR: Outlier Removal by Leveraging Semantic and Geometric Information for Robust Point Cloud Registration

Jul 08, 2024

Abstract: In this paper, we introduce a new outlier removal method that fully leverages geometric and semantic information to achieve robust registration. Current semantic-based registration methods use semantics only for point-to-point or instance semantic correspondence generation, which has two problems. First, these methods are highly dependent on the correctness of semantics. They perform poorly in scenarios with incorrect or sparse semantics. Second, the use of semantics is limited to correspondence generation, resulting in poor performance in weak-geometry scenes. To solve these problems, on the one hand, we propose secondary ground segmentation and loose semantic consistency based on regional voting. It improves the robustness to semantic correctness by reducing the dependence on single-point semantics. On the other hand, we propose semantic-geometric consistency for outlier removal, which makes full use of semantic information and significantly improves the quality of correspondences. In addition, a two-stage hypothesis verification is proposed, which solves the problem of incorrect transformation selection in weak-geometry scenes. On the outdoor dataset, our method demonstrates superior performance, improving registration recall by 22.5 percentage points and achieving better robustness under various conditions. Our code is available.

VRHCF: Cross-Source Point Cloud Registration via Voxel Representation and Hierarchical Correspondence Filtering

Mar 15, 2024

Abstract: The substantial gap between point cloud data collected from diverse sensors makes robust cross-source point cloud registration a formidable task. In response, we present a novel framework for point cloud registration with broad applicability, suitable for both homologous and cross-source registration scenarios. To tackle the issues arising from different densities and distributions in cross-source point cloud data, we introduce a feature representation based on spherical voxels. Furthermore, to address the numerous outliers and mismatches in cross-source registration, we propose a hierarchical correspondence filtering approach. This method progressively filters out mismatches, yielding a set of high-quality correspondences. Our method exhibits versatile applicability and excels in both traditional homologous registration and challenging cross-source registration scenarios. Specifically, in homologous registration using the 3DMatch dataset, we achieve the highest registration recall (RR) of 95.1% and an inlier ratio of 87.8%. In cross-source point cloud registration, our method attains the best RR on the 3DCSR dataset, a 9.3 percentage point improvement. The code is available at https://github.com/GuiyuZhao/VRHCF.

SphereNet: Learning a Noise-Robust and General Descriptor for Point Cloud Registration

Jul 18, 2023

Abstract: Point cloud registration estimates a transformation that aligns point clouds collected from different perspectives. In learning-based point cloud registration, a robust descriptor is vital for high-accuracy registration. However, most methods are susceptible to noise and generalize poorly to unseen datasets. Motivated by this, we introduce SphereNet to learn a noise-robust and unseen-general descriptor for point cloud registration. In our method, first, the spheroid generator builds a geometric domain based on spherical voxelization to encode initial features. Then, the spherical interpolation of the sphere is introduced to realize robustness against noise. Finally, a new spherical convolutional neural network with spherical integrity padding completes the extraction of descriptors, which reduces the loss of features and fully captures the geometric features. To evaluate our methods, a new benchmark 3DMatch-noise with strong noise is introduced. Extensive experiments are carried out on both indoor and outdoor datasets. Under high-intensity noise, SphereNet increases the feature matching recall by more than 25 percentage points on 3DMatch-noise. In addition, it sets a new state-of-the-art performance for the 3DMatch and 3DLoMatch benchmarks with 93.5% and 75.6% registration recall and also has the best generalization ability on unseen datasets.
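
As a rough illustration of what spherical voxelization can look like, the sketch below assigns a point in a keypoint's neighborhood to a (radial, azimuth, elevation) bin. It is only an assumption about the general idea; the function name `spherical_voxel_index`, the bin counts, and the binning rule are hypothetical and do not reproduce SphereNet's spheroid generator.

```python
import math

def spherical_voxel_index(point, center, radius, n_r=2, n_az=4, n_el=2):
    """Map a 3-D point to a (radial, azimuth, elevation) bin around center.

    Returns None when the point lies outside the support sphere.
    """
    dx, dy, dz = (p - c for p, c in zip(point, center))
    r = math.sqrt(dx * dx + dy * dy + dz * dz)
    if r >= radius:
        return None
    # Azimuth in [0, 2*pi), polar angle in [0, pi].
    az = math.atan2(dy, dx) % (2 * math.pi)
    el = math.acos(max(-1.0, min(1.0, dz / r))) if r > 0 else 0.0
    i_r = int(r / radius * n_r)
    # Clamp so angles on the upper boundary fall into the last bin.
    i_az = min(int(az / (2 * math.pi) * n_az), n_az - 1)
    i_el = min(int(el / math.pi * n_el), n_el - 1)
    return (i_r, i_az, i_el)

origin = (0.0, 0.0, 0.0)
inner = spherical_voxel_index((0.5, 0.0, 0.0), origin, radius=2.0)
outer = spherical_voxel_index((0.0, 0.0, 1.5), origin, radius=2.0)
outside = spherical_voxel_index((3.0, 0.0, 0.0), origin, radius=2.0)
print(inner, outer, outside)
```

Counting how many neighborhood points fall into each bin would give a simple occupancy grid over the sphere, which is one plausible form of initial feature that a network could then refine.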
