Jianhua Li

Unifying Ranking and Generation in Query Auto-Completion via Retrieval-Augmented Generation and Multi-Objective Alignment

Feb 03, 2026

Abstract: Query Auto-Completion (QAC) suggests query completions as users type, helping them articulate intent and reach results more efficiently. Existing approaches face fundamental challenges: traditional retrieve-and-rank pipelines have limited long-tail coverage and require extensive feature engineering, while recent generative methods suffer from hallucination and safety risks. We present a unified framework that reformulates QAC as end-to-end list generation through Retrieval-Augmented Generation (RAG) and multi-objective Direct Preference Optimization (DPO). Our approach combines three key innovations: (1) reformulating QAC as end-to-end list generation with multi-objective optimization; (2) defining and deploying a suite of rule-based, model-based, and LLM-as-judge verifiers for QAC, and using them in a comprehensive methodology that combines RAG, multi-objective DPO, and iterative critique-revision for high-quality synthetic data; (3) a hybrid serving architecture enabling efficient production deployment under strict latency constraints. Evaluation on a large-scale commercial search platform demonstrates substantial improvements: offline metrics show gains across all dimensions, human evaluation yields +0.40 to +0.69 preference scores, and a controlled online experiment achieves 5.44% reduction in keystrokes and 3.46% increase in suggestion adoption, validating that unified generation with RAG and multi-objective alignment provides an effective solution for production QAC. This work represents a paradigm shift to end-to-end generation powered by large language models, RAG, and multi-objective alignment, establishing a production-validated framework that can benefit the broader search and recommendation industry.
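To make the DPO objective named in this abstract concrete, here is a minimal sketch of the standard per-pair DPO loss together with one possible way to combine several objectives. The weighted sum over objectives, the objective names, and the function names are assumptions made for illustration; the abstract does not say how the objectives are actually combined.

```python
import math


def neg_log_sigmoid(x: float) -> float:
    """Numerically stable -log(sigmoid(x))."""
    if x >= 0:
        return math.log1p(math.exp(-x))
    return -x + math.log1p(math.exp(x))


def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """Standard DPO loss for one preference pair.

    Arguments are sequence log-probabilities of the chosen and rejected
    outputs under the trained policy and under the frozen reference model.
    """
    margin = (policy_chosen - ref_chosen) - (policy_rejected - ref_rejected)
    return neg_log_sigmoid(beta * margin)


def multi_objective_dpo_loss(pairs_by_objective, weights, beta=0.1):
    """ASSUMPTION: objectives are combined as a weighted sum of mean DPO losses.

    pairs_by_objective maps a hypothetical objective name (e.g. "relevance")
    to a list of (policy_chosen, policy_rejected, ref_chosen, ref_rejected).
    """
    total = 0.0
    for objective, pairs in pairs_by_objective.items():
        mean_loss = sum(dpo_loss(*pair, beta=beta) for pair in pairs) / len(pairs)
        total += weights[objective] * mean_loss
    return total
```

When the policy and the reference agree on both outputs, the margin is zero and the per-pair loss equals log 2. The loss falls below that value as the policy raises the chosen output relative to the rejected one.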

HoneyTrap: Deceiving Large Language Model Attackers to Honeypot Traps with Resilient Multi-Agent Defense

Jan 07, 2026

Abstract: Jailbreak attacks pose significant threats to large language models (LLMs), enabling attackers to bypass safeguards. However, existing reactive defense approaches struggle to keep up with the rapidly evolving multi-turn jailbreaks, where attackers continuously deepen their attacks to exploit vulnerabilities. To address this critical challenge, we propose HoneyTrap, a novel deceptive LLM defense framework leveraging collaborative defenders to counter jailbreak attacks. It integrates four defensive agents, Threat Interceptor, Misdirection Controller, Forensic Tracker, and System Harmonizer, each performing a specialized security role and collaborating to complete a deceptive defense. To ensure a comprehensive evaluation, we introduce MTJ-Pro, a challenging multi-turn progressive jailbreak dataset that combines seven advanced jailbreak strategies designed to gradually deepen attack strategies across multi-turn attacks. In addition, we present two novel metrics: Mislead Success Rate (MSR) and Attack Resource Consumption (ARC), which provide more nuanced assessments of deceptive defense beyond conventional measures. Experimental results on GPT-4, GPT-3.5-turbo, Gemini-1.5-pro, and LLaMa-3.1 demonstrate that HoneyTrap achieves an average reduction of 68.77% in attack success rates compared to state-of-the-art baselines. Notably, even in a dedicated adaptive attacker setting with intensified conditions, HoneyTrap remains resilient, leveraging deceptive engagement to prolong interactions, significantly increasing the time and computational costs required for successful exploitation. Unlike simple rejection, HoneyTrap strategically wastes attacker resources without impacting benign queries, improving MSR and ARC by 118.11% and 149.16%, respectively.

SemCovert: Secure and Covert Video Transmission via Deep Semantic-Level Hiding

Dec 23, 2025

Abstract: Video semantic communication, praised for its transmission efficiency, still faces critical challenges related to privacy leakage. Traditional security techniques like steganography and encryption are challenging to apply since they are not inherently robust against semantic-level transformations and abstractions. Moreover, the temporal continuity of video enables framewise statistical modeling over extended periods, which increases the risk of exposing distributional anomalies and reconstructing hidden content. To address these challenges, we propose SemCovert, a deep semantic-level hiding framework for secure and covert video transmission. SemCovert introduces a pair of co-designed models, namely the semantic hiding model and the secret semantic extractor, which are seamlessly integrated into the semantic communication pipeline. This design enables authorized receivers to reliably recover hidden information, while keeping it imperceptible to regular users. To further improve resistance to analysis, we introduce a randomized semantic hiding strategy, which breaks the determinism of embedding and introduces unpredictable distribution patterns. The experimental results demonstrate that SemCovert effectively mitigates potential eavesdropping and detection risks while reliably concealing secret videos during transmission. Meanwhile, video quality suffers only minor degradation, preserving transmission fidelity. These results confirm SemCovert's effectiveness in enabling secure and covert transmission without compromising semantic communication performance.

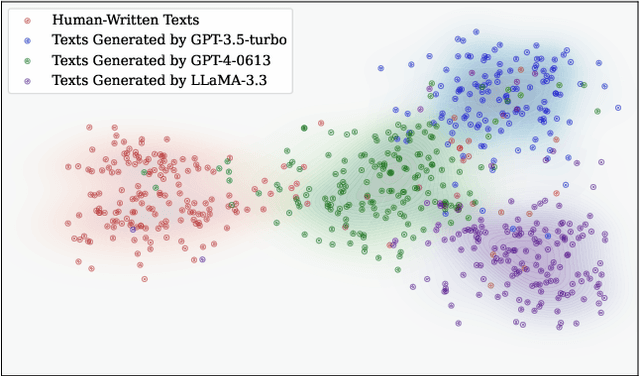

StyleDecipher: Robust and Explainable Detection of LLM-Generated Texts with Stylistic Analysis

Oct 14, 2025

Abstract: With the increasing integration of large language models (LLMs) into open-domain writing, detecting machine-generated text has become a critical task for ensuring content authenticity and trust. Existing approaches rely on statistical discrepancies or model-specific heuristics to distinguish between LLM-generated and human-written text. However, these methods struggle in real-world scenarios due to limited generalization, vulnerability to paraphrasing, and lack of explainability, particularly when facing stylistic diversity or hybrid human-AI authorship. In this work, we propose StyleDecipher, a robust and explainable detection framework that revisits LLM-generated text detection using combined feature extractors to quantify stylistic differences. By jointly modeling discrete stylistic indicators and continuous stylistic representations derived from semantic embeddings, StyleDecipher captures distinctive style-level divergences between human and LLM outputs within a unified representation space. This framework enables accurate, explainable, and domain-agnostic detection without requiring access to model internals or labeled segments. Extensive experiments across five diverse domains, including news, code, essays, reviews, and academic abstracts, demonstrate that StyleDecipher consistently achieves state-of-the-art in-domain accuracy. Moreover, in cross-domain evaluations, it surpasses existing baselines by up to 36.30%, while maintaining robustness against adversarial perturbations and mixed human-AI content. Further qualitative and quantitative analysis confirms that stylistic signals provide explainable evidence for distinguishing machine-generated text. Our source code can be accessed at https://github.com/SiyuanLi00/StyleDecipher.

Fre-CW: Targeted Attack on Time Series Forecasting using Frequency Domain Loss

Aug 12, 2025

Abstract: Transformer-based models have made significant progress in time series forecasting. However, a key limitation of deep learning models is their susceptibility to adversarial attacks, which has not been studied enough in the context of time series prediction. In contrast to areas such as computer vision, where adversarial robustness has been extensively studied, frequency domain features of time series data play an important role in the prediction task but have not been sufficiently explored in terms of adversarial attacks. This paper proposes a time series prediction attack algorithm based on frequency domain loss. Specifically, we adapt an attack method originally designed for classification tasks to the prediction field and optimize the adversarial samples using both time-domain and frequency-domain losses. To the best of our knowledge, there is no relevant research on using frequency information for time-series adversarial attacks. Our experimental results show that these current time series prediction models are vulnerable to adversarial attacks, and our approach achieves excellent performance on major time series forecasting datasets.
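As an illustration of combining time-domain and frequency-domain losses, the sketch below scores how far a forecast is from an attacker-chosen target in both domains. The mean-squared time loss, the amplitude-spectrum distance, the weight `alpha`, and all function names are assumptions for this example; the abstract does not specify the exact loss terms, and the optimization loop that perturbs the model input is omitted.

```python
import cmath
import math


def dft(x):
    """Naive discrete Fourier transform of a real-valued sequence."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def time_loss(pred, target):
    """Mean squared error between forecast and attack target."""
    return sum((p - q) ** 2 for p, q in zip(pred, target)) / len(pred)


def freq_loss(pred, target):
    """Mean squared difference between the two amplitude spectra."""
    fp, ft = dft(pred), dft(target)
    return sum((abs(a) - abs(b)) ** 2 for a, b in zip(fp, ft)) / len(pred)


def combined_loss(pred, target, alpha=0.5):
    """ASSUMPTION: the two terms are mixed with a single weight alpha."""
    return time_loss(pred, target) + alpha * freq_loss(pred, target)
```

An attacker would minimize `combined_loss` between the model's forecast on the perturbed input and the desired target series. Setting `alpha=0` reduces it to a purely time-domain objective.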

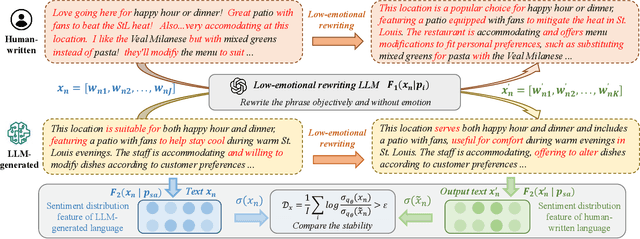

Model-Agnostic Sentiment Distribution Stability Analysis for Robust LLM-Generated Texts Detection

Aug 09, 2025

Abstract: The rapid advancement of large language models (LLMs) has resulted in increasingly sophisticated AI-generated content, posing significant challenges in distinguishing LLM-generated text from human-written language. Existing detection methods, primarily based on lexical heuristics or fine-tuned classifiers, often suffer from limited generalizability and are vulnerable to paraphrasing, adversarial perturbations, and cross-domain shifts. In this work, we propose SentiDetect, a model-agnostic framework for detecting LLM-generated text by analyzing the divergence in sentiment distribution stability. Our method is motivated by the empirical observation that LLM outputs tend to exhibit emotionally consistent patterns, whereas human-written texts display greater emotional variability. To capture this phenomenon, we define two complementary metrics: sentiment distribution consistency and sentiment distribution preservation, which quantify stability under sentiment-altering and semantic-preserving transformations. We evaluate SentiDetect on five diverse datasets and a range of advanced LLMs, including Gemini-1.5-Pro, Claude-3, GPT-4-0613, and LLaMa-3.3. Experimental results demonstrate its superiority over state-of-the-art baselines, with over 16% and 11% F1 score improvements on Gemini-1.5-Pro and GPT-4-0613, respectively. Moreover, SentiDetect shows greater robustness to paraphrasing, adversarial attacks, and text length variations, outperforming existing detectors in challenging scenarios.

AuditVotes: A Framework Towards More Deployable Certified Robustness for Graph Neural Networks

Mar 29, 2025

Abstract: Despite advancements in Graph Neural Networks (GNNs), adaptive attacks continue to challenge their robustness. Certified robustness based on randomized smoothing has emerged as a promising solution, offering provable guarantees that a model's predictions remain stable under adversarial perturbations within a specified range. However, existing methods face a critical trade-off between accuracy and robustness, as achieving stronger robustness requires introducing greater noise into the input graph. This excessive randomization degrades data quality and disrupts prediction consistency, limiting the practical deployment of certifiably robust GNNs in real-world scenarios where both accuracy and robustness are essential. To address this challenge, we propose AuditVotes, the first framework to achieve both high clean accuracy and certifiably robust accuracy for GNNs. It integrates randomized smoothing with two key components, augmentation and conditional smoothing (the "au" and "dit" of its name), aiming to improve data quality and prediction consistency. The augmentation, acting as a pre-processing step, de-noises the randomized graph, significantly improving data quality and clean accuracy. The conditional smoothing, serving as a post-processing step, employs a filtering function to selectively count votes, thereby filtering low-quality predictions and improving voting consistency. Extensive experimental results demonstrate that AuditVotes significantly enhances clean accuracy, certified robustness, and empirical robustness while maintaining high computational efficiency. Notably, compared to baseline randomized smoothing, AuditVotes improves clean accuracy by 437.1% and certified accuracy by 409.3% when the attacker can arbitrarily insert 20 edges on the Cora-ML dataset, representing a substantial step toward deploying certifiably robust GNNs in real-world applications.
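The idea of selectively counting votes can be sketched as a majority vote that only admits predictions passing a filter. The confidence-threshold filter, the abstention rule, and the function names below are assumptions for illustration; the abstract does not describe the actual filtering function, and the certification step that would consume the vote counts is omitted.

```python
from collections import Counter


def confidence_filter(threshold):
    """ASSUMPTION: a vote is kept when its confidence reaches the threshold."""
    return lambda label, score: score >= threshold


def conditional_vote(predictions, keep):
    """Majority vote over the predictions accepted by the filter `keep`.

    predictions: list of (label, confidence) pairs, one per randomized
    copy of the input graph.
    Returns (winning_label, votes_for_winner, votes_counted), or
    (None, 0, 0) to abstain when every vote is filtered out.
    """
    kept = [label for label, score in predictions if keep(label, score)]
    if not kept:
        return None, 0, 0
    label, count = Counter(kept).most_common(1)[0]
    return label, count, len(kept)
```

With the filter disabled (a threshold of 0), this reduces to plain majority voting over all randomized samples. Raising the threshold discards low-confidence votes before counting.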

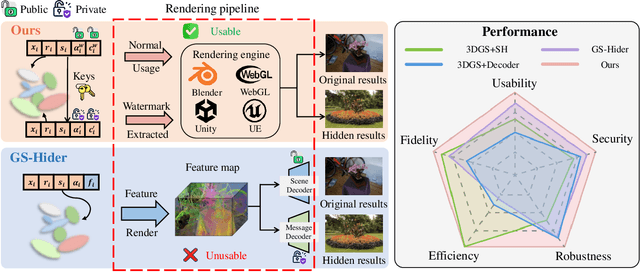

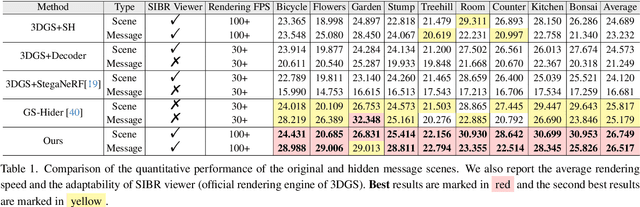

Splats in Splats: Embedding Invisible 3D Watermark within Gaussian Splatting

Dec 04, 2024

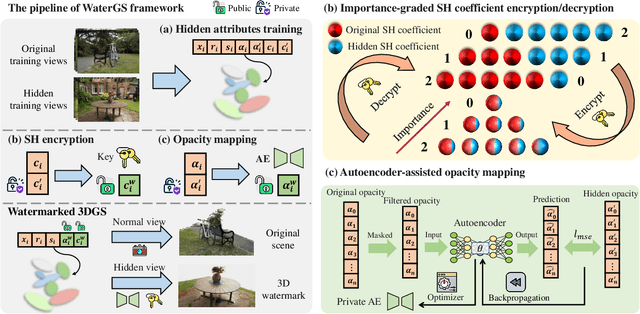

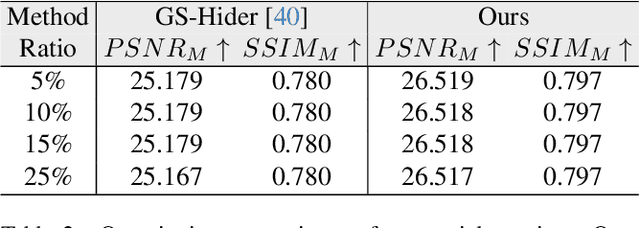

Abstract: 3D Gaussian splatting (3DGS) has demonstrated impressive 3D reconstruction performance with explicit scene representations. Given the widespread application of 3DGS in 3D reconstruction and generation tasks, there is an urgent need to protect the copyright of 3DGS assets. However, existing copyright protection techniques for 3DGS overlook the usability of 3D assets, posing challenges for practical deployment. Here we describe WaterGS, the first 3DGS watermarking framework that embeds 3D content in 3DGS itself without modifying any attributes of the vanilla 3DGS. To achieve this, we take a deep insight into spherical harmonics (SH) and devise an importance-graded SH coefficient encryption strategy to embed the hidden SH coefficients. Furthermore, we employ a convolutional autoencoder to establish a mapping between the original Gaussian primitives' opacity and the hidden Gaussian primitives' opacity. Extensive experiments indicate that WaterGS significantly outperforms existing 3D steganography techniques, with 5.31% higher scene fidelity and 3× faster rendering speed, while ensuring security, robustness, and user experience. Codes and data will be released at https://water-gs.github.io.

SFR-GNN: Simple and Fast Robust GNNs against Structural Attacks

Sep 01, 2024

Abstract: Graph Neural Networks (GNNs) have demonstrated commendable performance for graph-structured data. Yet, GNNs are often vulnerable to adversarial structural attacks as embedding generation relies on graph topology. Existing efforts are dedicated to purifying the maliciously modified structure or applying adaptive aggregation, thereby enhancing the robustness against adversarial structural attacks. It is inevitable for a defender to consume heavy computational costs due to lacking prior knowledge about modified structures. To this end, we propose an efficient defense method, called Simple and Fast Robust Graph Neural Network (SFR-GNN), supported by mutual information theory. The SFR-GNN first pre-trains a GNN model using node attributes and then fine-tunes it over the modified graph in the manner of contrastive learning, which is free of purifying modified structures and adaptive aggregation, thus achieving great efficiency gains. Consequently, SFR-GNN exhibits a 24% to 162% speedup compared to advanced robust models, demonstrating superior robustness for node classification tasks.

Performance Analysis of Photon-Limited Free-Space Optical Communications with Practical Photon-Counting Receivers

Aug 24, 2024

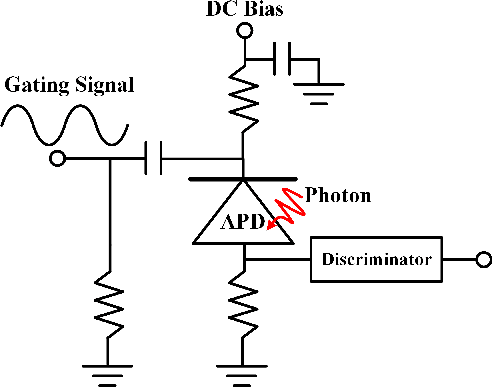

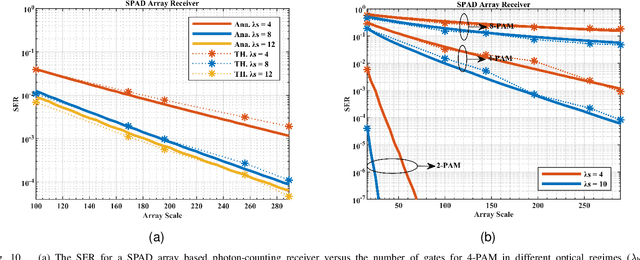

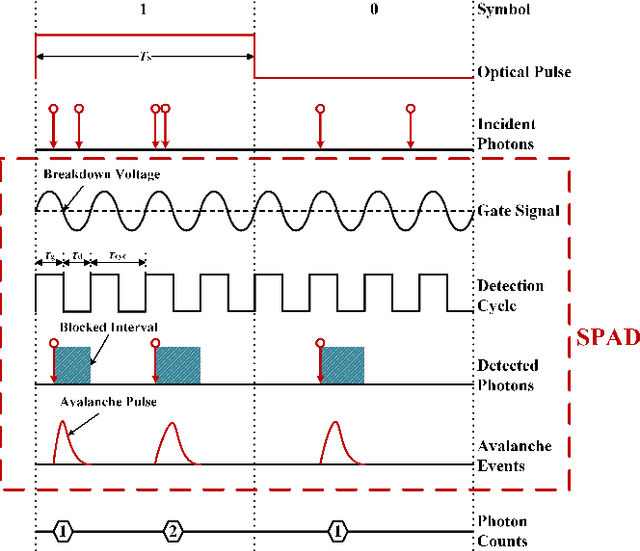

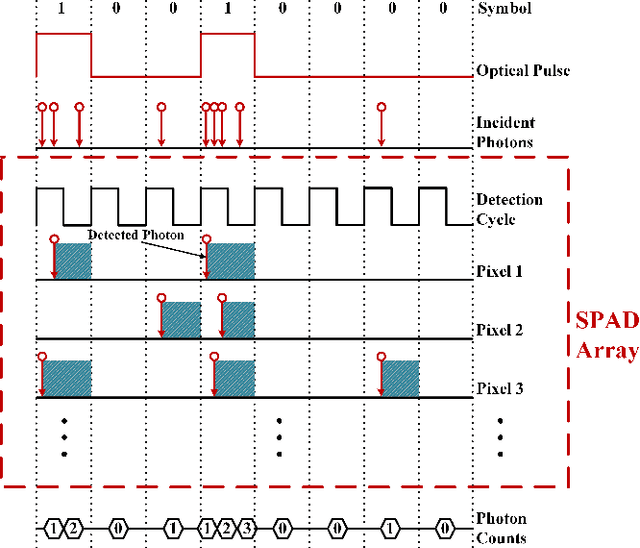

Abstract: The non-ideal factors of practical photon-counting receivers are recognized as a significant challenge for long-distance photon-limited free-space optical (FSO) communication systems. This paper presents a comprehensive analytical framework for modeling the statistical properties of time-gated single-photon avalanche diode (TG-SPAD) based photon-counting receivers in the presence of dead time, non-photon-number-resolving behavior, and afterpulsing effects. Drawing upon the non-Markovian characteristic of the afterpulsing effect, we formulate a closed-form approximation for the probability mass function (PMF) of photon counts when high-order pulse amplitude modulation (PAM) is used. Unlike the photon counts from a perfect photon-counting receiver, which adhere to a Poisson arrival process, the photon counts from a practical TG-SPAD based receiver are instead approximated by a binomial distribution. Additionally, by employing the maximum likelihood (ML) criterion, we derive a refined closed-form formula for determining the threshold in high-order PAM, thereby facilitating the development of an analytical model for the symbol error rate (SER). Utilizing this analytical SER model, the system performance is investigated. The numerical results underscore the crucial need to suppress background radiation below the tolerated threshold and to maintain a sufficient number of gates in order to achieve a target SER.
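The binomial count model and the ML threshold can be illustrated with a simplified sketch. It assumes each of `n` gates registers at most one click with probability 1 - exp(-mean photons per gate), which ignores dead time and afterpulsing, and it uses the textbook likelihood-ratio threshold between two binomial distributions. This is not the paper's refined formula, and all function names are hypothetical.

```python
import math


def gate_click_prob(mean_photons_per_gate):
    """ASSUMPTION: an ideal gate clicks if at least one Poisson photon arrives."""
    return 1.0 - math.exp(-mean_photons_per_gate)


def binom_pmf(k, n, p):
    """Probability of k clicks in n independent gates."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)


def ml_threshold(n, p_low, p_high):
    """Count at which the two binomial likelihoods are equal (p_low < p_high).

    Obtained by setting the likelihood ratio of Binomial(n, p_high) to
    Binomial(n, p_low) equal to 1 and solving for k.
    """
    num = n * math.log((1 - p_low) / (1 - p_high))
    den = math.log(p_high * (1 - p_low) / (p_low * (1 - p_high)))
    return num / den


def two_level_error_rate(n, p_low, p_high):
    """Error probability for two equiprobable levels under ML detection."""
    k_min = math.ceil(ml_threshold(n, p_low, p_high))  # decide "high" if k >= k_min
    false_high = sum(binom_pmf(k, n, p_low) for k in range(k_min, n + 1))
    false_low = sum(binom_pmf(k, n, p_high) for k in range(0, k_min))
    return 0.5 * (false_high + false_low)
```

For high-order PAM, the same threshold would be evaluated between each pair of adjacent levels. Increasing `n` (more gates) separates the two count distributions and lowers the error rate, in line with the abstract's remark on maintaining a sufficient number of gates.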
