Yinwei Wei

Reasoning in the Dark: Interleaved Vision-Text Reasoning in Latent Space

Oct 14, 2025

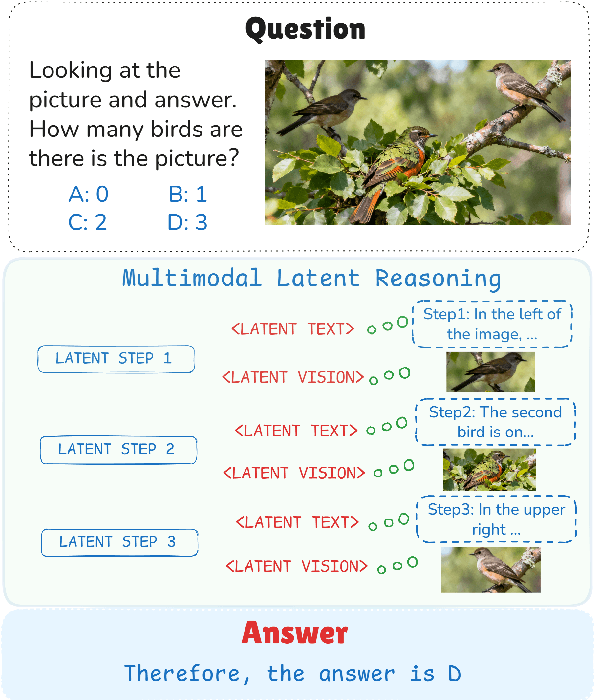

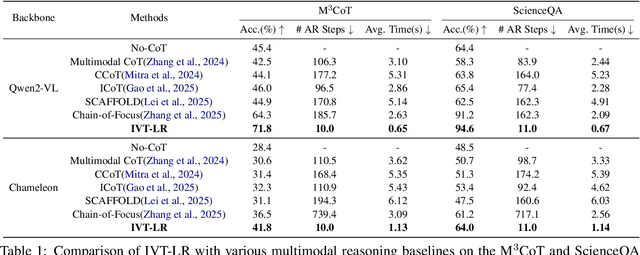

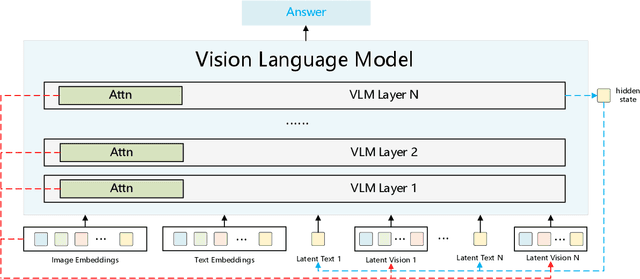

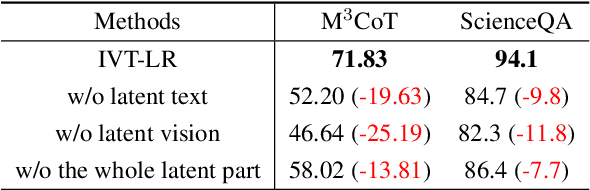

Abstract: Multimodal reasoning aims to enhance the capabilities of MLLMs by incorporating intermediate reasoning steps before reaching the final answer. It has evolved from text-only reasoning to the integration of visual information, enabling the thought process to be conveyed through both images and text. Despite its effectiveness, current multimodal reasoning methods depend on explicit reasoning steps that require labor-intensive vision-text annotations and inherently introduce significant inference latency. To address these issues, we introduce multimodal latent reasoning with the advantages of multimodal representation, reduced annotation, and inference efficiency. To facilitate it, we propose Interleaved Vision-Text Latent Reasoning (IVT-LR), which injects both visual and textual information into the reasoning process within the latent space. Specifically, IVT-LR represents each reasoning step by combining two implicit parts: latent text (the hidden states from the previous step) and latent vision (a set of selected image embeddings). We further introduce a progressive multi-stage training strategy to enable MLLMs to perform the above multimodal latent reasoning steps. Experiments on M3CoT and ScienceQA demonstrate that our IVT-LR method achieves an average performance increase of 5.45% in accuracy, while simultaneously achieving a speed increase of over 5 times compared to existing approaches. Code available at https://github.com/FYYDCC/IVT-LR.
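
As a rough illustration of how one latent reasoning step could be assembled from the two parts named in the abstract, here is a minimal sketch. The abstract does not say how image embeddings are selected, so ranking them by an attention score is an assumption, and `select_latent_vision`, `build_latent_step`, and the toy vectors are hypothetical names and data, not taken from the IVT-LR code.

```python
def select_latent_vision(image_embeddings, attention_scores, k):
    """Pick the k image embeddings with the highest scores (assumed selection rule)."""
    ranked = sorted(range(len(image_embeddings)),
                    key=lambda i: attention_scores[i], reverse=True)
    kept = sorted(ranked[:k])  # keep the selected patches in their original order
    return [image_embeddings[i] for i in kept]


def build_latent_step(prev_hidden_state, image_embeddings, attention_scores, k=2):
    """One latent step = latent text (previous hidden state) + latent vision."""
    latent_text = [prev_hidden_state]
    latent_vision = select_latent_vision(image_embeddings, attention_scores, k)
    return latent_text + latent_vision


# Toy data: one hidden state and four image patch embeddings (all hypothetical).
prev_hidden = [0.1, 0.2, 0.3]
patches = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [0.5, 0.5, 0.0]]
scores = [0.05, 0.60, 0.10, 0.25]

step_inputs = build_latent_step(prev_hidden, patches, scores, k=2)
```

In this sketch the resulting sequence would be fed back to the model as input embeddings for the next step, so no intermediate text or image is decoded.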

MIST: Towards Multi-dimensional Implicit Bias and Stereotype Evaluation of LLMs via Theory of Mind

Jun 17, 2025

Abstract: Theory of Mind (ToM) in Large Language Models (LLMs) refers to their capacity for reasoning about mental states, yet failures in this capacity often manifest as systematic implicit bias. Evaluating this bias is challenging, as conventional direct-query methods are susceptible to social desirability effects and fail to capture its subtle, multi-dimensional nature. To this end, we propose an evaluation framework that leverages the Stereotype Content Model (SCM) to reconceptualize bias as a multi-dimensional failure in ToM across Competence, Sociability, and Morality. The framework introduces two indirect tasks: the Word Association Bias Test (WABT) to assess implicit lexical associations and the Affective Attribution Test (AAT) to measure covert affective leanings, both designed to probe latent stereotypes without triggering model avoidance. Extensive experiments on 8 state-of-the-art LLMs demonstrate our framework's capacity to reveal complex bias structures, including pervasive sociability bias, multi-dimensional divergence, and asymmetric stereotype amplification, thereby providing a more robust methodology for identifying the structural nature of implicit bias.

KEPLA: A Knowledge-Enhanced Deep Learning Framework for Accurate Protein-Ligand Binding Affinity Prediction

Jun 16, 2025

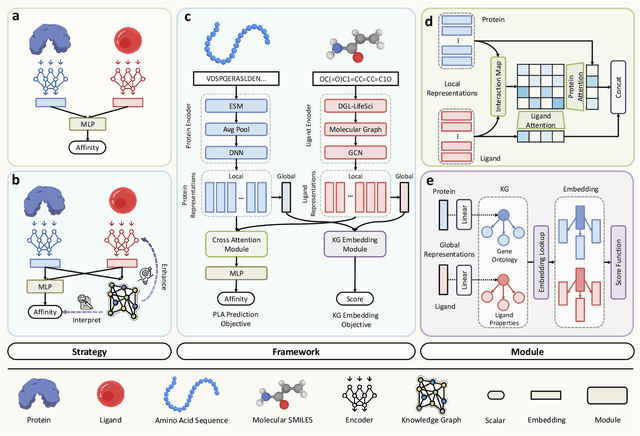

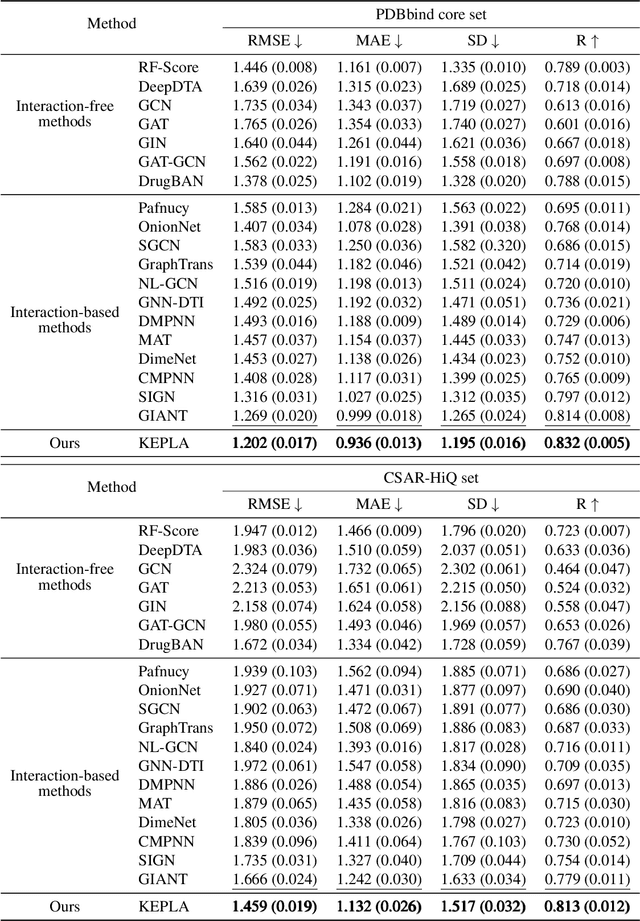

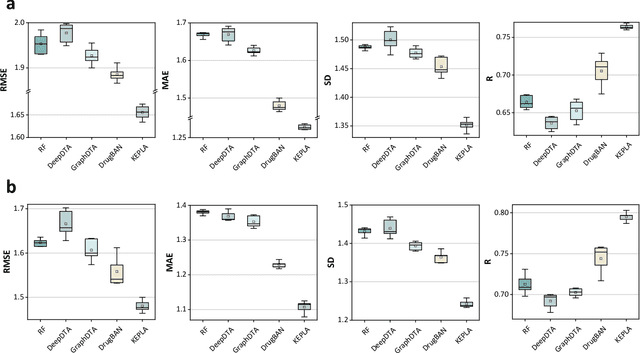

Abstract: Accurate prediction of protein-ligand binding affinity is critical for drug discovery. While recent deep learning approaches have demonstrated promising results, they often rely solely on structural features, overlooking valuable biochemical knowledge associated with binding affinity. To address this limitation, we propose KEPLA, a novel deep learning framework that explicitly integrates prior knowledge from Gene Ontology and ligand properties of proteins and ligands to enhance prediction performance. KEPLA takes protein sequences and ligand molecular graphs as input and optimizes two complementary objectives: (1) aligning global representations with knowledge graph relations to capture domain-specific biochemical insights, and (2) leveraging cross attention between local representations to construct fine-grained joint embeddings for prediction. Experiments on two benchmark datasets across both in-domain and cross-domain scenarios demonstrate that KEPLA consistently outperforms state-of-the-art baselines. Furthermore, interpretability analyses based on knowledge graph relations and cross attention maps provide valuable insights into the underlying predictive mechanisms.

Mitigating Hallucination Through Theory-Consistent Symmetric Multimodal Preference Optimization

Jun 13, 2025

Abstract: Direct Preference Optimization (DPO) has emerged as an effective approach for mitigating hallucination in Multimodal Large Language Models (MLLMs). Although existing methods have achieved significant progress by utilizing vision-oriented contrastive objectives to enhance MLLMs' attention to visual inputs and hence reduce hallucination, they suffer from a non-rigorous optimization objective and indirect preference supervision. To address these limitations, we propose Symmetric Multimodal Preference Optimization (SymMPO), which conducts symmetric preference learning with direct preference supervision (i.e., response pairs) for visual understanding enhancement, while maintaining rigorous theoretical alignment with standard DPO. In addition to conventional ordinal preference learning, SymMPO introduces a preference margin consistency loss to quantitatively regulate the preference gap between symmetric preference pairs. Comprehensive evaluation across five benchmarks demonstrates SymMPO's superior performance, validating its effectiveness in hallucination mitigation of MLLMs.
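
For reference, the standard DPO objective that the abstract says SymMPO stays aligned with can be written for a single preference pair as below. This is a minimal sketch of plain DPO only; it does not reproduce SymMPO's symmetric pairs or its preference margin consistency loss, which the abstract does not specify. The function names and the default `beta=0.1` are illustrative assumptions.

```python
import math


def log_sigmoid(x):
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def dpo_loss(policy_logp_chosen, policy_logp_rejected,
             ref_logp_chosen, ref_logp_rejected, beta=0.1):
    """Standard DPO loss for one (chosen, rejected) response pair.

    Inputs are summed log-probabilities of each response under the policy
    being trained and under the frozen reference model.
    """
    chosen_reward = beta * (policy_logp_chosen - ref_logp_chosen)
    rejected_reward = beta * (policy_logp_rejected - ref_logp_rejected)
    return -log_sigmoid(chosen_reward - rejected_reward)


# When the policy equals the reference, the implicit reward gap is zero.
loss_at_init = dpo_loss(-1.0, -1.0, -1.0, -1.0)
# When the policy favors the chosen response more than the reference does, loss drops.
loss_improved = dpo_loss(-1.0, -3.0, -2.0, -2.0)
```

At initialization the loss equals log 2, and it falls as the policy raises the chosen response's likelihood relative to the rejected one.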

Dynamic Multimodal Fusion via Meta-Learning Towards Micro-Video Recommendation

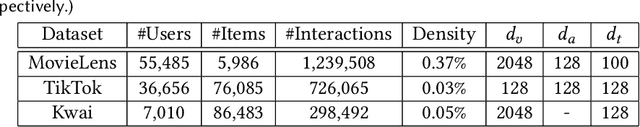

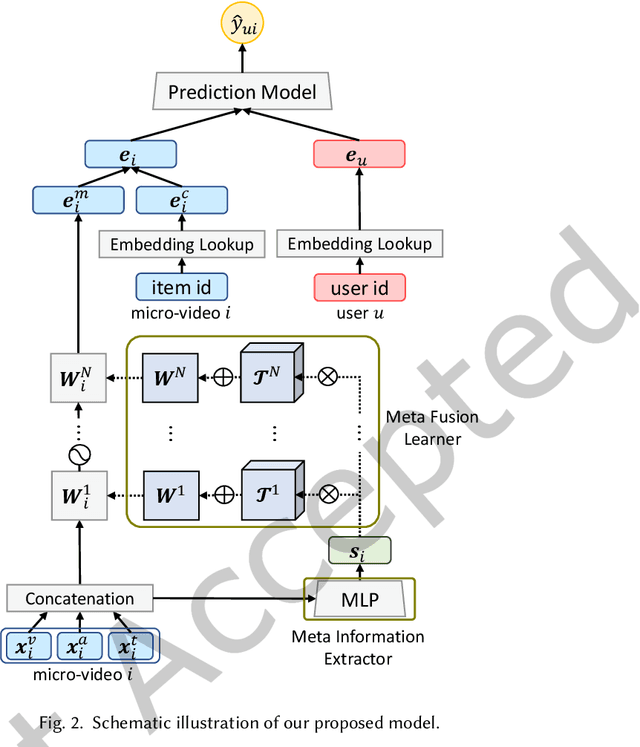

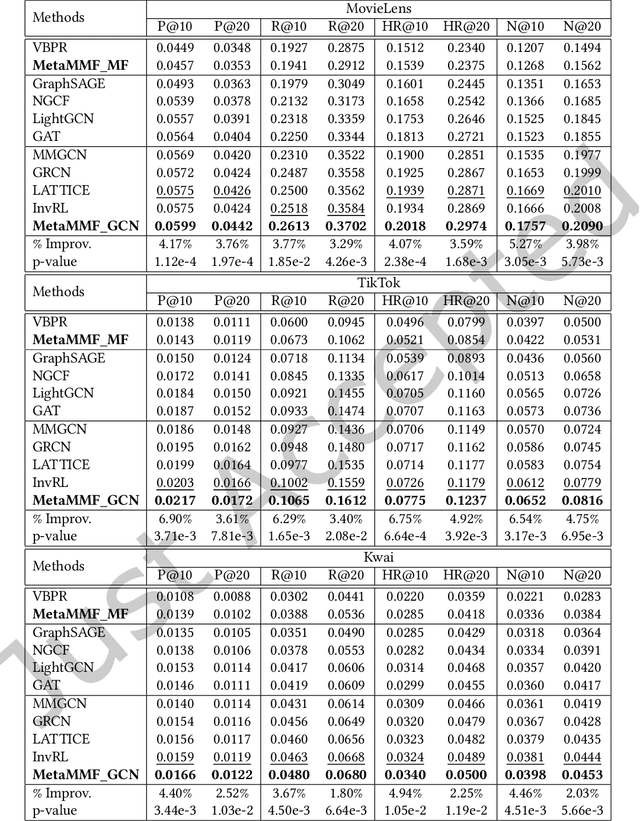

Jan 13, 2025

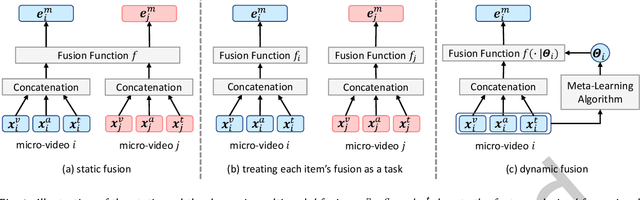

Abstract: Multimodal information (e.g., visual, acoustic, and textual) has been widely used to enhance representation learning for micro-video recommendation. To integrate multimodal information into a joint representation of each micro-video, multimodal fusion plays a vital role in existing micro-video recommendation approaches. However, the static multimodal fusion used in previous studies is insufficient to model the various relationships among multimodal information of different micro-videos. In this paper, we develop a novel meta-learning-based multimodal fusion framework called Meta Multimodal Fusion (MetaMMF), which dynamically assigns parameters to the multimodal fusion function for each micro-video during its representation learning. Specifically, MetaMMF regards the multimodal fusion of each micro-video as an independent task. Based on the meta information extracted from the multimodal features of the input task, MetaMMF parameterizes a neural network as the item-specific fusion function via a meta learner. We perform extensive experiments on three benchmark datasets, demonstrating significant improvements over several state-of-the-art multimodal recommendation models, such as MMGCN, LATTICE, and InvRL. Furthermore, we lighten our model by adopting canonical polyadic decomposition to improve the training efficiency, and validate its effectiveness through experimental results. Code is available at https://github.com/hanliu95/MetaMMF.
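
The idea of an item-specific fusion function whose parameters come from a meta learner can be sketched as a tiny hypernetwork. This is an illustration under simplifying assumptions, not the MetaMMF implementation: the meta learner is a single random linear map, the meta information is simply the item's concatenated features, and `MetaFusion` and its methods are hypothetical names. The canonical polyadic decomposition mentioned in the abstract is not shown.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class MetaFusion:
    def __init__(self, in_dim, out_dim, seed=0):
        rng = random.Random(seed)
        self.in_dim, self.out_dim = in_dim, out_dim
        # Meta learner (assumed linear): maps meta information of size in_dim
        # to the out_dim * in_dim entries of a fusion weight matrix.
        self.meta_weights = [[rng.uniform(-0.1, 0.1) for _ in range(in_dim)]
                             for _ in range(out_dim * in_dim)]

    def fusion_weights(self, meta_info):
        """Generate an item-specific fusion matrix from the item's meta information."""
        flat = matvec(self.meta_weights, meta_info)
        return [flat[r * self.in_dim:(r + 1) * self.in_dim]
                for r in range(self.out_dim)]

    def fuse(self, modality_features):
        """Fuse per-modality features with weights generated for this item."""
        concat = [x for feats in modality_features for x in feats]
        weights = self.fusion_weights(concat)  # meta info = own features (assumption)
        return matvec(weights, concat)


model = MetaFusion(in_dim=6, out_dim=2)
item_a = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # visual, acoustic, textual (toy)
item_b = [[0.0, 1.0], [1.0, 0.0], [0.5, 0.5]]
fused_a = model.fuse(item_a)
fused_b = model.fuse(item_b)
```

The contrast with static fusion is that `fusion_weights` returns a different matrix for `item_a` and `item_b`, while only the shared meta learner holds trainable parameters.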

Modality-Independent Graph Neural Networks with Global Transformers for Multimodal Recommendation

Dec 18, 2024

Abstract: Multimodal recommendation systems can learn users' preferences from existing user-item interactions as well as the semantics of multimodal data associated with items. Many existing methods model this through a multimodal user-item graph, approaching multimodal recommendation as a graph learning task. Graph Neural Networks (GNNs) have shown promising performance in this domain. Prior research has capitalized on GNNs' capability to capture neighborhood information within certain receptive fields (typically denoted by the number of hops, $K$) to enrich user and item semantics. We observe that the optimal receptive fields for GNNs can vary across different modalities. In this paper, we propose GNNs with Modality-Independent Receptive Fields, which employ separate GNNs with independent receptive fields for different modalities to enhance performance. Our results indicate that the optimal $K$ for certain modalities on specific datasets can be as low as 1 or 2, which may restrict the GNNs' capacity to capture global information. To address this, we introduce a Sampling-based Global Transformer, which utilizes uniform global sampling to effectively integrate global information for GNNs. We conduct comprehensive experiments that demonstrate the superiority of our approach over existing methods. Our code is publicly available at https://github.com/CrawlScript/MIG-GT.
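
To make the notion of modality-independent receptive fields concrete, the sketch below runs a separate propagation per modality, each with its own number of hops $K$. It is a simplified stand-in, assuming weight-free mean aggregation on a toy user-item graph; `propagate`, the graph, the features, and the chosen hop counts are all hypothetical, and the Sampling-based Global Transformer is not shown.

```python
def propagate(adjacency, features, num_hops):
    """Apply num_hops rounds of mean-neighbor aggregation (weight-free GNN layer)."""
    current = {node: list(vec) for node, vec in features.items()}
    for _ in range(num_hops):
        updated = {}
        for node, neighbors in adjacency.items():
            if not neighbors:
                updated[node] = current[node]
                continue
            dim = len(current[node])
            updated[node] = [sum(current[n][d] for n in neighbors) / len(neighbors)
                             for d in range(dim)]
        current = updated
    return current


# Toy bipartite graph: u1 - i1 - u2 - i2.
adjacency = {"u1": ["i1"], "i1": ["u1", "u2"], "u2": ["i1", "i2"], "i2": ["u2"]}
modal_features = {
    "visual": {"u1": [0.0], "i1": [1.0], "u2": [0.0], "i2": [3.0]},
    "textual": {"u1": [0.0], "i1": [2.0], "u2": [0.0], "i2": [4.0]},
}
# Each modality gets its own receptive field K (values chosen for illustration).
hops_per_modality = {"visual": 1, "textual": 2}

node_repr = {
    modality: propagate(adjacency, feats, hops_per_modality[modality])
    for modality, feats in modal_features.items()
}
```

With a shared $K$, both modalities would be smoothed over the same neighborhood; here the visual features see only direct neighbors while the textual features reach two hops.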

Revolutionizing Text-to-Image Retrieval as Autoregressive Token-to-Voken Generation

Jul 24, 2024

Abstract: Text-to-image retrieval is a fundamental task in multimedia processing, aiming to retrieve semantically relevant cross-modal content. Traditional studies have typically approached this task as a discriminative problem, matching the text and image via the cross-attention mechanism (one-tower framework) or in a common embedding space (two-tower framework). Recently, generative cross-modal retrieval has emerged as a new research line, which assigns unique string identifiers to images and generates the target identifier as the retrieval target. Despite its great potential, existing generative approaches are limited due to the following issues: insufficient visual information in identifiers, misalignment with high-level semantics, and a learning gap towards the retrieval target. To address the above issues, we propose an autoregressive voken generation method, named AVG. AVG tokenizes images into vokens, i.e., visual tokens, and innovatively formulates the text-to-image retrieval task as a token-to-voken generation problem. AVG discretizes an image into a sequence of vokens as the identifier of the image, while maintaining the alignment with both the visual information and high-level semantics of the image. Additionally, to bridge the learning gap between generative training and the retrieval target, we incorporate discriminative training to modify the learning direction during token-to-voken training. Extensive experiments demonstrate that AVG achieves superior results in both effectiveness and efficiency.

Harnessing Large Language Models for Multimodal Product Bundling

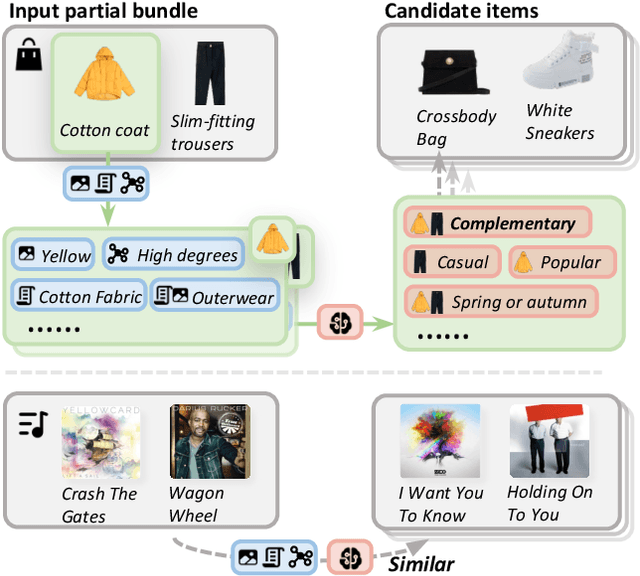

Jul 16, 2024

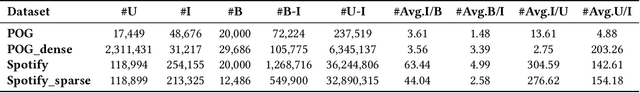

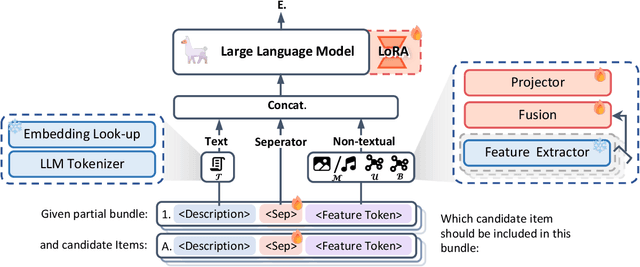

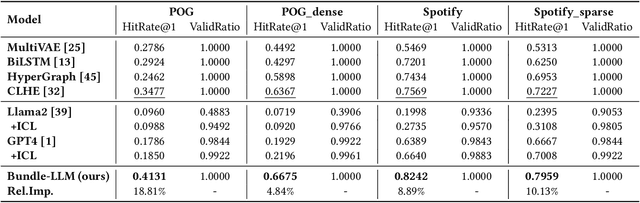

Abstract: Product bundling provides clients with a strategic combination of individual items, and it has gained significant attention in recent years as a fundamental prerequisite for online services. Recent methods utilize multimodal information through sophisticated extractors for bundling, but remain limited by inferior semantic understanding, the restricted scope of knowledge, and an inability to handle cold-start issues. Despite the extensive knowledge and complex reasoning capabilities of large language models (LLMs), their direct utilization fails to process multimodalities and exploit their knowledge for multimodal product bundling. Adapting LLMs for this purpose involves demonstrating the synergies among different modalities and designing an effective optimization strategy for bundling, which remains challenging. To this end, we introduce Bundle-LLM to bridge the gap between LLMs and product bundling tasks. Specifically, we utilize a hybrid item tokenization to integrate multimodal information, where a simple yet powerful multimodal fusion module followed by a trainable projector embeds all non-textual features into a single token. This module not only explicitly exhibits the interplay among modalities but also shortens the prompt length, thereby boosting efficiency. By designing a prompt template, we formulate product bundling as a multiple-choice question given candidate items. Furthermore, we adopt a progressive optimization strategy to fine-tune the LLMs for disentangled objectives, achieving effective product bundling capability with comprehensive multimodal semantic understanding. Extensive experiments on four datasets from two application domains show that our approach outperforms a range of state-of-the-art (SOTA) methods.
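
Casting bundling as a multiple-choice question can be pictured with a small prompt builder. The wording of the template below is invented for illustration and is not the Bundle-LLM prompt; `build_bundle_prompt` is a hypothetical helper, and it handles item titles only, whereas the abstract describes embedding each item's non-textual features into a single extra token.

```python
import string


def build_bundle_prompt(partial_bundle, candidates):
    """Format a partial bundle and candidate items as a multiple-choice question."""
    options = [f"{letter}. {item}"
               for letter, item in zip(string.ascii_uppercase, candidates)]
    lines = [
        "The bundle currently contains: " + ", ".join(partial_bundle) + ".",
        "Which candidate item best completes the bundle?",
    ]
    lines += options
    lines.append("Answer with a single letter.")
    return "\n".join(lines)


prompt = build_bundle_prompt(
    partial_bundle=["smartphone", "wireless charger"],
    candidates=["phone case", "garden hose", "cookbook"],
)
```

Constraining the answer to one option letter keeps the output space small, which is one plausible reason to prefer a multiple-choice formulation over free-form generation.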

PromptDSI: Prompt-based Rehearsal-free Instance-wise Incremental Learning for Document Retrieval

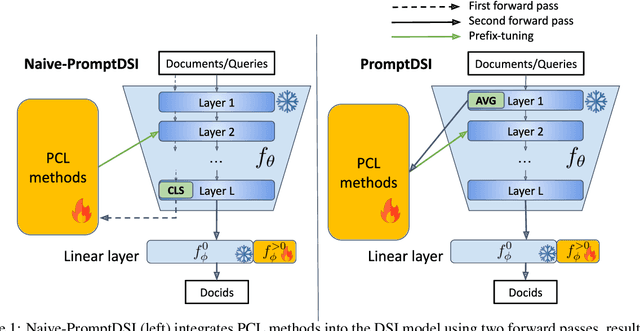

Jun 18, 2024

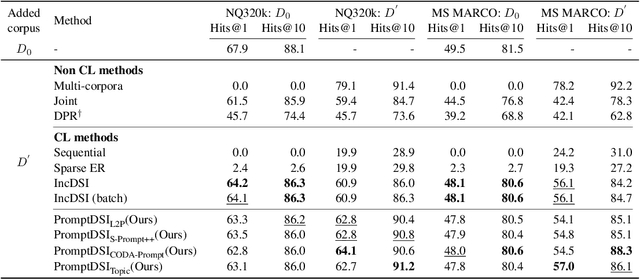

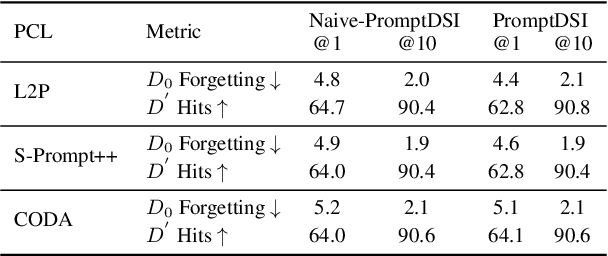

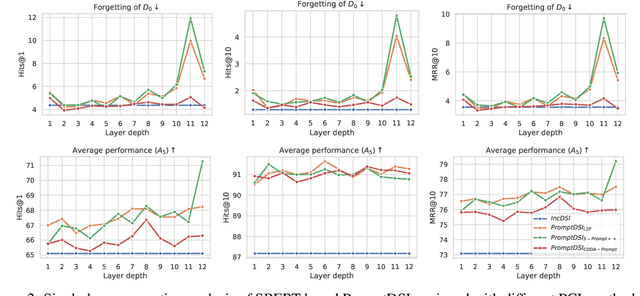

Abstract: Differentiable Search Index (DSI) utilizes Pre-trained Language Models (PLMs) for efficient document retrieval without relying on external indexes. However, DSIs need full re-training to handle updates in dynamic corpora, causing significant computational inefficiencies. We introduce PromptDSI, a rehearsal-free, prompt-based approach for instance-wise incremental learning in document retrieval. PromptDSI attaches prompts to the frozen PLM's encoder of DSI, leveraging its powerful representation to efficiently index new corpora while maintaining a balance between stability and plasticity. We eliminate the initial forward pass of prompt-based continual learning methods that doubles training and inference time. Moreover, we propose a topic-aware prompt pool that employs neural topic embeddings as fixed keys. This strategy ensures diverse and effective prompt usage, addressing the challenge of parameter underutilization caused by the collapse of the query-key matching mechanism. Our empirical evaluations demonstrate that PromptDSI matches IncDSI in managing forgetting while significantly enhancing recall by over 4% on new corpora.
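
The query-key matching behind a prompt pool with fixed keys can be sketched as a nearest-key lookup. This is a minimal illustration assuming cosine similarity and one prompt per key; the topic keys, prompt labels, and `select_prompt` are hypothetical, and in the approach described above the keys would be neural topic embeddings rather than hand-written vectors.

```python
import math


def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def select_prompt(query_embedding, topic_keys, prompts):
    """Return the index and prompt whose fixed key best matches the query."""
    best = max(range(len(topic_keys)),
               key=lambda i: cosine(query_embedding, topic_keys[i]))
    return best, prompts[best]


# Fixed keys (never updated during training) and their associated prompts.
topic_keys = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
prompts = ["prompt_topic_0", "prompt_topic_1", "prompt_topic_2"]

index_a, prompt_a = select_prompt([0.9, 0.1], topic_keys, prompts)
index_b, prompt_b = select_prompt([0.1, 0.9], topic_keys, prompts)
```

Because the keys stay fixed, they cannot drift toward a single region of the embedding space, which is the collapse the abstract associates with learned keys.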

MMGRec: Multimodal Generative Recommendation with Transformer Model

Apr 25, 2024

Abstract: Multimodal recommendation aims to recommend user-preferred candidates based on her/his historically interacted items and associated multimodal information. Previous studies commonly employ an embed-and-retrieve paradigm: learning user and item representations in the same embedding space, then retrieving similar candidate items for a user via embedding inner product. However, this paradigm suffers from inference cost, interaction modeling, and false-negative issues. Toward this end, we propose a new MMGRec model to introduce a generative paradigm into multimodal recommendation. Specifically, we first devise a hierarchical quantization method, Graph RQ-VAE, to assign a Rec-ID to each item from its multimodal and CF information. Consisting of a tuple of semantically meaningful tokens, the Rec-ID serves as the unique identifier of each item. Afterward, we train a Transformer-based recommender to generate the Rec-IDs of user-preferred items based on historical interaction sequences. The generative paradigm is qualified since this model systematically predicts the tuple of tokens identifying the recommended item in an autoregressive manner. Moreover, a relation-aware self-attention mechanism is devised for the Transformer to handle non-sequential interaction sequences, which explores the element pairwise relation to replace absolute positional encoding. Extensive experiments evaluate MMGRec's effectiveness compared with state-of-the-art methods.
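
How a hierarchical quantizer turns an item embedding into a tuple of tokens can be illustrated with plain residual quantization. This sketch covers only the codebook lookup: it assumes fixed toy codebooks and omits the graph component, the encoder and decoder, and the training of Graph RQ-VAE. `residual_quantize` and the example values are hypothetical.

```python
def nearest(codebook, vector):
    """Index of the codeword closest to vector in squared Euclidean distance."""
    def sq_dist(codeword):
        return sum((c - v) ** 2 for c, v in zip(codeword, vector))
    return min(range(len(codebook)), key=lambda i: sq_dist(codebook[i]))


def residual_quantize(vector, codebooks):
    """Quantize level by level; each level encodes what the previous levels missed."""
    residual = list(vector)
    token_ids = []
    for codebook in codebooks:
        idx = nearest(codebook, residual)
        token_ids.append(idx)
        residual = [r - c for r, c in zip(residual, codebook[idx])]
    return tuple(token_ids), residual


# Two quantization levels with two codewords each (toy values).
codebooks = [
    [[0.0, 0.0], [1.0, 1.0]],
    [[0.0, 0.0], [0.25, -0.25]],
]
item_embedding = [1.2, 0.8]
rec_id, leftover = residual_quantize(item_embedding, codebooks)
```

The tuple `rec_id` plays the role of the item identifier: a generative recommender would emit its tokens one at a time, coarse level first.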
