Peilin Chen

Orion-RAG: Path-Aligned Hybrid Retrieval for Graphless Data

Jan 08, 2026

Abstract:Retrieval-Augmented Generation (RAG) has proven effective for knowledge synthesis, yet it encounters significant challenges in practical scenarios where data is inherently discrete and fragmented. In most environments, information is distributed across isolated files like reports and logs that lack explicit links. Standard search engines process files independently, ignoring the connections between them. Furthermore, manually building Knowledge Graphs is impractical for such vast data. To bridge this gap, we present Orion-RAG. Our core insight is simple yet effective: we do not need heavy algorithms to organize this data. Instead, we use a low-complexity strategy to extract lightweight paths that naturally link related concepts. We demonstrate that this streamlined approach suffices to transform fragmented documents into semi-structured data, enabling the system to link information across different files effectively. Extensive experiments demonstrate that Orion-RAG consistently outperforms mainstream frameworks across diverse domains, supporting real-time updates and explicit Human-in-the-Loop verification with high cost-efficiency. Experiments on FinanceBench demonstrate superior precision with a 25.2% relative improvement over strong baselines.

VQualA 2025 Challenge on Visual Quality Comparison for Large Multimodal Models: Methods and Results

Sep 11, 2025

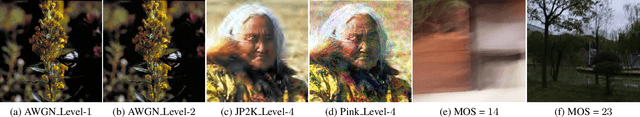

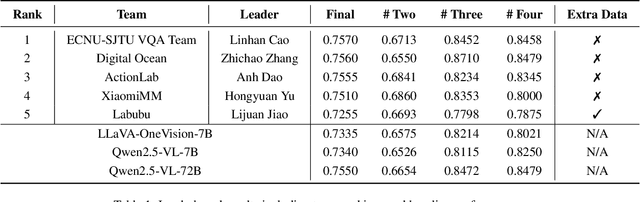

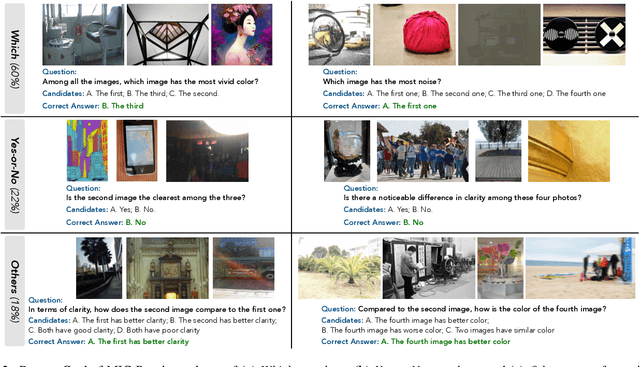

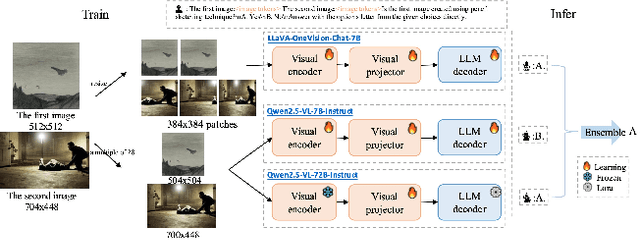

Abstract:This paper presents a summary of the VQualA 2025 Challenge on Visual Quality Comparison for Large Multimodal Models (LMMs), hosted as part of the ICCV 2025 Workshop on Visual Quality Assessment. The challenge aims to evaluate and enhance the ability of state-of-the-art LMMs to perform open-ended and detailed reasoning about visual quality differences across multiple images. To this end, the competition introduces a novel benchmark comprising thousands of coarse-to-fine grained visual quality comparison tasks, spanning single images, pairs, and multi-image groups. Each task requires models to provide accurate quality judgments. The competition emphasizes holistic evaluation protocols, including 2AFC-based binary preference and multi-choice questions (MCQs). Around 100 participants submitted entries, with five models demonstrating the emerging capabilities of instruction-tuned LMMs on quality assessment. This challenge marks a significant step toward open-domain visual quality reasoning and comparison and serves as a catalyst for future research on interpretable and human-aligned quality evaluation systems.

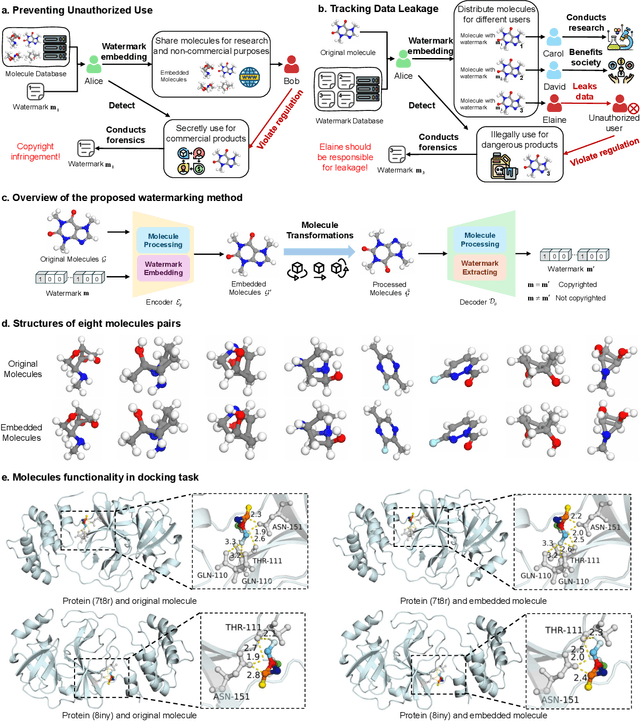

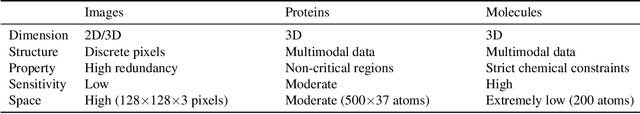

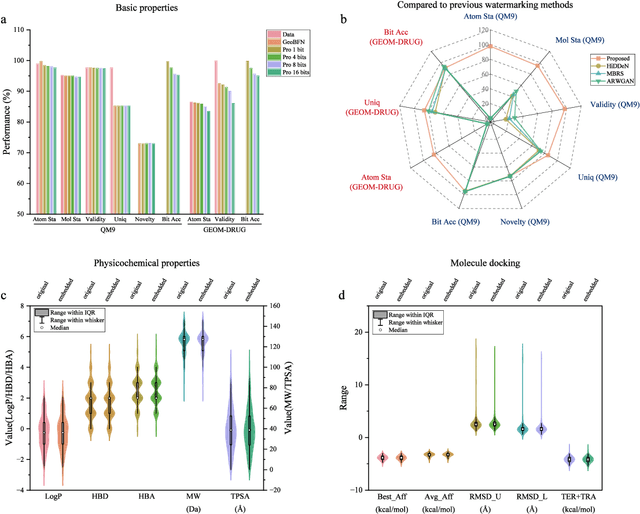

Copyright Protection for 3D Molecular Structures with Watermarking

Aug 25, 2025

Abstract:Artificial intelligence (AI) revolutionizes molecule generation in bioengineering and biological research, significantly accelerating discovery processes. However, this advancement introduces critical concerns regarding intellectual property protection. To address these challenges, we propose the first robust watermarking method designed for molecules, which utilizes atom-level features to preserve molecular integrity and invariant features to ensure robustness against affine transformations. Comprehensive experiments validate the effectiveness of our method using the datasets QM9 and GEOM-DRUG, and generative models GeoBFN and GeoLDM. We demonstrate the feasibility of embedding watermarks, maintaining basic properties higher than 90.00% while achieving watermark accuracy greater than 95.00%. Furthermore, downstream docking simulations reveal comparable performance between original and watermarked molecules, with binding affinities reaching -6.00 kcal/mol and root mean square deviations below 1.602 Å. These results confirm that our watermarking technique effectively safeguards molecular intellectual property without compromising scientific utility, enabling secure and responsible AI integration in molecular discovery and research applications.

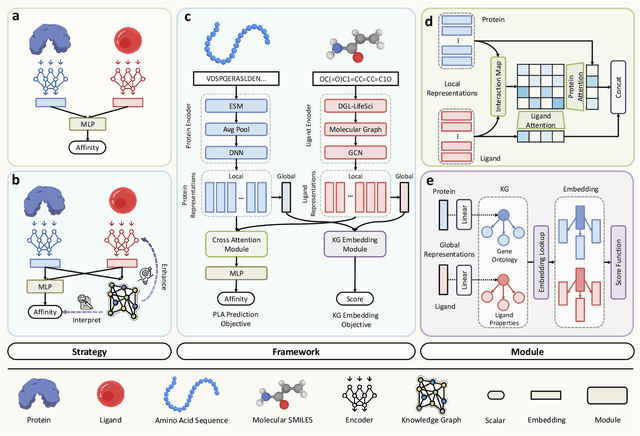

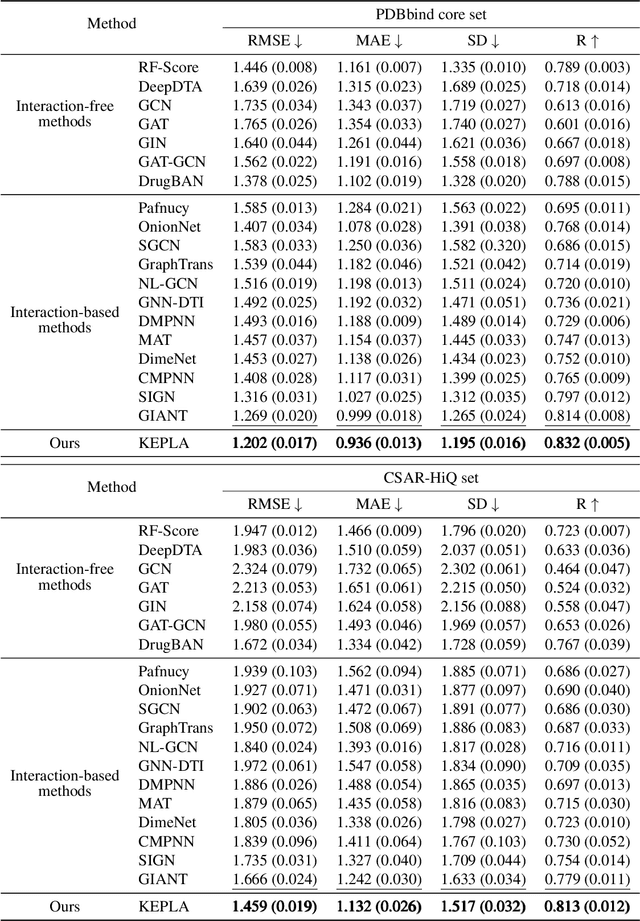

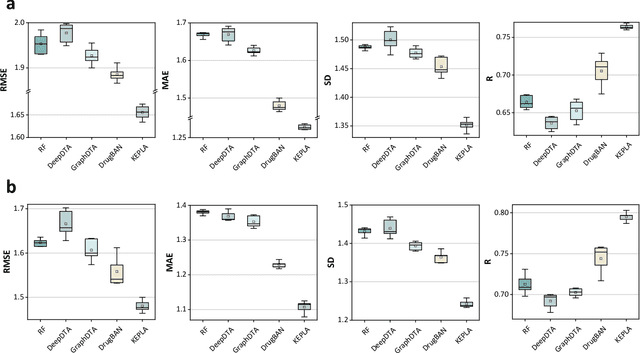

KEPLA: A Knowledge-Enhanced Deep Learning Framework for Accurate Protein-Ligand Binding Affinity Prediction

Jun 16, 2025

Abstract:Accurate prediction of protein-ligand binding affinity is critical for drug discovery. While recent deep learning approaches have demonstrated promising results, they often rely solely on structural features, overlooking valuable biochemical knowledge associated with binding affinity. To address this limitation, we propose KEPLA, a novel deep learning framework that explicitly integrates prior knowledge from Gene Ontology and ligand properties of proteins and ligands to enhance prediction performance. KEPLA takes protein sequences and ligand molecular graphs as input and optimizes two complementary objectives: (1) aligning global representations with knowledge graph relations to capture domain-specific biochemical insights, and (2) leveraging cross attention between local representations to construct fine-grained joint embeddings for prediction. Experiments on two benchmark datasets across both in-domain and cross-domain scenarios demonstrate that KEPLA consistently outperforms state-of-the-art baselines. Furthermore, interpretability analyses based on knowledge graph relations and cross attention maps provide valuable insights into the underlying predictive mechanisms.

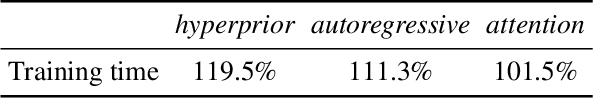

Fine-Grained Motion Compression and Selective Temporal Fusion for Neural B-Frame Video Coding

Jun 09, 2025

Abstract:With the remarkable progress in neural P-frame video coding, neural B-frame coding has recently emerged as a critical research direction. However, most existing neural B-frame codecs directly adopt P-frame coding tools without adequately addressing the unique challenges of B-frame compression, leading to suboptimal performance. To bridge this gap, we propose novel enhancements for motion compression and temporal fusion for neural B-frame coding. First, we design a fine-grained motion compression method. This method incorporates an interactive dual-branch motion auto-encoder with per-branch adaptive quantization steps, which enables fine-grained compression of bi-directional motion vectors while accommodating their asymmetric bitrate allocation and reconstruction quality requirements. Furthermore, this method involves an interactive motion entropy model that exploits correlations between bi-directional motion latent representations by interactively leveraging partitioned latent segments as directional priors. Second, we propose a selective temporal fusion method that predicts bi-directional fusion weights to achieve discriminative utilization of bi-directional multi-scale temporal contexts with varying qualities. Additionally, this method introduces a hyperprior-based implicit alignment mechanism for contextual entropy modeling. By treating the hyperprior as a surrogate for the contextual latent representation, this mechanism implicitly mitigates the misalignment in the fused bi-directional temporal priors. Extensive experiments demonstrate that our proposed codec outperforms state-of-the-art neural B-frame codecs and achieves comparable or even superior compression performance to the H.266/VVC reference software under random-access configurations.

Leveraging Diffusion Knowledge for Generative Image Compression with Fractal Frequency-Aware Band Learning

Mar 14, 2025

Abstract:By optimizing the rate-distortion-realism trade-off, generative image compression approaches produce detailed, realistic images rather than the merely sharp-looking reconstructions produced by rate-distortion-optimized models. In this paper, we propose a novel deep learning-based generative image compression method injected with diffusion knowledge, obtaining the capacity to recover more realistic textures in practical scenarios. Efforts are made from three perspectives to navigate the rate-distortion-realism trade-off in the generative image compression task. First, recognizing the strong connection between image texture and frequency-domain characteristics, we design a Fractal Frequency-Aware Band Image Compression (FFAB-IC) network to effectively capture the directional frequency components inherent in natural images. This network integrates commonly used fractal band feature operations within a neural non-linear mapping design, enhancing its ability to retain essential given information and filter out unnecessary details. Then, to improve the visual quality of image reconstruction under limited bandwidth, we integrate diffusion knowledge into the encoder and implement diffusion iterations into the decoder process, thus effectively recovering lost texture details. Finally, to fully leverage the spatial and frequency intensity information, we incorporate frequency- and content-aware regularization terms to regularize the training of the generative image compression network. Extensive experiments in quantitative and qualitative evaluations demonstrate the superiority of the proposed method, advancing the boundaries of achievable distortion-realism pairs, i.e., our method achieves better distortions at high realism and better realism at low distortion than ever before.

Data Foundations for Large Scale Multimodal Clinical Foundation Models

Mar 09, 2025

Abstract:Recent advances in clinical AI have enabled remarkable progress across many clinical domains. However, existing benchmarks and models are primarily limited to a small set of modalities and tasks, which hinders the development of large-scale multimodal methods that can make holistic assessments of patient health and well-being. To bridge this gap, we introduce Clinical Large-Scale Integrative Multimodal Benchmark (CLIMB), a comprehensive clinical benchmark unifying diverse clinical data across imaging, language, temporal, and graph modalities. CLIMB comprises 4.51 million patient samples totaling 19.01 terabytes distributed across 2D imaging, 3D video, time series, graphs, and multimodal data. Through extensive empirical evaluation, we demonstrate that multitask pretraining significantly improves performance on understudied domains, achieving up to 29% improvement in ultrasound and 23% in ECG analysis over single-task learning. Pretraining on CLIMB also effectively improves models' generalization capability to new tasks, and strong unimodal encoder performance translates well to multimodal performance when paired with task-appropriate fusion strategies. Our findings provide a foundation for new architecture designs and pretraining strategies to advance clinical AI research. Code is released at https://github.com/DDVD233/climb.

An Information-Theoretic Regularizer for Lossy Neural Image Compression

Nov 23, 2024

Abstract:Lossy image compression networks aim to minimize the latent entropy of images while adhering to specific distortion constraints. However, optimizing the neural network can be challenging due to its nature of learning quantized latent representations. In this paper, our key finding is that minimizing the latent entropy is, to some extent, equivalent to maximizing the conditional source entropy, an insight that is deeply rooted in information-theoretic equalities. Building on this insight, we propose a novel structural regularization method for the neural image compression task by incorporating the negative conditional source entropy into the training objective, such that both the optimization efficacy and the model's generalization ability can be promoted. The proposed information-theoretic regularizer is interpretable, plug-and-play, and imposes no inference overheads. Extensive experiments demonstrate its superiority in regularizing the models and further squeezing bits from the latent representation across various compression structures and unseen domains.

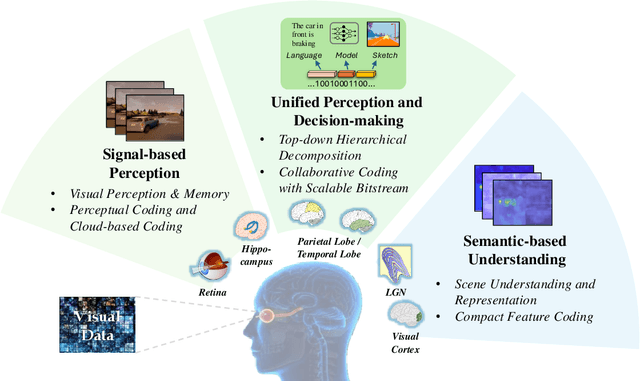

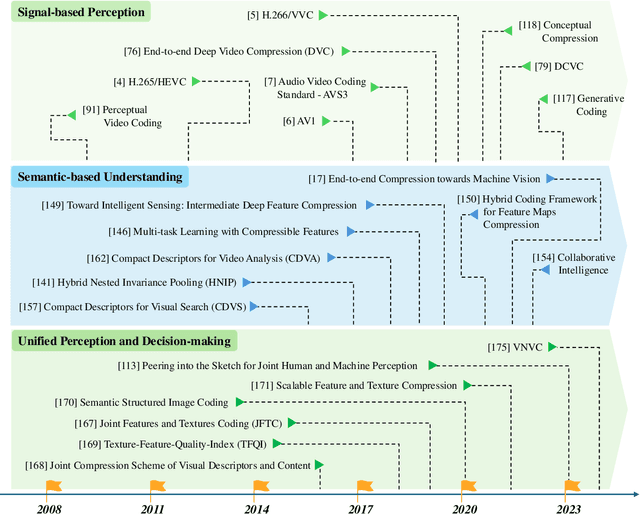

Compact Visual Data Representation for Green Multimedia -- A Human Visual System Perspective

Nov 21, 2024

Abstract:The Human Visual System (HVS), with its intricate sophistication, is capable of achieving ultra-compact information compression for visual signals. This remarkable ability is coupled with high generalization capability and energy efficiency. By contrast, the state-of-the-art Versatile Video Coding (VVC) standard achieves a compression ratio of around 1,000 times for raw visual data. This notable disparity motivates the research community to draw inspiration to effectively handle the immense volume of visual data in a green way. Therefore, this paper provides a survey of how visual data can be efficiently represented for green multimedia, in particular when the ultimate task is knowledge extraction instead of visual signal reconstruction. We introduce recent research efforts that promote green, sustainable, and efficient multimedia in this field. Moreover, we discuss how the deep understanding of the HVS can benefit the research community, and envision the development of future green multimedia technologies.

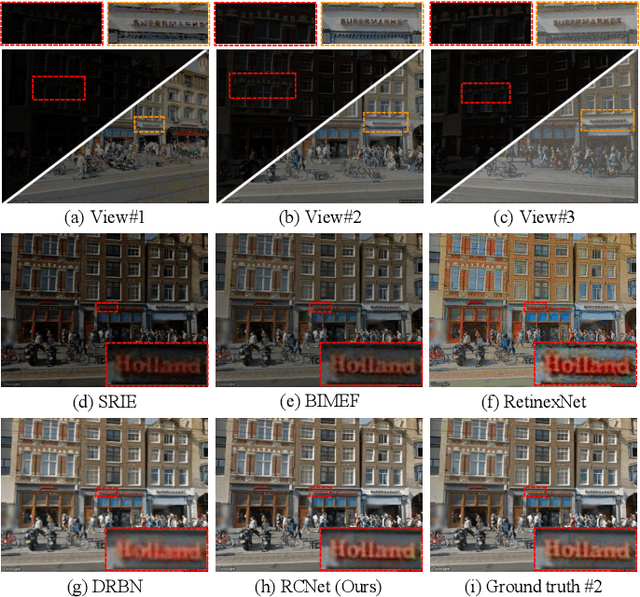

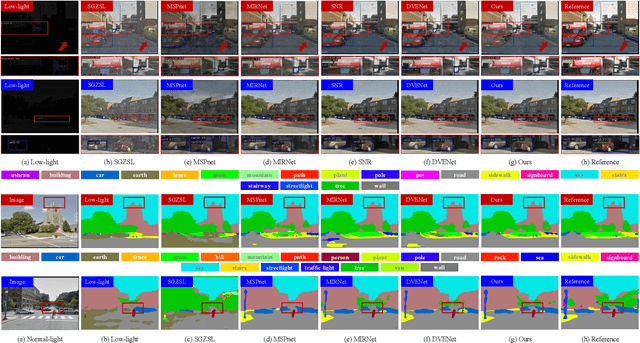

RCNet: Deep Recurrent Collaborative Network for Multi-View Low-Light Image Enhancement

Sep 06, 2024

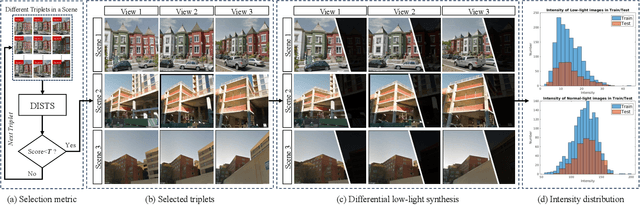

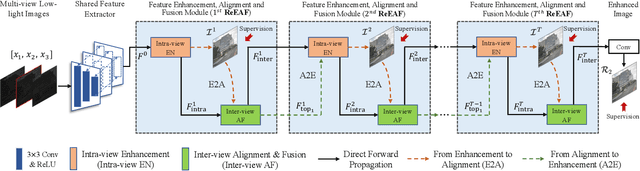

Abstract:Scene observation from multiple perspectives would bring a more comprehensive visual experience. However, in the context of acquiring multiple views in the dark, the highly correlated views are seriously alienated, making it challenging to improve scene understanding with auxiliary views. Recent single image-based enhancement methods may not be able to provide consistently desirable restoration performance for all views due to the ignorance of potential feature correspondence among different views. To alleviate this issue, we make the first attempt to investigate multi-view low-light image enhancement. First, we construct a new dataset called Multi-View Low-light Triplets (MVLT), including 1,860 pairs of triple images with large illumination ranges and wide noise distribution. Each triplet is equipped with three different viewpoints towards the same scene. Second, we propose a deep multi-view enhancement framework based on the Recurrent Collaborative Network (RCNet). Specifically, in order to benefit from similar texture correspondence across different views, we design the recurrent feature enhancement, alignment and fusion (ReEAF) module, in which intra-view feature enhancement (Intra-view EN) followed by inter-view feature alignment and fusion (Inter-view AF) is performed to model the intra-view and inter-view feature propagation sequentially via multi-view collaboration. In addition, two different modules from enhancement to alignment (E2A) and from alignment to enhancement (A2E) are developed to enable the interactions between Intra-view EN and Inter-view AF, which explicitly utilize attentive feature weighting and sampling for enhancement and alignment, respectively. Experimental results demonstrate that our RCNet significantly outperforms other state-of-the-art methods. All of our dataset, code, and model will be available at https://github.com/hluo29/RCNet.
