Siwei Ma

Content Adaptive based Motion Alignment Framework for Learned Video Compression

Dec 15, 2025

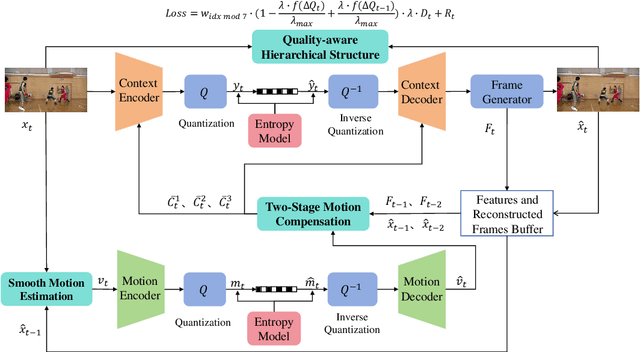

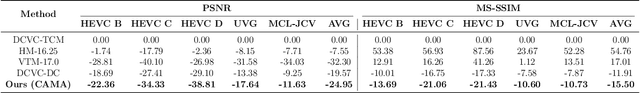

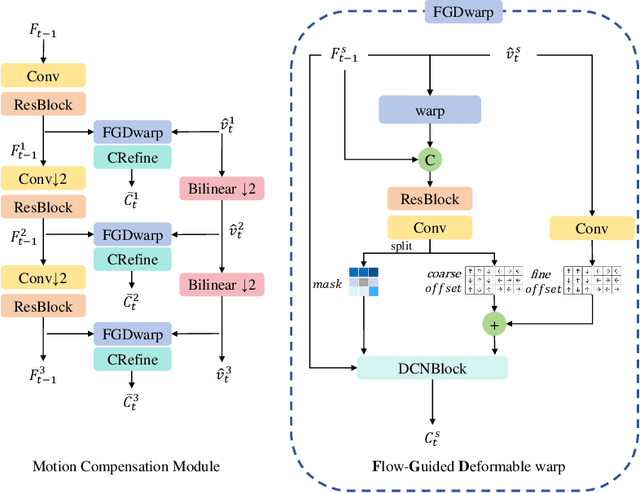

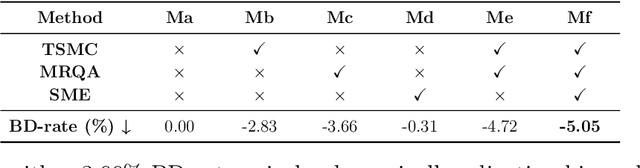

Abstract: Recent advances in end-to-end video compression have shown promising results owing to their unified end-to-end learning optimization. However, such generalized frameworks often lack content-specific adaptation, leading to suboptimal compression performance. To address this, this paper proposes a content-adaptive motion alignment framework (CAMA) that improves performance by adapting encoding strategies to diverse content characteristics. Specifically, we first introduce a two-stage flow-guided deformable warping mechanism that refines motion compensation with coarse-to-fine offset prediction and mask modulation, enabling precise feature alignment. Second, we propose a multi-reference quality-aware strategy that adjusts distortion weights based on reference quality and applies it to hierarchical training to reduce error propagation. Third, we integrate a training-free module that downsamples frames according to motion magnitude and resolution to obtain smooth motion estimation. Experimental results on standard test datasets demonstrate that CAMA significantly outperforms state-of-the-art neural video compression models, achieving 24.95% BD-rate (PSNR) savings over our baseline model DCVC-TCM, while also outperforming the reproduced DCVC-DC and the traditional codec HM-16.25.
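
The BD-rate figure quoted above is an average bitrate difference between two rate-distortion curves at equal quality. The sketch below is a simplified illustration, not the evaluation code used in the paper: it assumes piecewise-linear interpolation in the log-rate domain (the usual Bjøntegaard calculation fits a cubic polynomial or a piecewise cubic instead), and the function names `bd_rate` and `_interp` as well as the sample points are hypothetical.

```python
import math


def _interp(x, xs, ys):
    """Piecewise-linear interpolation of ys over strictly increasing xs."""
    for i in range(len(xs) - 1):
        x0, x1 = xs[i], xs[i + 1]
        if x0 <= x <= x1:
            t = (x - x0) / (x1 - x0)
            return ys[i] + t * (ys[i + 1] - ys[i])
    raise ValueError("x lies outside the interpolation range")


def bd_rate(anchor, test, samples=100):
    """Approximate BD-rate in percent (negative means the test codec saves bits).

    anchor, test: lists of (bitrate, psnr) points with distinct PSNR values.
    Assumption: linear interpolation of log10(bitrate) over PSNR, averaged
    over the PSNR range that both curves cover.
    """
    a = sorted(anchor, key=lambda p: p[1])
    t = sorted(test, key=lambda p: p[1])
    a_q, a_lr = [p[1] for p in a], [math.log10(p[0]) for p in a]
    t_q, t_lr = [p[1] for p in t], [math.log10(p[0]) for p in t]

    lo, hi = max(a_q[0], t_q[0]), min(a_q[-1], t_q[-1])
    if hi <= lo:
        raise ValueError("the two curves share no PSNR range")

    # Midpoint sampling of the log-rate gap across the shared PSNR range.
    step = (hi - lo) / samples
    total = 0.0
    for i in range(samples):
        q = lo + (i + 0.5) * step
        total += _interp(q, t_q, t_lr) - _interp(q, a_q, a_lr)
    avg_log_diff = total / samples

    return (10 ** avg_log_diff - 1) * 100


# Made-up rate-distortion points (kbps, dB) for illustration only.
anchor_points = [(1000.0, 32.0), (2000.0, 35.0), (4000.0, 38.0), (8000.0, 41.0)]
test_points = [(r * 0.75, q) for r, q in anchor_points]
print(bd_rate(anchor_points, test_points))  # about -25.0, i.e. a 25% bitrate saving
```

Averaging in the log-rate domain makes the result a geometric mean of rate ratios, which is why a codec that needs 75% of the anchor bitrate at every quality level yields a BD-rate of about -25%.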

L-STEC: Learned Video Compression with Long-term Spatio-Temporal Enhanced Context

Dec 14, 2025

Abstract: Neural Video Compression has emerged in recent years, with condition-based frameworks outperforming traditional codecs. However, most existing methods rely solely on the previous frame's features to predict temporal context, leading to two critical issues. First, the short reference window misses long-term dependencies and fine texture details. Second, propagating only feature-level information accumulates errors over frames, causing prediction inaccuracies and loss of subtle textures. To address these, we propose the Long-term Spatio-Temporal Enhanced Context (L-STEC) method. We first extend the reference chain with an LSTM to capture long-term dependencies. We then incorporate warped spatial context from the pixel domain, fusing spatio-temporal information through a multi-receptive field network to better preserve reference details. Experimental results show that L-STEC significantly improves compression by enriching contextual information, achieving 37.01% bitrate savings in PSNR and 31.65% in MS-SSIM compared to DCVC-TCM, outperforming both VTM-17.0 and DCVC-FM and establishing new state-of-the-art performance.

MoCo: Motion-Consistent Human Video Generation via Structure-Appearance Decoupling

Aug 24, 2025

Abstract: Generating human videos with consistent motion from text prompts remains a significant challenge, particularly for whole-body or long-range motion. Existing video generation models prioritize appearance fidelity, resulting in unrealistic or physically implausible human movements with poor structural coherence. Additionally, most existing human video datasets primarily focus on facial or upper-body motions, or consist of vertically oriented dance videos, limiting the scope of corresponding generation methods to simple movements. To overcome these challenges, we propose MoCo, which decouples the process of human video generation into two components: structure generation and appearance generation. Specifically, our method first employs an efficient 3D structure generator to produce a human motion sequence from a text prompt. The remaining video appearance is then synthesized under the guidance of the generated structural sequence. To improve fine-grained control over sparse human structures, we introduce Human-Aware Dynamic Control modules and integrate dense tracking constraints during training. Furthermore, recognizing the limitations of existing datasets, we construct a large-scale whole-body human video dataset featuring complex and diverse motions. Extensive experiments demonstrate that MoCo outperforms existing approaches in generating realistic and structurally coherent human videos.

TinySplat: Feedforward Approach for Generating Compact 3D Scene Representation

Jun 11, 2025

Abstract: The recent development of feedforward 3D Gaussian Splatting (3DGS) presents a new paradigm to reconstruct 3D scenes. Using neural networks trained on large-scale multi-view datasets, it can directly infer 3DGS representations from sparse input views. Although the feedforward approach achieves high reconstruction speed, it still suffers from the substantial storage cost of 3D Gaussians. Existing 3DGS compression methods relying on scene-wise optimization are not applicable due to architectural incompatibilities. To overcome this limitation, we propose TinySplat, a complete feedforward approach for generating compact 3D scene representations. Built upon standard feedforward 3DGS methods, TinySplat integrates a training-free compression framework that systematically eliminates key sources of redundancy. Specifically, we introduce View-Projection Transformation (VPT) to reduce geometric redundancy by projecting geometric parameters into a more compact space. We further present Visibility-Aware Basis Reduction (VABR), which mitigates perceptual redundancy by aligning feature energy along dominant viewing directions via basis transformation. Lastly, spatial redundancy is addressed through an off-the-shelf video codec. Comprehensive experimental results on multiple benchmark datasets demonstrate that TinySplat achieves over 100x compression for 3D Gaussian data generated by feedforward methods. Compared to the state-of-the-art compression approach, we achieve comparable quality with only 6% of the storage size. Meanwhile, our compression framework requires only 25% of the encoding time and 1% of the decoding time.

ProSplat: Improved Feed-Forward 3D Gaussian Splatting for Wide-Baseline Sparse Views

Jun 09, 2025

Abstract: Feed-forward 3D Gaussian Splatting (3DGS) has recently demonstrated promising results for novel view synthesis (NVS) from sparse input views, particularly under narrow-baseline conditions. However, its performance significantly degrades in wide-baseline scenarios due to limited texture details and geometric inconsistencies across views. To address these challenges, in this paper, we propose ProSplat, a two-stage feed-forward framework designed for high-fidelity rendering under wide-baseline conditions. The first stage involves generating 3D Gaussian primitives via a 3DGS generator. In the second stage, rendered views from these primitives are enhanced through an improvement model. Specifically, this improvement model is based on a one-step diffusion model, further optimized by our proposed Maximum Overlap Reference view Injection (MORI) and Distance-Weighted Epipolar Attention (DWEA). MORI supplements missing texture and color by strategically selecting a reference view with maximum viewpoint overlap, while DWEA enforces geometric consistency using epipolar constraints. Additionally, we introduce a divide-and-conquer training strategy that aligns data distributions between the two stages through joint optimization. We evaluate ProSplat on the RealEstate10K and DL3DV-10K datasets under wide-baseline settings. Experimental results demonstrate that ProSplat achieves an average improvement of 1 dB in PSNR compared to recent SOTA methods.
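
To put the reported 1 dB PSNR gain in context, the sketch below computes PSNR from its standard definition and converts a dB gain into the corresponding reduction in mean squared error. It is an illustrative helper rather than code from the paper: the function names `psnr` and `mse_ratio_for_gain`, the flat pixel lists, and the 8-bit peak value of 255 are assumptions.

```python
import math


def psnr(reference, distorted, peak=255.0):
    """PSNR in dB between two equal-length sequences of pixel values."""
    if len(reference) != len(distorted) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)
    if mse == 0:
        return math.inf  # identical signals
    return 10.0 * math.log10(peak ** 2 / mse)


def mse_ratio_for_gain(gain_db):
    """Factor by which the MSE shrinks for a PSNR gain of gain_db decibels."""
    return 10 ** (-gain_db / 10.0)


print(mse_ratio_for_gain(1.0))  # about 0.794
```

Because PSNR is logarithmic in the error, a 1 dB gain corresponds to roughly a 21% lower mean squared error at the same peak value.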

Emerging Advances in Learned Video Compression: Models, Systems and Beyond

Apr 30, 2025

Abstract: Video compression is a fundamental topic in visual intelligence, bridging visual signal sensing/capturing and high-level visual analytics. The broad success of artificial intelligence (AI) technology has broadened the horizon of video compression to novel paradigms that leverage end-to-end optimized neural models. In this survey, we first provide a comprehensive and systematic overview of recent literature on end-to-end optimized learned video coding, covering the spectrum of pioneering efforts in both uni-directional and bi-directional prediction-based compression model design. We further delve into the optimization techniques employed in learned video compression (LVC), emphasizing their technical innovations and advantages. Some standardization progress is also reported. Furthermore, we investigate the system design and hardware implementation challenges of LVC. Finally, we present extensive simulation results to demonstrate the superior compression performance of LVC models, addressing the question of why learned codecs and AI-based video technology will have a broad impact on future visual intelligence research.

A Joint Visual Compression and Perception Framework for Neuralmorphic Spiking Camera

Mar 04, 2025

Abstract: The advent of neuromorphic spike cameras has garnered significant attention for their ability to capture continuous motion with unparalleled temporal resolution. However, this imaging attribute necessitates considerable resources for binary spike data storage and transmission. In light of compression and spike-driven intelligent applications, we present the notion of Spike Coding for Intelligence (SCI), wherein spike sequences are compressed and optimized for both bit-rate and task performance. Drawing inspiration from the mammalian vision system, we propose a dual-pathway architecture for separate processing of spatial semantics and motion information, which is then merged to produce features for compression. A refinement scheme is also introduced to ensure consistency between decoded features and motion vectors. We further propose a temporal regression approach that integrates various motion dynamics, capitalizing on the advancements in warping and deformation simultaneously. Comprehensive experiments demonstrate that our scheme achieves state-of-the-art (SOTA) performance for spike compression and analysis. We achieve an average 17.25% BD-rate reduction compared to SOTA codecs and a 4.3% accuracy improvement over SpiReco for spike-based classification, with 88.26% complexity reduction and 42.41% inference time saving on the encoding side.

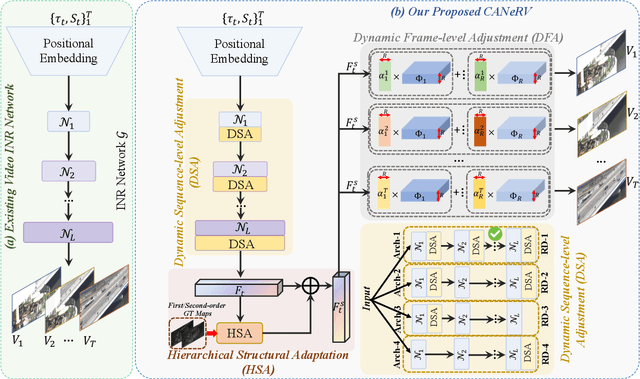

CANeRV: Content Adaptive Neural Representation for Video Compression

Feb 10, 2025

Abstract: Recent advances in video compression introduce implicit neural representation (INR) based methods, which effectively capture global dependencies and characteristics of entire video sequences. Unlike traditional and deep learning based approaches, INR-based methods optimize network parameters from a global perspective, resulting in superior compression potential. However, most current INR methods utilize a fixed and uniform network architecture across all frames, limiting their adaptability to dynamic variations within and between video sequences. This often leads to suboptimal compression outcomes as these methods struggle to capture the distinct nuances and transitions in video content. To overcome these challenges, we propose Content Adaptive Neural Representation for Video Compression (CANeRV), an innovative INR-based video compression network that adaptively conducts structure optimisation based on the specific content of each video sequence. To better capture dynamic information across video sequences, we propose a dynamic sequence-level adjustment (DSA). Furthermore, to enhance the capture of dynamics between frames within a sequence, we implement a dynamic frame-level adjustment (DFA). Finally, to effectively capture spatial structural information within video frames, thereby enhancing the detail restoration capabilities of CANeRV, we devise a structure-level hierarchical structural adaptation (HSA). Experimental results demonstrate that CANeRV can outperform both H.266/VVC and state-of-the-art INR-based video compression techniques across diverse video datasets.

Compressed Domain Prior-Guided Video Super-Resolution for Cloud Gaming Content

Jan 03, 2025

Abstract: Cloud gaming is an advanced form of Internet service that necessitates local terminals to decode within limited resources and time latency. Super-Resolution (SR) techniques are often employed on these terminals as an efficient way to reduce the required bit-rate bandwidth for cloud gaming. However, insufficient attention has been paid to SR of compressed game video content. Most SR networks amplify block artifacts and ringing effects in decoded frames while ignoring edge details of game content, leading to unsatisfactory reconstruction results. In this paper, we propose a novel lightweight network called Coding Prior-Guided Super-Resolution (CPGSR) to address the SR challenges in compressed game video content. First, we design a Compressed Domain Guided Block (CDGB) to extract features of different depths from coding priors, which are subsequently integrated with features from the U-net backbone. Then, a series of re-parameterization blocks are utilized for reconstruction. Ultimately, inspired by the quantization in video coding, we propose a partitioned focal frequency loss to effectively guide the model's focus on preserving high-frequency information. Extensive experiments demonstrate the advancement of our approach.

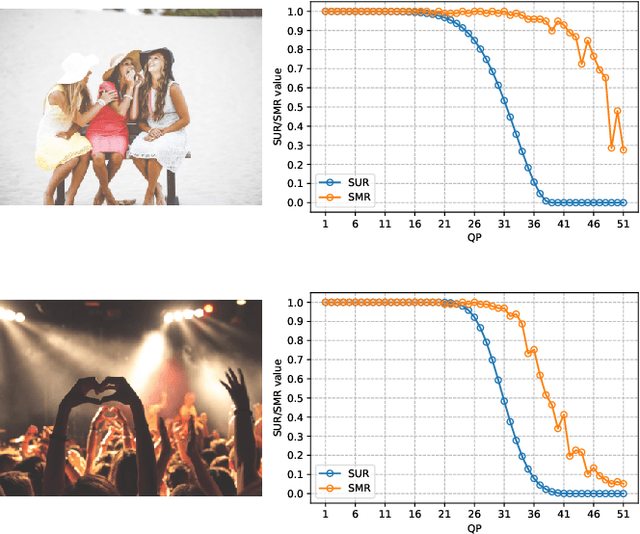

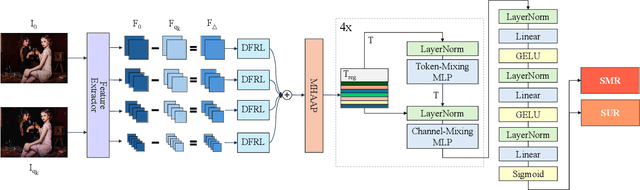

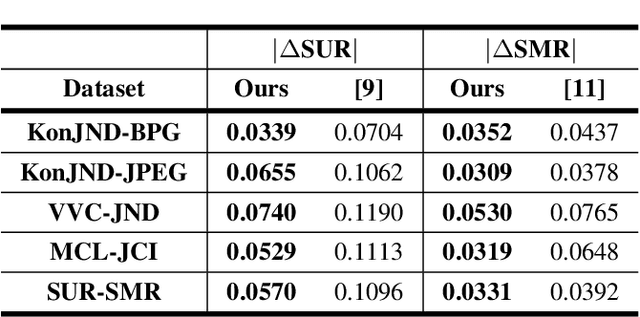

Predicting Satisfied User and Machine Ratio for Compressed Images: A Unified Approach

Dec 23, 2024

Abstract: Nowadays, high-quality images are pursued by both humans for better viewing experience and by machines for more accurate visual analysis. However, images are usually compressed before being consumed, decreasing their quality. It is meaningful to predict the perceptual quality of compressed images for both humans and machines, which guides the optimization for compression. In this paper, we propose a unified approach to address this. Specifically, we create a deep learning-based model to predict Satisfied User Ratio (SUR) and Satisfied Machine Ratio (SMR) of compressed images simultaneously. We first pre-train a feature extractor network on a large-scale SMR-annotated dataset with human perception-related quality labels generated by diverse image quality models, which simulates the acquisition of SUR labels. Then, we propose an MLP-Mixer-based network to predict SUR and SMR by leveraging and fusing the extracted multi-layer features. We introduce a Difference Feature Residual Learning (DFRL) module to learn more discriminative difference features. We further use a Multi-Head Attention Aggregation and Pooling (MHAAP) layer to aggregate difference features and reduce their redundancy. Experimental results indicate that the proposed model significantly outperforms state-of-the-art SUR and SMR prediction methods. Moreover, our joint learning scheme of human and machine perceptual quality prediction tasks is effective at improving the performance of both.
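
The abstract does not define SUR or SMR, so the following is only a rough sketch of the kind of label such a model would predict. It assumes, as an illustration, that each observer (a human subject or a vision model) has a tolerance threshold on a distortion scale and counts as satisfied while the compression level stays below it. The function name `satisfied_ratio`, the threshold values, and the strict comparison are all hypothetical choices.

```python
def satisfied_ratio(thresholds, level):
    """Fraction of observers whose tolerance threshold lies above `level`.

    thresholds: one hypothetical tolerance value per observer, on the same
    scale as `level` (for example a quantization parameter).
    """
    if not thresholds:
        raise ValueError("at least one observer is required")
    satisfied = sum(1 for t in thresholds if t > level)
    return satisfied / len(thresholds)


# Made-up thresholds for four observers, for illustration only.
observer_thresholds = [20, 25, 30, 35]
curve = [satisfied_ratio(observer_thresholds, q) for q in (15, 27, 40)]
print(curve)  # [1.0, 0.5, 0.0]
```

Under this assumption the ratio can only fall as compression gets stronger, so a predicted curve can be used to pick the strongest compression level that still satisfies a target share of observers.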
