Kexiang Feng

A Joint Visual Compression and Perception Framework for Neuralmorphic Spiking Camera

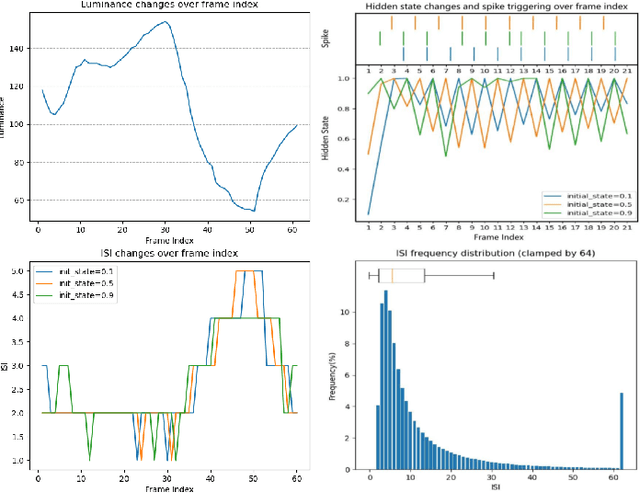

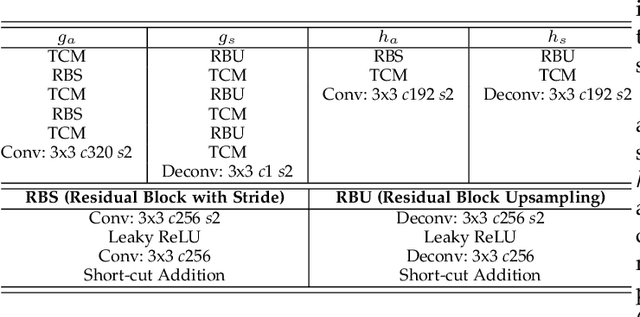

Mar 04, 2025

Abstract: The advent of neuromorphic spike cameras has garnered significant attention for their ability to capture continuous motion with unparalleled temporal resolution. However, this imaging attribute necessitates considerable resources for binary spike data storage and transmission. To serve both compression and spike-driven intelligent applications, we present the notion of Spike Coding for Intelligence (SCI), wherein spike sequences are compressed and optimized for both bit-rate and task performance. Drawing inspiration from the mammalian vision system, we propose a dual-pathway architecture that processes spatial semantics and motion information separately and then merges them to produce features for compression. A refinement scheme is also introduced to ensure consistency between decoded features and motion vectors. We further propose a temporal regression approach that integrates various motion dynamics, capitalizing on advances in both warping and deformation. Comprehensive experiments demonstrate that our scheme achieves state-of-the-art (SOTA) performance for spike compression and analysis. We achieve an average 17.25% BD-rate reduction compared to SOTA codecs and a 4.3% accuracy improvement over SpiReco for spike-based classification, with 88.26% complexity reduction and 42.41% inference time saving on the encoding side.

SpikeCodec: An End-to-end Learned Compression Framework for Spiking Camera

Jun 25, 2023

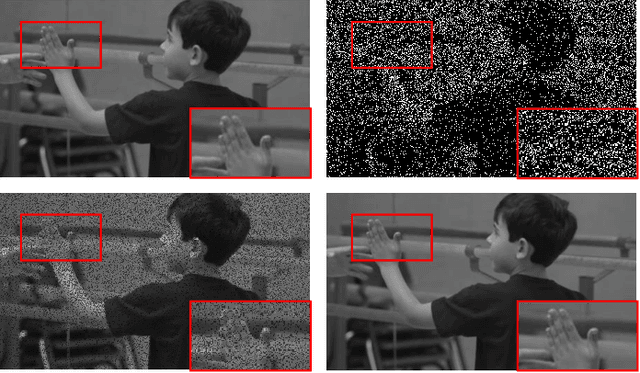

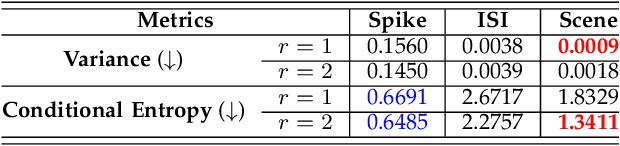

Abstract: Recently, the bio-inspired spike camera, which records continuous motion, has attracted tremendous attention for its ultra-high temporal resolution. This imaging characteristic imposes a far heavier data storage and transmission burden than a traditional camera does, making compression of spike-camera content both a severe challenge and an urgent need. Existing lossy data compression methods cannot compress spike streams efficiently because of the streams' integrate-and-fire characteristic and binarized data structure. Considering the imaging principle and information fidelity of spike cameras, we introduce an effective and robust representation of spike streams. Based on this representation, we propose a novel learned spike compression framework that combines scene recovery, a variational auto-encoder, and a spike simulator. To our knowledge, it is the first data-trained model for efficient and robust spike stream compression. Extensive experimental results show that our method outperforms conventional and learning-based codecs, contributing a strong baseline for learned spike data compression.
