Yingwen Zhang

LMVC: An End-to-End Learned Multiview Video Coding Framework

Sep 04, 2025

Abstract:Multiview video is a key data source for volumetric video, enabling immersive 3D scene reconstruction but posing significant challenges in storage and transmission due to its massive data volume. Recently, deep learning-based end-to-end video coding has achieved great success, yet most methods focus on single-view or stereo videos, leaving general multiview scenarios underexplored. This paper proposes an end-to-end learned multiview video coding (LMVC) framework that ensures random access and backward compatibility while enhancing compression efficiency. Our key innovation lies in effectively leveraging independent-view motion and content information to enhance dependent-view compression. Specifically, to exploit the inter-view motion correlation, we propose a feature-based inter-view motion vector prediction method that conditions dependent-view motion encoding on decoded independent-view motion features, along with an inter-view motion entropy model that learns inter-view motion priors. To exploit the inter-view content correlation, we propose a disparity-free inter-view context prediction module that predicts inter-view contexts from decoded independent-view content features, combined with an inter-view contextual entropy model that captures inter-view context priors. Experimental results show that our proposed LMVC framework outperforms the reference software of the traditional MV-HEVC standard by a large margin, establishing a strong baseline for future research in this field.

An Information-Theoretic Regularizer for Lossy Neural Image Compression

Nov 23, 2024

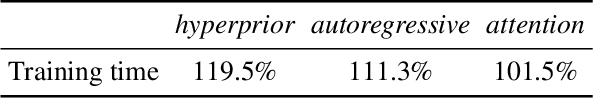

Abstract:Lossy image compression networks aim to minimize the latent entropy of images while adhering to specific distortion constraints. However, optimizing such a network can be challenging because it must learn quantized latent representations. In this paper, our key finding is that minimizing the latent entropy is, to some extent, equivalent to maximizing the conditional source entropy, an insight that is deeply rooted in information-theoretic equalities. Building on this insight, we propose a novel structural regularization method for the neural image compression task by incorporating the negative conditional source entropy into the training objective, such that both the optimization efficacy and the model's generalization ability can be promoted. The proposed information-theoretic regularizer is interpretable, plug-and-play, and imposes no inference overhead. Extensive experiments demonstrate its superiority in regularizing the models and further squeezing bits from the latent representation across various compression structures and unseen domains.
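The relation between latent entropy and conditional source entropy can be illustrated on a toy discrete source. The sketch below is not the paper's regularizer. It only checks the standard identity H(Y) = H(X) - H(X|Y), which holds when the latent Y is a deterministic function of the source X (so H(Y|X) = 0). With H(X) fixed, a smaller H(Y) then means a larger H(X|Y). The source distribution `p_x`, the uniform quantizer `quantize`, and the step sizes are all assumptions made for illustration.

```python
import math
from collections import defaultdict

def entropy(probs):
    """Shannon entropy in bits of a discrete distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical toy source over 8 symbols (probabilities sum to 1).
p_x = {0: 0.30, 1: 0.20, 2: 0.15, 3: 0.10, 4: 0.10, 5: 0.05, 6: 0.05, 7: 0.05}

def quantize(x, step):
    """Hypothetical deterministic quantizer: maps symbol x to a bin index."""
    return x // step

def entropies(p_x, step):
    """Return (H(X), H(Y), H(X|Y)) for Y = quantize(X, step)."""
    # Marginal distribution of the quantized latent Y.
    p_y = defaultdict(float)
    for x, p in p_x.items():
        p_y[quantize(x, step)] += p

    h_x = entropy(p_x.values())
    h_y = entropy(p_y.values())

    # H(X|Y) = sum over y of p(y) * H(X | Y = y).
    h_x_given_y = 0.0
    for y, py in p_y.items():
        cond = [p / py for x, p in p_x.items() if quantize(x, step) == y]
        h_x_given_y += py * entropy(cond)
    return h_x, h_y, h_x_given_y

if __name__ == "__main__":
    for step in (1, 2, 4, 8):
        h_x, h_y, h_x_given_y = entropies(p_x, step)
        print(f"step={step}: H(X)={h_x:.4f}  H(Y)={h_y:.4f}  H(X|Y)={h_x_given_y:.4f}")
```

Coarser quantization (a larger `step`) lowers H(Y) and raises H(X|Y) by the same amount, which is the trade-off the abstract refers to. How the paper estimates and optimizes the conditional source entropy in a neural codec is not covered by this sketch.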
