Yikang Li

Explicit Discovery of Nonlinear Symmetries from Dynamic Data

Oct 02, 2025

Abstract:Symmetry is widely applied in problems such as the design of equivariant networks and the discovery of governing equations, but in complex scenarios, it is not known in advance. Most previous symmetry discovery methods are limited to linear symmetries, and recent attempts to discover nonlinear symmetries fail to obtain the Lie algebra subspace explicitly. In this paper, we propose LieNLSD, which is, to our knowledge, the first method capable of determining the number of infinitesimal generators with nonlinear terms and their explicit expressions. We specify a function library for the infinitesimal group action and aim to solve for its coefficient matrix, proving that its prolongation formula for differential equations, which govern dynamic data, is also linear with respect to the coefficient matrix. By substituting the central differences of the data and the Jacobian matrix of the trained neural network into the infinitesimal criterion, we get a system of linear equations for the coefficient matrix, which can then be solved using SVD. On top quark tagging and a series of dynamic systems, LieNLSD shows qualitative advantages over existing methods and improves the long rollout accuracy of neural PDE solvers by over 20% when used to guide data augmentation. Code and data are available at https://github.com/hulx2002/LieNLSD.
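
The idea of turning the infinitesimal criterion into a linear system and reading the generators off an SVD can be illustrated with a much simpler toy than the one in the abstract. The sketch below is not LieNLSD: it assumes a first-order autonomous ODE x' = F(x), a purely linear library v(x) = W x, symmetries that do not transform time, and an analytic Jacobian in place of a trained network. Under those assumptions the criterion reduces to W F(x) = J_F(x) W x, which is linear in W. The function names are hypothetical.

```python
import numpy as np

def symmetry_constraints(points, vector_field, jacobian):
    """Stack the linear constraints W F(x) - J_F(x) W x = 0 over all samples."""
    n = points.shape[1]
    blocks = []
    for x in points:
        F = vector_field(x)
        J = jacobian(x)
        # With w = W.reshape(-1) (row-major):
        #   W F(x)     = kron(I, F^T) w
        #   J_F(x) W x = kron(J, x^T) w
        blocks.append(np.kron(np.eye(n), F[None, :]) - np.kron(J, x[None, :]))
    return np.vstack(blocks)

def null_space_generators(C, tol=1e-8):
    """Return matrices W spanning the numerical null space of C."""
    _, s, vt = np.linalg.svd(C)
    n = int(round(np.sqrt(C.shape[1])))
    rank = int(np.sum(s > tol * s[0]))  # singular values above the tolerance
    return [v.reshape(n, n) for v in vt[rank:]]

# Toy system (an assumption for illustration): harmonic oscillator x' = A x.
A = np.array([[0.0, 1.0], [-1.0, 0.0]])
rng = np.random.default_rng(0)
points = rng.normal(size=(20, 2))

C = symmetry_constraints(points, lambda x: A @ x, lambda x: A)
generators = null_space_generators(C)
print(len(generators))  # number of discovered generators
```

For this toy the null space is two-dimensional, spanned by the identity (scaling) and A itself (rotation); the number of near-zero singular values gives the number of generators, and the corresponding right singular vectors give their coefficients.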

Governing Equation Discovery from Data Based on Differential Invariants

May 24, 2025

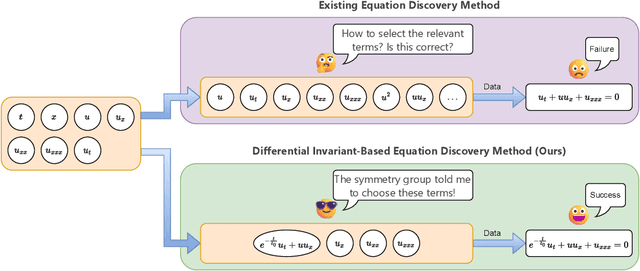

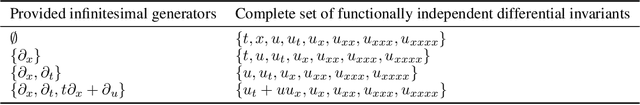

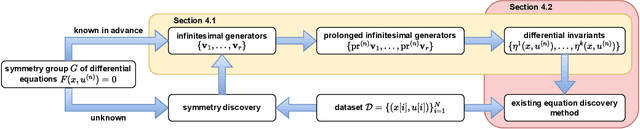

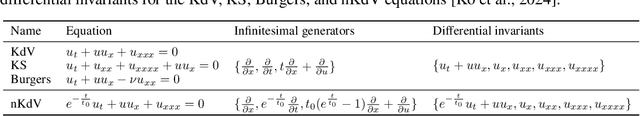

Abstract:The explicit governing equation is one of the simplest and most intuitive forms for characterizing physical laws. However, directly discovering partial differential equations (PDEs) from data poses significant challenges, primarily in determining relevant terms from a vast search space. Symmetry, as a crucial prior knowledge in scientific fields, has been widely applied in tasks such as designing equivariant networks and guiding neural PDE solvers. In this paper, we propose a pipeline for governing equation discovery based on differential invariants, which can losslessly reduce the search space of existing equation discovery methods while strictly adhering to symmetry. Specifically, we compute the set of differential invariants corresponding to the infinitesimal generators of the symmetry group and select them as the relevant terms for equation discovery. Taking DI-SINDy (SINDy based on Differential Invariants) as an example, we demonstrate that its success rate and accuracy in PDE discovery surpass those of other symmetry-informed governing equation discovery methods across a series of PDEs.
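
As a rough illustration of how restricting the candidate terms to invariants shrinks the search, the sketch below runs sequentially thresholded least squares (the regression step used by SINDy) on a hand-picked library. It is a toy under stated assumptions, not the DI-SINDy pipeline: the symmetry is assumed to be translation in x and t, whose differential invariants are u and its derivatives, so terms depending explicitly on x or t are simply left out. The data come from an analytic solution of the heat equation rather than from measurements, and all names are hypothetical.

```python
import numpy as np

def stlsq(theta, target, threshold=0.1, n_iter=10):
    """Sequentially thresholded least squares: fit, zero small terms, refit."""
    coef = np.linalg.lstsq(theta, target, rcond=None)[0]
    for _ in range(n_iter):
        small = np.abs(coef) < threshold
        coef[small] = 0.0
        big = ~small
        if not big.any():
            break
        coef[big] = np.linalg.lstsq(theta[:, big], target, rcond=None)[0]
    return coef

# Analytic two-mode solution of u_t = u_xx, sampled on a grid.
x, t = np.meshgrid(np.linspace(0.0, 2.0 * np.pi, 40), np.linspace(0.0, 1.0, 20))
x, t = x.ravel(), t.ravel()
u = np.exp(-t) * np.sin(x) + np.exp(-4 * t) * np.sin(2 * x)
u_x = np.exp(-t) * np.cos(x) + 2 * np.exp(-4 * t) * np.cos(2 * x)
u_xx = -np.exp(-t) * np.sin(x) - 4 * np.exp(-4 * t) * np.sin(2 * x)
u_t = -np.exp(-t) * np.sin(x) - 4 * np.exp(-4 * t) * np.sin(2 * x)

# Library built only from translation invariants (no explicit x or t terms).
names = ["u", "u_x", "u_xx", "u*u_x"]
theta = np.column_stack([u, u_x, u_xx, u * u_x])

coef = stlsq(theta, u_t)
for name, c in zip(names, coef):
    print(f"{name}: {c:.3f}")
```

Only the `u_xx` coefficient survives the thresholding here. For a richer symmetry group the invariant library would be computed from the infinitesimal generators rather than written by hand.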

High-Rank Irreducible Cartesian Tensor Decomposition and Bases of Equivariant Spaces

Dec 30, 2024

Abstract:Irreducible Cartesian tensors (ICTs) play a crucial role in the design of equivariant graph neural networks, as well as in theoretical chemistry and chemical physics. Meanwhile, the design space of available linear operations on tensors that preserve symmetry presents a significant challenge. The ICT decomposition and a basis of this equivariant space are difficult to obtain for high-order tensors. After decades of research, an explicit ICT decomposition for $n=5$ was only recently achieved \citep{bonvicini2024irreducible}, with factorial time/space complexity. This work, for the first time, obtains decomposition matrices for ICTs up to rank $n=9$ with reduced and affordable complexity, by constructing what we call path matrices. The path matrices are obtained by performing chain-like contraction with Clebsch-Gordan matrices following the parentage scheme. We prove and leverage that the concatenation of path matrices is an orthonormal change-of-basis matrix between the Cartesian tensor product space and the spherical direct sum spaces. Furthermore, we identify a complete orthogonal basis for the equivariant space, rather than a spanning set \citep{pearce2023brauer}, through this path matrices technique. We further extend our result to the arbitrary tensor product and direct sum spaces, enabling free design between different spaces while keeping symmetry. The Python code is available at https://github.com/ShihaoShao-GH/ICT-decomposition-and-equivariant-bases where the $n=6,\dots,9$ ICT decomposition matrices are obtained in 1s, 3s, 11s, and 4m32s, respectively.
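
For orientation, the lowest nontrivial case of an ICT decomposition is the textbook rank-2 one: a 3x3 tensor splits into an isotropic part (1 component), an antisymmetric part (3 components), and a symmetric traceless part (5 components). The sketch below shows only this $n=2$ case and checks that each part rotates independently; it does not use path matrices or Clebsch-Gordan contractions, and the function name is hypothetical.

```python
import numpy as np

def decompose_rank2(T):
    """Split a 3x3 tensor into isotropic, antisymmetric, symmetric-traceless parts."""
    iso = np.trace(T) / 3.0 * np.eye(3)
    antisym = 0.5 * (T - T.T)
    sym_traceless = 0.5 * (T + T.T) - iso
    return iso, antisym, sym_traceless

def rotation(angle_z, angle_x):
    """Rotation matrix built from a rotation about z followed by one about x."""
    cz, sz = np.cos(angle_z), np.sin(angle_z)
    cx, sx = np.cos(angle_x), np.sin(angle_x)
    Rz = np.array([[cz, -sz, 0.0], [sz, cz, 0.0], [0.0, 0.0, 1.0]])
    Rx = np.array([[1.0, 0.0, 0.0], [0.0, cx, -sx], [0.0, sx, cx]])
    return Rx @ Rz

rng = np.random.default_rng(0)
T = rng.normal(size=(3, 3))
R = rotation(0.7, 1.1)

parts = decompose_rank2(T)
rotated_parts = decompose_rank2(R @ T @ R.T)

# The parts sum back to T, and decomposing commutes with rotating.
print(np.allclose(sum(parts), T))
print(all(np.allclose(R @ p @ R.T, q) for p, q in zip(parts, rotated_parts)))
```

Each part lives in its own rotation-invariant subspace, which is the property that makes such decompositions useful for building equivariant layers.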

Free the Design Space of Equivariant Graph Neural Networks: High-Rank Irreducible Cartesian Tensor Decomposition and Bases of Equivariant Spaces

Dec 24, 2024

Abstract:Irreducible Cartesian tensors (ICTs) play a crucial role in the design of equivariant graph neural networks, as well as in theoretical chemistry and chemical physics. Meanwhile, the design space of available linear operations on tensors that preserve symmetry presents a significant challenge. The ICT decomposition and a basis of this equivariant space are difficult to obtain for high-order tensors. After decades of research, an explicit ICT decomposition for $n=5$ was only recently achieved \citep{bonvicini2024irreducible}, with factorial time/space complexity. This work, for the first time, obtains decomposition matrices for ICTs up to rank $n=9$ with reduced and affordable complexity, by constructing what we call path matrices. The path matrices are obtained by performing chain-like contraction with Clebsch-Gordan matrices following the parentage scheme. We prove and leverage that the concatenation of path matrices is an orthonormal change-of-basis matrix between the Cartesian tensor product space and the spherical direct sum spaces. Furthermore, we identify a complete orthogonal basis for the equivariant space, rather than a spanning set \citep{pearce2023brauer}, through this path matrices technique. We further extend our result to the arbitrary tensor product and direct sum spaces, enabling free design between different spaces while keeping symmetry. The Python code is available in the appendix where the $n=6,\dots,9$ ICT decomposition matrices are obtained in <0.1s, 0.5s, 1s, 3s, 11s, and 4m32s, respectively.

Symmetry Discovery for Different Data Types

Oct 13, 2024

Abstract:Equivariant neural networks incorporate symmetries into their architecture, achieving higher generalization performance. However, constructing equivariant neural networks typically requires prior knowledge of data types and symmetries, which is difficult to obtain in most tasks. In this paper, we propose LieSD, a method for discovering symmetries via trained neural networks which approximate the input-output mappings of the tasks. It characterizes equivariance and invariance (a special case of equivariance) of continuous groups using Lie algebra and directly solves the Lie algebra space through the inputs, outputs, and gradients of the trained neural network. Then, we extend the method to make it applicable to multi-channel data and tensor data, respectively. We validate the performance of LieSD on tasks with symmetries such as the two-body problem, the moment of inertia matrix prediction, and top quark tagging. Compared with the baseline, LieSD can accurately determine the number of Lie algebra bases without the need for expensive group sampling. Furthermore, LieSD can perform well on non-uniform datasets, whereas methods based on GANs fail.
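
The invariance case can be made concrete with a small example. If a scalar function f is invariant under the one-parameter group generated by a matrix A, then ∇f(x)ᵀ A x = 0 at every input x, which is linear in the entries of A. The sketch below is an illustration under simplifying assumptions rather than the LieSD implementation: f(x) = |x|² in three dimensions stands in for a trained network, and its gradient is analytic. All names are hypothetical.

```python
import numpy as np

def invariance_constraints(points, grad_fn):
    """One row per sample: grad f(x)^T A x = <vec(grad x^T), vec(A)> = 0."""
    return np.array([np.outer(grad_fn(x), x).reshape(-1) for x in points])

def lie_algebra_basis(C, dim, tol=1e-8):
    """Right singular vectors of C with (near-)zero singular value, as matrices."""
    _, s, vt = np.linalg.svd(C)
    rank = int(np.sum(s > tol * s[0]))
    return [v.reshape(dim, dim) for v in vt[rank:]]

# Stand-in for a trained network: f(x) = |x|^2, whose gradient is 2x.
grad_f = lambda x: 2.0 * x

rng = np.random.default_rng(0)
points = rng.normal(size=(50, 3))

C = invariance_constraints(points, grad_f)
basis = lie_algebra_basis(C, dim=3)
print(len(basis))  # number of Lie algebra basis elements found
```

Here the null space consists of the 3x3 antisymmetric matrices, i.e. the generators of rotations, and its dimension is read directly from the singular values without sampling group elements.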

Optimizing the Placement of Roadside LiDARs for Autonomous Driving

Oct 11, 2023

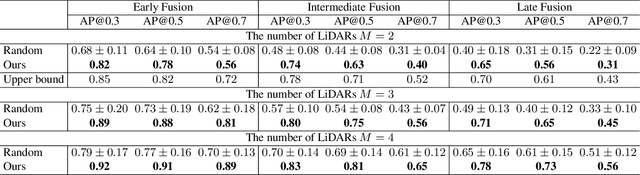

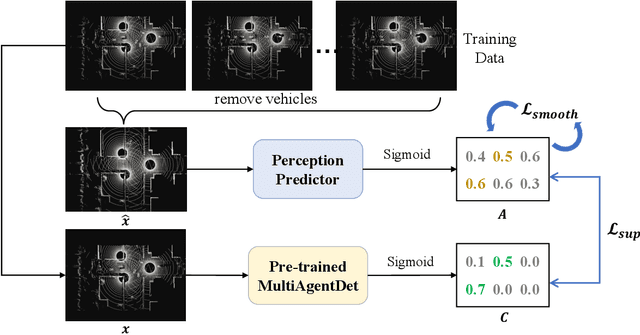

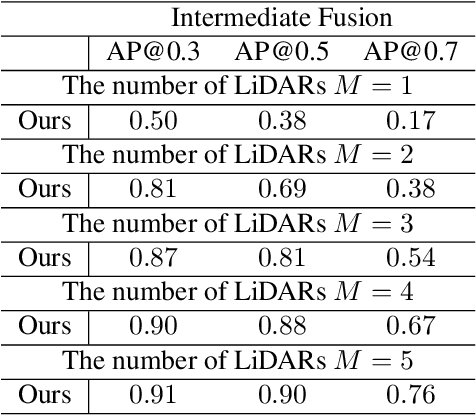

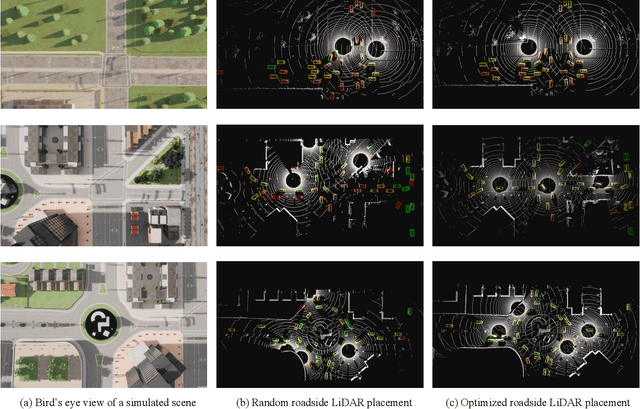

Abstract:Multi-agent cooperative perception is an increasingly popular topic in the field of autonomous driving, where roadside LiDARs play an essential role. However, how to optimize the placement of roadside LiDARs is a crucial but often overlooked problem. This paper proposes an approach to optimize the placement of roadside LiDARs by selecting optimized positions within the scene for better perception performance. To efficiently obtain the best combination of locations, a greedy algorithm based on perceptual gain is proposed, which sequentially selects the location that maximizes the perceptual gain. We define perceptual gain as the increase in perceptual capability when a new LiDAR is placed. To obtain the perception capability, we propose a perception predictor that learns to evaluate LiDAR placement using only a single point cloud frame. A dataset named Roadside-Opt is created using the CARLA simulator to facilitate research on the roadside LiDAR placement problem.
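
The greedy selection described above can be sketched in a few lines. In this toy version the learned perception predictor is replaced by a hypothetical coverage score (the number of newly covered grid cells), and the candidate names and coverage sets are invented for illustration.

```python
def greedy_placement(candidates, budget, gain):
    """Pick up to `budget` candidates, each time taking the largest marginal gain."""
    chosen = []
    remaining = set(candidates)
    for _ in range(budget):
        if not remaining:
            break
        # sorted() makes tie-breaking deterministic.
        best = max(sorted(remaining), key=lambda c: gain(chosen, c))
        if gain(chosen, best) <= 0:
            break  # no candidate improves perception any further
        chosen.append(best)
        remaining.remove(best)
    return chosen

# Hypothetical stand-in for the perception predictor: cells covered per location.
coverage = {
    "A": {1, 2, 3, 4},
    "B": {3, 4, 5},
    "C": {5, 6},
    "D": {1, 2},
}

def coverage_gain(chosen, candidate):
    """Perceptual gain modeled as the number of newly covered cells."""
    covered = set().union(*(coverage[c] for c in chosen)) if chosen else set()
    return len(coverage[candidate] - covered)

placement = greedy_placement(coverage, budget=2, gain=coverage_gain)
print(placement)  # ['A', 'C']
```

After "A" is placed, "B" adds only one new cell while "C" adds two, so the second pick differs from what ranking locations independently would give. That dependence on earlier picks is the point of evaluating gain sequentially.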

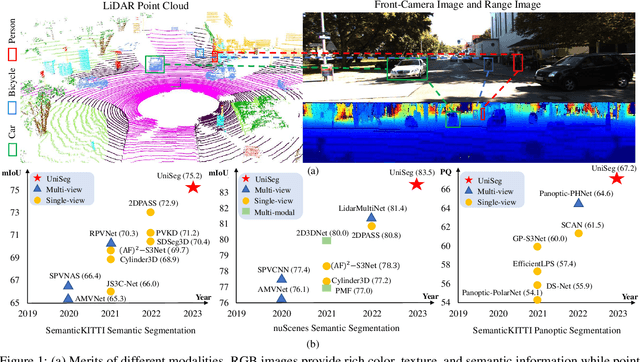

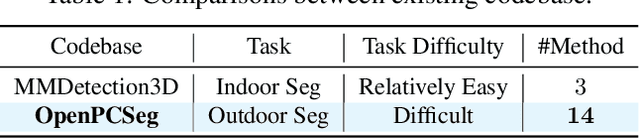

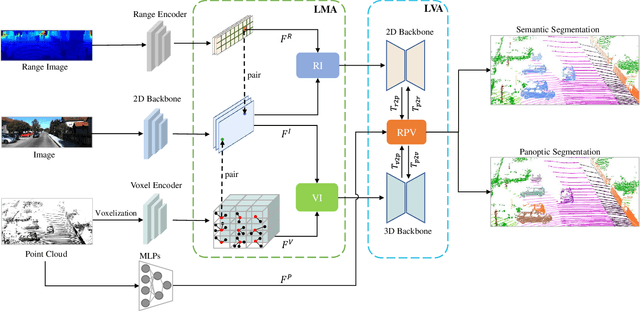

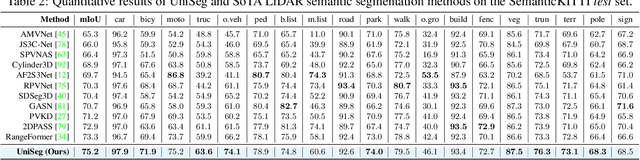

UniSeg: A Unified Multi-Modal LiDAR Segmentation Network and the OpenPCSeg Codebase

Sep 11, 2023

Abstract:Point-, voxel-, and range-views are three representative forms of point clouds. All of them have accurate 3D measurements but lack color and texture information. RGB images are a natural complement to these point cloud views, and fully exploiting their combined information enables more robust perception. In this paper, we present a unified multi-modal LiDAR segmentation network, termed UniSeg, which leverages the information of RGB images and three views of the point cloud, and accomplishes semantic segmentation and panoptic segmentation simultaneously. Specifically, we first design the Learnable cross-Modal Association (LMA) module to automatically fuse voxel-view and range-view features with image features, which fully utilizes the rich semantic information of images and is robust to calibration errors. Then, the enhanced voxel-view and range-view features are transformed to the point space, where three views of point cloud features are further fused adaptively by the Learnable cross-View Association module (LVA). Notably, UniSeg achieves promising results in three public benchmarks, i.e., SemanticKITTI, nuScenes, and Waymo Open Dataset (WOD); it ranks 1st on two challenges of two benchmarks, including the LiDAR semantic segmentation challenge of nuScenes and panoptic segmentation challenges of SemanticKITTI. Besides, we construct the OpenPCSeg codebase, which is the largest and most comprehensive outdoor LiDAR segmentation codebase. It contains most of the popular outdoor LiDAR segmentation algorithms and provides reproducible implementations. The OpenPCSeg codebase will be made publicly available at https://github.com/PJLab-ADG/PCSeg.

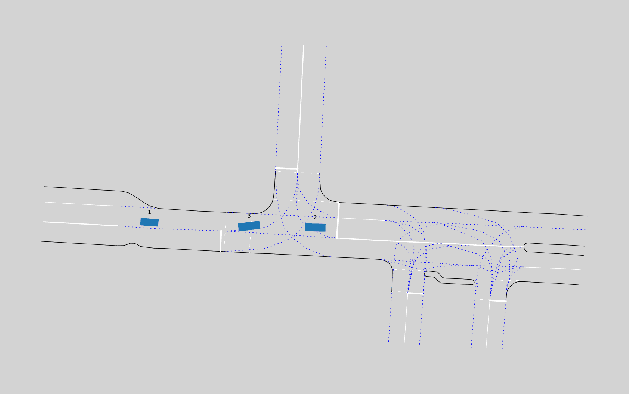

LimSim: A Long-term Interactive Multi-scenario Traffic Simulator

Jul 26, 2023

Abstract:With the growing popularity of digital twin and autonomous driving in transportation, the demand for simulation systems capable of generating high-fidelity and reliable scenarios is increasing. Existing simulation systems suffer from a lack of support for different types of scenarios, and the vehicle models used in these systems are too simplistic. Thus, such systems fail to represent driving styles and multi-vehicle interactions, and struggle to handle corner cases in the dataset. In this paper, we propose LimSim, the Long-term Interactive Multi-scenario traffic Simulator, which aims to provide a long-term continuous simulation capability under the urban road network. LimSim can simulate fine-grained dynamic scenarios and focus on the diverse interactions between multiple vehicles in the traffic flow. This paper provides a detailed introduction to the framework and features of LimSim, and demonstrates its performance through case studies and experiments. LimSim is now open source on GitHub: https://www.github.com/PJLab-ADG/LimSim.

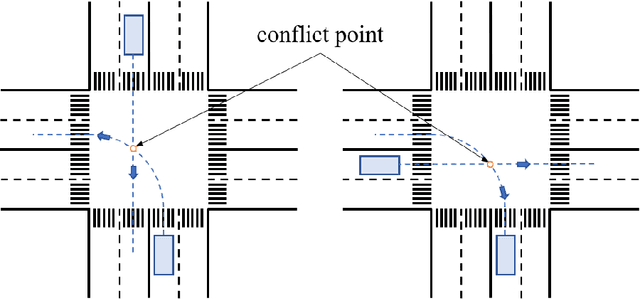

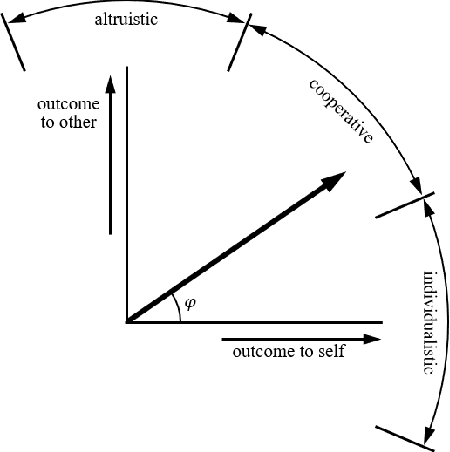

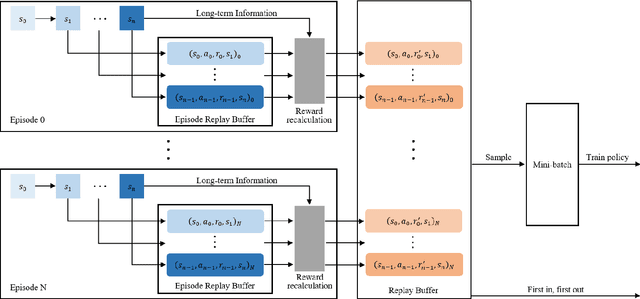

Human-like Decision-making at Unsignalized Intersection using Social Value Orientation

Jun 30, 2023

Abstract:With the commercial application of automated vehicles (AVs), the sharing of roads between AVs and human-driven vehicles (HVs) will become a common occurrence. While research has focused on improving the safety and reliability of autonomous driving, it is also crucial to consider collaboration between AVs and HVs. Human-like interaction is a required capability for AVs, especially at common unsignalized intersections, as human drivers of HVs expect to maintain their driving habits for inter-vehicle interactions. This paper uses the social value orientation (SVO) in the decision-making of vehicles to describe the social interaction among multiple vehicles. Specifically, we define the quantitative calculation of the conflict-involved SVO at unsignalized intersections to enhance decision-making based on the reinforcement learning method. We use naturalistic driving scenarios with highly interactive motions for performance evaluation of the proposed method. Experimental results show that SVO is more effective in characterizing inter-vehicle interactions than conventional motion state parameters like velocity, and the proposed method reproduces naturalistic driving trajectories more accurately than behavior cloning.
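
For readers unfamiliar with SVO, the commonly used angular form weights an agent's own reward against another agent's reward by a single angle. The sketch below shows that generic form only; it is an assumption for illustration and not the conflict-involved SVO defined in the paper. The function names are hypothetical.

```python
import math

def svo_utility(reward_self, reward_other, svo_angle):
    """Generic SVO-weighted utility: cos(angle) * own reward + sin(angle) * other's reward."""
    return math.cos(svo_angle) * reward_self + math.sin(svo_angle) * reward_other

def svo_angle_from_rewards(reward_self, reward_other):
    """SVO angle implied by a chosen allocation of rewards (ring-measure style)."""
    return math.atan2(reward_other, reward_self)

# An angle of 0 is purely egoistic; pi/4 weights both vehicles equally.
egoistic = svo_utility(1.0, 2.0, 0.0)
prosocial = svo_utility(1.0, 2.0, math.pi / 4)
print(egoistic, prosocial)
```

In a reinforcement learning setting, such a utility could serve as the reward signal, with the angle estimated from observed interactions; how the paper quantifies it at intersections is not specified in the abstract.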

DetZero: Rethinking Offboard 3D Object Detection with Long-term Sequential Point Clouds

Jun 09, 2023

Abstract:Existing offboard 3D detectors always follow a modular pipeline design to take advantage of unlimited sequential point clouds. We have found that the full potential of offboard 3D detectors is not explored mainly due to two reasons: (1) the onboard multi-object tracker cannot generate sufficiently complete object trajectories, and (2) the motion state of objects poses an inevitable challenge for the object-centric refining stage in leveraging the long-term temporal context representation. To tackle these problems, we propose a novel paradigm of offboard 3D object detection, named DetZero. Concretely, an offline tracker coupled with a multi-frame detector is proposed to focus on the completeness of generated object tracks. An attention-mechanism refining module is proposed to strengthen contextual information interaction across long-term sequential point clouds for object refining with decomposed regression methods. Extensive experiments on Waymo Open Dataset show our DetZero outperforms all state-of-the-art onboard and offboard 3D detection methods. Notably, DetZero ranks 1st on the Waymo 3D object detection leaderboard with 85.15 mAPH (L2) detection performance. Further experiments validate that such high-quality results can take the place of human labels. Our empirical study leads to rethinking conventions and interesting findings that can guide future research on offboard 3D object detection.
