Yang Luo

FOCUS: Efficient Keyframe Selection for Long Video Understanding

Oct 31, 2025

Abstract:Multimodal large language models (MLLMs) represent images and video frames as visual tokens. Scaling from single images to hour-long videos, however, inflates the token budget far beyond practical limits. Popular pipelines therefore either uniformly subsample or apply keyframe selection with retrieval-style scoring using smaller vision-language models. However, these keyframe selection methods still rely on pre-filtering before selection to reduce the inference cost and can miss the most informative moments. We propose FOCUS, Frame-Optimistic Confidence Upper-bound Selection, a training-free, model-agnostic keyframe selection module that selects query-relevant frames under a strict token budget. FOCUS formulates keyframe selection as a combinatorial pure-exploration (CPE) problem in multi-armed bandits: it treats short temporal clips as arms, and uses empirical means and Bernstein confidence radii to identify informative regions while preserving exploration of uncertain areas. The resulting two-stage exploration-exploitation procedure is reduced from a sequential policy with theoretical guarantees, first identifying high-value temporal regions, then selecting top-scoring frames within each region. On two long-video question-answering benchmarks, FOCUS delivers substantial accuracy improvements while processing less than 2% of video frames. For videos longer than 20 minutes, it achieves an 11.9% gain in accuracy on LongVideoBench, demonstrating its effectiveness as a keyframe selection method and providing a simple and general solution for scalable long-video understanding with MLLMs.
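
The two-stage idea in the FOCUS abstract (rank clips by an optimistic score, then take the best frames inside the chosen clips) can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the probe count, and the constants in the radius are assumptions, and the radius uses one common textbook form of the empirical Bernstein bound for scores in a known range. Per-frame relevance scores are passed in precomputed for simplicity, whereas a real pipeline would only score the frames it probes.

```python
import math
import random


def bernstein_radius(values, delta=0.05, value_range=1.0):
    """Empirical Bernstein confidence radius (one common form; constants are an assumption)."""
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / n
    log_term = math.log(3.0 / delta)
    return math.sqrt(2.0 * var * log_term / n) + 3.0 * value_range * log_term / n


def select_keyframes(scores_by_clip, n_clips_keep, frames_per_clip,
                     probes_per_clip=4, seed=0):
    """Hypothetical two-stage selection over clips ("arms").

    scores_by_clip: list of lists of per-frame relevance scores in [0, 1].
    Returns a list of (clip_index, frame_index) pairs.
    """
    rng = random.Random(seed)

    # Stage 1: probe a few frames per clip and rank clips by mean + radius.
    ucb = []
    for clip_id, scores in enumerate(scores_by_clip):
        k = min(probes_per_clip, len(scores))
        sampled = [scores[i] for i in rng.sample(range(len(scores)), k)]
        mean = sum(sampled) / len(sampled)
        ucb.append((mean + bernstein_radius(sampled), clip_id))
    top_clips = sorted(c for _, c in sorted(ucb, reverse=True)[:n_clips_keep])

    # Stage 2: keep the top-scoring frames inside each selected clip.
    selected = []
    for c in top_clips:
        scores = scores_by_clip[c]
        best = sorted(range(len(scores)), key=lambda i: scores[i],
                      reverse=True)[:frames_per_clip]
        selected.extend((c, i) for i in sorted(best))
    return selected
```

The radius shrinks as a clip is probed more often and grows with the observed score variance, so clips that are either promising or still uncertain stay in contention.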

SGS-3D: High-Fidelity 3D Instance Segmentation via Reliable Semantic Mask Splitting and Growing

Sep 05, 2025

Abstract:Accurate 3D instance segmentation is crucial for high-quality scene understanding in the 3D vision domain. However, 3D instance segmentation based on 2D-to-3D lifting struggles to produce precise instance-level segmentation, due to accumulated errors introduced during the lifting process from ambiguous semantic guidance and insufficient depth constraints. To tackle these challenges, we propose splitting and growing reliable semantic masks for high-fidelity 3D instance segmentation (SGS-3D), a novel "split-then-grow" framework that first purifies and splits ambiguous lifted masks using geometric primitives, and then grows them into complete instances within the scene. Unlike existing approaches that directly rely on raw lifted masks and sacrifice segmentation accuracy, SGS-3D serves as a training-free refinement method that jointly fuses semantic and geometric information, enabling effective cooperation between the two levels of representation. Specifically, for semantic guidance, we introduce a mask filtering strategy that leverages the co-occurrence of 3D geometry primitives to identify and remove ambiguous masks, thereby ensuring more reliable semantic consistency with the 3D object instances. For the geometric refinement, we construct fine-grained object instances by exploiting both spatial continuity and high-level features, particularly in the case of semantic ambiguity between distinct objects. Experimental results on ScanNet200, ScanNet++, and KITTI-360 demonstrate that SGS-3D substantially improves segmentation accuracy and robustness against inaccurate masks from pre-trained models, yielding high-fidelity object instances while maintaining strong generalization across diverse indoor and outdoor environments. Code is available in the supplementary materials.

PAF-Net: Phase-Aligned Frequency Decoupling Network for Multi-Process Manufacturing Quality Prediction

Jul 30, 2025

Abstract:Accurate quality prediction in multi-process manufacturing is critical for industrial efficiency but hindered by three core challenges: time-lagged process interactions, overlapping operations with mixed periodicity, and inter-process dependencies in shared frequency bands. To address these, we propose PAF-Net, a frequency-decoupled time series prediction framework with three key innovations: (1) A phase-correlation alignment method guided by frequency-domain energy to synchronize time-lagged quality series, resolving temporal misalignment. (2) A frequency-independent patch attention mechanism paired with Discrete Cosine Transform (DCT) decomposition to capture heterogeneous operational features within individual series. (3) A frequency-decoupled cross-attention module that suppresses noise from irrelevant frequencies, focusing exclusively on meaningful dependencies within shared bands. Experiments on 4 real-world datasets demonstrate PAF-Net's superiority. It outperforms 10 well-established baselines, with 7.06% lower MSE and 3.88% lower MAE. Our code is available at https://github.com/StevenLuan904/PAF-Net-Official.

PrefixAgent: An LLM-Powered Design Framework for Efficient Prefix Adder Optimization

Jul 08, 2025

Abstract:Prefix adders are fundamental arithmetic circuits, but their design space grows exponentially with bit-width, posing significant optimization challenges. Previous works face limitations in performance, generalization, and scalability. To address these challenges, we propose PrefixAgent, a large language model (LLM)-powered framework that enables efficient prefix adder optimization. Specifically, PrefixAgent reformulates the problem into subtasks including backbone synthesis and structure refinement, which effectively reduces the search space. More importantly, this new design perspective enables us to efficiently collect large amounts of high-quality data and reasoning traces with E-graph, which in turn enables effective fine-tuning of the LLM. Experimental results show that PrefixAgent synthesizes prefix adders with consistently smaller areas compared to baseline methods, while maintaining scalability and generalization in commercial EDA flows.

Widely Linear Augmented Extreme Learning Machine Based Impairments Compensation for Satellite Communications

Jun 17, 2025

Abstract:Satellite communications are crucial for the evolution beyond fifth-generation networks. However, the dynamic nature of satellite channels and their inherent impairments present significant challenges. In this paper, a novel post-compensation scheme that combines the complex-valued extreme learning machine with augmented hidden layer (CELMAH) architecture and widely linear processing (WLP) is developed to address these issues by exploiting signal impropriety in satellite communications. Although CELMAH shares structural similarities with WLP, it employs a different core algorithm and does not fully exploit the signal impropriety. By incorporating WLP principles, we derive a tailored formulation suited to the network structure and propose the CELM augmented by widely linear least squares (CELM-WLLS) for post-distortion. The proposed approach offers enhanced communication robustness and is highly effective for satellite communication scenarios characterized by dynamic channel conditions and non-linear impairments. CELM-WLLS is designed to improve signal recovery performance and outperform traditional methods such as least squares (LS) and minimum mean square error (MMSE). Compared to CELMAH, CELM-WLLS demonstrates approximately 0.8 dB gain in BER performance, and also achieves a two-thirds reduction in computational complexity, making it a more efficient solution.
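
As a generic illustration of widely linear least squares (not the CELM-WLLS formulation from the paper), the sketch below fits a scalar model y ≈ a·x + b·conj(x) by solving the 2×2 normal equations of the augmented regressor [x, conj(x)]. The function name and the scalar setting are assumptions made for illustration; a strictly linear fit would use only the first column and could not capture the conj(x) term that improper signals carry.

```python
def widely_linear_fit(x, y):
    """Least-squares fit of y ~ a*x + b*conj(x) for complex sequences x and y.

    Solves the normal equations (A^H A) w = A^H y with A = [x, conj(x)]
    using Cramer's rule. Assumes the two columns are not collinear
    (for example, x must not be purely real).
    """
    g11 = sum(abs(v) ** 2 for v in x)                    # x^H x
    g12 = sum(v.conjugate() * v.conjugate() for v in x)  # x^H conj(x)
    g21 = g12.conjugate()                                # conj(x)^H x
    g22 = g11                                            # conj(x)^H conj(x)
    r1 = sum(v.conjugate() * t for v, t in zip(x, y))    # x^H y
    r2 = sum(v * t for v, t in zip(x, y))                # conj(x)^H y

    det = g11 * g22 - g12 * g21
    if abs(det) < 1e-12:
        raise ValueError("x and conj(x) are collinear; the fit is not unique")
    a = (r1 * g22 - g12 * r2) / det
    b = (g11 * r2 - g21 * r1) / det
    return a, b
```

For example, if `y` is generated as `a*x + b*conj(x)` with a nonzero `b`, the fit recovers both coefficients up to floating-point error.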

Info-Coevolution: An Efficient Framework for Data Model Coevolution

Jun 09, 2025

Abstract:Machine learning relies heavily on data, yet the continuous growth of real-world data poses challenges for efficient dataset construction and training. A fundamental yet unsolved question is: given our current model and data, does new data (a sample or batch) need annotation or learning? Conventional approaches retain all available data, leading to non-optimal data and training efficiency. Active learning aims to reduce data redundancy by selecting a subset of samples to annotate, but it increases pipeline complexity and introduces bias. In this work, we propose Info-Coevolution, a novel framework that efficiently enables models and data to coevolve through online selective annotation with no bias. Leveraging task-specific models (and open-source models), it selectively annotates and integrates online and web data to improve datasets efficiently. For real-world datasets like ImageNet-1K, Info-Coevolution reduces annotation and training costs by 32% without performance loss. It determines the saving ratio automatically, without tuning. It can further reduce the annotation ratio to 50% with semi-supervised learning. We also explore retrieval-based dataset enhancement using unlabeled open-source data. Code is available at https://github.com/NUS-HPC-AI-Lab/Info-Coevolution/.

Enhance-A-Video: Better Generated Video for Free

Feb 11, 2025

Abstract:DiT-based video generation has achieved remarkable results, but enhancing existing models remains relatively unexplored. In this work, we introduce a training-free approach to enhance the coherence and quality of DiT-based generated videos, named Enhance-A-Video. The core idea is enhancing the cross-frame correlations based on non-diagonal temporal attention distributions. Thanks to its simple design, our approach can be easily applied to most DiT-based video generation frameworks without any retraining or fine-tuning. Across various DiT-based video generation models, our approach demonstrates promising improvements in both temporal consistency and visual quality. We hope this research can inspire future explorations in video generation enhancement.
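
To make "non-diagonal temporal attention" concrete, here is a minimal sketch that measures how much attention a frame-by-frame temporal attention map places on other frames (the off-diagonal entries) and turns that into a scale factor. The function names and the scaling rule in `enhancement_scale` are assumptions for illustration only; the abstract does not specify how the measured correlation is applied.

```python
def cross_frame_intensity(attn):
    """Mean of the off-diagonal entries of a square temporal attention map.

    attn[i][j] is the attention weight frame i places on frame j.
    """
    n = len(attn)
    if n < 2:
        raise ValueError("need at least two frames")
    off_diag = [attn[i][j] for i in range(n) for j in range(n) if i != j]
    return sum(off_diag) / len(off_diag)


def enhancement_scale(attn, temperature=1.0):
    """Hypothetical scale factor: never below 1, larger when frames attend to each other."""
    return max(1.0, temperature * len(attn) * cross_frame_intensity(attn))
```

A map that is purely diagonal (each frame attends only to itself) yields an intensity of zero and a scale of 1, so in this sketch nothing is amplified when there is no cross-frame attention.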

PICBench: Benchmarking LLMs for Photonic Integrated Circuits Design

Feb 05, 2025

Abstract:While large language models (LLMs) have shown remarkable potential in automating various tasks in digital chip design, the field of Photonic Integrated Circuits (PICs), a promising solution to advanced chip designs, remains relatively unexplored in this context. The design of PICs is time-consuming and prone to errors due to the extensive and repetitive nature of code involved in photonic chip design. In this paper, we introduce PICBench, the first benchmarking and evaluation framework specifically designed to automate PIC design generation using LLMs, where the generated output takes the form of a netlist. Our benchmark consists of dozens of meticulously crafted PIC design problems, spanning from fundamental device designs to more complex circuit-level designs. It automatically evaluates both the syntax and functionality of generated PIC designs by comparing simulation outputs with expert-written solutions, leveraging an open-source simulator. We evaluate a range of existing LLMs, while also conducting comparative tests on various prompt engineering techniques to enhance LLM performance in automated PIC design. The results reveal the challenges and potential of LLMs in the PIC design domain, offering insights into the key areas that require further research and development to optimize automation in this field. Our benchmark and evaluation code is available at https://github.com/PICDA/PICBench.

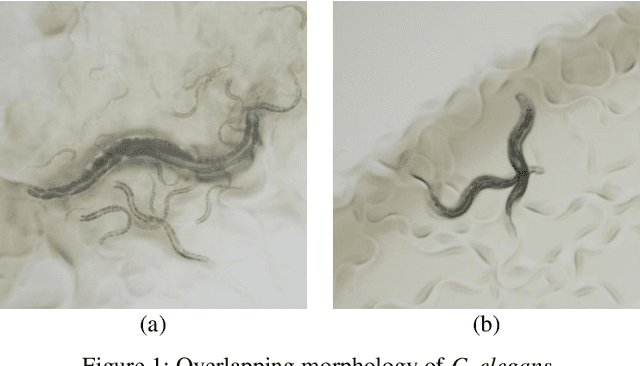

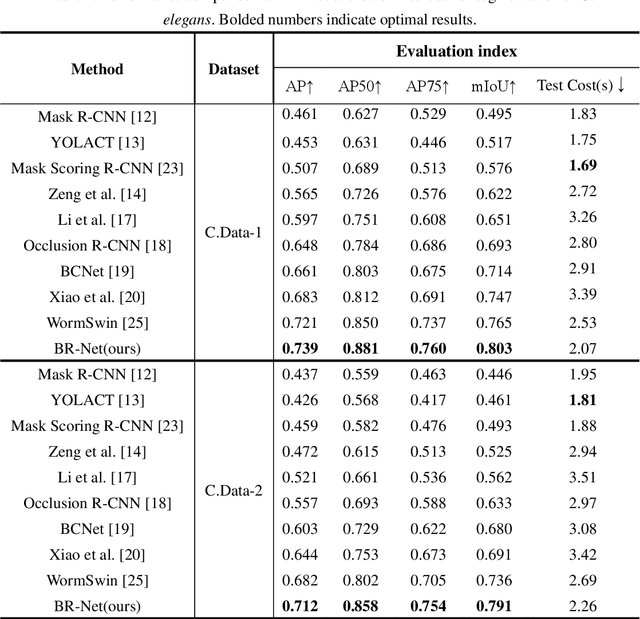

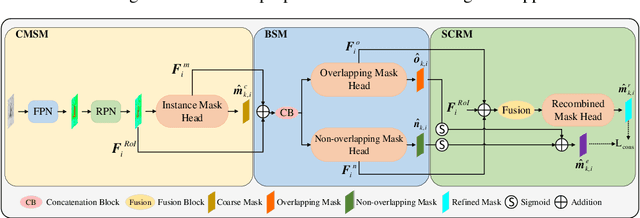

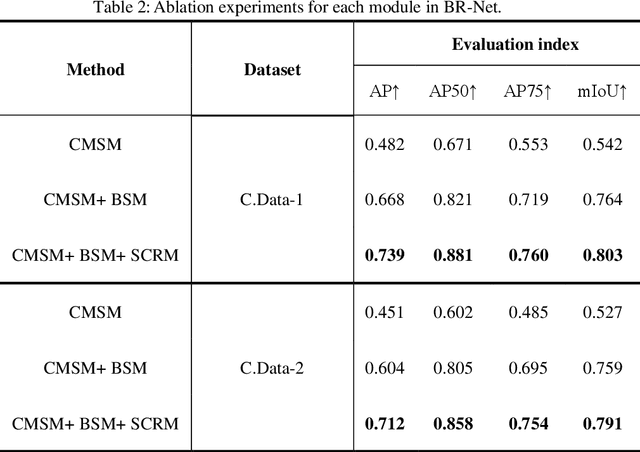

A Bilayer Segmentation-Recombination Network for Accurate Segmentation of Overlapping C. elegans

Nov 26, 2024

Abstract:Caenorhabditis elegans (C. elegans) is an excellent model organism because of its short lifespan and high degree of homology with human genes, and it has been widely used in a variety of human health and disease models. However, the segmentation of C. elegans remains challenging for two reasons: 1) the activity trajectory of C. elegans is uncontrollable, and multiple nematodes often overlap, resulting in blurred boundaries, which makes it impossible to clearly study the life trajectory of an individual nematode; and 2) in microscope images of overlapping C. elegans, the translucent tissues at the edges obscure each other, leading to inaccurate boundary segmentation. To solve these problems, a Bilayer Segmentation-Recombination Network (BR-Net) for the segmentation of C. elegans instances is proposed. The network consists of three parts: a Coarse Mask Segmentation Module (CMSM), a Bilayer Segmentation Module (BSM), and a Semantic Consistency Recombination Module (SCRM). The CMSM extracts the coarse mask, and we introduce a Unified Attention Module (UAM) in the CMSM to make it better aware of nematode instances. The BSM segments the aggregated C. elegans into overlapping and non-overlapping regions. These regions are then integrated by the SCRM, where semantic consistency regularization is introduced to segment nematode instances more accurately. Finally, the effectiveness of the method is verified on the C. elegans dataset. The experimental results show that BR-Net is competitive and outperforms other recently proposed instance segmentation methods in processing C. elegans occlusion images.

AE-DENet: Enhancement for Deep Learning-based Channel Estimation in OFDM Systems

Nov 13, 2024

Abstract:Deep learning (DL)-based methods have demonstrated remarkable achievements in addressing orthogonal frequency division multiplexing (OFDM) channel estimation challenges. However, existing DL-based methods mainly rely on separate real and imaginary inputs while ignoring the inherent correlation between the two streams, such as the amplitude and phase information that is fundamental in communication signal processing. This paper proposes AE-DENet, a novel autoencoder (AE)-based data enhancement network to improve the performance of existing DL-based channel estimation methods. AE-DENet focuses on enriching the classic least squares (LS) estimation input commonly used in DL-based methods by employing a learning-based data enhancement method, which extracts interaction features from the real and imaginary components and fuses them with the original real/imaginary streams to generate an enhanced input for better channel inference. Experimental results in terms of mean square error (MSE) demonstrate that the proposed method enhances the performance of all state-of-the-art DL-based channel estimators with negligible added complexity. Furthermore, the proposed approach is shown to be robust to channel variations and high user mobility.
