Xiaofeng Gao

PAF-Net: Phase-Aligned Frequency Decoupling Network for Multi-Process Manufacturing Quality Prediction

Jul 30, 2025

Abstract:Accurate quality prediction in multi-process manufacturing is critical for industrial efficiency but hindered by three core challenges: time-lagged process interactions, overlapping operations with mixed periodicity, and inter-process dependencies in shared frequency bands. To address these, we propose PAF-Net, a frequency decoupled time series prediction framework with three key innovations: (1) A phase-correlation alignment method guided by frequency domain energy to synchronize time-lagged quality series, resolving temporal misalignment. (2) A frequency independent patch attention mechanism paired with Discrete Cosine Transform (DCT) decomposition to capture heterogeneous operational features within individual series. (3) A frequency decoupled cross attention module that suppresses noise from irrelevant frequencies, focusing exclusively on meaningful dependencies within shared bands. Experiments on 4 real-world datasets demonstrate PAF-Net's superiority. It outperforms 10 well-acknowledged baselines by 7.06% lower MSE and 3.88% lower MAE. Our code is available at https://github.com/StevenLuan904/PAF-Net-Official.

MM-Agent: LLM as Agents for Real-world Mathematical Modeling Problem

May 20, 2025

Abstract:Mathematical modeling is a cornerstone of scientific discovery and engineering practice, enabling the translation of real-world problems into formal systems across domains such as physics, biology, and economics. Unlike mathematical reasoning, which assumes a predefined formulation, modeling requires open-ended problem analysis, abstraction, and principled formalization. While Large Language Models (LLMs) have shown strong reasoning capabilities, they fall short in rigorous model construction, limiting their utility in real-world problem-solving. To this end, we formalize the task of LLM-powered real-world mathematical modeling, where agents must analyze problems, construct domain-appropriate formulations, and generate complete end-to-end solutions. We introduce MM-Bench, a curated benchmark of 111 problems from the Mathematical Contest in Modeling (MCM/ICM), spanning the years 2000 to 2025 and across ten diverse domains such as physics, biology, and economics. To tackle this task, we propose MM-Agent, an expert-inspired framework that decomposes mathematical modeling into four stages: open-ended problem analysis, structured model formulation, computational problem solving, and report generation. Experiments on MM-Bench show that MM-Agent significantly outperforms baseline agents, achieving an 11.88% improvement over human expert solutions while requiring only 15 minutes and $0.88 per task using GPT-4o. Furthermore, under official MCM/ICM protocols, MM-Agent assisted two undergraduate teams in winning the Finalist Award (top 2.0% among 27,456 teams) in MCM/ICM 2025, demonstrating its practical effectiveness as a modeling copilot. Our code is available at https://github.com/usail-hkust/LLM-MM-Agent

Symmetry-Preserving Architecture for Multi-NUMA Environments (SPANE): A Deep Reinforcement Learning Approach for Dynamic VM Scheduling

Apr 21, 2025

Abstract:As cloud computing continues to evolve, the adoption of multi-NUMA (Non-Uniform Memory Access) architecture by cloud service providers has introduced new challenges in virtual machine (VM) scheduling. To address these challenges and more accurately reflect the complexities faced by modern cloud environments, we introduce the Dynamic VM Allocation problem in Multi-NUMA PM (DVAMP). We formally define both offline and online versions of DVAMP as mixed-integer linear programming problems, providing a rigorous mathematical foundation for analysis. A tight performance bound for greedy online algorithms is derived, offering insights into the worst-case optimality gap as a function of the number of physical machines and VM lifetime variability. To address the challenges posed by DVAMP, we propose SPANE (Symmetry-Preserving Architecture for Multi-NUMA Environments), a novel deep reinforcement learning approach that exploits the problem's inherent symmetries. SPANE produces invariant results under arbitrary permutations of physical machine states, enhancing learning efficiency and solution quality. Extensive experiments conducted on the Huawei-East-1 dataset demonstrate that SPANE outperforms existing baselines, reducing average VM wait time by 45%. Our work contributes to the field of cloud resource management by providing both theoretical insights and practical solutions for VM scheduling in multi-NUMA environments, addressing a critical gap in the literature and offering improved performance for real-world cloud systems.

PathGPT: Leveraging Large Language Models for Personalized Route Generation

Apr 08, 2025

Abstract:The proliferation of GPS-enabled devices has led to the accumulation of a substantial corpus of historical trajectory data. By leveraging these data for training machine learning models, researchers have devised novel data-driven methodologies that address the personalized route recommendation (PRR) problem. In contrast to conventional algorithms such as Dijkstra's shortest path algorithm, these novel algorithms possess the capacity to discern and learn patterns within the data, thereby facilitating the generation of more personalized paths. However, once these models have been trained, their application is constrained to the generation of routes that align with their training patterns. This limitation renders them less adaptable to novel scenarios, and deploying multiple machine learning models may be necessary to address new scenarios, which can be costly as each model must be trained separately. Inspired by recent advances in the field of Large Language Models (LLMs), we leveraged their natural language understanding capabilities to develop a unified model to solve the PRR problem while being seamlessly adaptable to new scenarios without additional training. To accomplish this, we combined the extensive knowledge LLMs acquired during training with further access to external hand-crafted context information, similar to RAG (Retrieval-Augmented Generation) systems, to enhance their ability to generate paths according to user-defined requirements. Extensive experiments on different datasets show a considerable uplift in LLM performance on the PRR problem.

Enhancing Masked Time-Series Modeling via Dropping Patches

Dec 19, 2024

Abstract:This paper explores how to enhance existing masked time-series modeling by randomly dropping sub-sequence level patches of time series. On this basis, a simple yet effective method named DropPatch is proposed, which has two remarkable advantages: 1) It improves the pre-training efficiency by a square-level advantage; 2) It provides additional advantages for modeling in scenarios such as in-domain, cross-domain, few-shot learning and cold start. This paper conducts comprehensive experiments to verify the effectiveness of the method and analyze its internal mechanism. Empirically, DropPatch strengthens the attention mechanism, reduces information redundancy and serves as an efficient means of data augmentation. Theoretically, it is proved that DropPatch slows down the rate at which the Transformer representations collapse into the rank-1 linear subspace by randomly dropping patches, thus optimizing the quality of the learned representations.
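The patch-dropping idea can be illustrated with a minimal sketch. It is not the authors' implementation: the helper names (`make_patches`, `drop_patches`, `attention_cost_ratio`), the patch length, and the drop ratio are all hypothetical, and reading the "square-level" efficiency gain as the quadratic cost of self-attention in the number of patches is an assumption rather than something the abstract states.

```python
import random

def make_patches(series, patch_len):
    """Split a 1-D series into non-overlapping patches, discarding any tail remainder."""
    n = len(series) // patch_len
    return [series[i * patch_len:(i + 1) * patch_len] for i in range(n)]

def drop_patches(patches, drop_ratio, rng):
    """Randomly remove a fraction of patches; survivors stay in temporal order."""
    n_keep = max(1, round(len(patches) * (1 - drop_ratio)))
    kept_idx = sorted(rng.sample(range(len(patches)), n_keep))
    return kept_idx, [patches[i] for i in kept_idx]

def attention_cost_ratio(n_total, n_kept):
    """Assumed cost model: self-attention scales with the square of the patch count."""
    return (n_kept / n_total) ** 2

rng = random.Random(0)                       # fixed seed so the sketch is repeatable
series = [float(t) for t in range(96)]       # toy series, illustrative only
patches = make_patches(series, patch_len=8)  # hypothetical patch length
kept_idx, kept = drop_patches(patches, drop_ratio=0.5, rng=rng)
ratio = attention_cost_ratio(len(patches), len(kept))
```

Under this assumed cost model, keeping half of the patches leaves roughly a quarter of the attention cost. A masked-modeling objective would then be applied to the surviving patches only.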

TeamCraft: A Benchmark for Multi-Modal Multi-Agent Systems in Minecraft

Dec 06, 2024

Abstract:Collaboration is a cornerstone of society. In the real world, human teammates make use of multi-sensory data to tackle challenging tasks in ever-changing environments. It is essential for embodied agents collaborating in visually-rich environments replete with dynamic interactions to understand multi-modal observations and task specifications. To evaluate the performance of generalizable multi-modal collaborative agents, we present TeamCraft, a multi-modal multi-agent benchmark built on top of the open-world video game Minecraft. The benchmark features 55,000 task variants specified by multi-modal prompts, procedurally-generated expert demonstrations for imitation learning, and carefully designed protocols to evaluate model generalization capabilities. We also perform extensive analyses to better understand the limitations and strengths of existing approaches. Our results indicate that existing models continue to face significant challenges in generalizing to novel goals, scenes, and unseen numbers of agents. These findings underscore the need for further research in this area. The TeamCraft platform and dataset are publicly available at https://github.com/teamcraft-bench/teamcraft.

Disentangled Interpretable Representation for Efficient Long-term Time Series Forecasting

Nov 26, 2024

Abstract:Industry 5.0 introduces new challenges for Long-term Time Series Forecasting (LTSF), characterized by high-dimensional, high-resolution data and high-stakes application scenarios. Against this backdrop, developing efficient and interpretable models for LTSF becomes a key challenge. Existing deep learning and linear models often suffer from excessive parameter complexity and lack intuitive interpretability. To address these issues, we propose DiPE-Linear, a Disentangled interpretable Parameter-Efficient Linear network. DiPE-Linear incorporates three temporal components: Static Frequential Attention (SFA), Static Temporal Attention (STA), and Independent Frequential Mapping (IFM). These components alternate between learning in the frequency and time domains to achieve disentangled interpretability. The decomposed model structure reduces parameter complexity from quadratic in fully connected networks (FCs) to linear and computational complexity from quadratic to log-linear. Additionally, a Low-Rank Weight Sharing policy enhances the model's ability to handle multivariate series. Despite operating within a subspace of FCs with limited expressive capacity, DiPE-Linear demonstrates comparable or superior performance to both FCs and nonlinear models across multiple open-source and real-world LTSF datasets, validating the effectiveness of its sophisticatedly designed structure. The combination of efficiency, accuracy, and interpretability makes DiPE-Linear a strong candidate for advancing LTSF in both research and real-world applications. The source code is available at https://github.com/wintertee/DiPE-Linear.

Towards Unifying Feature Interaction Models for Click-Through Rate Prediction

Nov 19, 2024

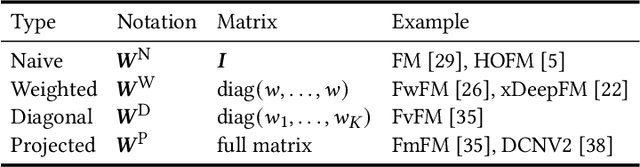

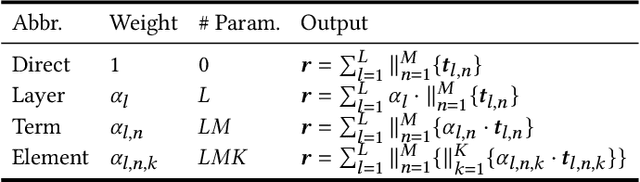

Abstract:Modeling feature interactions plays a crucial role in accurately predicting click-through rates (CTR) in advertising systems. To capture the intricate patterns of interaction, many existing models employ matrix-factorization techniques to represent features as lower-dimensional embedding vectors, enabling the modeling of interactions as products between these embeddings. In this paper, we propose a general framework called IPA to systematically unify these models. Our framework comprises three key components: the Interaction Function, which facilitates feature interaction; the Layer Pooling, which constructs higher-level interaction layers; and the Layer Aggregator, which combines the outputs of all layers to serve as input for the subsequent classifier. We demonstrate that most existing models can be categorized within our framework by making specific choices for these three components. Through extensive experiments and a dimensional collapse analysis, we evaluate the performance of these choices. Furthermore, by leveraging the most powerful components within our framework, we introduce a novel model that achieves competitive results compared to state-of-the-art CTR models. PFL achieves a significant GMV lift in an online A/B test on Tencent's advertising platform and has been deployed as the production model in several primary scenarios.

MatryoshkaKV: Adaptive KV Compression via Trainable Orthogonal Projection

Oct 16, 2024

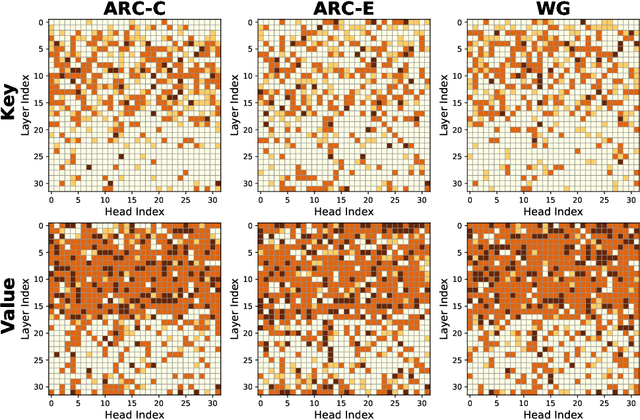

Abstract:KV cache has become a de facto technique for the inference of large language models (LLMs), where tensors of shape (layer number, head number, sequence length, feature dimension) are introduced to cache historical information for self-attention. As the size of the model and data grows, the KV cache can quickly become a bottleneck within the system in both storage and memory transfer. To address this, prior studies usually focus on the first three axes of the cache tensors for compression. This paper supplements them, focusing on the feature dimension axis, by utilizing low-rank projection matrices to transform the cache features into spaces with reduced dimensions. We begin by investigating the canonical orthogonal projection method for data compression through principal component analysis (PCA). We observe that PCA projection suffers significant performance degradation at low compression rates. To bridge the gap, we propose to directly tune the orthogonal projection matrices with a distillation objective using an elaborate Matryoshka training strategy. After training, we adaptively search for the optimal compression rates for various layers and heads given varying compression budgets. Compared to previous works, our method can easily embrace pre-trained LLMs and hold a smooth tradeoff between performance and compression rate. We empirically witness the high data efficiency of our training procedure and find that our method can sustain over 90% performance with an average KV cache compression rate of 60% (and up to 75% in certain extreme scenarios) for popular LLMs like LLaMA2-7B-base and Mistral-7B-v0.3-base.
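Compression along the feature dimension with nested ranks can be sketched as follows. This is an illustration only, not the paper's method: the 4x4 orthonormal basis `U` is a fixed, scaled Hadamard matrix chosen for convenience (the paper learns its projections), and `compress`, `reconstruct`, and the toy `key_vector` are hypothetical names and values. The point shown is that truncating an orthogonal basis to its first `rank` columns yields a family of nested compressed representations whose reconstruction error never increases as the rank grows.

```python
# Hypothetical orthonormal basis (scaled 4x4 Hadamard matrix). The paper trains
# its projection matrices; this one is fixed purely for illustration.
U = [
    [0.5,  0.5,  0.5,  0.5],
    [0.5, -0.5,  0.5, -0.5],
    [0.5,  0.5, -0.5, -0.5],
    [0.5, -0.5, -0.5,  0.5],
]

def column(matrix, j):
    """Return column j of a row-major matrix."""
    return [row[j] for row in matrix]

def compress(x, basis, rank):
    """Keep only the coefficients of x along the first `rank` basis columns."""
    return [sum(b * v for b, v in zip(column(basis, j), x)) for j in range(rank)]

def reconstruct(z, basis):
    """Map compressed coefficients back to the original feature space."""
    out = [0.0] * len(basis)
    for j, coeff in enumerate(z):
        for i, b in enumerate(column(basis, j)):
            out[i] += coeff * b
    return out

def squared_error(x, y):
    """Sum of squared differences between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(x, y))

key_vector = [1.0, 2.0, 3.0, 5.0]  # toy cached feature vector, illustrative only
errors = [
    squared_error(key_vector, reconstruct(compress(key_vector, U, r), U))
    for r in range(1, 5)
]
```

Because every lower-rank representation is a prefix of the higher-rank one, a single projection matrix can serve several compression budgets, which is the nesting property the "Matryoshka" name alludes to.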

T2V-Turbo-v2: Enhancing Video Generation Model Post-Training through Data, Reward, and Conditional Guidance Design

Oct 08, 2024

Abstract:In this paper, we focus on enhancing a diffusion-based text-to-video (T2V) model during the post-training phase by distilling a highly capable consistency model from a pretrained T2V model. Our proposed method, T2V-Turbo-v2, introduces a significant advancement by integrating various supervision signals, including high-quality training data, reward model feedback, and conditional guidance, into the consistency distillation process. Through comprehensive ablation studies, we highlight the crucial importance of tailoring datasets to specific learning objectives and the effectiveness of learning from diverse reward models for enhancing both the visual quality and text-video alignment. Additionally, we highlight the vast design space of conditional guidance strategies, which centers on designing an effective energy function to augment the teacher ODE solver. We demonstrate the potential of this approach by extracting motion guidance from the training datasets and incorporating it into the ODE solver, showcasing its effectiveness in improving the motion quality of the generated videos with the improved motion-related metrics from VBench and T2V-CompBench. Empirically, our T2V-Turbo-v2 establishes a new state-of-the-art result on VBench, with a Total score of 85.13, surpassing proprietary systems such as Gen-3 and Kling.
