Zhemeng Yu

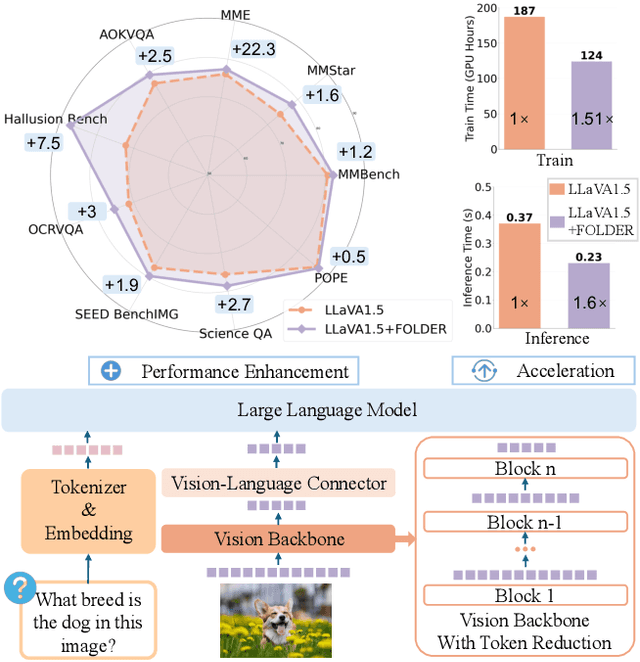

FOLDER: Accelerating Multi-modal Large Language Models with Enhanced Performance

Jan 05, 2025

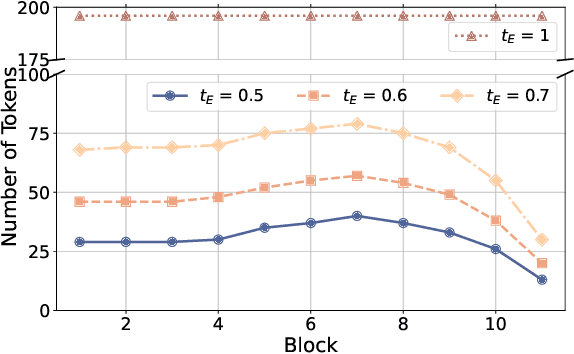

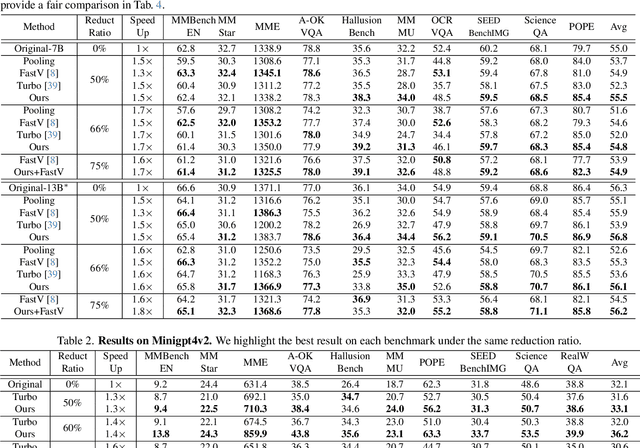

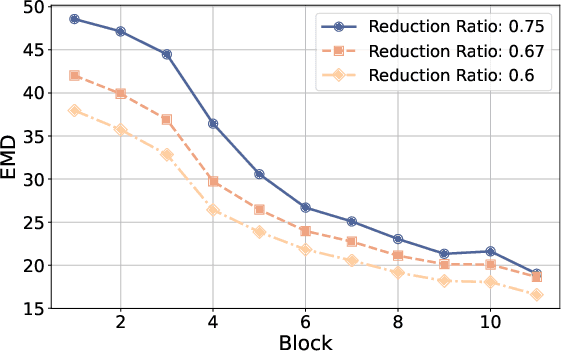

Abstract: Recently, Multi-modal Large Language Models (MLLMs) have shown remarkable effectiveness for multi-modal tasks due to their abilities to generate and understand cross-modal data. However, processing long sequences of visual tokens extracted from visual backbones poses a challenge for deployment in real-time applications. To address this issue, we introduce FOLDER, a simple yet effective plug-and-play module designed to reduce the length of the visual token sequence, mitigating both computational and memory demands during training and inference. Through a comprehensive analysis of the token reduction process, we analyze the information loss introduced by different reduction strategies and develop FOLDER to preserve key information while removing visual redundancy. We showcase the effectiveness of FOLDER by integrating it into the visual backbone of several MLLMs, significantly accelerating the inference phase. Furthermore, we evaluate its utility as a training accelerator or even performance booster for MLLMs. In both contexts, FOLDER achieves comparable or even better performance than the original models, while dramatically reducing complexity by removing up to 70% of visual tokens.

Adaptive Two-Stage Cloud Resource Scaling via Hierarchical Multi-Indicator Forecasting and Bayesian Decision-Making

Aug 02, 2024

Abstract: The surging demand for cloud computing resources, driven by the rapid growth of sophisticated large-scale models and data centers, underscores the critical importance of efficient and adaptive resource allocation. As major tech enterprises deploy massive infrastructures with thousands of GPUs, existing cloud platforms still struggle with low resource utilization due to key challenges: capturing hierarchical indicator structures, modeling non-Gaussian distributions, and decision-making under uncertainty. To address these challenges, we propose HARMONY, an adaptive Hierarchical Attention-based Resource Modeling and Decision-Making System. HARMONY combines hierarchical multi-indicator distribution forecasting and uncertainty-aware Bayesian decision-making. It introduces a novel hierarchical attention mechanism that comprehensively models complex inter-indicator dependencies, enabling accurate predictions that can adapt to evolving environment states. It also transforms Gaussian projections into adaptive non-Gaussian distributions via Normalizing Flows. Crucially, HARMONY leverages the full predictive distributions in an adaptive Bayesian process, proactively incorporating uncertainties to optimize resource allocation while robustly meeting SLA constraints under varying conditions. Extensive evaluations across four large-scale cloud datasets demonstrate HARMONY's state-of-the-art performance, significantly outperforming nine established methods. A month-long real-world deployment validated HARMONY's substantial practical impact, realizing over 35,000 GPU hours in savings and translating to $100K+ in cost reduction, showcasing its remarkable economic value through adaptive, uncertainty-aware scaling. Our code is available at https://github.com/Floating-LY/HARMONY1.
