Yan Luo

Single-Slice-to-3D Reconstruction in Medical Imaging and Natural Objects: A Comparative Benchmark with SAM 3D

Feb 10, 2026

Abstract: A 3D understanding of anatomy is central to diagnosis and treatment planning, yet volumetric imaging remains costly and subject to long wait times. Image-to-3D foundation models can solve this issue by reconstructing 3D data from 2D modalities. Current foundation models are trained on natural image distributions to reconstruct naturalistic objects from a single image by leveraging geometric priors across pixels. However, it is unclear whether these learned geometric priors transfer to medical data. In this study, we present a controlled zero-shot benchmark of single-slice medical image-to-3D reconstruction across five state-of-the-art image-to-3D models: SAM3D, Hunyuan3D-2.1, Direct3D, Hi3DGen, and TripoSG. These are evaluated across six medical datasets spanning anatomical and pathological structures and two natural datasets, using voxel-based metrics and point cloud distance metrics. Across medical datasets, voxel-based overlap remains moderate for all models, consistent with a depth reconstruction failure mode when inferring volume from a single slice. In contrast, global distance metrics show more separation between methods: SAM3D achieves the strongest overall topological similarity to ground truth medical 3D data, while alternative models are more prone to over-simplification of the reconstruction. Our results quantify the limits of single-slice medical reconstruction and highlight depth ambiguity caused by the planar nature of 2D medical data, motivating multi-view image-to-3D reconstruction to enable reliable medical 3D inference.
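
As an illustration of the two metric families mentioned in this abstract, the sketch below computes a voxel overlap score and a point cloud distance on toy data. The abstract does not name the specific metrics, so intersection-over-union and the symmetric Chamfer distance are assumptions chosen for illustration, and the function names `voxel_iou` and `chamfer_distance` are hypothetical. The toy case compares a solid cube with a flat slab that matches its outline but has no depth.

```python
import math

def voxel_iou(pred, truth):
    """Intersection-over-union of two collections of occupied voxel coordinates."""
    pred, truth = set(pred), set(truth)
    union = pred | truth
    return len(pred & truth) / len(union) if union else 1.0

def chamfer_distance(a, b):
    """Symmetric Chamfer distance: mean nearest-neighbour distance in both directions."""
    def one_way(src, dst):
        return sum(min(math.dist(p, q) for q in dst) for p in src) / len(src)
    return one_way(a, b) + one_way(b, a)

# Toy ground truth: a solid 4x4x4 cube of voxels.
cube = [(x, y, z) for x in range(4) for y in range(4) for z in range(4)]
# Toy prediction: only the front 4x4x1 slab (correct outline, missing depth).
slab = [(x, y, 0) for x in range(4) for y in range(4)]

print("IoU:", voxel_iou(slab, cube))
print("Chamfer:", chamfer_distance(slab, cube))
```

In this toy case the slab covers only a quarter of the cube, so the overlap score is low even though every predicted voxel is correct, which mirrors the depth failure mode the abstract describes.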

Look-Ahead and Look-Back Flows: Training-Free Image Generation with Trajectory Smoothing

Feb 10, 2026

Abstract: Recent advances have reformulated diffusion models as deterministic ordinary differential equations (ODEs) through the framework of flow matching, providing a unified formulation for the noise-to-data generative process. Various training-free flow matching approaches have been developed to improve image generation through flow velocity field adjustment, eliminating the need for costly retraining. However, modifying the velocity field $v$ introduces errors that propagate through the full generation path, whereas adjustments to the latent trajectory $z$ are naturally corrected by the pretrained velocity network, reducing error accumulation. In this paper, we propose two complementary training-free latent-trajectory adjustment approaches, based on future and past velocity $v$ and latent trajectory $z$ information, that refine the generative path directly in latent space: Look-Ahead, which averages the current and next-step latents using a curvature-gated weight, and Look-Back, which smooths latents using an exponential moving average with decay. We demonstrate through extensive experiments and comprehensive evaluation metrics that the proposed training-free trajectory smoothing models substantially outperform various state-of-the-art models across multiple datasets including COCO17, CUB-200, and Flickr30K.
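
The two smoothing schemes named in this abstract can be sketched on a toy ODE. This is a minimal illustration under stated assumptions, not the paper's method: the gate formula in `look_ahead`, the `gate_scale` parameter, the Euler integrator, and `toy_velocity` (a stand-in for a pretrained velocity network) are all hypothetical, since the abstract does not specify them.

```python
def euler_step(z, t, dt, velocity):
    """One explicit Euler step of dz/dt = velocity(z, t)."""
    return [zi + dt * vi for zi, vi in zip(z, velocity(z, t))]

def look_back(z_new, ema, decay=0.9):
    """Look-Back: exponential moving average over latents with the given decay."""
    return [decay * e + (1.0 - decay) * zn for e, zn in zip(ema, z_new)]

def look_ahead(z, t, dt, velocity, gate_scale=1.0):
    """Look-Ahead: blend the current-step and next-step latents.

    The weight on the next-step latent is 0.5 when the velocity does not change
    between steps and shrinks as the change grows (hypothetical gate).
    """
    z_cur = euler_step(z, t, dt, velocity)
    z_next = euler_step(z_cur, t + dt, dt, velocity)
    v_cur, v_next = velocity(z, t), velocity(z_cur, t + dt)
    curvature = sum((a - b) ** 2 for a, b in zip(v_cur, v_next)) ** 0.5
    weight = 0.5 / (1.0 + gate_scale * curvature)
    return [(1.0 - weight) * c + weight * n for c, n in zip(z_cur, z_next)]

def toy_velocity(z, t):
    """Stand-in for a pretrained velocity network: pulls each latent toward 1.0."""
    return [1.0 - zi for zi in z]

z = [0.0, 2.0]
ema = list(z)
for step in range(4):
    z = look_ahead(z, step * 0.25, 0.25, toy_velocity)
    ema = look_back(z, ema)
print(z, ema)
```

Both functions operate only on the latents, so the velocity function is called unmodified, which is the property the abstract contrasts with velocity-field adjustment.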

ADPretrain: Advancing Industrial Anomaly Detection via Anomaly Representation Pretraining

Nov 07, 2025

Abstract: Current mainstream and state-of-the-art anomaly detection (AD) methods are largely built on feature networks pretrained on ImageNet. However, whether supervised or self-supervised, ImageNet pretraining does not match the goal of anomaly detection (i.e., pretraining on natural images does not aim to distinguish normal from abnormal). Moreover, there is typically a distribution shift between natural images and industrial image data in AD scenarios. These two issues can cause ImageNet-pretrained features to be suboptimal for AD tasks. To further promote the development of the AD field, pretrained representations designed specifically for AD tasks are much needed and highly valuable. To this end, we propose a novel AD representation learning framework specially designed for learning robust and discriminative pretrained representations for industrial anomaly detection. Specifically, keeping close to the goal of anomaly detection (i.e., focusing on discrepancies between normal and anomalous samples), we propose angle- and norm-oriented contrastive losses to simultaneously maximize the angle and the norm difference between normal and abnormal features. To avoid the distribution shift from natural images to AD images, our pretraining is performed on a large-scale AD dataset, RealIAD. To further alleviate the potential shift between pretraining data and downstream AD datasets, we learn the pretrained AD representations based on a class-generalizable representation, residual features. For evaluation, based on five embedding-based AD methods, we simply replace their original features with our pretrained representations. Extensive experiments on five AD datasets and five backbones consistently show the superiority of our pretrained features. The code is available at https://github.com/xcyao00/ADPretrain.

CurveFlow: Curvature-Guided Flow Matching for Image Generation

Aug 20, 2025

Abstract: Existing rectified flow models are based on linear trajectories between data and noise distributions. This linearity enforces zero curvature, which can inadvertently force the image generation process through low-probability regions of the data manifold. A key question remains underexplored: how does the curvature of these trajectories correlate with the semantic alignment between generated images and their corresponding captions, i.e., instructional compliance? To address this, we introduce CurveFlow, a novel flow matching framework designed to learn smooth, non-linear trajectories by directly incorporating curvature guidance into the flow path. Our method features a robust curvature regularization technique that penalizes abrupt changes in the trajectory's intrinsic dynamics. Extensive experiments on MS COCO 2014 and 2017 demonstrate that CurveFlow achieves state-of-the-art performance in text-to-image generation, significantly outperforming both standard rectified flow variants and other non-linear baselines like Rectified Diffusion. The improvements are especially evident in semantic consistency metrics such as BLEU, METEOR, ROUGE, and CLAIR. This confirms that our curvature-aware modeling substantially enhances the model's ability to faithfully follow complex instructions while simultaneously maintaining high image quality. The code is made publicly available at https://github.com/Harvard-AI-and-Robotics-Lab/CurveFlow.
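
The statement that linear trajectories have zero curvature can be checked numerically with a discrete second-difference penalty. The abstract does not give CurveFlow's actual regularizer, so the penalty below is a generic stand-in chosen for illustration, and the names `curvature_penalty` and `straight_path` are hypothetical.

```python
def curvature_penalty(path):
    """Sum of squared second differences ||z[t+1] - 2*z[t] + z[t-1]||^2 along a path."""
    total = 0.0
    for prev, cur, nxt in zip(path, path[1:], path[2:]):
        total += sum((n - 2.0 * c + p) ** 2 for p, c, n in zip(prev, cur, nxt))
    return total

def straight_path(x0, x1, steps):
    """Linear interpolation from x0 to x1, as in a rectified-flow trajectory."""
    return [
        [(1.0 - k / steps) * a + (k / steps) * b for a, b in zip(x0, x1)]
        for k in range(steps + 1)
    ]

linear = straight_path([0.0, 0.0], [4.0, 8.0], steps=4)
bent = [[0.0, 0.0], [1.0, 0.0], [1.0, 1.0]]  # a path with a sharp corner

print("linear:", curvature_penalty(linear))
print("bent:", curvature_penalty(bent))
```

A penalty of this kind is zero for a straight path and grows with abrupt changes of direction, so it discourages sharp turns without forcing the trajectory to be linear.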

DA-Occ: Efficient 3D Voxel Occupancy Prediction via Directional 2D for Geometric Structure Preservation

Jul 31, 2025

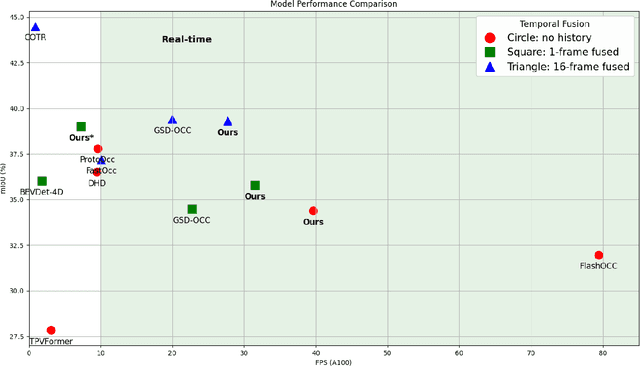

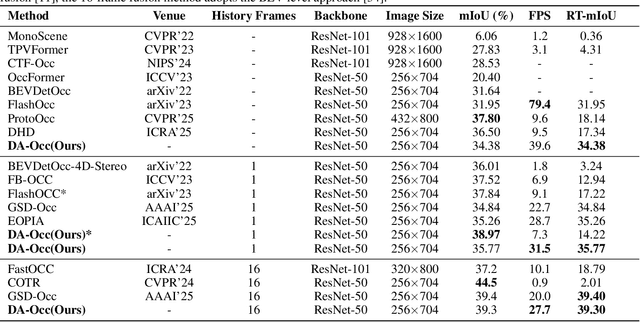

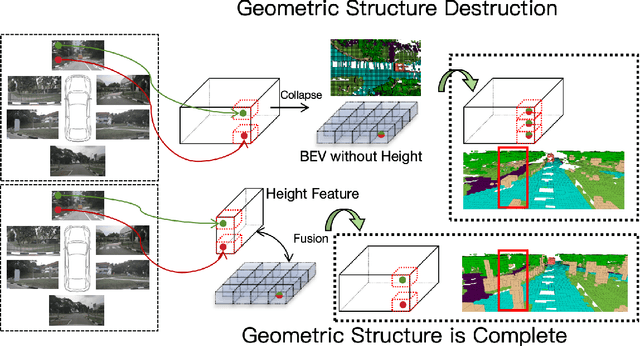

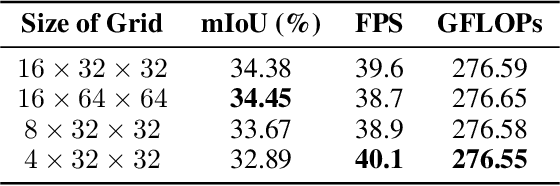

Abstract: Efficient and high-accuracy 3D occupancy prediction is crucial for ensuring the performance of autonomous driving (AD) systems. However, many current methods focus on high accuracy at the expense of real-time processing needs. To address this challenge of balancing accuracy and inference speed, we propose a directional pure 2D approach. Our method involves slicing 3D voxel features to preserve complete vertical geometric information. This strategy compensates for the loss of height cues in Bird's-Eye View (BEV) representations, thereby maintaining the integrity of the 3D geometric structure. By employing a directional attention mechanism, we efficiently extract geometric features from different orientations, striking a balance between accuracy and computational efficiency. Experimental results highlight the significant advantages of our approach for autonomous driving. On Occ3D-nuScenes, the proposed method achieves an mIoU of 39.3% and an inference speed of 27.7 FPS, effectively balancing accuracy and efficiency. In simulations on edge devices, the inference speed reaches 14.8 FPS, further demonstrating the method's applicability for real-time deployment in resource-constrained environments.

NTIRE 2025 Image Shadow Removal Challenge Report

Jun 18, 2025

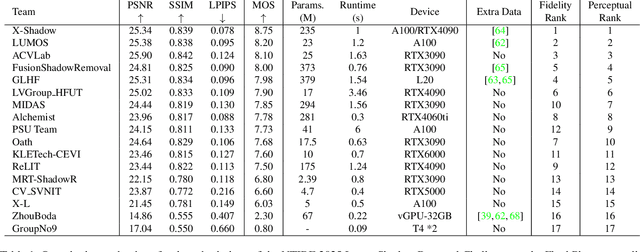

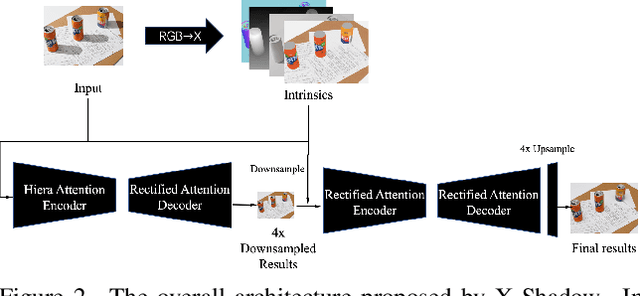

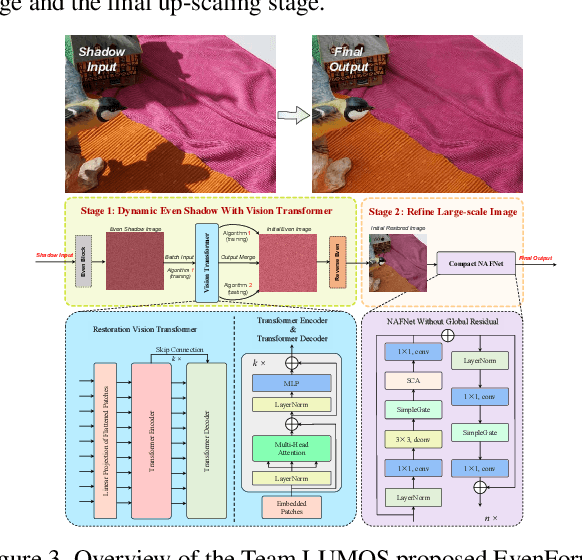

Abstract: This work examines the findings of the NTIRE 2025 Shadow Removal Challenge. A total of 306 participants registered, with 17 teams successfully submitting their solutions during the final evaluation phase. Following the last two editions, this challenge had two evaluation tracks: one focusing on reconstruction fidelity and the other on visual perception through a user study. Both tracks were evaluated with images from the WSRD+ dataset, simulating interactions between self- and cast-shadows with a large number of diverse objects, textures, and materials.

RD-UIE: Relation-Driven State Space Modeling for Underwater Image Enhancement

May 02, 2025

Abstract: Underwater image enhancement (UIE) is a critical preprocessing step for marine vision applications, where wavelength-dependent attenuation causes severe content degradation and color distortion. While recent state space models like Mamba show potential for long-range dependency modeling, their unfolding operations and fixed scan paths on 1D sequences fail to adapt to local object semantics and global relation modeling, limiting their efficacy in complex underwater environments. To address this, we enhance conventional Mamba with a sorting-based scanning mechanism that dynamically reorders scanning sequences based on the statistical distribution of the spatial correlation of all pixels. In this way, it encourages the network to prioritize the most informative components: structural and semantic features. Building on this mechanism, we devise a Visually Self-adaptive State Block (VSSB) that harmonizes the dynamic sorting of Mamba with input-dependent dynamic convolution, enabling coherent integration of global context and local relational cues. This design helps eliminate global focus bias, especially for widely distributed contents, which greatly weakens the statistical frequency. For robust feature extraction and refinement, we design a cross-feature bridge (CFB) to adaptively fuse multi-scale representations. Together, these components form the novel relation-driven Mamba framework for effective UIE (RD-UIE). Extensive experiments on underwater enhancement benchmarks demonstrate that RD-UIE outperforms the state-of-the-art approach WMamba in both quantitative metrics and visual fidelity, achieving an average performance gain of 0.55 dB on the three benchmarks. Our code is available at https://github.com/kkoucy/RD-UIE/tree/main

An Experimental Study of Passive UAV Tracking with Digital Arrays and Cellular Downlink Signals

Dec 30, 2024

Abstract: Given the prospects of the low-altitude economy (LAE) and the popularity of unmanned aerial vehicles (UAVs), there is increasing demand for monitoring flying objects at low altitude in wide urban areas. In this work, the widely deployed long-term evolution (LTE) base station (BS) is exploited to illuminate UAVs in bistatic trajectory tracking. Specifically, a passive sensing receiver with two digital antenna arrays is proposed and developed to capture both the line-of-sight (LoS) signal and the scattered signal off a target UAV. From their cross ambiguity function, the bistatic range, Doppler shift and angle-of-arrival (AoA) of the target UAV can be detected in a sequence of time slots. In order to address missed detections and false alarms of passive sensing, a multi-target tracking framework is adopted to track the trajectory of the target UAV. It is demonstrated by experiments that the proposed UAV tracking system can achieve meter-level accuracy.
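
The delay and Doppler detection step mentioned in this abstract can be illustrated with a discrete cross ambiguity function evaluated on synthetic signals. This is a minimal sketch under stated assumptions: the reference is a random unit-modulus sequence rather than an LTE downlink signal, the delay is circular, no noise or angle-of-arrival processing is included, and the names `cross_ambiguity` and `caf_peak` are hypothetical.

```python
import cmath
import random

def cross_ambiguity(ref, surv, delay, doppler_bin):
    """|sum_n surv[n] * conj(ref[n - delay]) * exp(-2j*pi*doppler_bin*n/N)|, circular delay."""
    n_samples = len(ref)
    acc = 0j
    for n in range(n_samples):
        rotation = cmath.exp(-2j * cmath.pi * doppler_bin * n / n_samples)
        acc += surv[n] * ref[(n - delay) % n_samples].conjugate() * rotation
    return abs(acc)

def caf_peak(ref, surv, max_delay, max_bin):
    """Grid-search the (delay, Doppler bin) cell with the largest magnitude."""
    cells = [(d, k) for d in range(max_delay + 1) for k in range(-max_bin, max_bin + 1)]
    return max(cells, key=lambda cell: cross_ambiguity(ref, surv, *cell))

random.seed(0)
N = 128
# Synthetic line-of-sight reference: unit-modulus samples with random phase.
ref = [cmath.exp(2j * cmath.pi * random.random()) for _ in range(N)]

# Synthetic scattered path: attenuated, delayed, and Doppler-shifted copy of the reference.
true_delay, true_bin = 5, 3
surv = [
    0.2 * ref[(n - true_delay) % N] * cmath.exp(2j * cmath.pi * true_bin * n / N)
    for n in range(N)
]

print(caf_peak(ref, surv, max_delay=10, max_bin=6))
```

The peak cell gives the sample delay (proportional to bistatic range) and the Doppler bin of the scattered path relative to the reference.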

Impact of Data Distribution on Fairness Guarantees in Equitable Deep Learning

Dec 29, 2024

Abstract: We present a comprehensive theoretical framework analyzing the relationship between data distributions and fairness guarantees in equitable deep learning. Our work establishes novel theoretical bounds that explicitly account for data distribution heterogeneity across demographic groups, while introducing a formal analysis framework that minimizes expected loss differences across these groups. We derive comprehensive theoretical bounds for fairness errors and convergence rates, and characterize how distributional differences between groups affect the fundamental trade-off between fairness and accuracy. Through extensive experiments on diverse datasets, including FairVision (ophthalmology), CheXpert (chest X-rays), HAM10000 (dermatology), and FairFace (facial recognition), we validate our theoretical findings and demonstrate that differences in feature distributions across demographic groups significantly impact model fairness, with performance disparities particularly pronounced in racial categories. The theoretical bounds we derive corroborate these empirical observations, providing insights into the fundamental limits of achieving fairness in deep learning models when faced with heterogeneous data distributions. This work advances our understanding of fairness in AI-based diagnosis systems and provides a theoretical foundation for developing more equitable algorithms. The code for analysis is publicly available via https://github.com/Harvard-Ophthalmology-AI-Lab/fairness_guarantees.
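
The notion of expected loss differences across groups can be made concrete with a small empirical estimate. The abstract does not define the paper's fairness error, so the largest gap between per-group mean losses is used here as an assumed, simplified proxy. The function names and the toy losses and group labels are hypothetical.

```python
def group_mean_losses(losses, groups):
    """Average per-sample loss within each demographic group."""
    sums, counts = {}, {}
    for loss, group in zip(losses, groups):
        sums[group] = sums.get(group, 0.0) + loss
        counts[group] = counts.get(group, 0) + 1
    return {group: sums[group] / counts[group] for group in sums}

def max_loss_gap(losses, groups):
    """Largest difference between any two groups' mean losses."""
    means = group_mean_losses(losses, groups)
    return max(means.values()) - min(means.values())

# Toy per-sample losses and group labels (illustrative values only).
losses = [0.25, 0.75, 1.0, 2.0]
groups = ["a", "a", "b", "b"]

print(group_mean_losses(losses, groups))
print(max_loss_gap(losses, groups))
```

A gap of zero means the model's average loss is the same for every group, and larger values indicate that some groups are served worse than others.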

FairDiffusion: Enhancing Equity in Latent Diffusion Models via Fair Bayesian Perturbation

Dec 29, 2024

Abstract: Recent progress in generative AI, especially diffusion models, has demonstrated significant utility in text-to-image synthesis. Particularly in healthcare, these models offer immense potential in generating synthetic datasets and training medical students. However, despite these strong performances, it remains uncertain whether the image generation quality is consistent across different demographic subgroups. To address this critical concern, we present the first comprehensive study on the fairness of medical text-to-image diffusion models. Our extensive evaluations of the popular Stable Diffusion model reveal significant disparities across gender, race, and ethnicity. To mitigate these biases, we introduce FairDiffusion, an equity-aware latent diffusion model that enhances fairness in both image generation quality and the semantic correlation of clinical features. In addition, we design and curate FairGenMed, the first dataset for studying the fairness of medical generative models. Complementing this effort, we further evaluate FairDiffusion on two widely used external medical datasets, HAM10000 (dermatoscopic images) and CheXpert (chest X-rays), to demonstrate FairDiffusion's effectiveness in addressing fairness concerns across diverse medical imaging modalities. Together, FairDiffusion and FairGenMed significantly advance research in fair generative learning, promoting equitable benefits of generative AI in healthcare.
