Ran Tao

FPNet: Joint Wi-Fi Beamforming Matrix Feedback and Anomaly-Aware Indoor Positioning

Feb 13, 2026

Abstract: Channel State Information (CSI) provides a detailed description of the wireless channel and has been widely adopted for Wi-Fi sensing, particularly for high-precision indoor positioning. However, complete CSI is rarely available in real-world deployments due to hardware constraints and the high communication overhead required for feedback. Moreover, existing positioning models lack mechanisms to detect when users move outside their trained regions, leading to unreliable estimates in dynamic environments. In this paper, we present FPNet, a unified deep learning framework that jointly addresses channel feedback compression, accurate indoor positioning, and robust anomaly detection (AD). FPNet leverages the beamforming feedback matrix (BFM), a compressed CSI representation natively supported by IEEE 802.11ac/ax/be protocols, to minimize feedback overhead while preserving critical positioning features. To enhance reliability, we integrate ADBlock, a lightweight AD module trained on normal BFM samples, which identifies out-of-distribution scenarios when users exit predefined spatial regions. Experimental results using standard 2.4 GHz Wi-Fi hardware show that FPNet achieves positioning accuracy above 97% with only 100 feedback bits, boosts net throughput by up to 22.92%, and attains AD accuracy over 99% with a false alarm rate below 1.5%. These results demonstrate FPNet's ability to deliver efficient, accurate, and reliable indoor positioning on commodity Wi-Fi devices.

Non-volatile Programmable Photonic Integrated Circuits using Mechanically Latched MEMS: A System-Level Scheme Enabling Power-Connection-Free Operation Without Performance Compromise

Jan 10, 2026

Abstract: Programmable photonic integrated circuits (PPICs) offer a versatile platform for implementing diverse optical functions on a generic hardware mesh. However, the scalability of PPICs faces critical power consumption barriers. Therefore, we propose a novel non-volatile PPIC architecture utilizing MEMS with mechanical latching, enabling stable passive operation without any power connection once configured. To ensure practical applicability, we present a system-level solution including both this hardware innovation and an accompanying automatic error-resilient configuration algorithm. The algorithm compensates for the lack of continuous tunability inherent in the non-volatile hardware design, thereby enabling this new operational paradigm without compromising performance while also ensuring robustness against fabrication errors. Functional simulations were performed to validate the proposed scheme by configuring five distinct functionalities of varying complexity: a Mach-Zehnder interferometer (MZI), an MZI lattice filter, an optical ring resonator (ORR), a double ORR ring-loaded MZI, and a triple ORR coupled resonator waveguide filter. The results demonstrate that our non-volatile scheme achieves performance equivalent to conventional PPICs. Robustness analysis was also conducted, and the results demonstrate that our scheme exhibits strong robustness against various fabrication errors. Furthermore, we explored the trade-off between the hardware design complexity of this non-volatile scheme and its performance. This study establishes a viable pathway to a new generation of power-connection-free PPICs, providing a practical and scalable solution for future photonic systems.

Universal Reasoning Model

Dec 24, 2025

Abstract: Universal transformers (UTs) have been widely used for complex reasoning tasks such as ARC-AGI and Sudoku, yet the specific sources of their performance gains remain underexplored. In this work, we systematically analyze UT variants and show that improvements on ARC-AGI primarily arise from the recurrent inductive bias and strong nonlinear components of the Transformer, rather than from elaborate architectural designs. Motivated by this finding, we propose the Universal Reasoning Model (URM), which enhances the UT with short convolution and truncated backpropagation. Our approach substantially improves reasoning performance, achieving state-of-the-art 53.8% pass@1 on ARC-AGI 1 and 16.0% pass@1 on ARC-AGI 2. Our code is available at https://github.com/UbiquantAI/URM.

Agent Bain vs. Agent McKinsey: A New Text-to-SQL Benchmark for the Business Domain

Oct 08, 2025

Abstract: In the business domain, where data-driven decision making is crucial, text-to-SQL is fundamental for easy natural language access to structured data. While recent LLMs have achieved strong performance in code generation, existing text-to-SQL benchmarks remain focused on factual retrieval of past records. We introduce CORGI, a new benchmark specifically designed for real-world business contexts. CORGI is composed of synthetic databases inspired by enterprises such as DoorDash, Airbnb, and Lululemon. It provides questions across four increasingly complex categories of business queries: descriptive, explanatory, predictive, and recommendational. This challenge calls for causal reasoning, temporal forecasting, and strategic recommendation, reflecting multi-level and multi-step agentic intelligence. We find that LLM performance drops on high-level questions, with models struggling to make accurate predictions and offer actionable plans. Based on execution success rate, the CORGI benchmark is about 21% more difficult than the BIRD benchmark. This highlights the gap between popular LLMs and the need for real-world business intelligence. We release a public dataset and evaluation framework, and a website for public submissions.
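As a rough illustration of an execution-based metric like the one mentioned in this abstract, the sketch below computes the fraction of candidate SQL queries that run without error against an in-memory SQLite database. The schema, the queries, and the function name `execution_success_rate` are hypothetical and are not taken from CORGI or BIRD; the benchmarks' actual scoring procedure may differ.

```python
import sqlite3


def execution_success_rate(conn, queries):
    """Return the fraction of queries that execute without an SQLite error."""
    if not queries:
        return 0.0
    succeeded = 0
    for sql in queries:
        try:
            conn.execute(sql).fetchall()
            succeeded += 1
        except sqlite3.Error:
            # Syntax errors, unknown columns, etc. count as failures.
            pass
    return succeeded / len(queries)


def build_demo_db():
    """Create a tiny, made-up orders table purely for demonstration."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, city TEXT, total REAL)"
    )
    conn.executemany(
        "INSERT INTO orders (city, total) VALUES (?, ?)",
        [("Austin", 20.0), ("Boston", 35.5), ("Austin", 12.25)],
    )
    return conn


demo_conn = build_demo_db()
demo_queries = [
    "SELECT city, SUM(total) FROM orders GROUP BY city",  # valid
    "SELECT AVG(total) FROM orders",                      # valid
    "SELECT revenue FROM orders",                         # unknown column
    "SELEC * FROM orders",                                # syntax error
]
rate = execution_success_rate(demo_conn, demo_queries)
print(rate)
```

Note that a query can execute successfully and still return the wrong answer, so a metric of this kind measures executability rather than correctness.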

VMDNet: Time Series Forecasting with Leakage-Free Samplewise Variational Mode Decomposition and Multibranch Decoding

Sep 18, 2025

Abstract: In time series forecasting, capturing recurrent temporal patterns is essential; decomposition techniques make such structure explicit and thereby improve predictive performance. Variational Mode Decomposition (VMD) is a powerful signal-processing method for periodicity-aware decomposition and has seen growing adoption in recent years. However, existing studies often suffer from information leakage and rely on inappropriate hyperparameter tuning. To address these issues, we propose VMDNet, a causality-preserving framework that (i) applies sample-wise VMD to avoid leakage; (ii) represents each decomposed mode with frequency-aware embeddings and decodes it using parallel temporal convolutional networks (TCNs), ensuring mode independence and efficient learning; and (iii) introduces a bilevel, Stackelberg-inspired optimisation to adaptively select VMD's two core hyperparameters: the number of modes (K) and the bandwidth penalty (alpha). Experiments on two energy-related datasets demonstrate that VMDNet achieves state-of-the-art results when periodicity is strong, showing clear advantages in capturing structured periodic patterns while remaining robust under weak periodicity.
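The leakage issue behind point (i) can be sketched in a few lines. The example below is only an illustration under stated assumptions: a centered moving average stands in for VMD (it is not VMD), and the helper names `samplewise_modes` and `global_then_slice` are hypothetical. It contrasts decomposing each lookback window on its own with decomposing the full series first and slicing afterwards, which lets values after the window influence the window's components.

```python
def moving_average(xs, width):
    """Centered moving average, truncated at the edges (stand-in for VMD)."""
    half = width // 2
    out = []
    for i in range(len(xs)):
        lo, hi = max(0, i - half), min(len(xs), i + half + 1)
        out.append(sum(xs[lo:hi]) / (hi - lo))
    return out


def decompose(xs, width=5):
    """Split a sequence into (trend, residual) components."""
    trend = moving_average(xs, width)
    resid = [x - t for x, t in zip(xs, trend)]
    return trend, resid


def samplewise_modes(series, start, lookback, width=5):
    """Leakage-free: decompose only the values inside the lookback window."""
    return decompose(series[start:start + lookback], width)


def global_then_slice(series, start, lookback, width=5):
    """Leaky: decompose the whole series, then cut out the window."""
    trend, resid = decompose(series, width)
    return trend[start:start + lookback], resid[start:start + lookback]


series = [float((i * 7) % 11) for i in range(40)]
# Alter only the "future" part of the series, after the window [10, 20).
tampered = series[:20] + [x + 100.0 for x in series[20:]]

sample_a = samplewise_modes(series, 10, 10)
sample_b = samplewise_modes(tampered, 10, 10)
leaky_a = global_then_slice(series, 10, 10)
leaky_b = global_then_slice(tampered, 10, 10)

print("sample-wise unchanged by future values:", sample_a == sample_b)
print("global-then-slice unchanged by future values:", leaky_a == leaky_b)
```

In this toy setting the sample-wise components are identical for both series, while the global-then-slice components change near the end of the window because the smoothing kernel reaches into the altered future values.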

On Minimization/Maximization of the Generalized Multi-Order Complex Quadratic Form With Constant-Modulus Constraints

Aug 27, 2025

Abstract: In this paper, we study the generalized problem that minimizes or maximizes a multi-order complex quadratic form with constant-modulus constraints on all elements of its optimization variable. Such a mathematical problem is commonly encountered in various applications of signal processing. We term it constant-modulus multi-order complex quadratic programming (CMCQP). In general, the CMCQP is non-convex and difficult to solve. Its objective function typically relates to metrics such as signal-to-noise ratio, Cramér-Rao bound, integrated sidelobe level, etc., and its constraints normally correspond to requirements on similarity to desired aspects, peak-to-average-power ratio, or constant-modulus property in practical scenarios. In order to find efficient solutions to the CMCQP, we first reformulate it into an unconstrained optimization problem with respect to phase values of the studied variable only. Then, we devise a steepest descent/ascent method with fast determination of its optimal step sizes. Specifically, we convert the step-size searching problem into a polynomial form that leads to closed-form solutions of high accuracy, wherein the third-order Taylor expansion of the search function is conducted. Our major contributions also lie in investigating the effect of the order and specific form of matrices embedded in the CMCQP, for which two representative cases are identified. Examples of related applications associated with the two cases are also provided for completeness. The proposed methods are summarized into algorithms, whose convergence speeds are verified to be fast by comprehensive simulations and comparisons to existing methods. The accuracy of our proposed fast step-size determination is also evaluated.
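The phase reformulation described in this abstract can be illustrated on the simplest related case. The sketch below is an assumption-laden toy, not the paper's algorithm: it uses an ordinary quadratic form f = x^H A x with a Hermitian matrix A (not the multi-order form), substitutes x_n = exp(j*phi_n) so that the constant-modulus constraint holds automatically, and runs steepest descent on the phases with a fixed step size in place of the paper's closed-form step-size determination. The matrix `A`, the starting phases, and all function names are made up for the example.

```python
import cmath


def quad_form(A, phases):
    """f(phi) = x^H A x with x_n = exp(j*phi_n); real for Hermitian A."""
    x = [cmath.exp(1j * p) for p in phases]
    n = len(x)
    total = 0j
    for m in range(n):
        for k in range(n):
            total += x[m].conjugate() * A[m][k] * x[k]
    return total.real


def phase_gradient(A, phases):
    """Gradient w.r.t. phases: df/dphi_n = 2 * Im(conj(x_n) * (A x)_n)."""
    x = [cmath.exp(1j * p) for p in phases]
    n = len(x)
    Ax = [sum(A[r][c] * x[c] for c in range(n)) for r in range(n)]
    return [2.0 * (x[r].conjugate() * Ax[r]).imag for r in range(n)]


def descend(A, phases, step=0.05, iters=200):
    """Fixed-step steepest descent over the unconstrained phase vector."""
    phi = list(phases)
    for _ in range(iters):
        grad = phase_gradient(A, phi)
        phi = [p - step * g for p, g in zip(phi, grad)]
    return phi


# Hypothetical 3x3 Hermitian matrix and starting phases.
A = [
    [2.0, 1 - 1j, 0.5j],
    [1 + 1j, 3.0, -1.0],
    [-0.5j, -1.0, 1.5],
]
phi0 = [0.3, -1.1, 2.0]
phi_star = descend(A, phi0)

print("objective at start:", quad_form(A, phi0))
print("objective after descent:", quad_form(A, phi_star))
```

Because every x_n is built from a phase, no projection back onto the unit circle is needed after each update; flipping the sign of the update turns the same loop into steepest ascent for the maximization variant.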

Cross-domain Hyperspectral Image Classification based on Bi-directional Domain Adaptation

Jul 03, 2025

Abstract: Utilizing hyperspectral remote sensing technology enables the extraction of fine-grained land cover classes. Typically, satellite or airborne images used for training and testing are acquired from different regions or times, where the same class has significant spectral shifts in different scenes. In this paper, we propose a Bi-directional Domain Adaptation (BiDA) framework for cross-domain hyperspectral image (HSI) classification, which focuses on extracting both domain-invariant features and domain-specific information in the independent adaptive space, thereby enhancing the adaptability and separability to the target scene. In the proposed BiDA, a triple-branch transformer architecture (the source branch, target branch, and coupled branch) with a semantic tokenizer is designed as the backbone. Specifically, the source branch and target branch independently learn the adaptive space of source and target domains, and a Coupled Multi-head Cross-attention (CMCA) mechanism is developed in the coupled branch for feature interaction and inter-domain correlation mining. Furthermore, a bi-directional distillation loss is designed to guide adaptive space learning using inter-domain correlation. Finally, we propose an Adaptive Reinforcement Strategy (ARS) to encourage the model to focus on specific generalized feature extraction within both source and target scenes under noisy conditions. Experimental results on cross-temporal/scene airborne and satellite datasets demonstrate that the proposed BiDA performs significantly better than some state-of-the-art domain adaptation approaches. In the cross-temporal tree species classification task, the proposed BiDA is more than 3%–5% higher than the most advanced method. The codes will be available from the website: https://github.com/YuxiangZhang-BIT/IEEE_TCSVT_BiDA.

Transforming Weather Data from Pixel to Latent Space

Mar 09, 2025

Abstract: The increasing impact of climate change and extreme weather events has spurred growing interest in deep learning for weather research. However, existing studies often rely on weather data in pixel space, which presents several challenges such as over-smoothed model outputs, applicability limited to a single pressure-variable subset (PVS), and high data storage and computational costs. To address these challenges, we propose a novel Weather Latent Autoencoder (WLA) that transforms weather data from pixel space to latent space, enabling efficient weather task modeling. By decoupling weather reconstruction from downstream tasks, WLA improves the accuracy and sharpness of weather task model results. The incorporated Pressure-Variable Unified Module transforms multiple PVS into a unified representation, enhancing the adaptability of the model in multiple weather scenarios. Furthermore, weather tasks can be performed in a low-storage latent space of WLA rather than a high-storage pixel space, thus significantly reducing data storage and computational costs. Through extensive experimentation, we demonstrate its superior compression and reconstruction performance, enabling the creation of the ERA5-latent dataset with unified representations of multiple PVS from ERA5 data. The compressed full PVS in the ERA5-latent dataset reduces the original 244.34 TB of data to 0.43 TB. The downstream task further demonstrates that task models can apply to multiple PVS with low data costs in latent space and achieve superior performance compared to models in pixel space. Code, ERA5-latent data, and pre-trained models are available at https://anonymous.4open.science/r/Weather-Latent-Autoencoder-8467.

LWFNet: Coherent Doppler Wind Lidar-Based Network for Wind Field Retrieval

Jan 05, 2025

Abstract: Accurate detection of wind fields within the troposphere is essential for atmospheric dynamics research and plays a crucial role in extreme weather forecasting. Coherent Doppler wind lidar (CDWL) is widely regarded as the most suitable technique for high spatial and temporal resolution wind field detection. However, since coherent detection relies heavily on the concentration of aerosol particles, which cause Mie scattering, the received backscattering lidar signal exhibits significantly low intensity at high altitudes. As a result, conventional methods, such as spectral centroid estimation, often fail to produce credible and accurate wind retrieval results in these regions. To address this issue, we propose LWFNet, the first Lidar-based Wind Field (WF) retrieval neural Network, built upon Transformer and the Kolmogorov-Arnold network. Our model is trained solely on targets derived from the traditional wind retrieval algorithm and uses radiosonde measurements as the ground truth for evaluating test results. Experimental results demonstrate that LWFNet not only extends the maximum wind field detection range but also produces more accurate results, exhibiting a level of precision that surpasses the labeled targets. We refer to this phenomenon as super-accuracy and investigate the potential underlying factors that contribute to it. In addition, we compare the performance of LWFNet with other state-of-the-art (SOTA) models, highlighting its superior effectiveness and capability in high-resolution wind retrieval. LWFNet demonstrates remarkable performance in lidar-based wind field retrieval, setting a benchmark for future research and advancing the development of deep learning models in this domain.

Task-Parameter Nexus: Task-Specific Parameter Learning for Model-Based Control

Dec 17, 2024

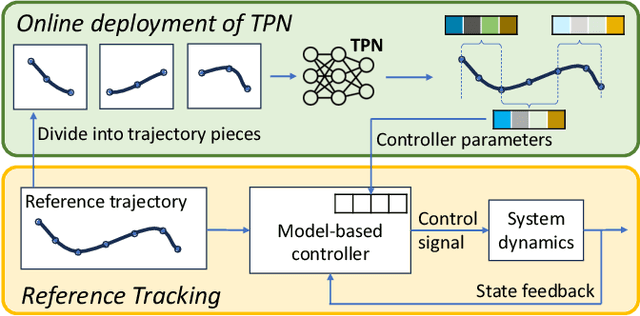

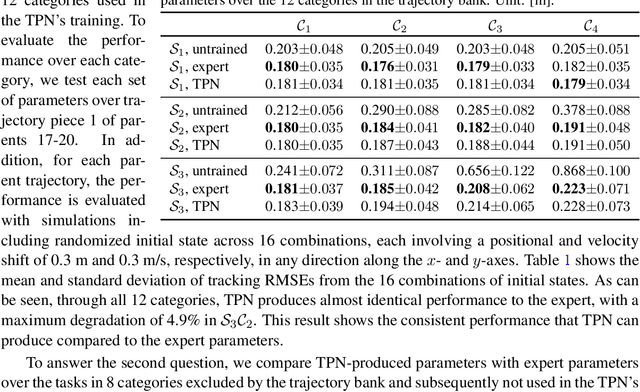

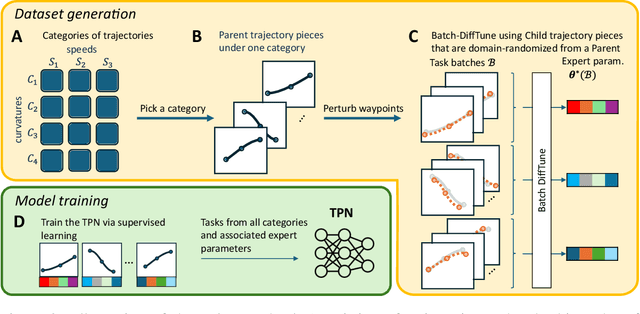

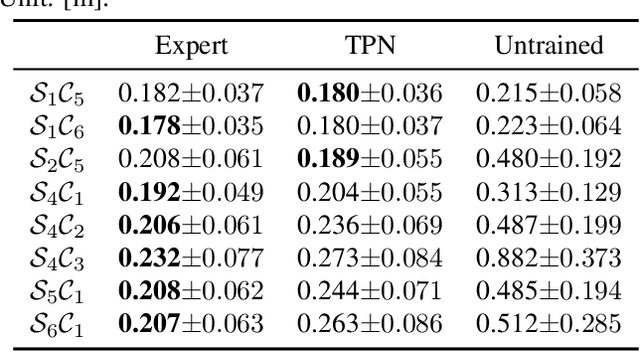

Abstract: This paper presents the Task-Parameter Nexus (TPN), a learning-based approach for online determination of the (near-)optimal control parameters of model-based controllers (MBCs) for tracking tasks. In TPN, a deep neural network is introduced to predict the control parameters for any given tracking task at runtime, especially when optimal parameters for new tasks are not immediately available. To train this network, we constructed a trajectory bank with various speeds and curvatures that represent different motion characteristics. Then, for each trajectory in the bank, we auto-tune the optimal control parameters offline and use them as the corresponding ground truth. With this dataset, the TPN is trained by supervised learning. We evaluated the TPN on the quadrotor platform. In simulation experiments, it is shown that the TPN can predict near-optimal control parameters for a spectrum of tracking tasks, demonstrating its robust generalization capabilities to unseen tasks.
