Puyang Wang

SDUM: A Scalable Deep Unrolled Model for Universal MRI Reconstruction

Dec 19, 2025

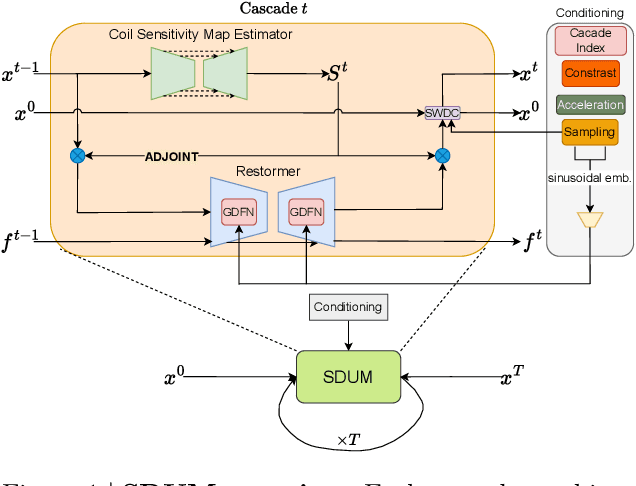

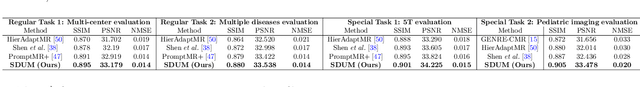

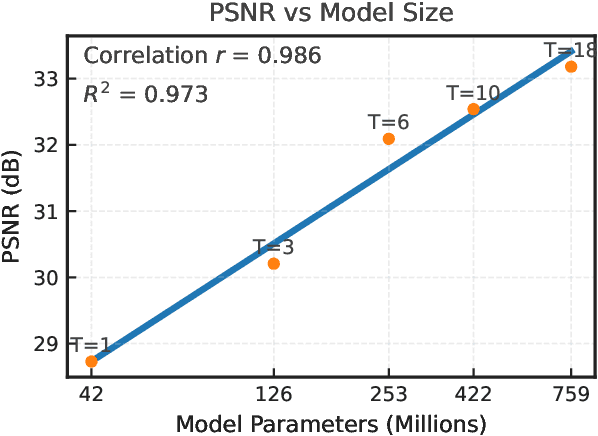

Abstract: Clinical MRI encompasses diverse imaging protocols, spanning anatomical targets (cardiac, brain, knee), contrasts (T1, T2, mapping), sampling patterns (Cartesian, radial, spiral, kt-space), and acceleration factors, yet current deep learning reconstructions are typically protocol-specific, hindering generalization and deployment. We introduce the Scalable Deep Unrolled Model (SDUM), a universal framework combining a Restormer-based reconstructor, a learned coil sensitivity map estimator (CSME), sampling-aware weighted data consistency (SWDC), universal conditioning (UC) on cascade index and protocol metadata, and progressive cascade expansion training. SDUM exhibits foundation-model-like scaling behavior: reconstruction quality follows PSNR $\sim$ log(parameters) with correlation $r = 0.986$ ($R^2 = 0.973$) up to 18 cascades, demonstrating predictable performance gains with model depth. A single SDUM trained on heterogeneous data achieves state-of-the-art results across all four CMRxRecon2025 challenge tracks (multi-center, multi-disease, 5T, and pediatric) without task-specific fine-tuning, surpassing specialized baselines by up to +1.0 dB. On CMRxRecon2024, SDUM outperforms the winning method PromptMR+ by +0.55 dB; on fastMRI brain, it exceeds PC-RNN by +1.8 dB. Ablations validate each component: SWDC +0.43 dB over standard DC, per-cascade CSME +0.51 dB, UC +0.38 dB. These results establish SDUM as a practical path toward universal, scalable MRI reconstruction.
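The scaling claim in this abstract (PSNR growing roughly linearly in the logarithm of the parameter count) can be checked with an ordinary least-squares fit. The sketch below is only an illustration of that kind of analysis: the function names are hypothetical, and the parameter counts and PSNR values are made-up placeholders, not numbers from the paper.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)


def fit_log_linear(param_counts, psnr_db):
    """Least-squares fit of PSNR = slope * log10(params) + intercept."""
    xs = [math.log10(p) for p in param_counts]
    n = len(xs)
    mx = sum(xs) / n
    my = sum(psnr_db) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, psnr_db))
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = my - slope * mx
    return slope, intercept, pearson_r(xs, psnr_db)


# HYPOTHETICAL data for illustration only (not results from the paper).
param_counts = [1e7, 2e7, 4e7, 8e7, 1.6e8]
psnr_db = [38.0, 38.6, 39.1, 39.8, 40.3]

slope, intercept, r = fit_log_linear(param_counts, psnr_db)
print(f"dB per decade of parameters: {slope:.2f}, r = {r:.3f}")
```

A value of `r` close to 1 on such a fit is what a statement like "PSNR $\sim$ log(parameters)" summarizes; the slope gives the expected gain in dB per tenfold increase in parameters.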

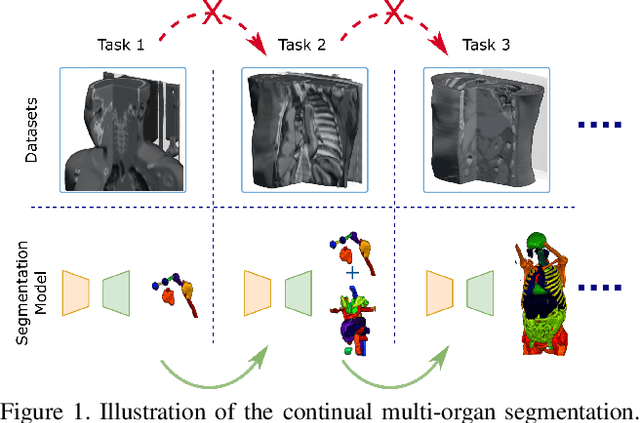

A Continual Learning-driven Model for Accurate and Generalizable Segmentation of Clinically Comprehensive and Fine-grained Whole-body Anatomies in CT

Mar 16, 2025

Abstract: Precision medicine in the quantitative management of chronic diseases and oncology would be greatly improved if the Computed Tomography (CT) scan of any patient could be segmented, parsed, and analyzed in a precise and detailed way. However, no fully annotated CT dataset with all anatomies delineated exists for training, because of the exceptionally high manual cost, the need for specialized clinical expertise, and the time required to finish the task. To this end, we propose a novel continual learning-driven CT model that can segment the complete anatomies present using dozens of previously partially labeled datasets, dynamically expanding its capacity to segment new ones without compromising previously learned organ knowledge. Existing multi-dataset approaches cannot dynamically segment new anatomies without catastrophic forgetting, and they encounter optimization difficulty or infeasibility when segmenting hundreds of anatomies across the whole range of body regions. Our single unified CT segmentation model, CL-Net, can segment a clinically comprehensive set of 235 fine-grained whole-body anatomies with high accuracy. Composed of a universal encoder and multiple optimized and pruned decoders, CL-Net is developed using 13,952 CT scans from 20 public and 16 private high-quality partially labeled CT datasets covering various vendors, contrast phases, and pathologies. Extensive evaluation demonstrates that CL-Net consistently outperforms the upper limit of an ensemble of 36 specialist nnUNets trained per dataset while requiring only 5% of the model size, and surpasses the segmentation accuracy of recent leading Segment Anything-style medical image foundation models by large margins. Our continual learning-driven CL-Net model lays a solid foundation for many downstream tasks in oncology and chronic diseases using the most widely adopted CT imaging.

Low-Rank Continual Pyramid Vision Transformer: Incrementally Segment Whole-Body Organs in CT with Light-Weighted Adaptation

Oct 07, 2024

Abstract: Deep segmentation networks achieve high performance when trained on specific datasets. However, in clinical practice, it is often desirable that pretrained segmentation models can be dynamically extended to segment new organs without access to previous training datasets and without training from scratch. This would ensure a much more efficient model development and deployment paradigm that accounts for patient privacy and data storage issues. This clinically preferred process can be viewed as a continual semantic segmentation (CSS) problem. Previous CSS works either experience catastrophic forgetting or incur unaffordable memory costs as models expand. In this work, we propose a new continual whole-body organ segmentation model with light-weighted low-rank adaptation (LoRA). We first train and freeze a pyramid vision transformer (PVT) base segmentation model on the initial task, then continually add light-weighted trainable LoRA parameters to the frozen model for each new learning task. Through a holistic exploration of architecture modifications, we identify the three most important layers (i.e., patch-embedding, multi-head attention, and feed-forward layers) that are critical in adapting to new segmentation tasks, while keeping the majority of the pretrained parameters fixed. Our proposed model continually segments new organs without catastrophic forgetting while maintaining a low parameter increase rate. Continually trained and tested on four datasets covering different body parts with a total of 121 organs, our model achieves high segmentation accuracy, closely approaching the PVT and nnUNet upper bounds, and significantly outperforms other regularization-based CSS methods. Compared with the leading architecture-based CSS method, our model has a substantially lower parameter increase rate while achieving comparable performance.
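To make the low-rank adaptation idea concrete, here is a minimal pure-Python sketch of a single LoRA-augmented linear layer: the pretrained weight stays frozen and only two small matrices are trainable. The class name `LoRALinear`, the zero initialization of `B`, and the `alpha / rank` scaling follow common LoRA practice and are assumptions here, not details taken from this paper's implementation.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class LoRALinear:
    """Frozen weight W plus a trainable low-rank update (alpha / rank) * B @ A."""

    def __init__(self, weight, rank, alpha=1.0, seed=0):
        self.weight = weight  # frozen pretrained weight, shape (d_out, d_in)
        d_out, d_in = len(weight), len(weight[0])
        rng = random.Random(seed)
        # A: (rank, d_in), small random init. B: (d_out, rank), zero init,
        # so the adapted layer initially reproduces the frozen layer exactly.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank

    def forward(self, x):
        base = matvec(self.weight, x)
        low_rank = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * l for b, l in zip(base, low_rank)]

    def trainable_params(self):
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


# Toy 64x64 identity weight with a rank-4 adapter (sizes chosen for illustration).
d = 64
frozen = [[1.0 if i == j else 0.0 for j in range(d)] for i in range(d)]
layer = LoRALinear(frozen, rank=4)
print(layer.trainable_params(), "trainable vs", d * d, "frozen")  # 512 vs 4096
```

Adding one such adapter per new task, while the base weights never change, is what keeps earlier tasks intact and the per-task parameter growth small in this toy setting.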

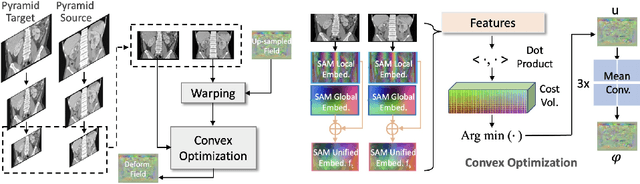

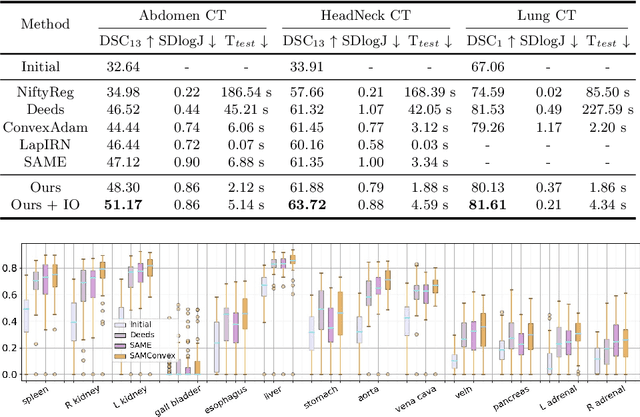

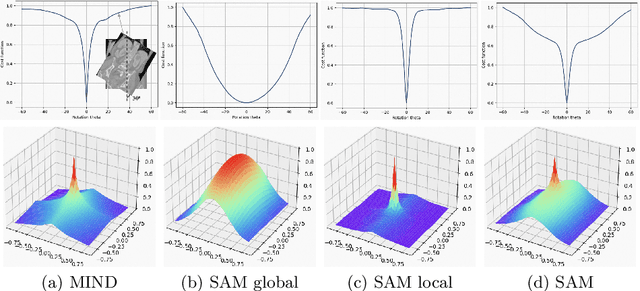

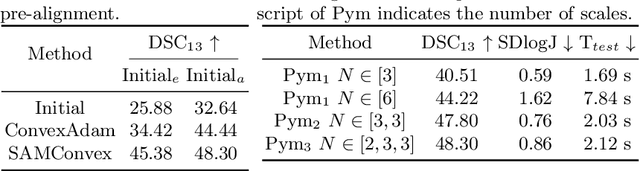

SAMConvex: Fast Discrete Optimization for CT Registration using Self-supervised Anatomical Embedding and Correlation Pyramid

Jul 19, 2023

Abstract: Estimating a displacement vector field via a cost volume computed in feature space has shown great success in image registration, but it suffers from an excessive computational burden. Moreover, existing feature descriptors extract only local features and cannot represent global semantic information, which is especially important for solving large transformations. To address these issues, we propose SAMConvex, a fast coarse-to-fine discrete optimization method for CT registration that includes a decoupled convex optimization procedure to obtain deformation fields based on a self-supervised anatomical embedding (SAM) feature extractor that captures both local and global information. Specifically, SAMConvex extracts per-voxel features, builds 6D correlation volumes based on SAM features, and iteratively updates a flow field by performing lookups on the correlation volumes with a coarse-to-fine scheme. SAMConvex outperforms state-of-the-art learning-based and optimization-based methods on two inter-patient registration datasets (Abdomen CT and HeadNeck CT) and one intra-patient registration dataset (Lung CT). Moreover, as an optimization-based method, SAMConvex takes only $\sim$2 s ($\sim$5 s with instance optimization) for one image pair.
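The correlation-volume idea can be illustrated in one dimension: for every position in the fixed image, store the feature similarity to each candidate displaced position in the moving image, then look up the best displacement. The sketch below is a deliberately simplified toy. It uses a plain winner-take-all lookup on a single scale, whereas the method described above builds 6D volumes for 3D images and combines lookups with a convex optimization in a coarse-to-fine scheme. All function names are hypothetical.

```python
def correlation_volume(fixed, moving, max_disp):
    """vol[i][k] = dot(fixed[i], moving[i + d]) with d = k - max_disp.

    `fixed` and `moving` are lists of per-position feature vectors.
    Out-of-range candidates get -inf so they are never selected.
    """
    n = len(fixed)
    volume = []
    for i in range(n):
        row = []
        for d in range(-max_disp, max_disp + 1):
            j = i + d
            if 0 <= j < n:
                row.append(sum(a * b for a, b in zip(fixed[i], moving[j])))
            else:
                row.append(float("-inf"))
        volume.append(row)
    return volume


def best_displacements(volume, max_disp):
    """Winner-take-all lookup: displacement with the highest correlation."""
    return [max(range(len(row)), key=row.__getitem__) - max_disp for row in volume]


# Toy features: one-hot descriptors; the moving signal is the fixed one shifted by 2.
n = 6
fixed = [[1 if k == i else 0 for k in range(n)] for i in range(n)]
moving = [fixed[j - 2] if j >= 2 else [0] * n for j in range(n)]

volume = correlation_volume(fixed, moving, max_disp=3)
print(best_displacements(volume, max_disp=3)[:4])  # [2, 2, 2, 2]
```

Only the first four positions have a true match inside the signal, which is why the example prints just those entries.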

Accurate Airway Tree Segmentation in CT Scans via Anatomy-aware Multi-class Segmentation and Topology-guided Iterative Learning

Jun 15, 2023

Abstract: Intrathoracic airway segmentation in computed tomography (CT) is a prerequisite for analyzing various respiratory diseases such as chronic obstructive pulmonary disease (COPD), asthma, and lung cancer. Unlike other organs with simpler shapes or topology, the airway's complex tree structure makes generating the "ground truth" label prohibitively laborious (up to 7 or 3 hours of manual or semi-automatic annotation, respectively, per case). Most existing airway datasets are incompletely labeled, which limits the completeness of computer-segmented airways. In this paper, we propose a new anatomy-aware multi-class airway segmentation method enhanced by topology-guided iterative self-learning. Based on the natural airway anatomy, we formulate a simple yet highly effective anatomy-aware multi-class segmentation task to intuitively handle the severe intra-class imbalance of the airway. To solve the incomplete labeling issue, we propose a tailored self-iterative learning scheme to segment toward the complete airway tree. To generate pseudo-labels with higher sensitivity, we introduce a novel breakage attention map and design a topology-guided pseudo-label refinement method that iteratively connects the broken branches commonly present in initial pseudo-labels. Extensive experiments have been conducted on four datasets, including two public challenges. The proposed method ranked 1st in both the EXACT'09 challenge (average score) and the ATM'22 challenge (weighted average score). On a public BAS dataset and a private lung cancer dataset, our method significantly improves on previous leading approaches, detecting at least 7.5% (absolute) more tree length and 4.0% more tree branches while maintaining similar precision.

Multi-site, Multi-domain Airway Tree Modeling: A Public Benchmark for Pulmonary Airway Segmentation

Mar 10, 2023

Abstract: Open international challenges are becoming the de facto standard for assessing computer vision and image analysis algorithms. In recent years, new methods have pushed pulmonary airway segmentation closer to the limit of image resolution. Since the EXACT'09 pulmonary airway segmentation challenge, limited effort has been directed to the quantitative comparison of newly emerged algorithms, which are driven by the maturity of deep learning-based approaches and the clinical drive to resolve finer details of distal airways for early intervention in pulmonary diseases. Thus far, public annotated datasets are extremely limited, hindering the development of data-driven methods and the detailed performance evaluation of new algorithms. To provide a benchmark for the medical imaging community, we organized the Multi-site, Multi-domain Airway Tree Modeling (ATM'22) challenge, which was held as an official challenge event during the MICCAI 2022 conference. ATM'22 provides large-scale CT scans with detailed pulmonary airway annotation, comprising 500 CT scans (300 for training, 50 for validation, and 150 for testing). The dataset was collected from different sites and also includes a portion of noisy COVID-19 CTs with ground-glass opacity and consolidation. Twenty-three teams participated in the entire phase of the challenge, and the algorithms of the top ten teams are reviewed in this paper. Quantitative and qualitative results revealed that deep learning models embedded with topological continuity enhancement achieved superior performance in general. The ATM'22 challenge follows an open-call design: the training data and the gold standard evaluation are available upon successful registration via its homepage.

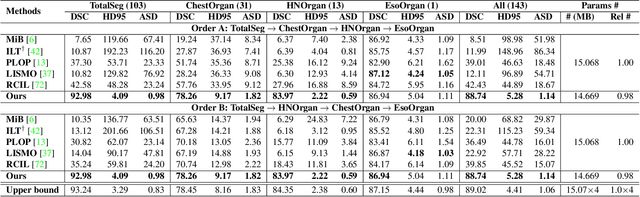

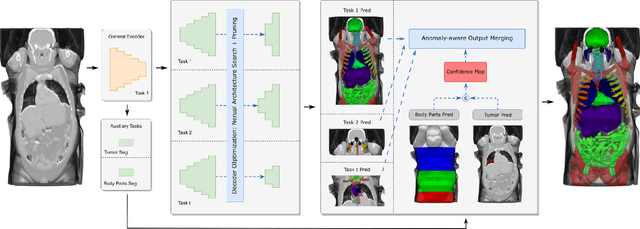

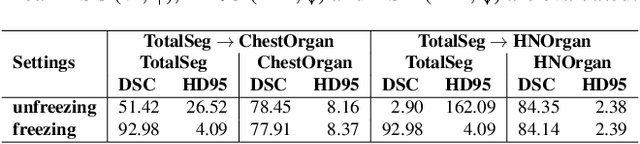

Continual Segment: Towards a Single, Unified and Accessible Continual Segmentation Model of 143 Whole-body Organs in CT Scans

Feb 04, 2023

Abstract: Deep learning empowers mainstream medical image segmentation methods. Nevertheless, current deep segmentation approaches cannot efficiently and effectively adapt and update trained models when new segmentation classes (with or without new training datasets) need to be added incrementally. In real clinical environments, it is often preferred that segmentation models can be dynamically extended to segment new organs/tumors without (re-)accessing previous training datasets, owing to obstacles of patient privacy and data storage. This process can be viewed as a continual semantic segmentation (CSS) problem, which is understudied for multi-organ segmentation. In this work, we propose a new architectural CSS learning framework to learn a single deep segmentation model for segmenting a total of 143 whole-body organs. Using the encoder/decoder network structure, we demonstrate that a continually trained and then frozen encoder coupled with incrementally added decoders can extract and preserve sufficiently representative image features for new classes to be subsequently and validly segmented. To keep the complexity at that of a single network model, we trim each decoder progressively using neural architecture search and teacher-student knowledge distillation. To handle both healthy and pathological organs appearing in different datasets, a novel anomaly-aware and confidence learning module is proposed to merge the overlapping organ predictions originating from different decoders. Trained and validated on 3D CT scans of 2500+ patients from four datasets, our single network can segment a total of 143 whole-body organs with very high accuracy, closely approaching the upper-bound performance obtained by training four separate segmentation models (i.e., one model per dataset/task).

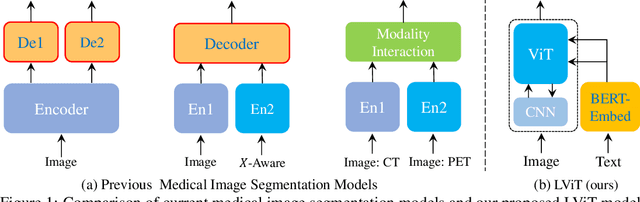

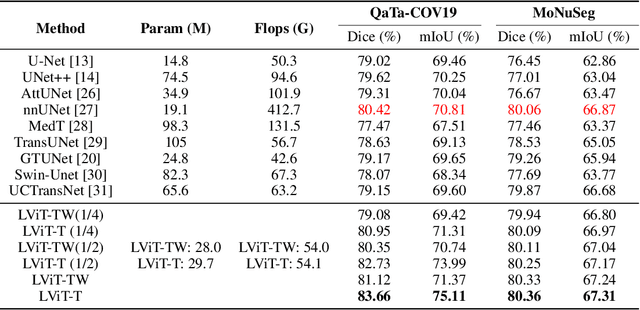

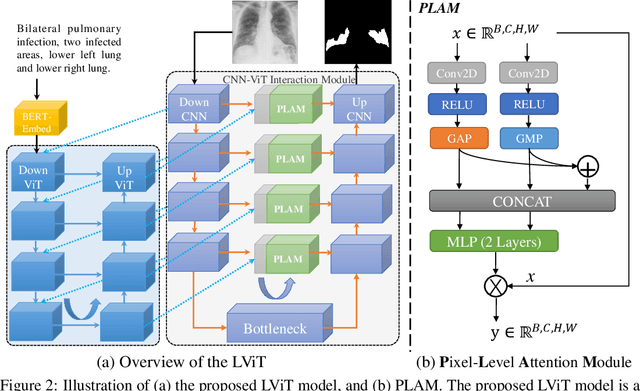

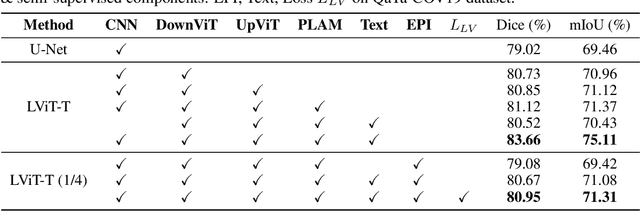

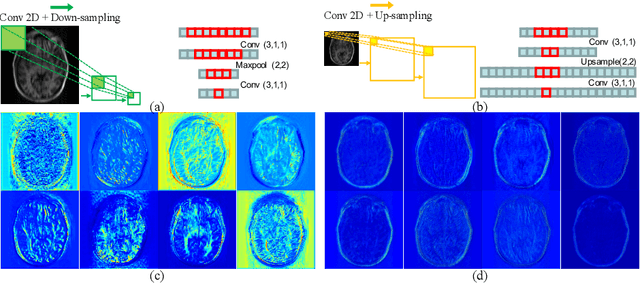

LViT: Language meets Vision Transformer in Medical Image Segmentation

Jun 29, 2022

Abstract: Deep learning has been widely used in medical image segmentation and related tasks. However, the performance of existing medical image segmentation models has been limited by the difficulty of obtaining a sufficient amount of high-quality data, given the high cost of data annotation. To overcome this limitation, we propose a new vision-language medical image segmentation model, LViT (Language meets Vision Transformer). In our model, medical text annotation is introduced to compensate for the quality deficiency in image data. In addition, the text information can guide the generation of pseudo labels to a certain extent and further guarantee the quality of pseudo labels in semi-supervised learning. We also propose the Exponential Pseudo label Iteration mechanism (EPI) to help extend the semi-supervised version of LViT, and the Pixel-Level Attention Module (PLAM) to preserve local features of images. In our model, the LV (Language-Vision) loss is designed to supervise the training of unlabeled images using text information directly. To validate the performance of LViT, we construct multimodal medical segmentation datasets (image + text) containing pathological images, X-rays, etc. Experimental results show that our proposed LViT has better segmentation performance in both fully supervised and semi-supervised conditions. Code and datasets are available at https://github.com/HUANGLIZI/LViT.

Deep-learning-enabled Brain Hemodynamic Mapping Using Resting-state fMRI

Apr 25, 2022

Abstract: Cerebrovascular disease is a leading cause of death globally. Prevention and early intervention are known to be the most effective forms of its management. Non-invasive imaging methods hold great promise for early stratification, but at present lack the sensitivity for personalized prognosis. Resting-state functional magnetic resonance imaging (rs-fMRI), a powerful tool previously used for mapping neural activity, is available in most hospitals. Here we show that rs-fMRI can be used to map cerebral hemodynamic function and delineate impairment. By exploiting time variations in breathing pattern during rs-fMRI, deep learning enables reproducible mapping of cerebrovascular reactivity (CVR) and bolus arrival time (BAT) of the human brain using resting-state CO2 fluctuations as a natural 'contrast medium'. The deep-learning network was trained with CVR and BAT maps obtained with a reference method of CO2-inhalation MRI, which included data from young and older healthy subjects and patients with Moyamoya disease and brain tumors. We demonstrate the performance of deep-learning cerebrovascular mapping in the detection of vascular abnormalities, the evaluation of revascularization effects, and the assessment of vascular alterations in normal aging. In addition, cerebrovascular maps obtained with the proposed method exhibited excellent reproducibility in both healthy volunteers and stroke patients. Deep-learning resting-state vascular imaging has the potential to become a useful tool in clinical cerebrovascular imaging.

Over-and-Under Complete Convolutional RNN for MRI Reconstruction

Jun 25, 2021

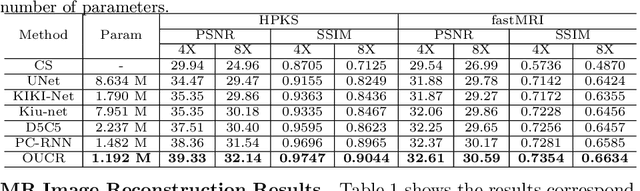

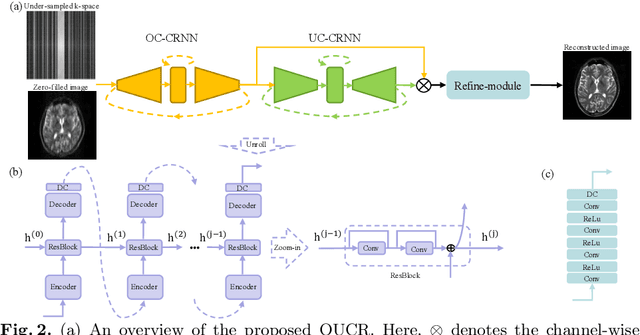

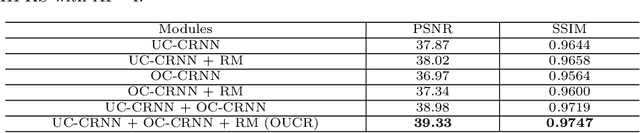

Abstract: Reconstructing magnetic resonance (MR) images from undersampled data is a challenging problem due to the various artifacts introduced by the undersampling operation. Recent deep learning-based methods for MR image reconstruction usually leverage a generic auto-encoder architecture, which captures low-level features at the initial layers and high-level features at the deeper layers. Such networks focus heavily on global features, which may not be optimal for reconstructing the fully sampled image. In this paper, we propose an Over-and-Under Complete Convolutional Recurrent Neural Network (OUCR), which consists of an overcomplete and an undercomplete Convolutional Recurrent Neural Network (CRNN). The overcomplete branch pays special attention to learning local structures by restraining the receptive field of the network. Combining it with the undercomplete branch leads to a network that focuses more on low-level features without losing the global structures. Extensive experiments on two datasets demonstrate that the proposed method achieves significant improvements over compressed sensing and popular deep learning-based methods with fewer trainable parameters.
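The statement that an overcomplete branch restrains the receptive field can be checked with the standard receptive-field recurrence: each layer adds `(kernel - 1) * jump` input pixels, and the jump is multiplied by the layer's stride. The sketch below is a back-of-the-envelope illustration, not the OUCR architecture. The layer stacks are hypothetical, and upsampling is approximated as a kernel-1 layer with stride 0.5 (an assumption that matches nearest-neighbor upsampling).

```python
def receptive_field(layers):
    """Receptive field (in input pixels) of a stack of (kernel, stride) layers.

    Downsampling uses stride > 1; 2x upsampling is modeled as (1, 0.5).
    """
    rf, jump = 1.0, 1.0
    for kernel, stride in layers:
        rf += (kernel - 1) * jump  # growth contributed by this layer
        jump *= stride             # spacing of this layer's outputs in input pixels
    return rf


conv3 = (3, 1)
pool2 = (2, 2)      # 2x2 max pooling (undercomplete: features shrink)
up2 = (1, 0.5)      # 2x upsampling (overcomplete: features grow)

plain = [conv3, conv3, conv3]
undercomplete = [conv3, pool2, conv3, pool2, conv3]
overcomplete = [conv3, up2, conv3, up2, conv3]

print(receptive_field(plain))          # 7.0
print(receptive_field(undercomplete))  # 18.0
print(receptive_field(overcomplete))   # 4.5
```

With the same three 3x3 convolutions, the pooled stack sees 18 input pixels per output location while the upsampled stack sees only 4.5, which is the sense in which an overcomplete branch stays focused on local structure.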
