Lei Pan

University of Alberta

Neuronal Activation States as Sample Embeddings for Data Selection in Task-Specific Instruction Tuning

Mar 19, 2025

Abstract:Task-specific instruction tuning enhances the performance of large language models (LLMs) on specialized tasks, yet efficiently selecting relevant data for this purpose remains a challenge. Inspired by neural coactivation in the human brain, we propose a novel data selection method called NAS, which leverages neuronal activation states as embeddings for samples in the feature space. Extensive experiments show that NAS outperforms classical data selection methods in terms of both effectiveness and robustness across different models, datasets, and selection ratios.

Alignment for Efficient Tool Calling of Large Language Models

Mar 09, 2025

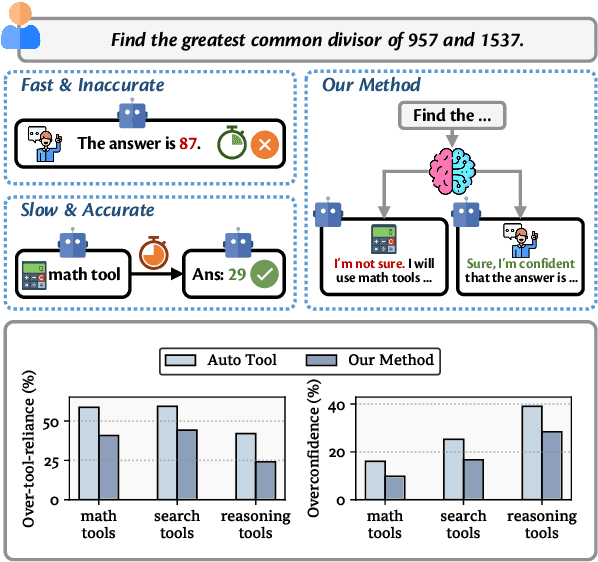

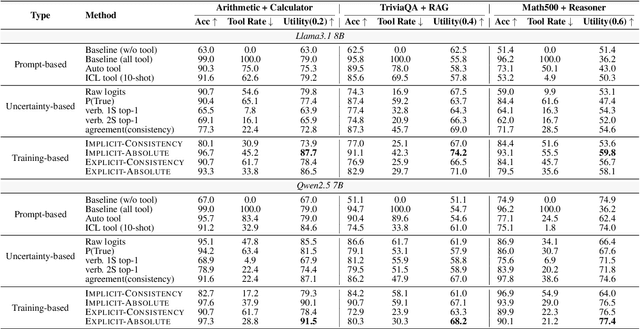

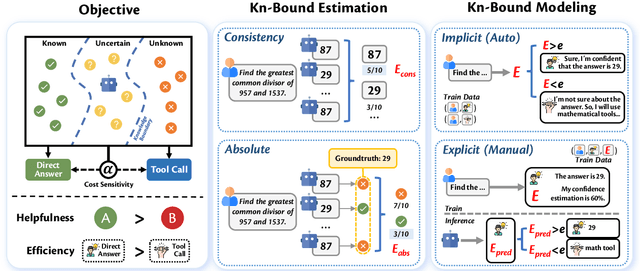

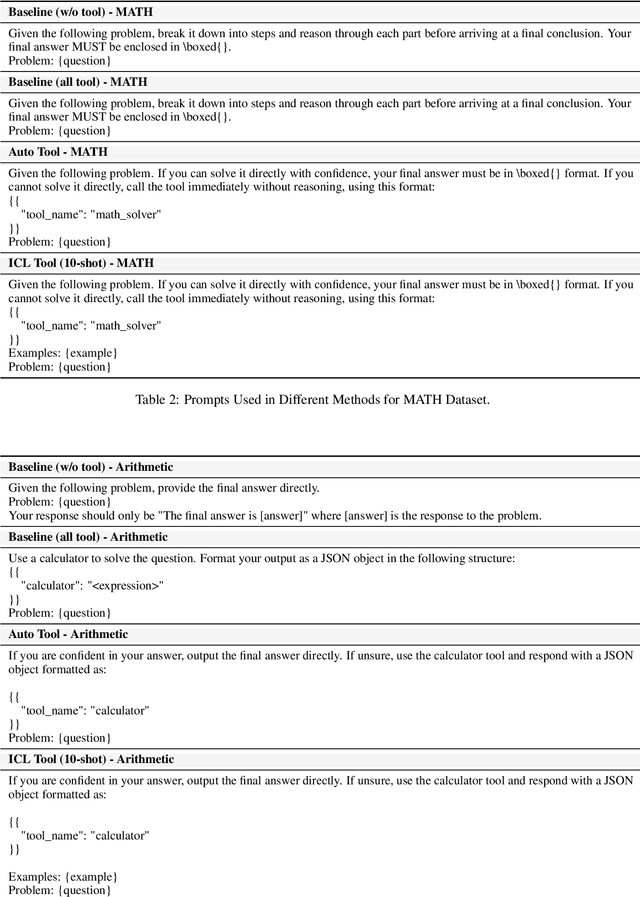

Abstract:Recent advancements in tool learning have enabled large language models (LLMs) to integrate external tools, enhancing their task performance by expanding their knowledge boundaries. However, relying on tools often introduces tradeoffs between performance, speed, and cost, with LLMs sometimes exhibiting overreliance and overconfidence in tool usage. This paper addresses the challenge of aligning LLMs with their knowledge boundaries to make more intelligent decisions about tool invocation. We propose a multi-objective alignment framework that combines probabilistic knowledge boundary estimation with dynamic decision-making, allowing LLMs to better assess when to invoke tools based on their confidence. Our framework includes two methods for knowledge boundary estimation, consistency-based and absolute estimation, and two training strategies for integrating these estimates into the model's decision-making process. Experimental results on various tool invocation scenarios demonstrate the effectiveness of our framework, showing significant improvements in tool efficiency by reducing unnecessary tool usage.

Compressing KV Cache for Long-Context LLM Inference with Inter-Layer Attention Similarity

Dec 03, 2024

Abstract:The increasing context window size in Large Language Models (LLMs), such as the GPT and LLaMA series, has improved their ability to tackle complex, long-text tasks, but at the cost of inference efficiency, particularly regarding memory and computational complexity. Existing methods, including selective token retention and window-based attention, improve efficiency but risk discarding important tokens needed for future text generation. In this paper, we propose an approach that enhances LLM efficiency without token loss by reducing the memory and computational load of less important tokens, rather than discarding them. We address two challenges: 1) investigating the distribution of important tokens in the context, finding that recent tokens are more important than distant ones, and 2) optimizing resources for distant tokens by sharing attention scores across layers. The experiments show that our method saves 35% of the KV cache without compromising performance.

Smart energy management: process structure-based hybrid neural networks for optimal scheduling and economic predictive control in integrated systems

Oct 07, 2024

Abstract:Integrated energy systems (IESs) are complex systems consisting of diverse operating units spanning multiple domains. To address their operational challenges, we propose a physics-informed hybrid time-series neural network (NN) surrogate to predict the dynamic performance of IESs across multiple time scales. This neural network-based modeling approach develops time-series multi-layer perceptrons (MLPs) for the operating units and integrates them with prior process knowledge about system structure and fundamental dynamics. This integration forms three hybrid NNs (long-term, slow, and fast MLPs) that predict the entire system dynamics across multiple time scales. Leveraging these MLPs, we design an NN-based scheduler and an NN-based economic model predictive control (NEMPC) framework to meet global operational requirements: rapid electrical power responsiveness to operators' requests, adequate cooling supply to customers, and increased system profitability, while addressing the dynamic time-scale multiplicity present in IESs. The proposed day-ahead scheduler is formulated using the ReLU network-based MLP, which effectively represents IES performance under a broad range of conditions from a long-term perspective. The scheduler is then exactly recast into a mixed-integer linear programming problem for efficient evaluation. The real-time NEMPC, based on slow and fast MLPs, comprises two sequential distributed control agents: a slow NEMPC for the cooling-dominant subsystem with slower transient responses and a fast NEMPC for the power-dominant subsystem with faster responses. Extensive simulations demonstrate that the developed scheduler and NEMPC schemes outperform their respective benchmark scheduler and controller by about 25% and 40%. Together, they enhance overall system performance by over 70% compared to benchmark approaches.

RL-GSBridge: 3D Gaussian Splatting Based Real2Sim2Real Method for Robotic Manipulation Learning

Sep 30, 2024

Abstract:Sim-to-Real refers to the process of transferring policies learned in simulation to the real world, which is crucial for achieving practical robotics applications. However, recent Sim2Real methods rely on either large amounts of augmented data or large learning models, which is inefficient for specific tasks. In recent years, radiance field-based reconstruction methods, especially 3D Gaussian Splatting, have made it possible to reproduce realistic real-world scenarios. To this end, we propose a novel real-to-sim-to-real reinforcement learning framework, RL-GSBridge, which introduces a mesh-based 3D Gaussian Splatting method to realize zero-shot sim-to-real transfer for vision-based deep reinforcement learning. We improve the mesh-based 3D GS modeling method by using soft binding constraints, enhancing the rendering quality of mesh models. We then employ a GS editing approach to synchronize rendering with the physics simulator, reflecting the interactions of the physical robot more accurately. Through a series of sim-to-real robotic arm experiments, including grasping and pick-and-place tasks, we demonstrate that RL-GSBridge maintains a satisfactory success rate in real-world task completion during sim-to-real transfer. Furthermore, a series of rendering metrics and visualization results indicate that our proposed mesh-based 3D Gaussian reduces artifacts in unstructured objects, demonstrating more realistic rendering performance.

Probing the Robustness of Vision-Language Pretrained Models: A Multimodal Adversarial Attack Approach

Aug 24, 2024

Abstract:Vision-language pretraining (VLP) with transformers has demonstrated exceptional performance across numerous multimodal tasks. However, the adversarial robustness of these models has not been thoroughly investigated. Existing multimodal attack methods have largely overlooked cross-modal interactions between visual and textual modalities, particularly in the context of cross-attention mechanisms. In this paper, we study the adversarial vulnerability of recent VLP transformers and design a novel Joint Multimodal Transformer Feature Attack (JMTFA) that concurrently introduces adversarial perturbations in both visual and textual modalities under white-box settings. JMTFA strategically targets attention relevance scores to disrupt important features within each modality, generating adversarial samples by fusing perturbations and leading to erroneous model predictions. Experimental results indicate that the proposed approach achieves high attack success rates on vision-language understanding and reasoning downstream tasks compared to existing baselines. Notably, our findings reveal that the textual modality significantly influences the complex fusion processes within VLP transformers. Moreover, we observe no apparent relationship between model size and adversarial robustness under our proposed attacks. These insights emphasize a new dimension of adversarial robustness and underscore potential risks in the reliable deployment of multimodal AI systems.

NeRF in Robotics: A Survey

May 02, 2024

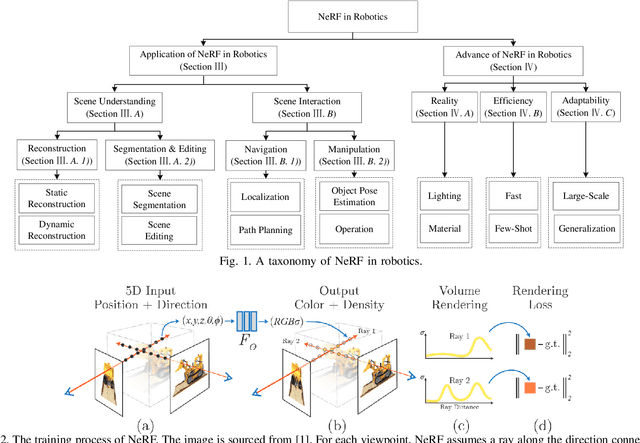

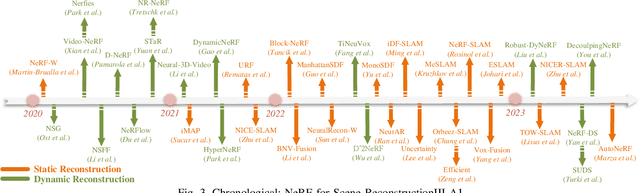

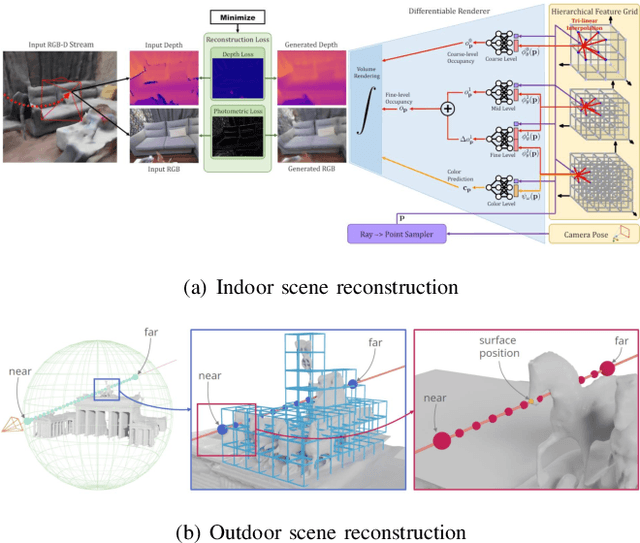

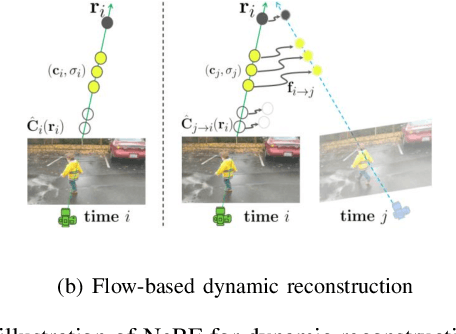

Abstract:Meticulous 3D environment representations have been a longstanding goal in the computer vision and robotics fields. The recent emergence of neural implicit representations has introduced radical innovation to this field, as implicit representations enable numerous capabilities. Among these, the Neural Radiance Field (NeRF) has sparked a trend because of its huge representational advantages, such as simplified mathematical models, compact environment storage, and continuous scene representations. Apart from computer vision, NeRF has also shown tremendous potential in the field of robotics. Thus, we create this survey to provide a comprehensive understanding of NeRF in the field of robotics. By exploring the advantages and limitations of NeRF, as well as its current applications and future potential, we hope to shed light on this promising area of research. Our survey is divided into two main sections, "The Application of NeRF in Robotics" and "The Advance of NeRF in Robotics", from the perspective of how NeRF enters the field of robotics. In the first section, we introduce and analyze some works that have been or could be used in the field of robotics from the perception and interaction perspectives. In the second section, we show some works related to improving NeRF's own properties, which are essential for deploying NeRF in the field of robotics. In the discussion section of the review, we summarize the existing challenges and provide some valuable future research directions for reference.

Quantum Federated Learning Experiments in the Cloud with Data Encoding

May 01, 2024

Abstract:Quantum Federated Learning (QFL) is an emerging concept that aims to unfold federated learning (FL) over quantum networks, enabling collaborative quantum model training along with local data privacy. We explore the challenges of deploying QFL on cloud platforms, emphasizing quantum intricacies and platform limitations. The proposed data-encoding-driven QFL, with a proof of concept (GitHub Open Source) using genomic data sets on quantum simulators, shows promising results.

Unsupervised Text Style Transfer via LLMs and Attention Masking with Multi-way Interactions

Feb 21, 2024

Abstract:Unsupervised Text Style Transfer (UTST) has emerged as a critical task within the domain of Natural Language Processing (NLP), aiming to transfer one stylistic aspect of a sentence into another style without changing its semantics, syntax, or other attributes. This task is especially challenging given the intrinsic lack of parallel text pairings. Among existing methods for UTST tasks, the attention masking approach and Large Language Models (LLMs) are regarded as two pioneering methods. However, they have the shortcomings of generating unsmooth sentences and changing the original content, respectively. In this paper, we investigate whether we can combine these two methods effectively. We propose four ways of interaction, namely: a pipeline framework with tuned orders; knowledge distillation from LLMs to the attention masking model; and in-context learning with constructed parallel examples. We empirically show that these multi-way interactions can improve on the baselines in certain aspects of style strength, content preservation, and text fluency. Experiments also demonstrate that simply conducting prompting followed by attention masking-based revision can consistently surpass the other systems, including supervised text style transfer systems. On the Yelp-clean and Amazon-clean datasets, it improves the previously best mean metric by 0.5 and 3.0 absolute percentage points, respectively, and achieves new SOTA results.

Terrain Diffusion Network: Climatic-Aware Terrain Generation with Geological Sketch Guidance

Aug 31, 2023

Abstract:Sketch-based terrain generation seeks to create realistic landscapes for virtual environments in various applications such as computer games, animation, and virtual reality. Recently, deep learning-based terrain generation has emerged, notably methods based on generative adversarial networks (GANs). However, these methods often struggle to fulfill the requirements of flexible user control and to maintain generative diversity for realistic terrain. Therefore, we propose a novel diffusion-based method, namely the terrain diffusion network (TDN), which actively incorporates user guidance for enhanced controllability, taking into account terrain features like rivers, ridges, basins, and peaks. Instead of adhering to a conventional monolithic denoising process, which often compromises the fidelity of terrain details or the alignment with user control, a multi-level denoising scheme is proposed to generate more realistic terrains by taking into account fine-grained details, particularly those related to climatic patterns influenced by erosion and tectonic activities. Specifically, three terrain synthesisers are designed for structural, intermediate, and fine-grained level denoising purposes, which allows each synthesiser to concentrate on a distinct terrain aspect. Moreover, to maximise the efficiency of our TDN, we further introduce terrain and sketch latent spaces for the synthesisers with pre-trained terrain autoencoders. Comprehensive experiments on a new dataset constructed from NASA Topology Images clearly demonstrate the effectiveness of our proposed method, achieving state-of-the-art performance. Our code and dataset will be publicly available.
