Kun Hu

Pb4U-GNet: Resolution-Adaptive Garment Simulation via Propagation-before-Update Graph Network

Jan 21, 2026

Abstract: Garment simulation is fundamental to various applications in computer vision and graphics, from virtual try-on to digital human modelling. However, conventional physics-based methods remain computationally expensive, hindering their application in time-sensitive scenarios. While graph neural networks (GNNs) offer promising acceleration, existing approaches exhibit poor cross-resolution generalisation, demonstrating significant performance degradation on higher-resolution meshes beyond the training distribution. This stems from two key factors: (1) existing GNNs employ fixed message-passing depth that fails to adapt information aggregation to mesh density variation, and (2) vertex-wise displacement magnitudes are inherently resolution-dependent in garment simulation. To address these issues, we introduce Propagation-before-Update Graph Network (Pb4U-GNet), a resolution-adaptive framework that decouples message propagation from feature updates. Pb4U-GNet incorporates two key mechanisms: (1) dynamic propagation depth control, adjusting message-passing iterations based on mesh resolution, and (2) geometry-aware update scaling, which scales predictions according to local mesh characteristics. Extensive experiments show that even trained solely on low-resolution meshes, Pb4U-GNet exhibits strong generalisability across diverse mesh resolutions, addressing a fundamental challenge in neural garment simulation.

Benchmark^2: Systematic Evaluation of LLM Benchmarks

Jan 07, 2026

Abstract: The rapid proliferation of benchmarks for evaluating large language models (LLMs) has created an urgent need for systematic methods to assess benchmark quality itself. We propose Benchmark^2, a comprehensive framework comprising three complementary metrics: (1) Cross-Benchmark Ranking Consistency, measuring whether a benchmark produces model rankings aligned with peer benchmarks; (2) Discriminability Score, quantifying a benchmark's ability to differentiate between models; and (3) Capability Alignment Deviation, identifying problematic instances where stronger models fail but weaker models succeed within the same model family. We conduct extensive experiments across 15 benchmarks spanning mathematics, reasoning, and knowledge domains, evaluating 11 LLMs across four model families. Our analysis reveals significant quality variations among existing benchmarks and demonstrates that selective benchmark construction based on our metrics can achieve comparable evaluation performance with substantially reduced test sets.
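The abstract does not give formulas for these metrics, so the following is only an illustrative sketch of two of the ideas. It assumes ranking consistency can be approximated by the mean Kendall tau between one benchmark's model scores and those of its peers, and that capability-alignment problems can be counted as items a weaker model solves while a stronger model from the same family fails. The function names `kendall_tau`, `ranking_consistency`, and `inversion_rate` are hypothetical and are not taken from the paper.

```python
from itertools import combinations


def kendall_tau(scores_a, scores_b):
    """Kendall tau-a between two {model: score} dicts over the same models."""
    models = sorted(scores_a)
    concordant = discordant = 0
    for m, n in combinations(models, 2):
        sign = (scores_a[m] - scores_a[n]) * (scores_b[m] - scores_b[n])
        if sign > 0:
            concordant += 1
        elif sign < 0:
            discordant += 1
    pairs = len(models) * (len(models) - 1) // 2
    return (concordant - discordant) / pairs


def ranking_consistency(target, peers):
    """Assumed proxy: mean tau between a target benchmark and its peers."""
    return sum(kendall_tau(target, peer) for peer in peers) / len(peers)


def inversion_rate(strong_correct, weak_correct):
    """Share of items the weaker model solves but the stronger one fails."""
    inversions = sum(
        1 for s, w in zip(strong_correct, weak_correct) if w and not s
    )
    return inversions / len(strong_correct)


if __name__ == "__main__":
    # Made-up scores for three hypothetical models on three benchmarks.
    target = {"model_a": 0.9, "model_b": 0.7, "model_c": 0.4}
    peer_1 = {"model_a": 0.8, "model_b": 0.6, "model_c": 0.5}
    peer_2 = {"model_a": 0.3, "model_b": 0.5, "model_c": 0.7}
    print(ranking_consistency(target, [peer_1, peer_2]))
    print(inversion_rate([True, False, True, False], [True, True, False, False]))
```

Under these assumptions, a benchmark that orders models the same way as its peers scores close to 1, and one that reverses the order scores close to -1.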

PUMPS: Skeleton-Agnostic Point-based Universal Motion Pre-Training for Synthesis in Human Motion Tasks

Jul 27, 2025

Abstract: Motion skeletons drive 3D character animation by transforming bone hierarchies, but differences in proportions or structure make motion data hard to transfer across skeletons, posing challenges for data-driven motion synthesis. Temporal Point Clouds (TPCs) offer an unstructured, cross-compatible motion representation. Though reversible with skeletons, TPCs mainly serve for compatibility, not for direct motion task learning. Doing so would require data synthesis capabilities for the TPC format, which presents unexplored challenges regarding its unique temporal consistency and point identifiability. Therefore, we propose PUMPS, the primordial autoencoder architecture for TPC data. PUMPS independently reduces frame-wise point clouds into sampleable feature vectors, from which a decoder extracts distinct temporal points using latent Gaussian noise vectors as sampling identifiers. We introduce linear assignment-based point pairing to optimise the TPC reconstruction process, and negate the use of expensive point-wise attention mechanisms in the architecture. Using these latent features, we pre-train a motion synthesis model capable of performing motion prediction, transition generation, and keyframe interpolation. For these pre-training tasks, PUMPS performs remarkably well even without native dataset supervision, matching state-of-the-art performance. When fine-tuned for motion denoising or estimation, PUMPS outperforms many respective methods without deviating from its generalist architecture.

F2Net: A Frequency-Fused Network for Ultra-High Resolution Remote Sensing Segmentation

Jun 09, 2025

Abstract: Semantic segmentation of ultra-high-resolution (UHR) remote sensing imagery is critical for applications like environmental monitoring and urban planning but faces computational and optimization challenges. Conventional methods either lose fine details through downsampling or fragment global context via patch processing. While multi-branch networks address this trade-off, they suffer from computational inefficiency and conflicting gradient dynamics during training. We propose F2Net, a frequency-aware framework that decomposes UHR images into high- and low-frequency components for specialized processing. The high-frequency branch preserves full-resolution structural details, while the low-frequency branch processes downsampled inputs through dual sub-branches capturing short- and long-range dependencies. A Hybrid-Frequency Fusion module integrates these observations, guided by two novel objectives: Cross-Frequency Alignment Loss ensures semantic consistency between frequency components, and Cross-Frequency Balance Loss regulates gradient magnitudes across branches to stabilize training. Evaluated on DeepGlobe and Inria Aerial benchmarks, F2Net achieves state-of-the-art performance with mIoU of 80.22 and 83.39, respectively. Our code will be publicly available.

Learning Fine-Grained Geometry for Sparse-View Splatting via Cascade Depth Loss

May 28, 2025

Abstract: Novel view synthesis is a fundamental task in 3D computer vision that aims to reconstruct realistic images from a set of posed input views. However, reconstruction quality degrades significantly under sparse-view conditions due to limited geometric cues. Existing methods, such as Neural Radiance Fields (NeRF) and the more recent 3D Gaussian Splatting (3DGS), often suffer from blurred details and structural artifacts when trained with insufficient views. Recent works have identified the quality of rendered depth as a key factor in mitigating these artifacts, as it directly affects geometric accuracy and view consistency. In this paper, we address these challenges by introducing Hierarchical Depth-Guided Splatting (HDGS), a depth supervision framework that progressively refines geometry from coarse to fine levels. Central to HDGS is a novel Cascade Pearson Correlation Loss (CPCL), which aligns rendered and estimated monocular depths across multiple spatial scales. By enforcing multi-scale depth consistency, our method substantially improves structural fidelity in sparse-view scenarios. Extensive experiments on the LLFF and DTU benchmarks demonstrate that HDGS achieves state-of-the-art performance under sparse-view settings while maintaining efficient and high-quality rendering.
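To make the idea of a multi-scale Pearson depth loss concrete, here is a minimal sketch. It is not the paper's CPCL formulation, which the abstract does not spell out: it assumes the loss is `1 - Pearson correlation` computed on non-overlapping square patches, averaged within each patch size and then across patch sizes. The names `pearson`, `patch_values`, and `cascade_pearson_loss`, and the `patch_sizes` parameter, are hypothetical.

```python
import math


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if degenerate)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    denom = math.sqrt(var_x * var_y)
    return cov / denom if denom > 0 else 0.0


def patch_values(depth, top, left, size):
    """Flatten the size x size patch whose top-left corner is (top, left)."""
    return [
        depth[r][c]
        for r in range(top, top + size)
        for c in range(left, left + size)
    ]


def cascade_pearson_loss(rendered, estimated, patch_sizes):
    """Average (1 - Pearson) over patches, then over the given patch sizes."""
    height, width = len(rendered), len(rendered[0])
    total = 0.0
    for size in patch_sizes:
        losses = []
        for top in range(0, height - size + 1, size):
            for left in range(0, width - size + 1, size):
                a = patch_values(rendered, top, left, size)
                b = patch_values(estimated, top, left, size)
                losses.append(1.0 - pearson(a, b))
        total += sum(losses) / len(losses)
    return total / len(patch_sizes)


if __name__ == "__main__":
    rendered = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
    # Monocular depth is only defined up to scale and shift, so an affine
    # copy of the rendered depth should be treated as a near-perfect match.
    estimated = [[2.0 * v + 3.0 for v in row] for row in rendered]
    print(cascade_pearson_loss(rendered, estimated, patch_sizes=[4, 2]))
```

Pearson correlation is a natural fit here because it ignores scale and offset, which matches the ambiguity of monocular depth estimates. In practice this would be written with a tensor library so that gradients flow to the rendered depth.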

Extended Short- and Long-Range Mesh Learning for Fast and Generalized Garment Simulation

Apr 16, 2025

Abstract: 3D garment simulation is a critical component for producing cloth-based graphics. Recent advancements in graph neural networks (GNNs) offer a promising approach for efficient garment simulation. However, GNNs require extensive message-passing to propagate information such as physical forces and maintain contact awareness across the entire garment mesh, which becomes computationally inefficient at higher resolutions. To address this, we devise a novel GNN-based mesh learning framework with two key components to extend the message-passing range with minimal overhead, namely the Laplacian-Smoothed Dual Message-Passing (LSDMP) and the Geodesic Self-Attention (GSA) modules. LSDMP enhances message-passing with a Laplacian features smoothing process, which efficiently propagates the impact of each vertex to nearby vertices. Concurrently, GSA introduces geodesic distance embeddings to represent the spatial relationship between vertices and utilises attention mechanisms to capture global mesh information. The two modules operate in parallel to ensure both short- and long-range mesh modelling. Extensive experiments demonstrate the state-of-the-art performance of our method, requiring fewer layers and lower inference latency.

Adaptive-LIO: Enhancing Robustness and Precision through Environmental Adaptation in LiDAR Inertial Odometry

Mar 07, 2025

Abstract: The emerging Internet of Things (IoT) applications, such as driverless cars, have a growing demand for high-precision positioning and navigation. LiDAR inertial odometry has become increasingly prevalent in robotics and autonomous driving. However, many current SLAM systems lack sufficient adaptability to various scenarios. Challenges include decreased point cloud accuracy with longer frame intervals under the constant velocity assumption, coupling of erroneous IMU information when IMU saturation occurs, and decreased localization accuracy due to the use of fixed-resolution maps during indoor-outdoor scene transitions. To address these issues, we propose a loosely coupled adaptive LiDAR-Inertial-Odometry named Adaptive-LIO, which incorporates adaptive segmentation to enhance mapping accuracy, adapts motion modality through IMU saturation and fault detection, and adjusts map resolution adaptively using multi-resolution voxel maps based on the distance from the LiDAR center. Our proposed method has been tested in various challenging scenarios, demonstrating the effectiveness of the improvements we introduce. The code is open-source on GitHub: https://github.com/chengwei0427/adaptive_lio.

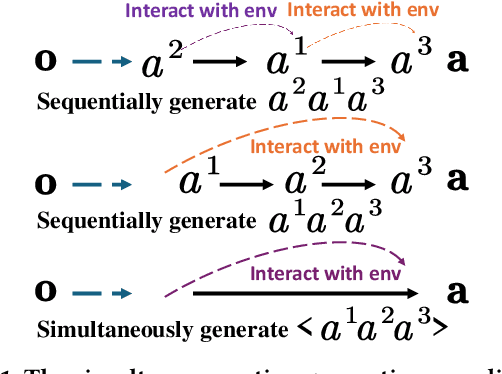

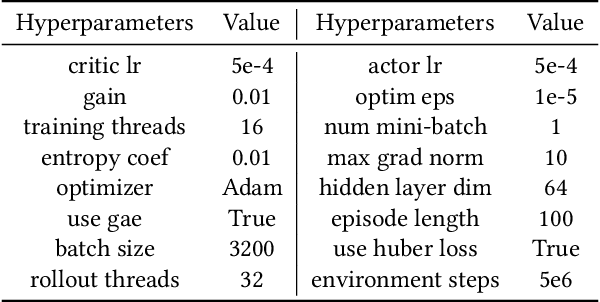

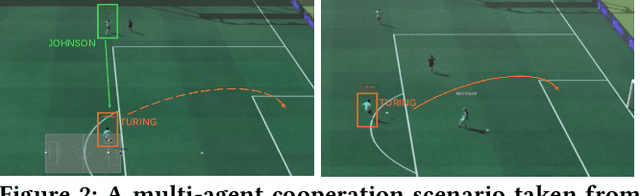

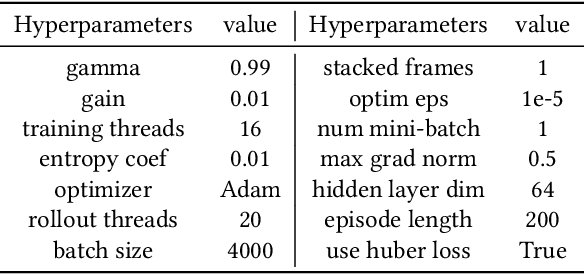

PMAT: Optimizing Action Generation Order in Multi-Agent Reinforcement Learning

Feb 23, 2025

Abstract: Multi-agent reinforcement learning (MARL) faces challenges in coordinating agents due to complex interdependencies within multi-agent systems. Most MARL algorithms use the simultaneous decision-making paradigm but ignore the action-level dependencies among agents, which reduces coordination efficiency. In contrast, the sequential decision-making paradigm provides finer-grained supervision for agent decision order, presenting the potential for handling dependencies via better decision order management. However, determining the optimal decision order remains a challenge. In this paper, we introduce Action Generation with Plackett-Luce Sampling (AGPS), a novel mechanism for agent decision order optimization. We model the order determination task as a Plackett-Luce sampling process to address issues such as ranking instability and vanishing gradient during the network training process. AGPS realizes credit-based decision order determination by establishing a bridge between the significance of agents' local observations and their decision credits, thus facilitating order optimization and dependency management. Integrating AGPS with the Multi-Agent Transformer, we propose the Prioritized Multi-Agent Transformer (PMAT), a sequential decision-making MARL algorithm with decision order optimization. Experiments on benchmarks including StarCraft II Multi-Agent Challenge, Google Research Football, and Multi-Agent MuJoCo show that PMAT outperforms state-of-the-art algorithms, greatly enhancing coordination efficiency.
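For readers unfamiliar with the Plackett-Luce model, the sketch below shows the generic sampling process and its log-probability. It is not the AGPS implementation: it assumes each agent already has a scalar score (a stand-in for the "decision credit" mentioned in the abstract), and the names `sample_order` and `order_log_prob` are hypothetical.

```python
import math
import random


def sample_order(scores, rng):
    """Sample a permutation of agent indices from a Plackett-Luce model.

    At each step the next agent is drawn from those remaining, with
    probability proportional to exp(score).
    """
    remaining = list(range(len(scores)))
    order = []
    while remaining:
        weights = [math.exp(scores[i]) for i in remaining]
        pick = rng.choices(remaining, weights=weights, k=1)[0]
        order.append(pick)
        remaining.remove(pick)
    return order


def order_log_prob(scores, order):
    """Log-probability of a full ordering under the Plackett-Luce model."""
    remaining = list(order)
    log_prob = 0.0
    for idx in order:
        # Numerically stable log-sum-exp over the agents still unplaced.
        top = max(scores[i] for i in remaining)
        lse = top + math.log(sum(math.exp(scores[i] - top) for i in remaining))
        log_prob += scores[idx] - lse
        remaining.remove(idx)
    return log_prob


if __name__ == "__main__":
    rng = random.Random(0)
    credits = [2.0, 0.5, -1.0]  # made-up per-agent scores
    order = sample_order(credits, rng)
    print(order, order_log_prob(credits, order))
```

Because the log-probability of a sampled order is a differentiable function of the scores, it can serve as the quantity a policy-gradient style update acts on, which is one common reason to prefer sampling over a hard sort.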

DC-PCN: Point Cloud Completion Network with Dual-Codebook Guided Quantization

Jan 19, 2025

Abstract: Point cloud completion aims to reconstruct complete 3D shapes from partial 3D point clouds. With advancements in deep learning techniques, various methods for point cloud completion have been developed. Despite achieving encouraging results, a significant issue remains: these methods often overlook the variability in point clouds sampled from a single 3D object surface. This variability can lead to ambiguity and hinder the achievement of more precise completion results. Therefore, in this study, we introduce a novel point cloud completion network, namely Dual-Codebook Point Completion Network (DC-PCN), following an encoder-decoder pipeline. The primary objective of DC-PCN is to formulate a singular representation of sampled point clouds originating from the same 3D surface. DC-PCN introduces a dual-codebook design to quantize point-cloud representations from a multilevel perspective. It consists of an encoder-codebook and a decoder-codebook, designed to capture distinct point cloud patterns at shallow and deep levels. Additionally, to enhance the information flow between these two codebooks, we devise an information exchange mechanism. This approach ensures that crucial features and patterns from both shallow and deep levels are effectively utilized for completion. Extensive experiments on the PCN, ShapeNet_Part, and ShapeNet34 datasets demonstrate the state-of-the-art performance of our method.

B-VLLM: A Vision Large Language Model with Balanced Spatio-Temporal Tokens

Dec 13, 2024

Abstract: Recently, Vision Large Language Models (VLLMs) integrated with vision encoders have shown promising performance in vision understanding. The key to VLLMs is encoding visual content into sequences of visual tokens, enabling VLLMs to simultaneously process both visual and textual content. However, understanding videos, especially long videos, remains a challenge for VLLMs, as the number of visual tokens grows rapidly when encoding videos, risking exceeding the context window of VLLMs and introducing a heavy computational burden. To restrict the number of visual tokens, existing VLLMs either (1) uniformly downsample videos into a fixed number of frames or (2) reduce the number of visual tokens encoded from each frame. We argue that the former solution neglects the rich temporal cues in videos and the latter overlooks the spatial details in each frame. In this work, we present Balanced-VLLM (B-VLLM): a novel VLLM framework that aims to effectively leverage task-relevant spatio-temporal cues while restricting the number of visual tokens under the VLLM context window length. At the core of our method, we devise a text-conditioned adaptive frame selection module to identify frames relevant to the visual understanding task. The selected frames are then de-duplicated using a temporal frame token merging technique. The visual tokens of the selected frames are processed through a spatial token sampling module and an optional spatial token merging strategy to achieve precise control over the token count. Experimental results show that B-VLLM is effective in balancing the number of frames and visual tokens in video understanding, yielding superior performance on various video understanding benchmarks. Our code is available at https://github.com/zhuqiangLu/B-VLLM.
