Panpan Cai

ForceVLA: Enhancing VLA Models with a Force-aware MoE for Contact-rich Manipulation

May 28, 2025

Abstract:Vision-Language-Action (VLA) models have advanced general-purpose robotic manipulation by leveraging pretrained visual and linguistic representations. However, they struggle with contact-rich tasks that require fine-grained control involving force, especially under visual occlusion or dynamic uncertainty. To address these limitations, we propose ForceVLA, a novel end-to-end manipulation framework that treats external force sensing as a first-class modality within VLA systems. ForceVLA introduces FVLMoE, a force-aware Mixture-of-Experts fusion module that dynamically integrates pretrained visual-language embeddings with real-time 6-axis force feedback during action decoding. This enables context-aware routing across modality-specific experts, enhancing the robot's ability to adapt to subtle contact dynamics. We also introduce ForceVLA-Data, a new dataset comprising synchronized vision, proprioception, and force-torque signals across five contact-rich manipulation tasks. ForceVLA improves average task success by 23.2% over strong $\pi_0$-based baselines, achieving up to 80% success in tasks such as plug insertion. Our approach highlights the importance of multimodal integration for dexterous manipulation and sets a new benchmark for physically intelligent robotic control. Code and data will be released at https://sites.google.com/view/forcevla2025.
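
The routing idea in this abstract can be illustrated with a generic top-k Mixture-of-Experts gate. The sketch below is not the FVLMoE module; it only assumes a linear router, a softmax gate, and toy experts, and every name in it (`moe_fuse`, `router_weights`, the example token) is hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_fuse(token, router_weights, experts, top_k=2):
    """Route one token through its top_k experts and mix their outputs.

    token          : list of floats (assumed here to be a fused
                     vision-language and force feature vector)
    router_weights : one weight row per expert (hypothetical linear router)
    experts        : callables mapping a token to a same-length list
    """
    # One router logit per expert: dot product of its weight row and the token.
    logits = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    gates = softmax(logits)

    # Keep only the top_k experts and renormalize their gate values.
    chosen = sorted(range(len(gates)), key=lambda i: gates[i], reverse=True)[:top_k]
    norm = sum(gates[i] for i in chosen)

    output = [0.0] * len(token)
    for i in chosen:
        expert_out = experts[i](token)
        for d, value in enumerate(expert_out):
            output[d] += (gates[i] / norm) * value
    return output, chosen


if __name__ == "__main__":
    # Toy experts standing in for modality-specific networks.
    experts = [
        lambda t: [2.0 * v for v in t],
        lambda t: [v + 1.0 for v in t],
        lambda t: [0.0 for _ in t],
    ]
    router = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
    print(moe_fuse([3.0, 0.0], router, experts, top_k=2))
```

Renormalizing over the selected experts keeps the mixture weights summing to one, so the fused output stays on the same scale whichever experts the router picks.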

World Modeling Makes a Better Planner: Dual Preference Optimization for Embodied Task Planning

Mar 13, 2025

Abstract:Recent advances in large vision-language models (LVLMs) have shown promise for embodied task planning, yet they struggle with fundamental challenges like dependency constraints and efficiency. Existing approaches either solely optimize action selection or leverage world models during inference, overlooking the benefits of learning to model the world as a way to enhance planning capabilities. We propose Dual Preference Optimization (D$^2$PO), a new learning framework that jointly optimizes state prediction and action selection through preference learning, enabling LVLMs to understand environment dynamics for better planning. To automatically collect trajectories and stepwise preference data without human annotation, we introduce a tree search mechanism for extensive exploration via trial-and-error. Extensive experiments on VoTa-Bench demonstrate that our D$^2$PO-based method significantly outperforms existing methods and GPT-4o when applied to Qwen2-VL (7B), LLaVA-1.6 (7B), and LLaMA-3.2 (11B), achieving superior task success rates with more efficient execution paths.
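
As a rough illustration of what jointly optimizing two preference objectives could look like, the sketch below applies the standard Direct Preference Optimization (DPO) loss to one action-selection pair and one state-prediction pair. The abstract does not give the exact objective, so the equal-weight sum in `dual_preference_loss`, the `beta` value, and all function names are assumptions.

```python
import math


def dpo_loss(logp_chosen, logp_rejected, ref_logp_chosen, ref_logp_rejected, beta=0.1):
    """Standard DPO loss for one preference pair.

    Inputs are sequence log-probabilities under the policy being trained
    (logp_*) and under a frozen reference model (ref_logp_*).
    """
    margin = beta * (
        (logp_chosen - ref_logp_chosen) - (logp_rejected - ref_logp_rejected)
    )
    # -log(sigmoid(margin)), written to avoid overflow for large |margin|.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


def dual_preference_loss(action_pair, state_pair, beta=0.1):
    """Assumed combination: an unweighted sum of the two preference losses."""
    return dpo_loss(*action_pair, beta=beta) + dpo_loss(*state_pair, beta=beta)


if __name__ == "__main__":
    # Each tuple: (logp_chosen, logp_rejected, ref_logp_chosen, ref_logp_rejected)
    action_pair = (-1.0, -5.0, -3.0, -3.0)
    state_pair = (-2.0, -4.0, -3.0, -3.0)
    print(dual_preference_loss(action_pair, state_pair))
```

The loss shrinks as the policy assigns relatively more probability to the preferred action or predicted state than the reference model does.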

BoT-Drive: Hierarchical Behavior and Trajectory Planning for Autonomous Driving using POMDPs

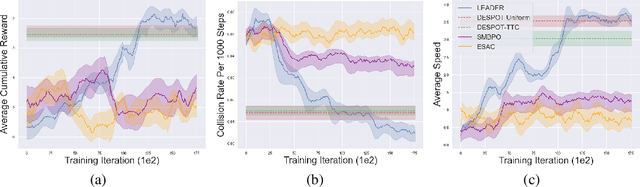

Sep 27, 2024

Abstract:Uncertainties in dynamic road environments pose significant challenges for behavior and trajectory planning in autonomous driving. This paper introduces BoT-Drive, a planning algorithm that addresses uncertainties at both behavior and trajectory levels within a Partially Observable Markov Decision Process (POMDP) framework. BoT-Drive employs driver models to characterize unknown behavioral intentions and utilizes their model parameters to infer hidden driving styles. By also treating driver models as decision-making actions for the autonomous vehicle, BoT-Drive effectively tackles the exponential complexity inherent in POMDPs. To enhance safety and robustness, the planner further applies importance sampling to refine the driving trajectory conditioned on the planned high-level behavior. Evaluation on real-world data shows that BoT-Drive consistently outperforms both existing planning methods and learning-based methods in regular and complex urban driving scenes, demonstrating significant improvements in driving safety and reliability.

RI-MAE: Rotation-Invariant Masked AutoEncoders for Self-Supervised Point Cloud Representation Learning

Aug 31, 2024

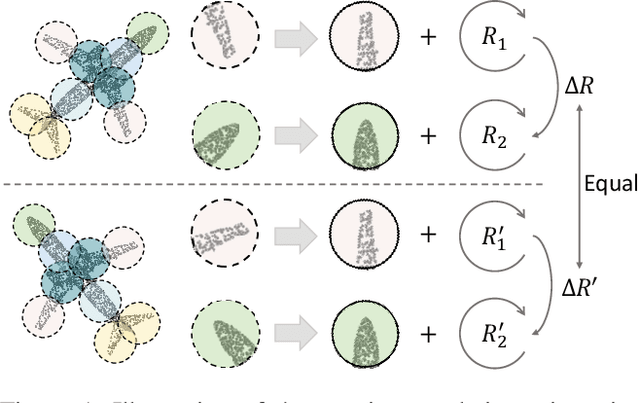

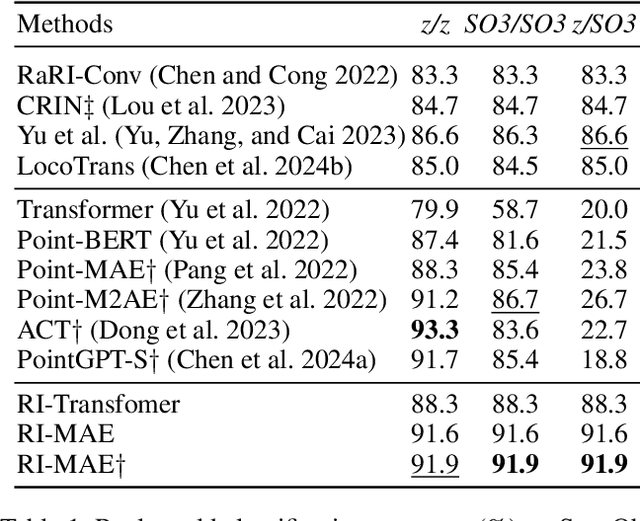

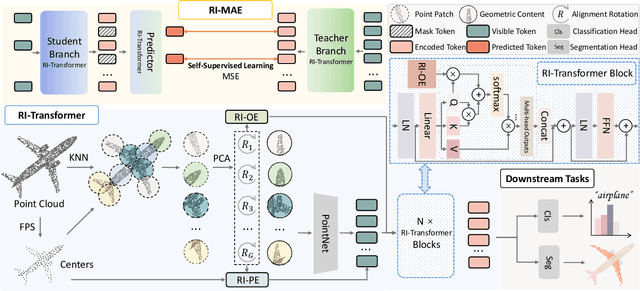

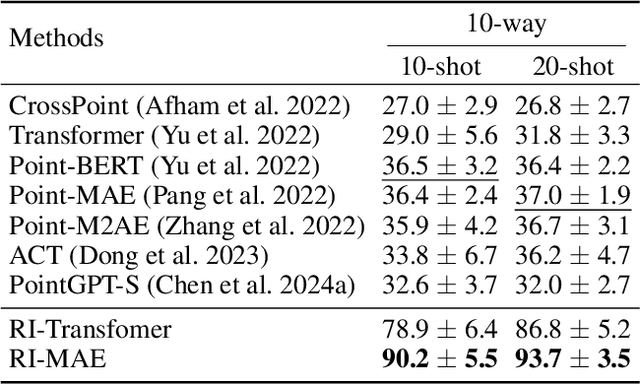

Abstract:Masked point modeling methods have recently achieved great success in self-supervised learning for point cloud data. However, these methods are sensitive to rotations and often exhibit sharp performance drops when encountering rotational variations. In this paper, we propose novel Rotation-Invariant Masked AutoEncoders (RI-MAE) to address two major challenges: 1) achieving rotation-invariant latent representations, and 2) facilitating self-supervised reconstruction in a rotation-invariant manner. For the first challenge, we introduce RI-Transformer, which features disentangled geometry content and rotation-invariant relative orientation and position embedding mechanisms for constructing a rotation-invariant point cloud latent space. For the second challenge, a novel dual-branch student-teacher architecture is devised. It enables self-supervised learning via the reconstruction of masked patches within the learned rotation-invariant latent space. Each branch is based on an RI-Transformer, and the branches are connected by an additional RI-Transformer predictor. The teacher encodes all point patches, while the student encodes only the unmasked ones. Finally, the predictor predicts the latent features of the masked patches from the student's output latent embeddings, supervised by the teacher's outputs. Extensive experiments demonstrate that our method is robust to rotations, achieving state-of-the-art performance on various downstream tasks.

RoboSense: Large-scale Dataset and Benchmark for Multi-sensor Low-speed Autonomous Driving

Aug 28, 2024

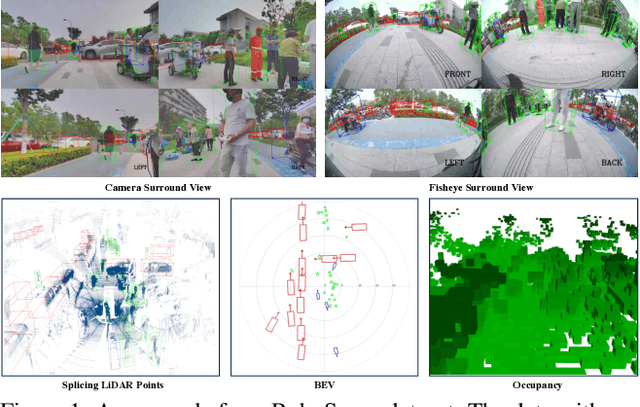

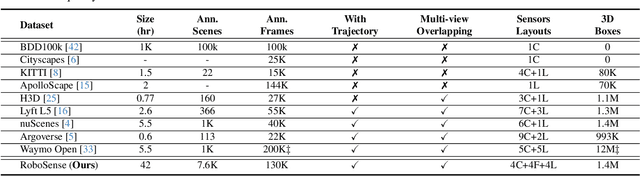

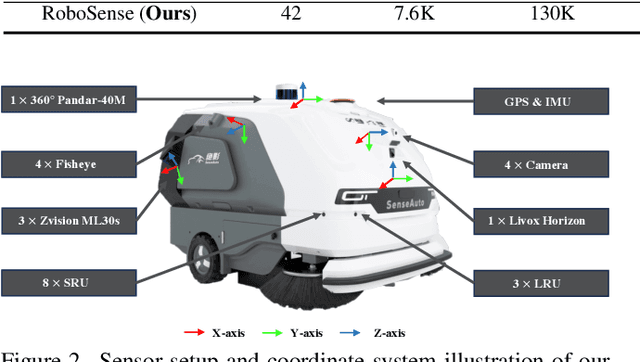

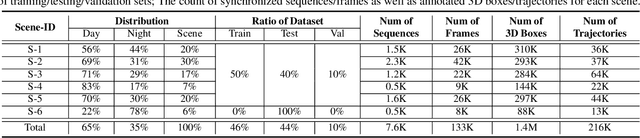

Abstract:Robust object detection and tracking under arbitrary fields of view is challenging yet essential for the development of autonomous vehicle technology. With the growing demand for unmanned function vehicles, near-field scene understanding has become an important research topic in low-speed autonomous driving. Due to the complexity of driving conditions and diversity of near obstacles such as blind spots and high occlusion, the perception capability for the near-field environment is still inferior to that of its far-field counterpart. To further enhance the intelligence of unmanned vehicles, in this paper we construct a multimodal data collection platform based on 3 main types of sensors (Camera, LiDAR and Fisheye), which supports flexible sensor configurations to enable a dynamic field of view for the ego vehicle, either global or local. Meanwhile, a large-scale multi-sensor dataset named RoboSense is built to facilitate near-field scene understanding. RoboSense contains more than 133K synchronized data with 1.4M 3D bounding boxes and IDs annotated in the full $360^{\circ}$ view, forming 216K trajectories across 7.6K temporal sequences. It has $270\times$ and $18\times$ as many annotations of near-field obstacles within 5 m as the previous single-vehicle datasets KITTI and nuScenes, respectively. Moreover, we define a novel matching criterion for near-field 3D perception and prediction metrics. Based on RoboSense, we formulate 6 popular tasks to facilitate the future development of related research, and provide detailed data analysis and benchmarks accordingly.

Rethinking State Disentanglement in Causal Reinforcement Learning

Aug 24, 2024

Abstract:One of the significant challenges in reinforcement learning (RL) when dealing with noise is estimating latent states from observations. Causality provides rigorous theoretical support for ensuring that the underlying states can be uniquely recovered through identifiability. Consequently, some existing work focuses on establishing identifiability from a causal perspective to aid in the design of algorithms. However, these results are often derived from a purely causal viewpoint, which may overlook the specific RL context. We revisit this research line and find that incorporating RL-specific context can reduce unnecessary assumptions in previous identifiability analyses for latent states. More importantly, removing these assumptions allows algorithm design to go beyond the earlier boundaries constrained by them. Leveraging these insights, we propose a novel approach for general partially observable Markov Decision Processes (POMDPs) by replacing the complicated structural constraints in previous methods with two simple constraints for transition and reward preservation. With the two constraints, the proposed algorithm is guaranteed to disentangle state and noise that is faithful to the underlying dynamics. Empirical evidence from extensive benchmark control tasks demonstrates the superiority of our approach over existing counterparts in effectively disentangling state belief from noise.

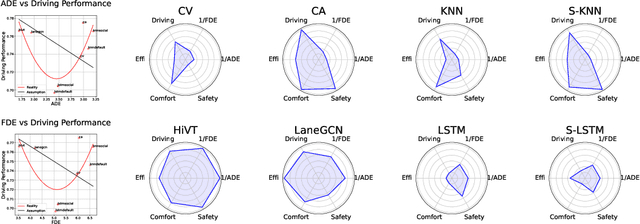

What Truly Matters in Trajectory Prediction for Autonomous Driving?

Jun 27, 2023

Abstract:In the autonomous driving system, trajectory prediction plays a vital role in ensuring safety and facilitating smooth navigation. However, we observe a substantial discrepancy between the accuracy of predictors on fixed datasets and their driving performance when used in downstream tasks. This discrepancy arises from two overlooked factors in the current evaluation protocols of trajectory prediction: 1) the dynamics gap between the dataset and real driving scenario; and 2) the computational efficiency of predictors. In real-world scenarios, prediction algorithms influence the behavior of autonomous vehicles, which, in turn, alter the behaviors of other agents on the road. This interaction results in predictor-specific dynamics that directly impact prediction results. As other agents' responses are predetermined on datasets, a significant dynamics gap arises between evaluations conducted on fixed datasets and actual driving scenarios. Furthermore, focusing solely on accuracy fails to address the demand for computational efficiency, which is critical for the real-time response required by the autonomous driving system. Therefore, in this paper, we demonstrate that an interactive, task-driven evaluation approach for trajectory prediction is crucial to reflect its efficacy for autonomous driving.

The Planner Optimization Problem: Formulations and Frameworks

Mar 14, 2023

Abstract:Identifying internal parameters for planning is crucial to maximizing the performance of a planner. However, automatically tuning internal parameters that are conditioned on the problem instance is especially challenging. A recent line of work focuses on learning planning parameter generators, but it lacks a consistent problem definition and software framework. This work proposes the unified planner optimization problem (POP) formulation, along with the Open Planner Optimization Framework (OPOF), a highly extensible software framework to specify and to solve these problems in a reusable manner.

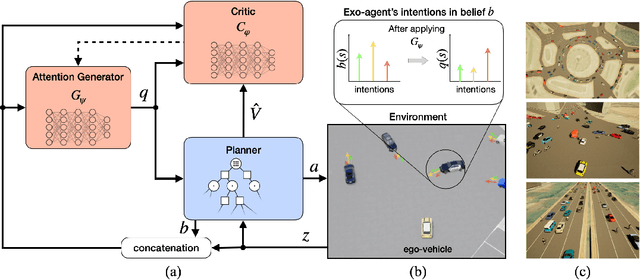

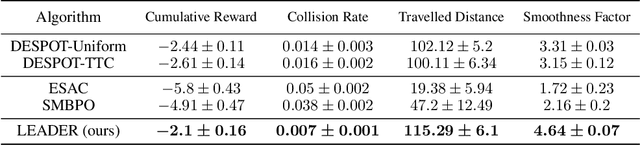

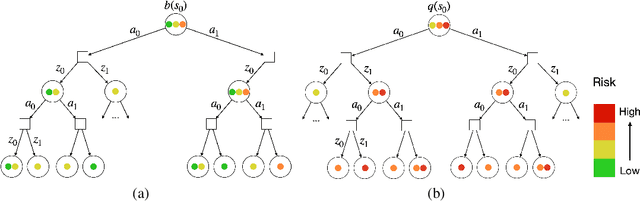

LEADER: Learning Attention over Driving Behaviors for Planning under Uncertainty

Sep 23, 2022

Abstract:Uncertainty in human behaviors poses a significant challenge to autonomous driving in crowded urban environments. Partially observable Markov decision processes (POMDPs) offer a principled framework for planning under uncertainty, often leveraging Monte Carlo sampling to achieve online performance for complex tasks. However, sampling also raises safety concerns by potentially missing critical events. To address this, we propose a new algorithm, LEarning Attention over Driving bEhavioRs (LEADER), that learns to attend to critical human behaviors during planning. LEADER learns a neural network generator to provide attention over human behaviors in real-time situations. It integrates the attention into a belief-space planner, using importance sampling to bias reasoning towards critical events. To train the algorithm, we let the attention generator and the planner form a min-max game. By solving the min-max game, LEADER learns to perform risk-aware planning without human labeling.
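
The role of importance sampling here can be shown with a small, self-contained estimator. This is a generic sketch, not LEADER's planner: it assumes a single binary "critical behavior" event, a fixed proposal probability `q_critical` standing in for learned attention, and a made-up collision cost.

```python
import random


def estimate_risk(p_critical, collision_cost, q_critical, n_samples, rng):
    """Estimate the expected cost under the true behavior model p while
    sampling behaviors from a proposal q that over-samples critical events.

    Requires 0 < q_critical < 1 so both importance weights are defined.
    """
    total = 0.0
    for _ in range(n_samples):
        critical = rng.random() < q_critical  # sample from the proposal q
        if critical:
            weight = p_critical / q_critical  # importance weight p/q
            cost = collision_cost
        else:
            weight = (1.0 - p_critical) / (1.0 - q_critical)
            cost = 0.0
        total += weight * cost
    return total / n_samples


if __name__ == "__main__":
    rng = random.Random(0)
    # True expected cost is p_critical * collision_cost = 1.0.
    naive = estimate_risk(0.01, 100.0, 0.01, 200, rng)   # q == p: plain Monte Carlo
    biased = estimate_risk(0.01, 100.0, 0.5, 200, rng)   # q favors critical events
    print(naive, biased)
```

With few samples, plain Monte Carlo often draws no critical event at all and reports zero risk, while the biased proposal sees such events in about half the samples and corrects for the bias through the weights.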

Closing the Planning-Learning Loop with Application to Autonomous Driving in a Crowd

Jan 11, 2021

Abstract:Imagine an autonomous robot vehicle driving in dense, possibly unregulated urban traffic. To contend with an uncertain, interactive environment with many traffic participants, the robot vehicle has to perform long-term planning in order to drive effectively and approach human-level performance. Planning explicitly over a long time horizon, however, incurs prohibitive computational cost and is impractical under real-time constraints. To achieve real-time performance for large-scale planning, this paper introduces Learning from Tree Search for Driving (LeTS-Drive), which integrates planning and learning in a closed loop. LeTS-Drive learns a driving policy from a planner based on sparsely-sampled tree search. It then guides online planning using this learned policy for real-time vehicle control. These two steps are repeated to form a closed loop so that the planner and the learner inform each other and both improve in synchrony. The entire algorithm evolves on its own in a self-supervised manner, without explicit human effort on data labeling. We applied LeTS-Drive to autonomous driving in crowded urban environments in simulation. Experimental results clearly show that LeTS-Drive outperforms either planning or learning alone, as well as open-loop integration of planning and learning.
