Minglong Li

DR$^2$Seg: Decomposed Two-Stage Rollouts for Efficient Reasoning Segmentation in Multimodal Large Language Models

Jan 15, 2026

Abstract:Reasoning segmentation is an emerging vision-language task that requires reasoning over intricate text queries to precisely segment objects. However, existing methods typically suffer from overthinking, generating verbose reasoning chains that interfere with object localization in multimodal large language models (MLLMs). To address this issue, we propose DR$^2$Seg, a self-rewarding framework that improves both reasoning efficiency and segmentation accuracy without requiring extra thinking supervision. DR$^2$Seg employs a two-stage rollout strategy that decomposes reasoning segmentation into multimodal reasoning and referring segmentation. In the first stage, the model generates a self-contained description that explicitly specifies the target object. In the second stage, this description replaces the original complex query to verify its self-containment. Based on this design, two self-rewards are introduced to strengthen goal-oriented reasoning and suppress redundant thinking. Extensive experiments across MLLMs of varying scales and segmentation models demonstrate that DR$^2$Seg consistently improves reasoning efficiency and overall segmentation performance.

BeyondFacial: Identity-Preserving Personalized Generation Beyond Facial Close-ups

Nov 15, 2025

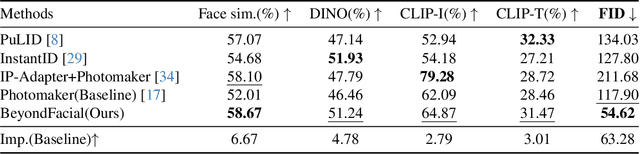

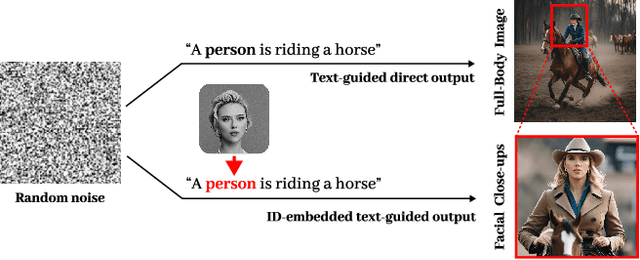

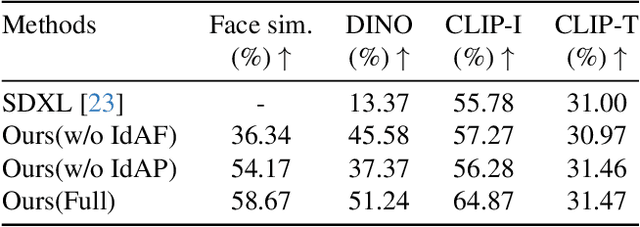

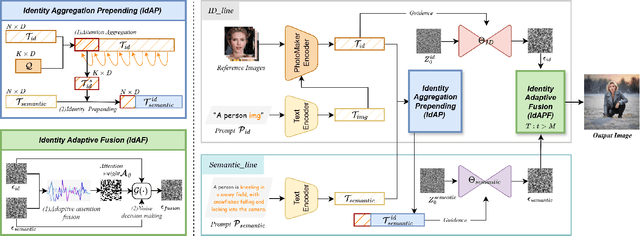

Abstract:Identity-Preserving Personalized Generation (IPPG) has advanced film production and artistic creation, yet existing approaches overemphasize facial regions, resulting in outputs dominated by facial close-ups. These methods suffer from weak visual narrativity and poor semantic consistency under complex text prompts, with the core limitation rooted in identity (ID) feature embeddings undermining the semantic expressiveness of generative models. To address these issues, this paper presents an IPPG method that breaks the constraint of facial close-ups, achieving synergistic optimization of identity fidelity and scene semantic creation. Specifically, we design a Dual-Line Inference (DLI) pipeline with identity-semantic separation, resolving the representation conflict between ID and semantics inherent in traditional single-path architectures. Further, we propose an Identity Adaptive Fusion (IdAF) strategy that defers ID-semantic fusion to the noise prediction stage, integrating adaptive attention fusion and noise decision masking to avoid ID embedding interference on semantics without manual masking. Finally, an Identity Aggregation Prepending (IdAP) module is introduced to aggregate ID information and replace random initializations, further enhancing identity preservation. Experimental results validate that our method achieves stable and effective performance in IPPG tasks beyond facial close-ups, enabling efficient generation without manual masking or fine-tuning. As a plug-and-play component, it can be rapidly deployed in existing IPPG frameworks, addressing the over-reliance on facial close-ups, facilitating film-level character-scene creation, and providing richer personalized generation capabilities for related domains.

MRBTP: Efficient Multi-Robot Behavior Tree Planning and Collaboration

Feb 25, 2025

Abstract:Multi-robot task planning and collaboration are critical challenges in robotics. While Behavior Trees (BTs) have been established as a popular control architecture and are plannable for a single robot, the development of effective multi-robot BT planning algorithms remains challenging due to the complexity of coordinating diverse action spaces. We propose the Multi-Robot Behavior Tree Planning (MRBTP) algorithm, with theoretical guarantees of both soundness and completeness. MRBTP features cross-tree expansion to coordinate heterogeneous actions across different BTs to achieve the team's goal. For homogeneous actions, we retain backup structures among BTs to ensure robustness and prevent redundant execution through intention sharing. While MRBTP is capable of generating BTs for both homogeneous and heterogeneous robot teams, its efficiency can be further improved. We then propose an optional plugin for MRBTP when Large Language Models (LLMs) are available to reason goal-related actions for each robot. These relevant actions can be pre-planned to form long-horizon subtrees, significantly enhancing the planning speed and collaboration efficiency of MRBTP. We evaluate our algorithm in warehouse management and everyday service scenarios. Results demonstrate MRBTP's robustness and execution efficiency under varying settings, as well as the ability of the pre-trained LLM to generate effective task-specific subtrees for MRBTP.

PMAT: Optimizing Action Generation Order in Multi-Agent Reinforcement Learning

Feb 23, 2025

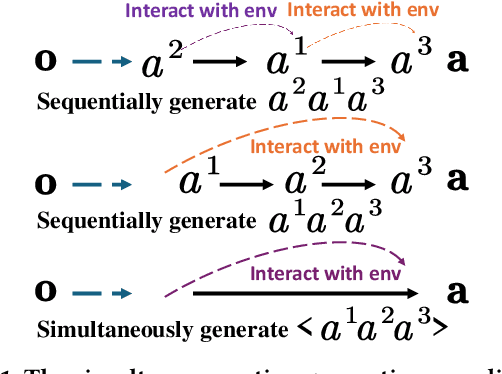

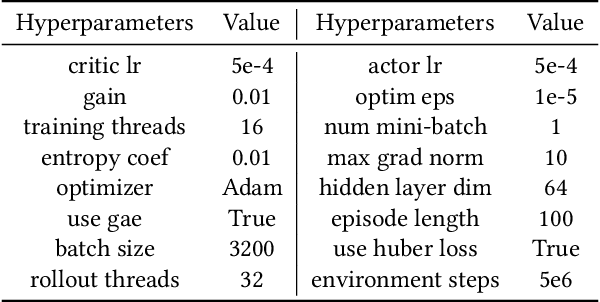

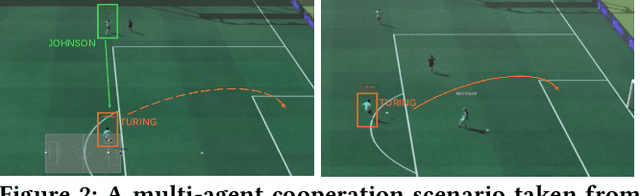

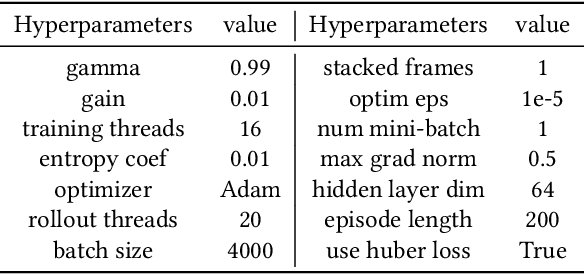

Abstract:Multi-agent reinforcement learning (MARL) faces challenges in coordinating agents due to complex interdependencies within multi-agent systems. Most MARL algorithms use the simultaneous decision-making paradigm but ignore the action-level dependencies among agents, which reduces coordination efficiency. In contrast, the sequential decision-making paradigm provides finer-grained supervision for agent decision order, presenting the potential for handling dependencies via better decision order management. However, determining the optimal decision order remains a challenge. In this paper, we introduce Action Generation with Plackett-Luce Sampling (AGPS), a novel mechanism for agent decision order optimization. We model the order determination task as a Plackett-Luce sampling process to address issues such as ranking instability and vanishing gradient during the network training process. AGPS realizes credit-based decision order determination by establishing a bridge between the significance of agents' local observations and their decision credits, thus facilitating order optimization and dependency management. Integrating AGPS with the Multi-Agent Transformer, we propose the Prioritized Multi-Agent Transformer (PMAT), a sequential decision-making MARL algorithm with decision order optimization. Experiments on benchmarks including StarCraft II Multi-Agent Challenge, Google Research Football, and Multi-Agent MuJoCo show that PMAT outperforms state-of-the-art algorithms, greatly enhancing coordination efficiency.
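
For readers unfamiliar with the Plackett-Luce model mentioned above, the following is a minimal sketch of how an ordering can be sampled from per-agent scores. It is a generic illustration of Plackett-Luce sampling, not the AGPS mechanism from the paper; the function name `sample_plackett_luce` and the notion of a scalar "score" per agent are assumptions made for this example.

```python
import math
import random


def sample_plackett_luce(scores, rng):
    """Sample an ordering of indices from a Plackett-Luce model.

    `scores` are hypothetical per-agent log-weights (illustrative only).
    At each step, one of the remaining indices is drawn with probability
    proportional to exp(score), then removed from the pool.
    Returns (order, log_prob), where log_prob is the log-probability of
    the sampled ordering under the model.
    """
    remaining = list(range(len(scores)))
    order = []
    log_prob = 0.0
    while remaining:
        # Subtract the max score for numerical stability before exponentiating.
        top = max(scores[i] for i in remaining)
        weights = [math.exp(scores[i] - top) for i in remaining]
        total = sum(weights)
        pick = rng.choices(range(len(remaining)), weights=weights, k=1)[0]
        log_prob += math.log(weights[pick] / total)
        order.append(remaining.pop(pick))
    return order, log_prob


if __name__ == "__main__":
    rng = random.Random(0)
    order, log_prob = sample_plackett_luce([2.0, 0.5, 1.0], rng)
    print(order, log_prob)
```

Because the log-probability of the sampled ordering is a sum of log-softmax terms, it is differentiable with respect to the scores when written in an autodiff framework, which is one common reason this model is used for learning rankings.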

FutureNet-LOF: Joint Trajectory Prediction and Lane Occupancy Field Prediction with Future Context Encoding

Jun 20, 2024

Abstract:Most prior motion prediction endeavors in autonomous driving have inadequately encoded future scenarios, leading to predictions that may fail to accurately capture the diverse movements of agents (e.g., vehicles or pedestrians). To address this, we propose FutureNet, which explicitly integrates initially predicted trajectories into the future scenario and further encodes these future contexts to enhance subsequent forecasting. Additionally, most previous motion forecasting works have focused on predicting independent futures for each agent. However, safe and smooth autonomous driving requires accurately predicting the diverse future behaviors of numerous surrounding agents jointly in complex dynamic environments. Given that all agents occupy certain potential travel spaces and possess lane driving priority, we propose Lane Occupancy Field (LOF), a new representation with lane semantics for motion forecasting in autonomous driving. LOF can simultaneously capture the joint probability distribution of all road participants' future spatial-temporal positions. Due to the high compatibility between lane occupancy field prediction and trajectory prediction, we propose a novel network with future context encoding for the joint prediction of these two tasks. Our approach ranks 1st on two large-scale motion forecasting benchmarks: Argoverse 1 and Argoverse 2.

Efficient Behavior Tree Planning with Commonsense Pruning and Heuristic

Jun 04, 2024

Abstract:Behavior Tree (BT) planning is crucial for autonomous robot behavior control, yet its application in complex scenarios is hampered by long planning times. Pruning and heuristics are common techniques to accelerate planning, but it is difficult to design general pruning strategies and heuristic functions for BT planning problems. This paper proposes improving BT planning efficiency for everyday service robots leveraging commonsense reasoning provided by Large Language Models (LLMs), leading to model-free pre-planning action space pruning and heuristic generation. This approach takes advantage of the modularity and interpretability of BT nodes, represented by predicate logic, to enable LLMs to predict the task-relevant action predicates and objects, and even the optimal path, without an explicit action model. We propose the Heuristic Optimal Behavior Tree Expansion Algorithm (HOBTEA) with two heuristic variants and provide a formal comparison and discussion of their efficiency and optimality. We introduce a learnable and transferable commonsense library to enhance the LLM's reasoning performance without fine-tuning. The action space expansion based on the commonsense library can further increase the success rate of planning. Experiments show the theoretical bounds of commonsense pruning and heuristic, and demonstrate the actual performance of LLM learning and reasoning with the commonsense library. Results in four datasets showcase the practical effectiveness of our approach in everyday service robot applications.

Integrating Intent Understanding and Optimal Behavior Planning for Behavior Tree Generation from Human Instructions

May 13, 2024

Abstract:Robots executing tasks following human instructions in domestic or industrial environments essentially require both adaptability and reliability. Behavior Tree (BT) emerges as an appropriate control architecture for these scenarios due to its modularity and reactivity. Existing BT generation methods, however, either do not involve interpreting natural language or cannot theoretically guarantee the BTs' success. This paper proposes a two-stage framework for BT generation, which first employs large language models (LLMs) to interpret goals from high-level instructions, then constructs an efficient goal-specific BT through the Optimal Behavior Tree Expansion Algorithm (OBTEA). We represent goals as well-formed formulas in first-order logic, effectively bridging intent understanding and optimal behavior planning. Experiments in the service robot validate the proficiency of LLMs in producing grammatically correct and accurately interpreted goals, demonstrate OBTEA's superiority over the baseline BT Expansion algorithm in various metrics, and finally confirm the practical deployability of our framework. The project website is https://dids-ei.github.io/Project/LLM-OBTEA/.
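
The modularity and reactivity attributed to BTs above can be illustrated with a minimal sketch of standard Sequence and Fallback tick semantics. This is not OBTEA or any code from the paper; the class names, the `build_door_tree` helper, and the "open the door" task with its `at_door` and `door_open` facts are all hypothetical and chosen only for illustration.

```python
from enum import Enum


class Status(Enum):
    SUCCESS = 1
    FAILURE = 2
    RUNNING = 3


class Condition:
    """Leaf that checks a predicate on the world state."""
    def __init__(self, predicate):
        self.predicate = predicate

    def tick(self, state):
        return Status.SUCCESS if self.predicate(state) else Status.FAILURE


class Action:
    """Leaf that applies an effect and reports RUNNING for this tick."""
    def __init__(self, effect):
        self.effect = effect

    def tick(self, state):
        self.effect(state)
        return Status.RUNNING


class Sequence:
    """Ticks children in order; stops at the first non-SUCCESS child."""
    def __init__(self, *children):
        self.children = children

    def tick(self, state):
        for child in self.children:
            status = child.tick(state)
            if status != Status.SUCCESS:
                return status
        return Status.SUCCESS


class Fallback:
    """Ticks children in order; stops at the first non-FAILURE child."""
    def __init__(self, *children):
        self.children = children

    def tick(self, state):
        for child in self.children:
            status = child.tick(state)
            if status != Status.FAILURE:
                return status
        return Status.FAILURE


def build_door_tree():
    # Hypothetical goal: "door_open". Each goal condition is guarded by a
    # Fallback so the action runs only while the condition is still unmet.
    go_to_door = Fallback(Condition(lambda s: "at_door" in s),
                          Action(lambda s: s.add("at_door")))
    return Fallback(Condition(lambda s: "door_open" in s),
                    Sequence(go_to_door, Action(lambda s: s.add("door_open"))))


def run(tree, state, max_ticks=10):
    """Tick from the root until SUCCESS; return the tick count, else None."""
    for n in range(1, max_ticks + 1):
        if tree.tick(state) == Status.SUCCESS:
            return n
    return None


if __name__ == "__main__":
    state = set()
    print(run(build_door_tree(), state), sorted(state))
```

Since every tick restarts from the root, the tree re-checks its conditions each time: if the state already satisfies the goal, no action is executed, which is the reactive behavior the abstract refers to.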

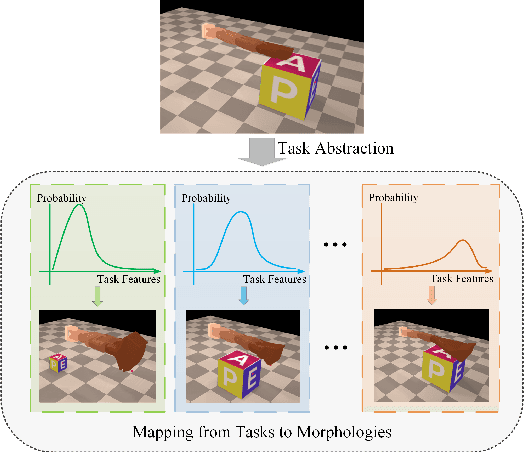

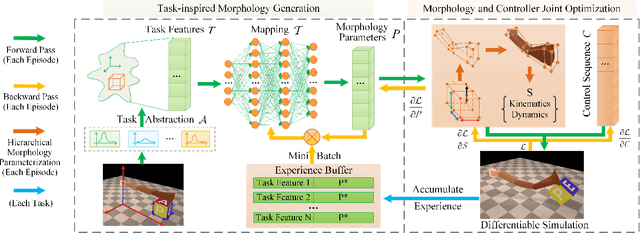

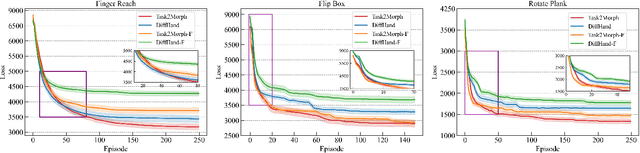

Task2Morph: Differentiable Task-inspired Framework for Contact-Aware Robot Design

Mar 28, 2024

Abstract:Optimizing the morphologies and the controllers that adapt to various tasks is a critical issue in the field of robot design, a.k.a. embodied intelligence. Previous works typically model it as a joint optimization problem and use search-based methods to find the optimal solution in the morphology space. However, they ignore the implicit knowledge of task-to-morphology mapping which can directly inspire robot design. For example, flipping heavier boxes tends to require more muscular robot arms. This paper proposes a novel and general differentiable task-inspired framework for contact-aware robot design called Task2Morph. We abstract task features highly related to task performance and use them to build a task-to-morphology mapping. Further, we embed the mapping into a differentiable robot design process, where the gradient information is leveraged for both the mapping learning and the whole optimization. The experiments are conducted on three scenarios, and the results validate that Task2Morph outperforms DiffHand, which lacks a task-inspired morphology module, in terms of efficiency and effectiveness.

* 9 pages, 10 figures, published to IROS
