Kai Zhu

EditEmoTalk: Controllable Speech-Driven 3D Facial Animation with Continuous Expression Editing

Jan 15, 2026

Abstract:Speech-driven 3D facial animation aims to generate realistic and expressive facial motions directly from audio. While recent methods achieve high-quality lip synchronization, they often rely on discrete emotion categories, limiting continuous and fine-grained emotional control. We present EditEmoTalk, a controllable speech-driven 3D facial animation framework with continuous emotion editing. The key idea is a boundary-aware semantic embedding that learns the normal directions of inter-emotion decision boundaries, enabling a continuous expression manifold for smooth emotion manipulation. Moreover, we introduce an emotional consistency loss that enforces semantic alignment between the generated motion dynamics and the target emotion embedding through a mapping network, ensuring faithful emotional expression. Extensive experiments demonstrate that EditEmoTalk achieves superior controllability, expressiveness, and generalization while maintaining accurate lip synchronization. Code and pretrained models will be released.

Anchoring Values in Temporal and Group Dimensions for Flow Matching Model Alignment

Dec 13, 2025

Abstract:Group Relative Policy Optimization (GRPO) has proven highly effective in enhancing the alignment capabilities of Large Language Models (LLMs). However, current adaptations of GRPO for flow matching-based image generation neglect a foundational conflict between its core principles and the distinct dynamics of the visual synthesis process. This mismatch leads to two key limitations: (i) Uniformly applying a sparse terminal reward across all timesteps impairs temporal credit assignment, ignoring the differing criticality of generation phases from early structure formation to late-stage tuning. (ii) Exclusive reliance on relative, intra-group rewards causes the optimization signal to fade as training converges, leading to optimization stagnation when reward diversity is entirely depleted. To address these limitations, we propose Value-Anchored Group Policy Optimization (VGPO), a framework that redefines value estimation across both temporal and group dimensions. Specifically, VGPO transforms the sparse terminal reward into dense, process-aware value estimates, enabling precise credit assignment by modeling the expected cumulative reward at each generative stage. Furthermore, VGPO replaces standard group normalization with a novel process enhanced by absolute values to maintain a stable optimization signal even as reward diversity declines. Extensive experiments on three benchmarks demonstrate that VGPO achieves state-of-the-art image quality while simultaneously improving task-specific accuracy, effectively mitigating reward hacking. Project webpage: https://yawen-shao.github.io/VGPO/.
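
Limitation (ii) can be illustrated with a minimal sketch of the standard group-relative normalization that GRPO-style methods use. This is not the VGPO method itself: the function name, the `eps` value, and the use of the population standard deviation are assumptions made for illustration only.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-6):
    """Standardize rewards within one sampled group (GRPO-style).

    Assumption: population standard deviation and a small eps for
    numerical safety; real implementations may differ in both.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Early in training: samples in a group receive different rewards,
# so the advantages carry a usable ranking signal.
diverse = group_relative_advantages([0.2, 0.5, 0.9, 0.4])

# Late in training: every sample receives the same reward, so every
# advantage is zero and the group provides no gradient signal.
collapsed = group_relative_advantages([0.8, 0.8, 0.8, 0.8])

print(diverse)
print(collapsed)
```

When all rewards in a group coincide, the purely relative signal vanishes regardless of how high the shared reward is, which is the stagnation the abstract describes.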

AliTok: Towards Sequence Modeling Alignment between Tokenizer and Autoregressive Model

Jun 05, 2025

Abstract:Autoregressive image generation aims to predict the next token based on previous ones. However, existing image tokenizers encode tokens with bidirectional dependencies during the compression process, which hinders effective modeling by autoregressive models. In this paper, we propose a novel Aligned Tokenizer (AliTok), which utilizes a causal decoder to establish unidirectional dependencies among encoded tokens, thereby aligning the token modeling approach between the tokenizer and autoregressive model. Furthermore, by incorporating prefix tokens and employing two-stage tokenizer training to enhance reconstruction consistency, AliTok achieves great reconstruction performance while being generation-friendly. On the ImageNet-256 benchmark, using a standard decoder-only autoregressive model as the generator with only 177M parameters, AliTok achieves a gFID score of 1.50 and an IS of 305.9. When the parameter count is increased to 662M, AliTok achieves a gFID score of 1.35, surpassing the state-of-the-art diffusion method with 10x faster sampling speed. The code and weights are available at https://github.com/ali-vilab/alitok.

Self-Supervised Evolution Operator Learning for High-Dimensional Dynamical Systems

May 24, 2025

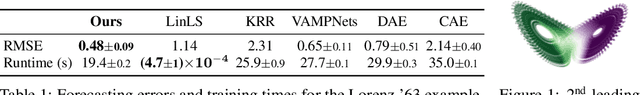

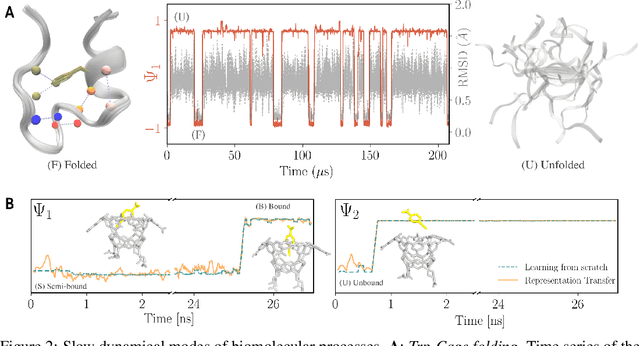

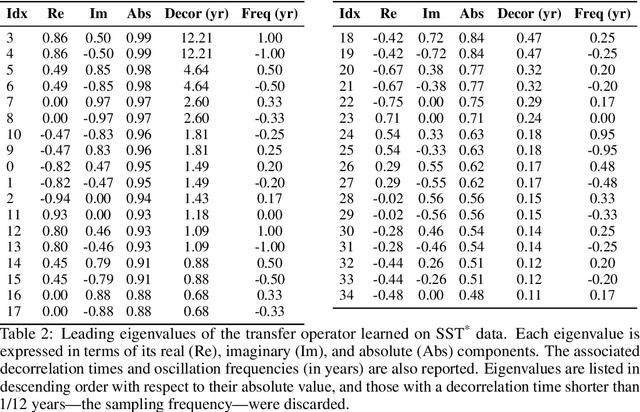

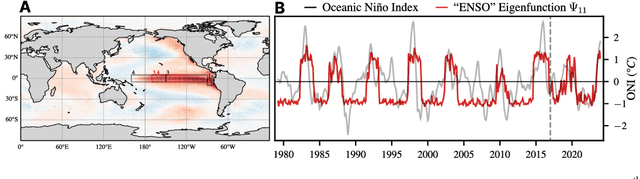

Abstract:We introduce an encoder-only approach to learn the evolution operators of large-scale non-linear dynamical systems, such as those describing complex natural phenomena. Evolution operators are particularly well-suited for analyzing systems that exhibit complex spatio-temporal patterns and have become a key analytical tool across various scientific communities. As terabyte-scale weather datasets and simulation tools capable of running millions of molecular dynamics steps per day are becoming commodities, our approach provides an effective tool to make sense of them from a data-driven perspective. The core of it lies in a remarkable connection between self-supervised representation learning methods and the recently established learning theory of evolution operators. To show the usefulness of the proposed method, we test it across multiple scientific domains: explaining the folding dynamics of small proteins, the binding process of drug-like molecules in host sites, and autonomously finding patterns in climate data. Code and data to reproduce the experiments are made available open source.

Semantically-Aware Game Image Quality Assessment

May 16, 2025

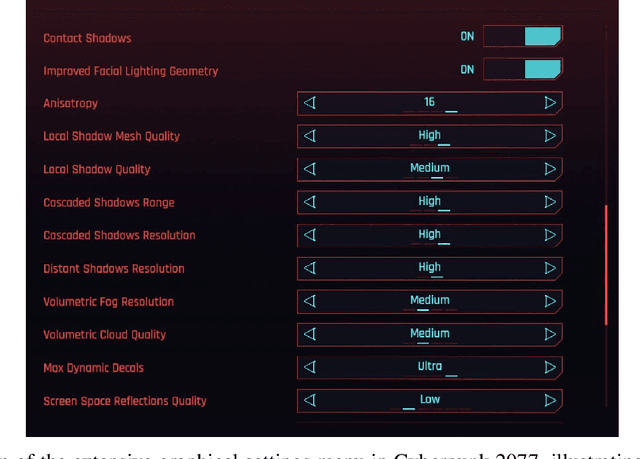

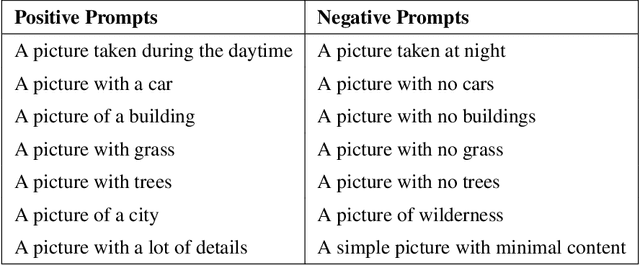

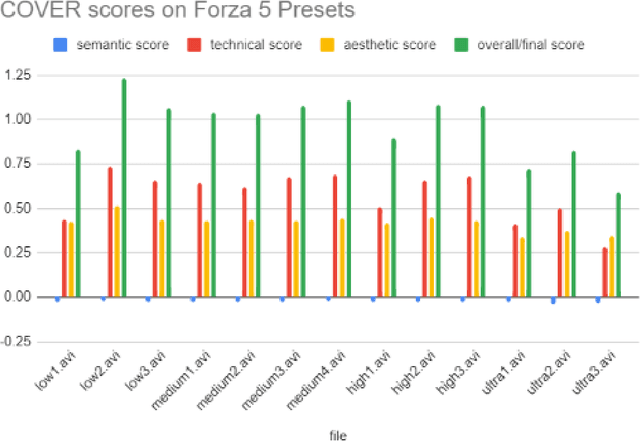

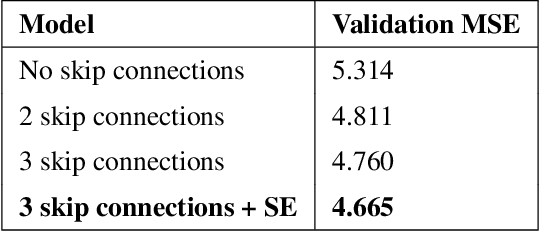

Abstract:Assessing the visual quality of video game graphics presents unique challenges due to the absence of reference images and the distinct types of distortions, such as aliasing, texture blur, and geometry level of detail (LOD) issues, which differ from those in natural images or user-generated content. Existing no-reference image and video quality assessment (NR-IQA/VQA) methods fail to generalize to gaming environments as they are primarily designed for distortions like compression artifacts. This study introduces a semantically-aware NR-IQA model tailored to gaming. The model employs a knowledge-distilled Game distortion feature extractor (GDFE) to detect and quantify game-specific distortions, while integrating semantic gating via CLIP embeddings to dynamically weight feature importance based on scene content. Training on gameplay data recorded across graphical quality presets enables the model to produce quality scores that align with human perception. Our results demonstrate that the GDFE, trained through knowledge distillation from binary classifiers, generalizes effectively to intermediate distortion levels unseen during training. Semantic gating further improves contextual relevance and reduces prediction variance. In the absence of in-domain NR-IQA baselines, our model outperforms out-of-domain methods and exhibits robust, monotonic quality trends across unseen games in the same genre. This work establishes a foundation for automated graphical quality assessment in gaming, advancing NR-IQA methods in this domain.

UCS: A Universal Model for Curvilinear Structure Segmentation

Apr 05, 2025

Abstract:Curvilinear structure segmentation (CSS) is vital in various domains, including medical imaging, landscape analysis, industrial surface inspection, and plant analysis. While existing methods achieve high performance within specific domains, their generalizability is limited. On the other hand, large-scale models such as Segment Anything Model (SAM) exhibit strong generalization but are not optimized for curvilinear structures. Existing adaptations of SAM primarily focus on general object segmentation and lack specialized design for CSS tasks. To bridge this gap, we propose the Universal Curvilinear structure Segmentation (UCS) model, which adapts SAM to CSS tasks while enhancing its generalization. UCS features a novel encoder architecture integrating a pretrained SAM encoder with two innovations: a Sparse Adapter, strategically inserted to inherit the pretrained SAM encoder's generalization capability while minimizing the number of fine-tuning parameters, and a Prompt Generation module, which leverages Fast Fourier Transform with a high-pass filter to generate curve-specific prompts. Furthermore, UCS incorporates a mask decoder that eliminates reliance on manual interaction through a dual-compression module: a Hierarchical Feature Compression module, which aggregates the outputs of the sampled encoder to enhance detail preservation, and a Guidance Feature Compression module, which extracts and compresses image-driven guidance features. Evaluated on a comprehensive multi-domain dataset, including an in-house dataset covering eight natural curvilinear structures, UCS demonstrates state-of-the-art generalization and open-set segmentation performance across medical, engineering, natural, and plant imagery, establishing a new benchmark for universal CSS.
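
The idea of combining a Fast Fourier Transform with a high-pass filter can be sketched generically as follows. This is only an illustration of frequency-domain high-pass filtering, not the UCS Prompt Generation module: the function name `fft_high_pass`, the circular mask, the `cutoff` value, and the toy image are all assumptions.

```python
import numpy as np


def fft_high_pass(image, cutoff):
    """Suppress low spatial frequencies of a 2D array.

    Frequencies within `cutoff` (in frequency bins) of the DC component
    are zeroed; thin, sharp structures survive while smooth regions fade.
    """
    h, w = image.shape
    # Move the zero-frequency component to the center of the spectrum.
    spectrum = np.fft.fftshift(np.fft.fft2(image))
    yy, xx = np.ogrid[:h, :w]
    dist = np.sqrt((yy - h // 2) ** 2 + (xx - w // 2) ** 2)
    spectrum[dist <= cutoff] = 0
    # Undo the shift and return to the spatial domain.
    return np.real(np.fft.ifft2(np.fft.ifftshift(spectrum)))


# Toy input (hypothetical): a smooth horizontal ramp plus one thin bright line.
image = np.tile(np.linspace(0.0, 1.0, 64), (64, 1))
image[:, 32] += 1.0

filtered = fft_high_pass(image, cutoff=4)
print(filtered.shape)
```

Because thin curvilinear structures concentrate energy at high spatial frequencies, a filter of this kind tends to emphasize them relative to smooth background, which is presumably why it is useful as a source of curve-specific cues.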

Exploring Reliable PPG Authentication on Smartwatches in Daily Scenarios

Mar 31, 2025

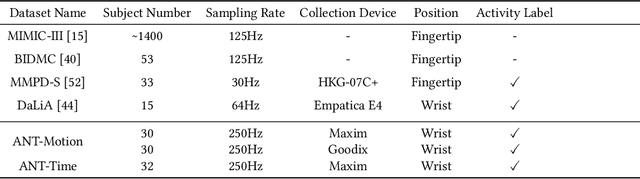

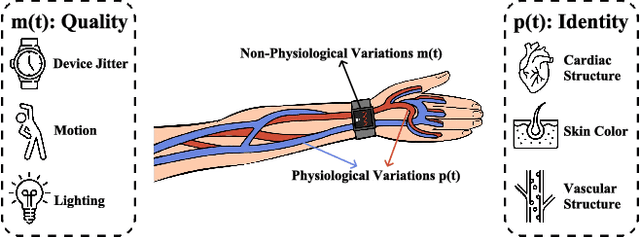

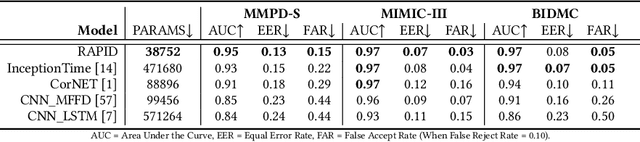

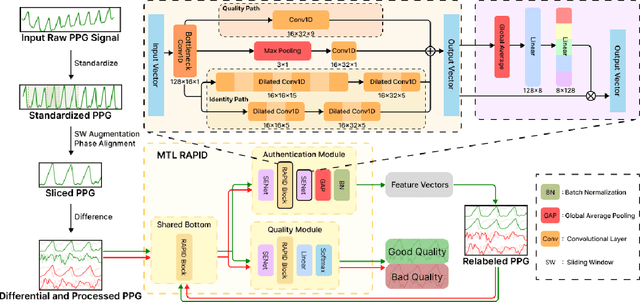

Abstract:Photoplethysmography (PPG) sensors, widely deployed in smartwatches, offer a simple and non-invasive authentication approach for daily use. However, PPG authentication faces reliability issues due to motion artifacts from physical activity and physiological variability over time. To address these challenges, we propose MTL-RAPID, an efficient and reliable PPG authentication model that employs a multitask joint training strategy, simultaneously assessing signal quality and verifying user identity. The joint optimization of these two tasks in MTL-RAPID results in a structure that outperforms models trained on individual tasks separately, achieving stronger performance with fewer parameters. In our comprehensive user studies regarding motion artifacts (N = 30), time variations (N = 32), and user preferences (N = 16), MTL-RAPID achieves a best AUC of 99.2% and an EER of 3.5%, outperforming existing baselines. We open-source our PPG authentication dataset along with the MTL-RAPID model on GitHub to facilitate future research.
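
For readers unfamiliar with the two reported metrics, the sketch below shows one common way to compute AUC and an approximate EER from verification scores. The scores are made up for illustration and are not from the paper; the function names and the threshold sweep are assumptions, not the authors' evaluation code.

```python
def auc(genuine, impostor):
    """Probability that a genuine score outranks an impostor score (ties count half)."""
    wins = sum(1.0 if g > i else 0.5 if g == i else 0.0
               for g in genuine for i in impostor)
    return wins / (len(genuine) * len(impostor))


def equal_error_rate(genuine, impostor):
    """Approximate EER by sweeping thresholds over all observed scores.

    A sample is accepted when its score is >= the threshold. The EER is
    taken where the false accept and false reject rates are closest.
    """
    best_gap, eer = float("inf"), None
    for t in sorted(set(genuine) | set(impostor)):
        far = sum(s >= t for s in impostor) / len(impostor)  # false accept rate
        frr = sum(s < t for s in genuine) / len(genuine)     # false reject rate
        gap = abs(far - frr)
        if gap < best_gap:
            best_gap, eer = gap, (far + frr) / 2
    return eer


# Hypothetical similarity scores (higher means "more likely the enrolled user").
genuine = [0.9, 0.8, 0.7, 0.4]
impostor = [0.6, 0.3, 0.2, 0.1]

print(auc(genuine, impostor))               # 0.9375
print(equal_error_rate(genuine, impostor))  # 0.25
```

A higher AUC and a lower EER both indicate better separation between genuine and impostor attempts.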

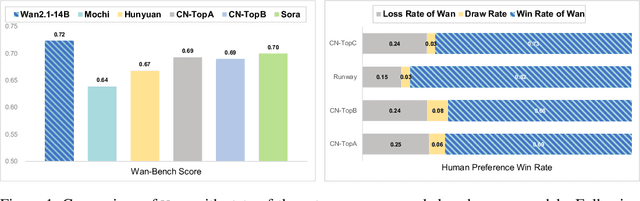

Wan: Open and Advanced Large-Scale Video Generative Models

Mar 26, 2025

Abstract:This report presents Wan, a comprehensive and open suite of video foundation models designed to push the boundaries of video generation. Built upon the mainstream diffusion transformer paradigm, Wan achieves significant advancements in generative capabilities through a series of innovations, including our novel VAE, scalable pre-training strategies, large-scale data curation, and automated evaluation metrics. These contributions collectively enhance the model's performance and versatility. Specifically, Wan is characterized by four key features: Leading Performance: The 14B model of Wan, trained on a vast dataset comprising billions of images and videos, demonstrates the scaling laws of video generation with respect to both data and model size. It consistently outperforms the existing open-source models as well as state-of-the-art commercial solutions across multiple internal and external benchmarks, demonstrating a clear and significant performance superiority. Comprehensiveness: Wan offers two capable models, i.e., 1.3B and 14B parameters, for efficiency and effectiveness respectively. It also covers multiple downstream applications, including image-to-video, instruction-guided video editing, and personal video generation, encompassing up to eight tasks. Consumer-Grade Efficiency: The 1.3B model demonstrates exceptional resource efficiency, requiring only 8.19 GB VRAM, making it compatible with a wide range of consumer-grade GPUs. Openness: We open-source the entire series of Wan, including source code and all models, with the goal of fostering the growth of the video generation community. This openness seeks to significantly expand the creative possibilities of video production in the industry and provide academia with high-quality video foundation models. All the code and models are available at https://github.com/Wan-Video/Wan2.1.

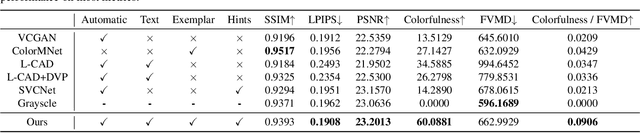

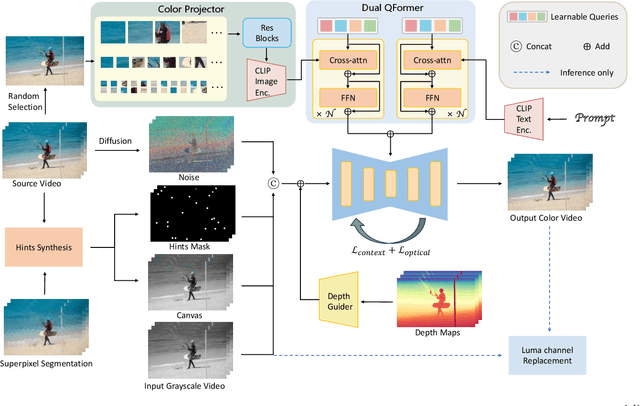

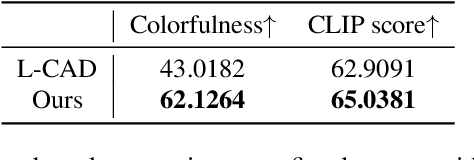

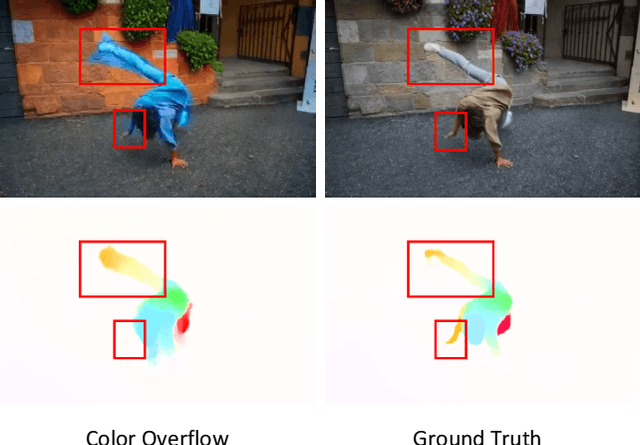

VanGogh: A Unified Multimodal Diffusion-based Framework for Video Colorization

Jan 16, 2025

Abstract:Video colorization aims to transform grayscale videos into vivid color representations while maintaining temporal consistency and structural integrity. Existing video colorization methods often suffer from color bleeding and lack comprehensive control, particularly under complex motion or diverse semantic cues. To this end, we introduce VanGogh, a unified multimodal diffusion-based framework for video colorization. VanGogh tackles these challenges using a Dual Qformer to align and fuse features from multiple modalities, complemented by a depth-guided generation process and an optical flow loss, which help reduce color overflow. Additionally, a color injection strategy and luma channel replacement are implemented to improve generalization and mitigate flickering artifacts. Thanks to this design, users can exercise both global and local control over the generation process, resulting in higher-quality colorized videos. Extensive qualitative and quantitative evaluations, and user studies, demonstrate that VanGogh achieves superior temporal consistency and color fidelity. Project page: https://becauseimbatman0.github.io/VanGogh.

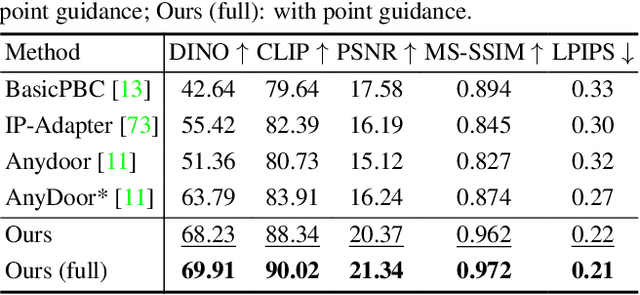

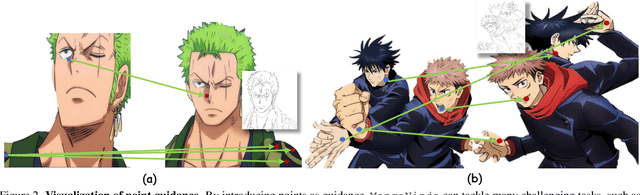

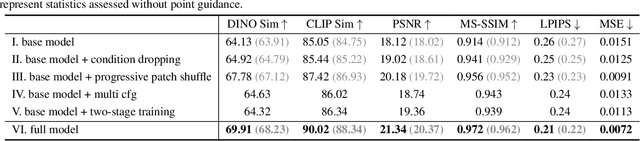

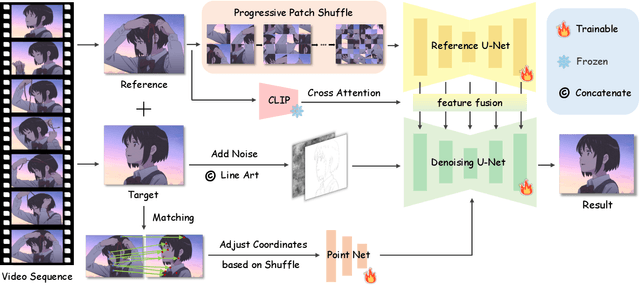

MangaNinja: Line Art Colorization with Precise Reference Following

Jan 14, 2025

Abstract:Derived from diffusion models, MangaNinja specializes in the task of reference-guided line art colorization. We incorporate two thoughtful designs to ensure precise character detail transcription, including a patch shuffling module to facilitate correspondence learning between the reference color image and the target line art, and a point-driven control scheme to enable fine-grained color matching. Experiments on a self-collected benchmark demonstrate the superiority of our model over current solutions in terms of precise colorization. We further showcase the potential of the proposed interactive point control in handling challenging cases, cross-character colorization, and multi-reference harmonization that are beyond the reach of existing algorithms.
