Jingwei Zhuo

Breaking the Hourglass Phenomenon of Residual Quantization: Enhancing the Upper Bound of Generative Retrieval

Jul 31, 2024

Abstract:Generative retrieval (GR) has emerged as a transformative paradigm in search and recommender systems, leveraging numeric-based identifier representations to enhance efficiency and generalization. Notably, methods like TIGER, which employ Residual Quantization-based Semantic Identifiers (RQ-SID), have shown significant promise in e-commerce scenarios by effectively managing item IDs. However, a critical issue, termed the "Hourglass" phenomenon, occurs in RQ-SID: intermediate codebook tokens become overly concentrated, hindering the full utilization of generative retrieval methods. This paper analyzes and addresses this problem by identifying data sparsity and long-tailed distribution as the primary causes. Through comprehensive experiments and detailed ablation studies, we analyze the impact of these factors on codebook utilization and data distribution. Our findings reveal that the "Hourglass" phenomenon substantially impacts the performance of RQ-SID in generative retrieval. We propose effective solutions to mitigate this issue, thereby significantly enhancing the effectiveness of generative retrieval in real-world e-commerce applications.
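For readers unfamiliar with residual quantization, the following is a minimal sketch of how a multi-layer semantic ID can be assigned and how per-layer token usage can be counted. It is not the paper's implementation: the toy codebooks, the function names `nearest`, `rq_encode`, and `layer_usage`, and the squared Euclidean distance are all assumptions made for illustration. In practice the codebooks would be learned rather than written by hand.

```python
from collections import Counter


def nearest(codebook, vec):
    """Index of the codeword closest to vec (squared Euclidean distance)."""
    return min(
        range(len(codebook)),
        key=lambda i: sum((c - v) ** 2 for c, v in zip(codebook[i], vec)),
    )


def rq_encode(vec, codebooks):
    """Quantize vec layer by layer; each layer encodes the previous residual."""
    residual = list(vec)
    codes = []
    for codebook in codebooks:
        idx = nearest(codebook, residual)
        codes.append(idx)
        # Subtract the chosen codeword so the next layer sees what is left.
        residual = [r - c for r, c in zip(residual, codebook[idx])]
    return codes


def layer_usage(all_codes, num_layers):
    """Count how many items use each token at every layer."""
    return [Counter(codes[layer] for codes in all_codes) for layer in range(num_layers)]


# Hypothetical hand-written codebooks: two layers, two 2-D codewords each.
codebooks = [
    [[0.0, 0.0], [10.0, 10.0]],
    [[1.0, 0.0], [0.0, 1.0]],
]
items = [[10.9, 10.1], [0.1, 0.8]]
semantic_ids = [rq_encode(item, codebooks) for item in items]
print(semantic_ids)  # [[1, 0], [0, 1]]
print(layer_usage(semantic_ids, num_layers=2))
```

Inspecting the counters returned by `layer_usage` on a real catalogue is one simple way to see whether tokens at a given layer are concentrated on a few values, which is the kind of imbalance the abstract describes.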

Generative Retrieval with Preference Optimization for E-commerce Search

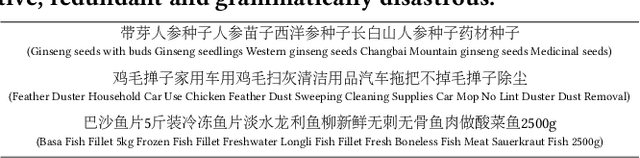

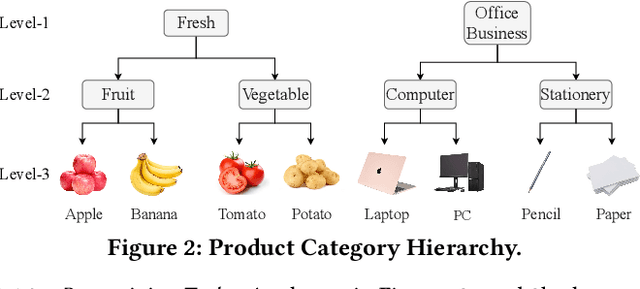

Jul 29, 2024

Abstract:Generative retrieval introduces a groundbreaking paradigm to document retrieval by directly generating the identifier of a pertinent document in response to a specific query. This paradigm has demonstrated considerable benefits and potential, particularly in representation and generalization capabilities, within the context of large language models. However, it faces significant challenges in E-commerce search scenarios, including the complexity of generating detailed item titles from brief queries, the presence of noise in item titles with weak language order, issues with long-tail queries, and the interpretability of results. To address these challenges, we have developed an innovative framework for E-commerce search, called generative retrieval with preference optimization. This framework is designed to effectively learn and align an autoregressive model with target data, subsequently generating the final item through constraint-based beam search. By employing multi-span identifiers to represent raw item titles and transforming the task of generating titles from queries into the task of generating multi-span identifiers from queries, we aim to simplify the generation process. The framework further aligns with human preferences using click data and employs a constrained search method to identify key spans for retrieving the final item, thereby enhancing result interpretability. Our extensive experiments show that this framework achieves competitive performance on a real-world dataset, and online A/B tests demonstrate the superiority and effectiveness in improving conversion gains.

Learning Multi-Stage Multi-Grained Semantic Embeddings for E-Commerce Search

Mar 20, 2023

Abstract:Retrieving relevant items that match users' queries from a billion-scale corpus forms the core of industrial e-commerce search systems, in which embedding-based retrieval (EBR) methods are prevailing. These methods adopt a two-tower framework to learn embedding vectors for query and item separately and thus leverage efficient approximate nearest neighbor (ANN) search to retrieve relevant items. However, existing EBR methods usually ignore inconsistent user behaviors in industrial multi-stage search systems, resulting in insufficient retrieval efficiency with a low commercial return. To tackle this challenge, we propose to improve EBR methods by learning Multi-level Multi-Grained Semantic Embeddings (MMSE). We propose multi-stage information mining to exploit the ordered, clicked, unclicked, and randomly sampled items in practical user behavior data, and then capture query-item similarity via a post-fusion strategy. We then propose multi-grained learning objectives that integrate the retrieval loss with global comparison ability and the ranking loss with local comparison ability to generate semantic embeddings. Both experiments on a real-world billion-scale dataset and online A/B tests verify the effectiveness of MMSE in achieving significant performance improvements on metrics such as offline recall and online conversion rate (CVR).

Pre-training Tasks for User Intent Detection and Embedding Retrieval in E-commerce Search

Aug 22, 2022

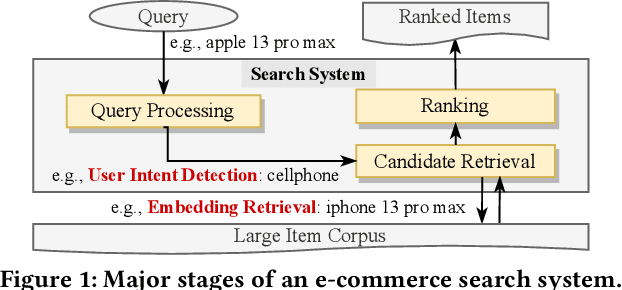

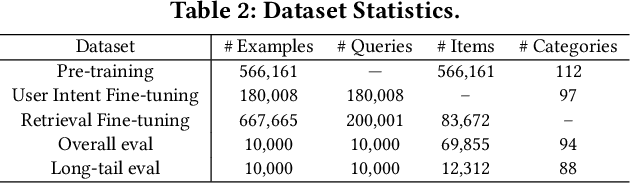

Abstract:BERT-style models pre-trained on a general corpus (e.g., Wikipedia) and fine-tuned on a task-specific corpus have recently emerged as breakthrough techniques in many NLP tasks: question answering, text classification, sequence labeling and so on. However, this technique may not always work, especially for two scenarios: a corpus that contains very different text from the general corpus Wikipedia, or a task that learns an embedding spatial distribution for a specific purpose (e.g., approximate nearest neighbor search). In this paper, to tackle the above two scenarios that we have encountered in an industrial e-commerce search system, we propose customized and novel pre-training tasks for two critical modules: user intent detection and semantic embedding retrieval. The customized pre-trained models after fine-tuning, being less than 10% of BERT-base's size in order to be feasible for cost-efficient CPU serving, significantly improve over the other baseline models: 1) a model with no pre-training and 2) a model fine-tuned from the official BERT pre-trained on a general corpus, on both offline datasets and the online system. We have open sourced our datasets for the sake of reproducibility and future work.

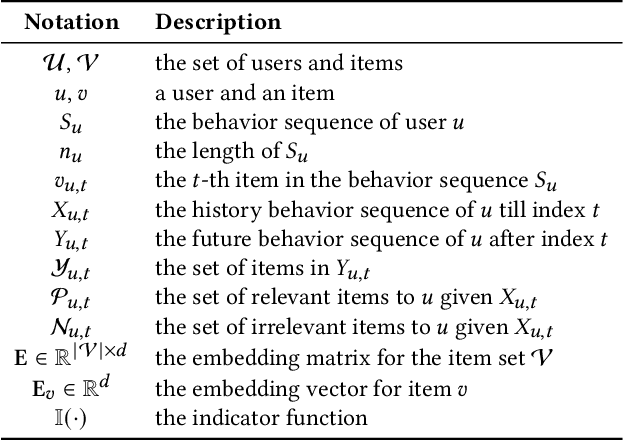

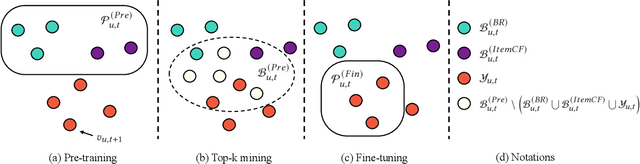

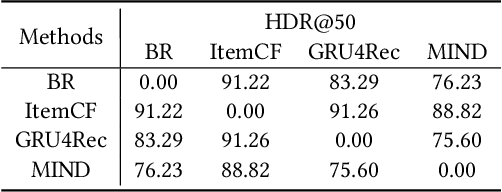

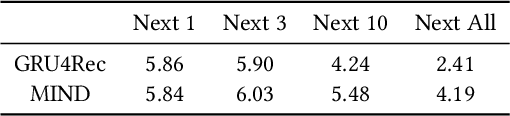

WSLRec: Weakly Supervised Learning for Neural Sequential Recommendation Models

Feb 28, 2022

Abstract:Learning the user-item relevance hidden in implicit feedback data plays an important role in modern recommender systems. Neural sequential recommendation models, which formulate learning the user-item relevance as a sequential classification problem to distinguish items in future behaviors from others based on the user's historical behaviors, have attracted a lot of interest in both industry and academia due to their substantial practical value. Though these models have achieved many practical successes, we argue that the intrinsic incompleteness and inaccuracy of user behaviors in implicit feedback data are ignored, and we conduct preliminary experiments to support our claims. Motivated by the observation that model-free methods like behavioral retargeting (BR) and item-based collaborative filtering (ItemCF) hit different parts of the user-item relevance compared to neural sequential recommendation models, we propose a novel model-agnostic training approach called WSLRec, which adopts a three-stage framework: pre-training, top-$k$ mining, and fine-tuning. WSLRec resolves the incompleteness problem by pre-training models on extra weak supervisions from model-free methods like BR and ItemCF, and resolves the inaccuracy problem by leveraging top-$k$ mining to screen out reliable user-item relevance from weak supervisions for fine-tuning. Experiments on two benchmark datasets and online A/B tests verify the rationality of our claims and demonstrate the effectiveness of WSLRec.

Learning Optimal Tree Models Under Beam Search

Jun 27, 2020

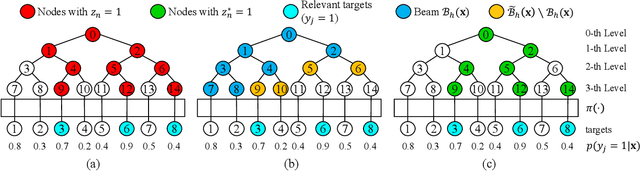

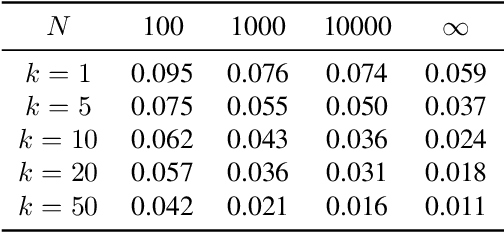

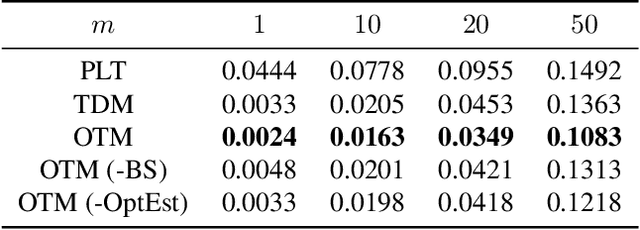

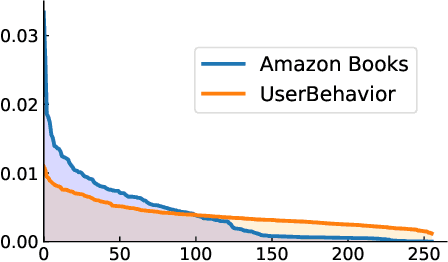

Abstract:Retrieving relevant targets from an extremely large target set under computational limits is a common challenge for information retrieval and recommendation systems. Tree models, which formulate targets as leaves of a tree with trainable node-wise scorers, have attracted a lot of interest in tackling this challenge due to their logarithmic computational complexity in both training and testing. Tree-based deep models (TDMs) and probabilistic label trees (PLTs) are two representative kinds of them. Though achieving many practical successes, existing tree models suffer from the training-testing discrepancy, where the retrieval performance deterioration caused by beam search in testing is not considered in training. This leads to an intrinsic gap between the most relevant targets and those retrieved by beam search, even with optimally trained node-wise scorers. We take a first step towards understanding and analyzing this problem theoretically, and develop the concepts of Bayes optimality under beam search and calibration under beam search as general analysis tools for this purpose. Moreover, to eliminate the discrepancy, we propose a novel algorithm for learning optimal tree models under beam search. Experiments on both synthetic and real data verify the rationality of our theoretical analysis and demonstrate the superiority of our algorithm compared to state-of-the-art methods.
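The gap between the most relevant targets and those retrieved by beam search can be illustrated with a small sketch. This is not the paper's algorithm: the tree, the node scores, and the `beam_search` function below are hypothetical, chosen only to show how a narrow beam can prune the ancestor of the highest-scoring leaf.

```python
def beam_search(children, score, root, beam_size):
    """Level-wise beam search; returns the leaves that survive pruning."""
    beam = [root]
    leaves = []
    while beam:
        candidates = []
        for node in beam:
            kids = children.get(node, [])
            if kids:
                candidates.extend(kids)
            else:
                leaves.append(node)  # node has no children, so it is a leaf
        # Keep only the beam_size highest-scoring nodes at this level.
        candidates.sort(key=score, reverse=True)
        beam = candidates[:beam_size]
    return leaves


# Hypothetical tree: the best leaf "b1" sits under the lower-scored node "b".
children = {"root": ["a", "b"], "a": ["a1", "a2"], "b": ["b1", "b2"]}
scores = {"a": 0.6, "b": 0.5, "a1": 0.3, "a2": 0.2, "b1": 0.9, "b2": 0.1}

print(beam_search(children, scores.get, "root", beam_size=1))  # ['a1']
print(beam_search(children, scores.get, "root", beam_size=2))  # ['b1', 'a1']
```

With `beam_size=1` the search commits to `a` at the first level and never scores `b1`, even though `b1` has the highest leaf score. Widening the beam recovers it here, at a higher computational cost.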

Understanding MCMC Dynamics as Flows on the Wasserstein Space

Feb 01, 2019

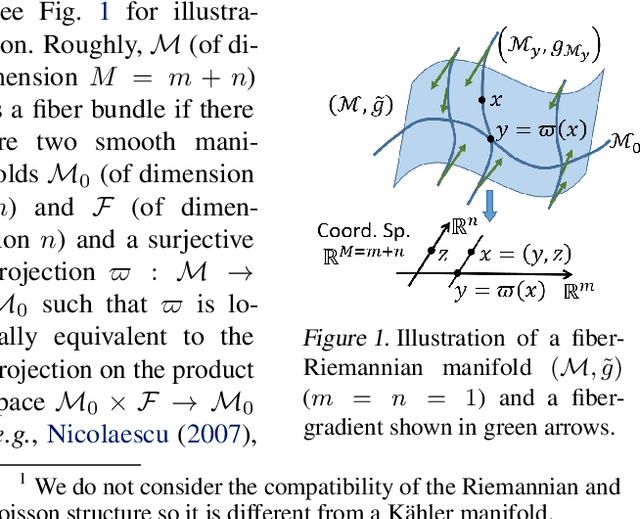

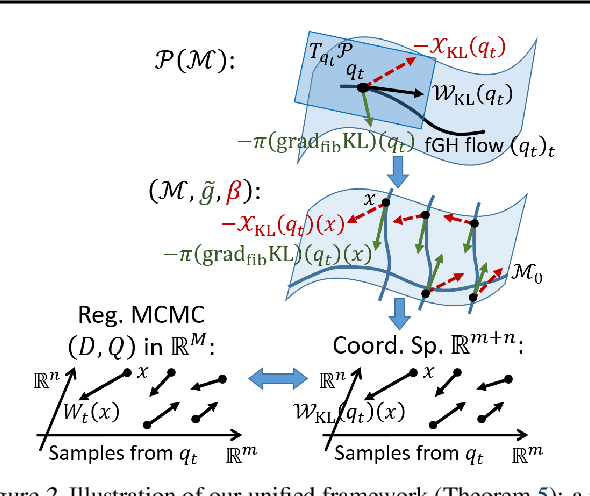

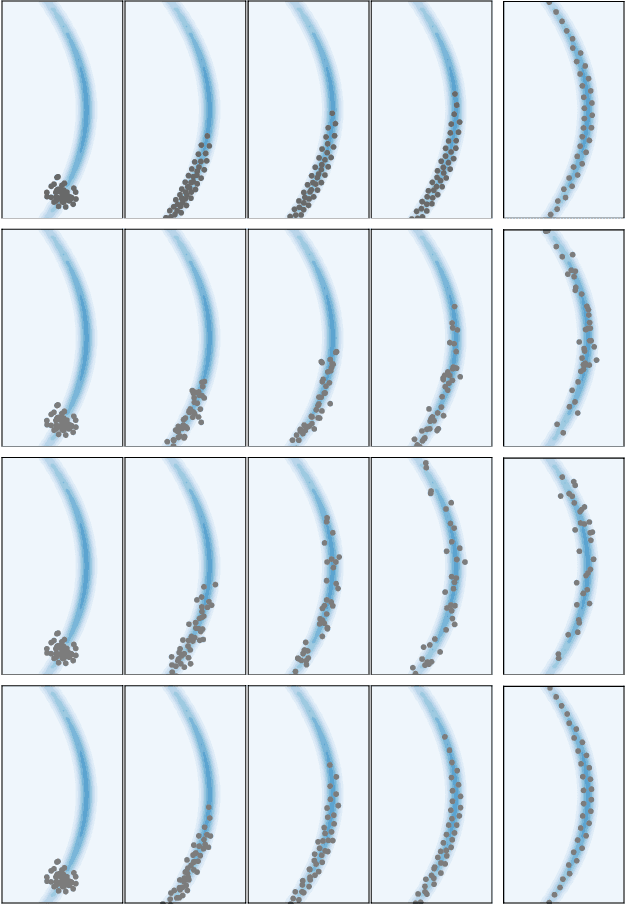

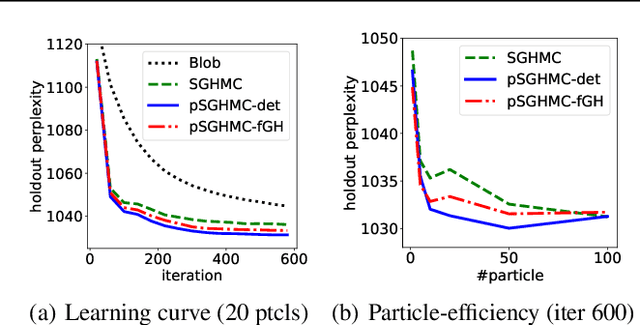

Abstract:It is known that the Langevin dynamics used in MCMC is the gradient flow of the KL divergence on the Wasserstein space, which helps convergence analysis and inspires recent particle-based variational inference methods (ParVIs). But no other MCMC dynamics has been understood in this way. In this work, by developing novel concepts, we propose a theoretical framework that recognizes a general MCMC dynamics as the fiber-gradient Hamiltonian flow on the Wasserstein space of a fiber-Riemannian Poisson manifold. The "conservation + convergence" structure of the flow gives a clear picture of the behavior of general MCMC dynamics. We analyse existing MCMC instances under the framework. The framework also enables ParVI simulation of MCMC dynamics, which enriches the ParVI family with more efficient dynamics, and also adapts ParVI advantages to MCMCs. We develop two ParVI methods for a particular MCMC dynamics and demonstrate the benefits in experiments.

Accelerated First-order Methods on the Wasserstein Space for Bayesian Inference

Jul 04, 2018

Abstract:We consider doing Bayesian inference by minimizing the KL divergence on the 2-Wasserstein space $\mathcal{P}_2$. By exploring the Riemannian structure of $\mathcal{P}_2$, we develop two inference methods by simulating the gradient flow on $\mathcal{P}_2$ via updating particles, and an acceleration method that speeds up all such particle-simulation-based inference methods. Moreover we analyze the approximation flexibility of such methods, and conceive a novel bandwidth selection method for the kernel that they use. We note that $\mathcal{P}_2$ is quite abstract and general so that our methods can make closer approximation, while it still has a rich structure that enables practical implementation. Experiments show the effectiveness of the two proposed methods and the improvement of convergence by the acceleration method.

Message Passing Stein Variational Gradient Descent

Jun 08, 2018

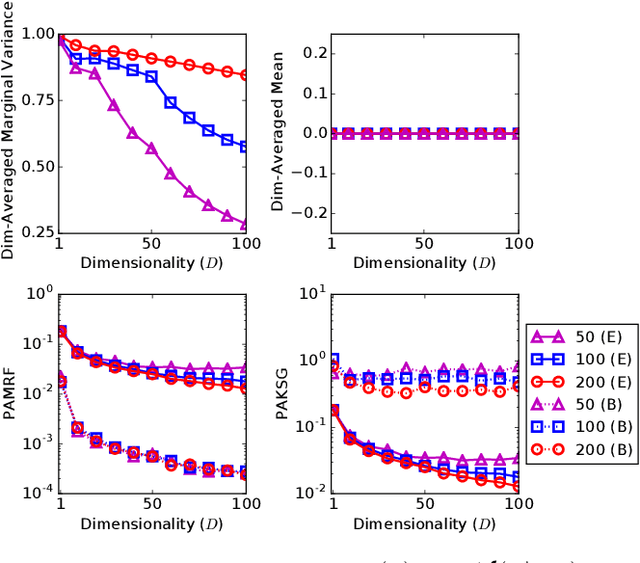

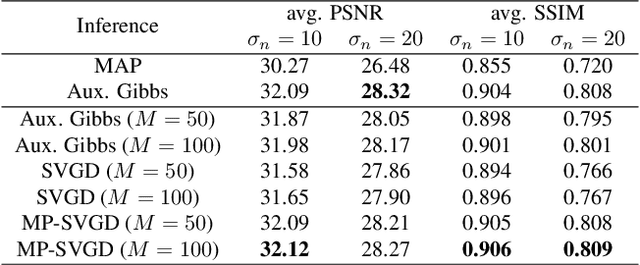

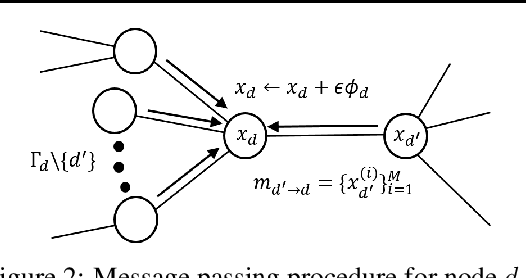

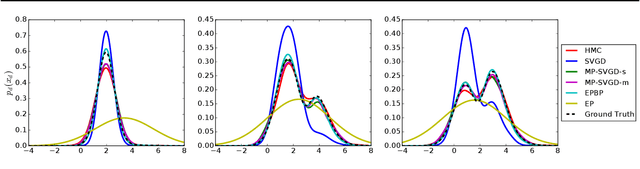

Abstract:Stein variational gradient descent (SVGD) is a recently proposed particle-based Bayesian inference method, which has attracted a lot of interest due to its remarkable approximation ability and particle efficiency compared to traditional variational inference and Markov Chain Monte Carlo methods. However, we observed that particles of SVGD tend to collapse to modes of the target distribution, and this particle degeneracy phenomenon becomes more severe with higher dimensions. Our theoretical analysis finds a negative correlation between the dimensionality and the repulsive force of SVGD, which accounts for this phenomenon. We propose Message Passing SVGD (MP-SVGD) to solve this problem. By leveraging the conditional independence structure of probabilistic graphical models (PGMs), MP-SVGD converts the original high-dimensional global inference problem into a set of local ones over the Markov blanket with lower dimensions. Experimental results show its advantages of preventing vanishing repulsive force in high-dimensional space over SVGD, and its particle efficiency and approximation flexibility over other inference methods on graphical models.
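To make the "repulsive force" concrete, here is a minimal one-dimensional sketch of the standard SVGD update, split into its driving and repulsive terms. It shows plain SVGD, not MP-SVGD. The RBF kernel with a fixed bandwidth `h`, the standard normal target, and the function names are assumptions made for illustration.

```python
import math


def rbf(x, y, h):
    """RBF kernel k(x, y) = exp(-(x - y)^2 / (2 h^2))."""
    return math.exp(-((x - y) ** 2) / (2.0 * h ** 2))


def svgd_direction(particles, grad_log_p, h):
    """Return (driving, repulsive) terms of the SVGD direction for 1-D particles."""
    n = len(particles)
    driving, repulsive = [], []
    for x_i in particles:
        # Kernel-weighted average of score functions: pulls particles to high density.
        drive = sum(rbf(x_j, x_i, h) * grad_log_p(x_j) for x_j in particles) / n
        # Average gradient of k(x_j, x_i) w.r.t. x_j: pushes x_i away from neighbours.
        repel = sum(-(x_j - x_i) / h ** 2 * rbf(x_j, x_i, h) for x_j in particles) / n
        driving.append(drive)
        repulsive.append(repel)
    return driving, repulsive


def svgd_step(particles, grad_log_p, h, step_size):
    """Move every particle along the sum of its driving and repulsive terms."""
    driving, repulsive = svgd_direction(particles, grad_log_p, h)
    return [x + step_size * (d + r) for x, d, r in zip(particles, driving, repulsive)]


# Assumed target: standard normal, whose score function is -x.
particles = [-1.0, 1.0]
driving, repulsive = svgd_direction(particles, lambda x: -x, h=1.0)
print(driving, repulsive)
```

For the two particles above, the driving term pulls both towards the mode at zero while the repulsive term pushes them apart. The abstract's observation is about how the second term behaves as the dimension grows, which this one-dimensional sketch does not reproduce.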

Learning Random Fourier Features by Hybrid Constrained Optimization

Dec 07, 2017

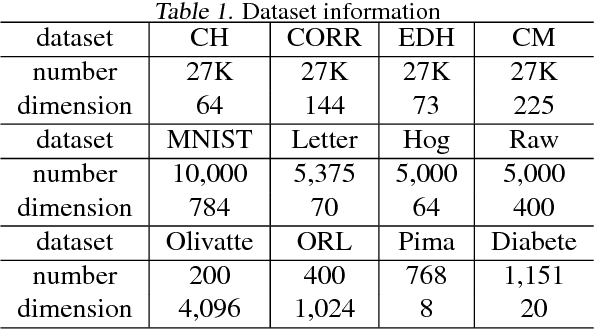

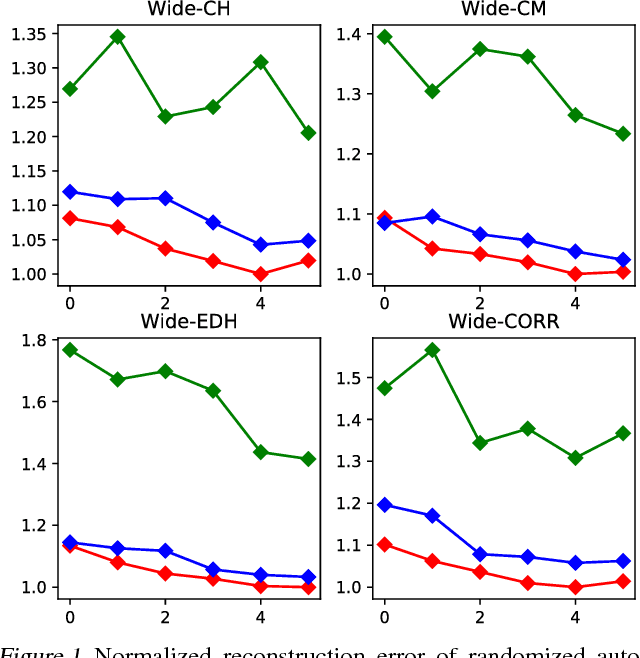

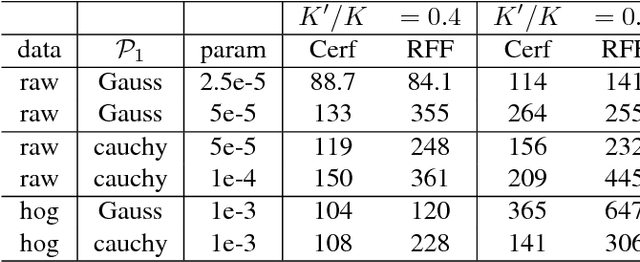

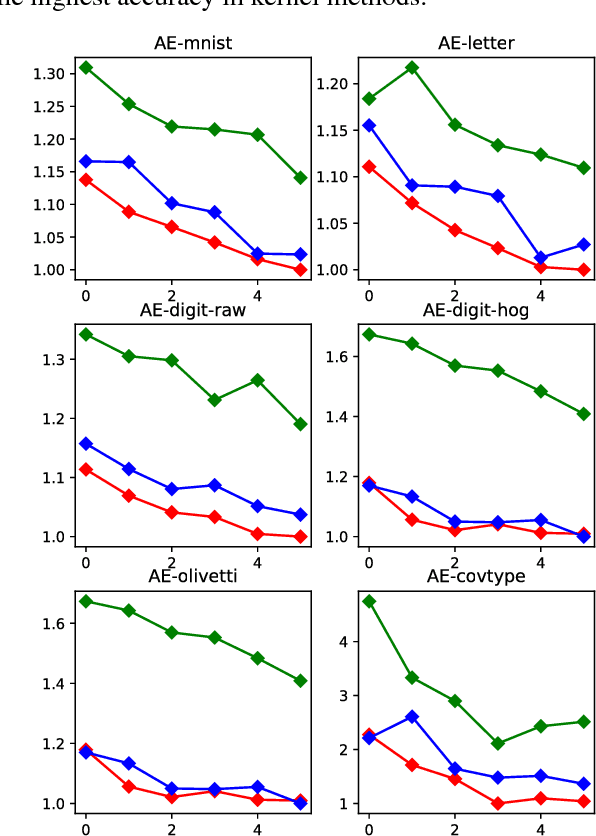

Abstract:The kernel embedding algorithm is an important component for adapting kernel methods to large datasets. Since the algorithm accounts for a major share of the computation cost in the testing phase, we propose a novel teacher-learner framework of learning computation-efficient kernel embeddings from specific data. In the framework, the high-precision embeddings (teacher) transfer the data information to the computation-efficient kernel embeddings (learner). We jointly select informative embedding functions and pursue an orthogonal transformation between two embeddings. We propose a novel approach of constrained variational expectation maximization (CVEM), where the alternating direction method of multipliers (ADMM) is applied over a nonconvex domain in the maximization step. We also propose two specific formulations based on the prevalent Random Fourier Feature (RFF), the masked and blocked versions of Computation-Efficient RFF (CERF), by imposing a random binary mask or a block structure on the transformation matrix. Through empirical studies of several applications on different real-world datasets, we demonstrate that CERF significantly improves the performance of kernel methods over RFF under certain arithmetic operation requirements, and is suitable for structured matrix multiplication in Fastfood-type algorithms.
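As background, the plain Random Fourier Feature baseline that CERF builds on can be sketched as follows. This is the standard RFF construction for an RBF kernel, not the CERF method itself. The function names, the bandwidth parameterization, and the feature count are assumptions chosen for illustration.

```python
import math
import random


def make_rff(dim, num_features, bandwidth, seed=0):
    """Build a random feature map z with z(x) . z(y) approximating the RBF kernel."""
    rng = random.Random(seed)
    # Frequencies drawn from the kernel's spectral density: N(0, 1 / bandwidth^2).
    weights = [
        [rng.gauss(0.0, 1.0 / bandwidth) for _ in range(dim)]
        for _ in range(num_features)
    ]
    offsets = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(num_features)]
    scale = math.sqrt(2.0 / num_features)

    def features(x):
        return [
            scale * math.cos(sum(w_k * x_k for w_k, x_k in zip(w, x)) + b)
            for w, b in zip(weights, offsets)
        ]

    return features


def rbf_kernel(x, y, bandwidth):
    """Exact RBF kernel exp(-||x - y||^2 / (2 bandwidth^2))."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * bandwidth ** 2))


features = make_rff(dim=2, num_features=4000, bandwidth=1.0)
x, y = [0.3, -0.2], [0.5, 0.4]
approx = sum(a * b for a, b in zip(features(x), features(y)))
print(approx, rbf_kernel(x, y, bandwidth=1.0))
```

The inner product of the two feature vectors is a Monte Carlo estimate of the kernel value, so its accuracy improves as `num_features` grows. The cost of computing `features` at test time is the quantity the abstract aims to reduce.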
