WSLRec: Weakly Supervised Learning for Neural Sequential Recommendation Models


Feb 28, 2022

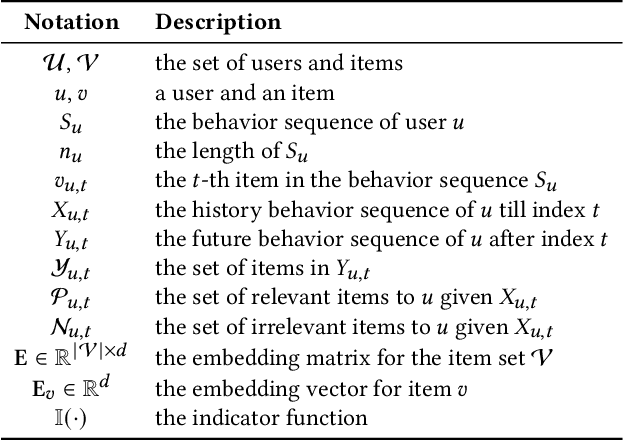

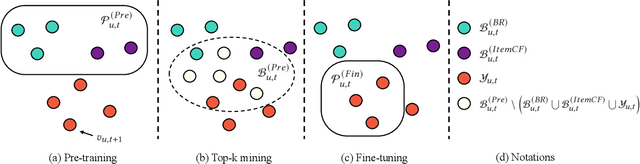

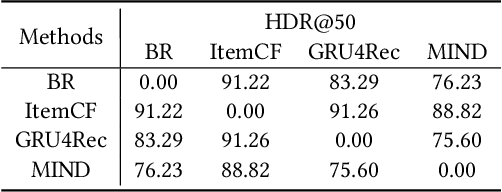

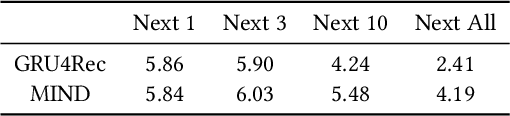

Learning the user-item relevance hidden in implicit feedback data plays an important role in modern recommender systems. Neural sequential recommendation models, which formulate learning the user-item relevance as a sequential classification problem that distinguishes items in future behaviors from other items based on the user's historical behaviors, have attracted considerable interest in both industry and academia due to their substantial practical value. Although these models have achieved many practical successes, we argue that they ignore the intrinsic incompleteness and inaccuracy of user behaviors in implicit feedback data, and we conduct preliminary experiments to support this claim. Motivated by the observation that model-free methods such as behavioral retargeting (BR) and item-based collaborative filtering (ItemCF) hit different parts of the user-item relevance than neural sequential recommendation models do, we propose a novel model-agnostic training approach called WSLRec, which adopts a three-stage framework: pre-training, top-$k$ mining, and fine-tuning. WSLRec addresses the incompleteness problem by pre-training models on extra weak supervision from model-free methods such as BR and ItemCF, and addresses the inaccuracy problem by using top-$k$ mining to select reliable user-item relevance from the weak supervision for fine-tuning. Experiments on two benchmark datasets and online A/B tests verify the rationality of our claims and demonstrate the effectiveness of WSLRec.
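The data flow between the three stages can be illustrated with a minimal Python sketch. It is not the authors' implementation, since the abstract does not specify how weak supervision is generated or how top-$k$ mining scores candidates. The sketch assumes that ItemCF weak labels come from simple co-occurrence counts and that mining keeps the $k$ weak candidates ranked highest by a pre-trained model. The names `itemcf_candidates`, `top_k_mining`, `toy_scores`, and `score_fn` are hypothetical, and the pre-training and fine-tuning steps themselves are omitted.

```python
from collections import defaultdict
from itertools import combinations


def itemcf_candidates(histories, n_neighbors=2):
    """Produce weak labels per user from item co-occurrence counts.

    Assumption: a plain co-occurrence count stands in for ItemCF here.
    """
    cooc = defaultdict(lambda: defaultdict(int))
    for items in histories.values():
        for a, b in combinations(sorted(set(items)), 2):
            cooc[a][b] += 1
            cooc[b][a] += 1

    weak = {}
    for user, items in histories.items():
        seen = set(items)
        scores = defaultdict(int)
        for item in seen:
            for other, count in cooc[item].items():
                if other not in seen:
                    scores[other] += count
        # Highest co-occurrence first; ties broken by item id for determinism.
        ranked = sorted(scores, key=lambda i: (-scores[i], i))
        weak[user] = ranked[:n_neighbors]
    return weak


def top_k_mining(weak_labels, score_fn, k=1):
    """Keep the k weak candidates per user that score_fn ranks highest.

    Assumption: score_fn(user, item) stands in for a pre-trained model's score.
    """
    mined = {}
    for user, candidates in weak_labels.items():
        ranked = sorted(candidates, key=lambda i: (-score_fn(user, i), i))
        mined[user] = ranked[:k]
    return mined


# Toy interaction histories (hypothetical data).
histories = {
    "u1": ["a", "b"],
    "u2": ["a", "b", "c"],
    "u3": ["b", "c", "d"],
}

# Stage 1 input: weak supervision on which a model would be pre-trained.
weak = itemcf_candidates(histories)

# Stage 2: hypothetical scores standing in for the pre-trained model's output.
toy_scores = {("u1", "c"): 0.9, ("u1", "d"): 0.2, ("u2", "d"): 0.6, ("u3", "a"): 0.4}
mined = top_k_mining(weak, lambda u, i: toy_scores.get((u, i), 0.0), k=1)

# Stage 3 would fine-tune the model on `mined`.
print(weak)
print(mined)
```

In this toy setup, the weak labels widen each user's set of positives beyond the observed behaviors, which corresponds to the incompleteness problem, and the mining step discards low-scoring weak candidates before fine-tuning, which corresponds to the inaccuracy problem.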
